Abstract

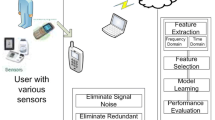

With the widespread application of mobile edge computing (MEC), MEC is serving as a bridge that narrows the gap between medical staff and patients. Relatedly, MEC is also moving toward supervising individual health in an automatic and intelligent manner. One of the main MEC technologies in healthcare monitoring systems is human activity recognition (HAR). Built-in multifunctional sensors make smartphones a ubiquitous platform for acquiring and analyzing data, which makes it possible for smartphones to perform HAR. Recognizing human activity with a smartphone’s built-in accelerometer has been well studied, but in practice, given multimodal and high-dimensional sensor data, traditional methods fail to identify complicated human activities in real time. This paper designs a smartphone inertial accelerometer-based architecture for HAR. While the participants perform typical daily activities, the smartphone collects the sensory data sequence, extracts high-efficiency features from the original data, and then obtains the user’s physical behavior data through multiple three-axis accelerometers. The data are preprocessed by denoising, normalization and segmentation to extract valuable feature vectors. In addition, a real-time human activity classification method based on a convolutional neural network (CNN) is proposed, which uses a CNN for local feature extraction. Finally, CNN, LSTM, BLSTM, MLP and SVM models are evaluated on two large public datasets, UCI and Pamap2. We explore how to train the deep learning methods and demonstrate that the proposed method outperforms the others on both datasets.
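The preprocessing chain named in the abstract (denoising, normalization, segmentation) can be illustrated with a minimal sketch. The abstract does not specify the filters or parameters, so everything below is an assumption made for illustration: a centered moving average stands in for the denoising step, per-axis z-scoring for normalization, and fixed-length windows with 50% overlap for segmentation. The function names, the window length of 128 samples, and the step of 64 are hypothetical choices, not the authors' settings.

```python
from statistics import mean, pstdev


def moving_average(signal, k=3):
    """Denoise one axis with a centered moving average of width k.

    Near the edges the window shrinks to the samples that exist.
    (Assumed filter; the paper's denoising method is not given here.)
    """
    half = k // 2
    out = []
    for i in range(len(signal)):
        lo, hi = max(0, i - half), min(len(signal), i + half + 1)
        out.append(mean(signal[lo:hi]))
    return out


def zscore(signal):
    """Normalize one axis to zero mean and unit variance.

    A constant signal has zero variance, so it is mapped to all zeros.
    """
    mu, sigma = mean(signal), pstdev(signal)
    if sigma == 0:
        return [0.0 for _ in signal]
    return [(x - mu) / sigma for x in signal]


def segment(samples, window, step):
    """Cut a sequence into fixed-length windows, advancing by `step`.

    A trailing partial window is dropped.
    """
    return [samples[s:s + window]
            for s in range(0, len(samples) - window + 1, step)]


def preprocess(samples, window=128, step=64, k=3):
    """Denoise, normalize, and segment a list of (x, y, z) samples."""
    axes = zip(*samples)  # one sequence per accelerometer axis
    cleaned = [zscore(moving_average(list(axis), k)) for axis in axes]
    merged = list(zip(*cleaned))  # back to per-sample (x, y, z) tuples
    return segment(merged, window, step)


if __name__ == "__main__":
    # Synthetic tri-axial trace, used only to exercise the pipeline.
    trace = [(float(i), float(2 * i), 1.0) for i in range(10)]
    windows = preprocess(trace, window=4, step=2)
    print(len(windows), "windows of", len(windows[0]), "samples each")
```

Each resulting window is a short tri-axial sequence that could be passed to a classifier such as a CNN. Overlapping windows are a common choice in HAR because an activity boundary that falls at the edge of one window sits nearer the middle of the next.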

Acknowledgments

This work was supported in part by the National Natural Science Foundation of China under Grant 61672454; by the Fundamental Research Funds for the Central Universities of China under Grant 2722019PY052 and by the open project from the State Key Laboratory for Novel Software Technology, Nanjing University, under Grant No. KFKT2019B17.

Ethics declarations

Conflict of interests

The authors would like to declare that there are no conflicts of interest with any third party.

About this article

Cite this article

Wan, S., Qi, L., Xu, X. et al. Deep Learning Models for Real-time Human Activity Recognition with Smartphones. Mobile Netw Appl 25, 743–755 (2020). https://doi.org/10.1007/s11036-019-01445-x

DOI: https://doi.org/10.1007/s11036-019-01445-x