Abstract

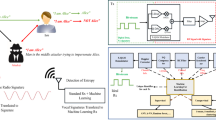

In wireless Internet of Things (IoT) systems, the multi-input multi-output (MIMO) and cognitive radio (CR) techniques are usually involved into the mobile edge computing (MEC) structure to improve the spectrum efficiency and transmission reliability. However, such a CR based MIMO IoT system will suffer from a variety of smart attacks from wireless environments, even the MEC servers in IoT systems are not secure enough and vulnerable to these attacks. In this paper, we investigate a secure communication problem in a cognitive MIMO IoT system comprising of a primary user (PU), a secondary user (SU), a smart attacker and several MEC servers. The target of our system design is to optimize utility of the SU, including its efficiency and security. The SU will choose an idle MEC server that is not occupied by the PU in the CR scenario, and allocates a proper offloading rate of its computation tasks to the server, by unloading such tasks with proper transmit power. In such a CR IoT system, the attacker will select one type of smart attacks. Then two deep reinforcement learning based resource allocation strategies are proposed to find an optimal policy of maximal utility without channel state information(CSI), one of which is the Dyna architecture and Prioritized sweeping based Edge Server Selection (DPESS) strategy, and the other is the Deep Q-network based Edge Server Selection (DESS) strategy. Specifically, the convergence speed of the DESS scheme is significantly improved due to the trained convolutional neural network (CNN) by utilizing the experience replay technique and stochastic gradient descent (SGD). In addition, the Nash equilibrium and existence conditions of the proposed two schemes are theoretically deduced for the modeled MEC game against smart attacks. Compared with the traditional Q-learning algorithm, the average utility and secrecy capacity of the SU can be improved by the proposed DPESS and DESS schemes. Numerical simulations are also presented to verify the better performance of our proposals in terms of efficiency and security, including the higher convergence speed of the DESS strategy.

Similar content being viewed by others

References

Chen X (2015) Decentralized computation offloading game for mobile cloud computing. IEEE Transaction on Parallel and Distributed Systems 26(4):974–983

Xu J, Chen L, Ren S (2015) Online learning for offloading and autoscaling in energy harvesting mobile edge computing. IEEE Transactions on Cognitive Communications and Networking 3(3):361–373

Mao Y, You C, Zhang J, Huang K, Lataief KB (2017) A survey on mobile edge computing: the communication perspective. IEEE Communications Surveys & Tutorials 19(4):2322–2358

Shirazi SN, Gouglidis A, Farshad A, Hutchison D (2017) The extended cloud: review and analysis of mobile edge computing and fog from a security and resilience perspective. IEEE Journal on Selected Areas in Communications 35(11):2586–2595

Xiao L, Xie C, Chen T, Dai H, Poor HV (2016) Mobile offloading game against smart attacks. In: Proc. IEEE International Conference on Computer Communications (INFOCOM WKSHPS), -BigSecurity, San Francisco, CA

Duan L, Gao L, Huang J (2014) Cooperative spectrum sharing: a Contract-Based approach. IEEE Transactions On Mobile Computing 13(1):174–187

Wang F, Xu J, Wang X, Cui S (2018) Joint offloading and computing optimization in wireless powered mobile-edge computing. IEEE Trans Wirel Commun 17(3):1784–1797

Li Y, Li Q, Liu J, Xiao L (2015) Mobile cloud offloading for malware detections with learning. In: Proc. IEEE international conference on computer communications, INFOCOM), -BigSecurity, Hongkong

Wan X, Sheng G, Li Y, Xiao L, Du X (2017) Reinforcement Learning Based Mobile Offloading for Cloud-based Malware Detection. In: Proc. IEEE Global Commun Conf, GLOBECOM, Singapore

Messous M, Sedjelmaci H, Houari N, Senouci S (2017) Computation offloading game for an UAV network in mobile edge computing. In: In Proc. of, IEEE International Conference on Communications (ICC). Paris, France, pp 1–6

Min M, Wan X, Xiao L, Chen Y, Xia M, Wu D, Dai H (2018) Learning-based privacy-aware offloading for healthcare IoT with energy harvesting. IEEE Internet of Things Journal, https://doi.org/10.1109/JIOT.2018.2875926

Li Y, Xiao L, Dai H, Poor HV (2017) Game Theoretic Study of Protecting MIMO Transmission Against Smart Attacks. In: Proc. of IEEE International Conference on Communications (ICC), Paris

Sutton RS, Barto AG (1998) Reinforcement learning: An introduction. MIT press

Xiao L, Chen T, Xie C, Dai H, Poor HV (2018) Mobile crowdsensing games in vehicular networks. IEEE Trans. Vehicular Technology 62(2):1535–1545

Ayatollahi H, Tapparello C, Heinzelman W (2017) Reinforcement learning in MIMO wireless networks with energy harvesting. In: Proc. of IEEE international conference on communications (ICC), Paris, France, pp 1-6

Wang Z, Liu L, Zhang H, Xiao G (2016) Fault-Tolerant Controller design for a class of nonlinear MIMO Discrete-Time systems via online reinforcement learning algorithm. IEEE transactions on systems, Man, and Cybernetics:, Systems 46(5):611– 622

Liu Y, Tang L, Tong S, Philip Chen CL, Li D (2015) Reinforcement learning Design-Based adaptive tracking control with less learning parameters for nonlinear Discrete-Time MIMO systems. IEEE Transactions on Neural Networks and Learning Systems 26(1):165–176

Xiao L, Li Y, Han G, Dai H, Poor HV (2018) A secure mobile crowdsensing game with deep reinforcement learning. IEEE Trans. Information Forensics & Security 13(1):35–47

Xiao L, Xie C, Min M, Zhuang W (2018) User-centric view of unmanned aerial vehicle transmission against smart attacks. IEEE Trans. Vehicular Technology 67(4):3420–3430

Xiao L, Xie C, Han G, Li Y, Zhuang W, Sun L (2016) Channel-based authentication game in MIMO systems. In: Proc. IEEE Global Commun. Conf. (GLOBCOM). Washington. DC

Moore AW, Atkeson CG (1993) Prioritized sweepin: Reinforecement learning with less data and less time. Mach Learn 13(1):103–130

Watkins CJ, Dayan P (1992) Q-learning. Machine Learning 8(3):279–292

Mnih V, Kavukcuoglu K, Silver D et al (2013) Playing Atari with Deep Reinforcement Learning. Computer Science

Xiao L, Xie C, Han G, Li Y, Zhuang W, Sun L (2017) Game Theoretic Study on Channel-based Authentication in MIMO Systems. IEEE Trans. Vehicular Technology 66(8):7474– 7484

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The work is supported in part of Science, Technology and Innovation Commission of Shenzhen Municipality (No. JCYJ20170816151823313), NSFC (No. U1734209, No. 61501527), States Key Project of Research and Development Plan (No. 2017YFE0121300-6), The 54th Research Institute of China Electronics Technology Group Corporation (No. B0105), Guangdong R&D Project in Key Areas (No.2019B010156004) and Guangdong Provincial Special Fund For Modern Agriculture Industry Technology Innovation Teams (No. 2019KJ122).

Appendix : A: Proof of theorem 1

Appendix : A: Proof of theorem 1

Proof

By (2), we have

Let A and function f(p) denote xL − ρe0 − νt0 and \(-xL \frac {\log _{2} \det \left (\mathbf {I}+ \frac {p}{N_{U}} {\mathbf {H}}_{UM} {\mathbf {H}}_{UM}^{T} \right )}{\rho p+ \nu }\), respectively. Thus, we can obtain the equivalent expression of the utility as

By the expression of f(p), it is clear that we can get

By (9), we can obtain

If the equality (8) holds, we have \(\frac {\partial ^{2} f(p)}{\partial p^{2}}>0\). Thus, \(\frac {\partial f(p)}{\partial p}\) increases with p monotonically and it is always negative. From (11), we get

Therefore, it can be shown that the utility of the SU increases with p. Moreover, we can have the maximum of UU(0,p), i.e., \(U(0, \overline {p})\). Therefore, from (14),

holds for \(\overline {p}\).

By the definition of (1), we have

If the equations (5)-(7) hold, we can get

Therefore, the equality

holds for g∗ = 0.

Then by (15) and (23), we can conclude that the NE of the MEC system is \((\overline {p},0)\). By (9), we obtain

which implies that the utility of the SU increases with x when NE holds. Thus, we can know the utility can arrive at the maximum when x is maximal, i.e.,

Thus, the SU can get the optimal utility if the inequalities (5)-(9) hold. □

Rights and permissions

About this article

Cite this article

Ge, S., Lu, B., Xiao, L. et al. Mobile Edge Computing Against Smart Attacks with Deep Reinforcement Learning in Cognitive MIMO IoT Systems. Mobile Netw Appl 25, 1851–1862 (2020). https://doi.org/10.1007/s11036-020-01572-w

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11036-020-01572-w