Abstract

Recommendation systems have been investigated and implemented in many ways. In particular, in the case of a collaborative filtering system, the most important issue is how to manipulate the personalized recommendation results for better user understandability and satisfaction. A collaborative filtering system predicts items of interest for users based on predictive relationships discovered between each item and others. This paper proposes a categorization for grouping associative items discovered by mining, for the purpose of improving the accuracy and performance of item-based collaborative filtering. It is possible that, if an associative item is required to be simultaneously associated with all other groups in which it occurs, the proposed method can collect associative items into relevant groups. In addition, the proposed method can result in improved predictive performance under circumstances of sparse data and cold-start initiation of collaborative filtering starting from a small number of items. In addition, this method can increase prediction accuracy and scalability because it removes the noise generated by ratings on items of dissimilar content or level of interest. The approach is empirically evaluated by comparison with k-means, average link, and robust, using the MovieLens dataset. The method was found to outperform existing methods significantly.

Similar content being viewed by others

1 Introduction

The WWW hypertext system is a very large distributed digital information space. Some estimates suggest that the World Wide Web currently includes about 200+ million pages of information and that that number doubles every 4 months. As more information becomes available, it becomes increasingly difficult to search for the information one is looking for without specialized aide. With recent advances in information technology, the amount of information that users can access has increased. Because of the development of Web services, a wide variety of information is being produced and distributed rapidly in digital form. In this excess of information, it is not straightforward for users to search and retrieve desired information in a short time period. In response, collaborative filtering systems, in which users can use and control filtered information efficiently, have appeared in e-commerce. The term applies to information filtering for building a system that can predict and recommend customized items to users [1, 13, 14]. Collaborative filtering systems use each user’s rating information on various items. The most common approach compares rating information between users, discovers similar users, and then predicts a user preference for a certain item based on similarity of preferences. Because collaborative filtering does not require any information on the content of items, it can recommend items like music or movies for which the content is difficult to analyze. This approach enables the technique to be used on any type of object. A large variety of services can be created because collaborative filtering considers only human judgments about the value of objects. These judgments are usually expressed as numerical ratings which represent preferences for objects. The importance of collaborative filtering is reflected in a growing number of research projects on this topic. One of the earliest was the Grouplens project,Footnote 1 which focused on filtering news articles from Usenet, and more recently on movie recommendations. Ringo was a collaborative filtering prototype for recommending music which led to a spinoff company, Firefly. Footnote 2 Most collaborative filtering systems collect user opinions as ratings on a numerical scale, leading to a sparse ratings matrix {user, item}. The collaborative filtering technique then uses this ratings matrix to predict new ratings. Several algorithms have been proposed to use a ratings matrix {user, item}. The similarity of preferences between a specific user and other users is computed from the correlation coefficient. Predicted preferences for a certain item are based on other users’ preferences for that item and the similarity of users to each other [1, 13, 14, 17].

The remainder of this paper is organized as follows: Section 2 briefly describes recommendation systems and item-based collaborative filtering. Section 3 illustrates in detail the categorization for grouping associative items discovered by data mining using item-based collaborative filtering for the purpose of improving accuracy and performance. In Section 4, experimental results are presented. Finally, Section 5 draws some conclusions and presents future plans to extend this work.

2 Related research

2.1 Recommendation systems

A recommendation system is basically a system that can learn about a user’s personal preferences based on the user’s characteristics and behaviors and can then provides the most appropriate content to meet the user’s needs. Recommendation systems have been applied in various industries, and their usefulness has been recognized in recent years. There are various ways of designing recommendation systems. Different machine learning algorithms [15] can be used to construct a mapping from the features of an item to a number indicating the utility of the item to the user, based on previous ratings that the user has made on other items. For example, the words in a document can be used to constitute features that can be used to predict whether a document might be interesting for the user. An alternative method is to use the ratings provided by similar users to predict ratings on items that the user has not yet rated [9]. Most recommendation systems use two techniques: content-based filtering and collaborative filtering. Content-based filtering is based on analysis of the content of the objects under consideration and its relation to the user’s preferences. For content-based filtering, it is therefore necessary that the results of content analysis and user preferences be reliably determined. One of the most successful technologies used for recommendation systems is collaborative filtering. The basic premise is that users with similar tastes tend to like similar types of items and that consequently a rating by someone similar is a good predictor for a user’s rating of an item. Collaborative filtering can improve a recommendation system by taking advantage of this information. Numerous commercial companies have used this technology to provide recommendations to users. Collaborative filtering has one advantage over content-based filtering: the ability to filter any type of item, e.g., music, movies at MovieCritic,Footnote 3 books at Amazon,Footnote 4 drawings, fashion design, and photos. Because the filtering process is based only on historical information about whether a given target user has preferred an item before, analysis of the content itself is not necessarily required [7, 12]. However, despite its success and popularity, collaborative filtering has encountered limitations with quality evaluation, particularly the sparsity problem and the cold-start problem. The sparsity problem exists when the number of ratings already obtained is very small compared to the number of ratings that need to be predicted. Effective generation of ratings from a small number of examples is therefore important. This problem is particularly severe during the startup phase of the system when the number of users is small [9]. The second problem is the so-called cold-start problem. This problem can be subdivided into cold-start items and cold-start users. A cold-start item, which is the focus of the present research, is a new item that has just joined a collaborative filtering system and has garnered few opinions. In this situation, a recommendation system is generally unable to make high-quality recommendations.

Collaborative filtering intensifies personalization from a search point of view, improves the accuracy of searches, and decreases the time spent in searching, e.g., for following up changes on a particular web site. The basic mechanism behind collaborative filtering systems is as follows: first, the neighbor group, the users who have similar preferences for the item selected by the target user, is determined using a variety of similarity computing methods. Based on the neighbor group, the predicted values of particular items, which estimate how strongly the target user is likely to prefer the item, are obtained, and then the top N items with the highest predicted values that are likely to be of interest to the target user are identified.

2.2 Item-based collaborative filtering

Item-based collaborative filtering predicts new ratings based on weighted ratings between similar items. In practice, large numbers of ratings of similar items are usually not available because of the sparsity inherent in ratings data. Item-based approaches look at the item sets that the user has already rated, calculate how similar they are to item i, and then select the k most similar items. At the same time, their associative items’ similarities are calculated. Item-based approaches such as those proposed in [13, 17] use the same idea, but with similarities of items instead of users [7, 9, 12, 14]. The item-based approaches perform a similarity calculation between items by first identifying the users who have rated both items and then applying a similarity calculation such as correlation-based similarity or linear-combination similarity to determine a similarity value. The correlation-based similarity calculation is the Pearson correlation algorithm. This similarity measures the degree to which a linear relationship exists between items, using Eq. 1.

where sim(k,l) denotes the similarity between items k and l, m is the total number of users, and R u,k , R u,l are the ratings of user u on items k and l respectively. Note that sim(k,l)∈[−1,1]. The value of sim(k,l) measures the similarity between the items’ rating vectors. A high value (close to +1) means high similarity, a value close to 0 means low correlation (not much can be deduced), and a value close to −1 means that the items are often oppositely ranked. The linear-combination similarity calculation uses the difference in value range between the item-rating matrix and the group-rating matrix calculated using Eq. 2. In the item-rating matrix, the rating values are integers, whereas the group-rating matrix contains real values ranging from 0 to 1. The obvious way to perform this calculation is to expand the range of the continuous (real) data from [0,1] to [1,5] or to reduce the range of the discrete (integer) data from [1, 5] to [0,1] and then to use the correlation-based similarity calculation as follows:

where sim(k,l) is the similarity between items k and l, c is the combination coefficient, and sim(k,l) item , sim(k,l) group are the similarities between the items as calculated from the item-rating matrix and the group-rating matrix respectively [14].

The next step is to generate the prediction. Once the set of similar items has been identified based on the similarity measure, the next step is to look at the ratings of the target items and to apply weighted-sum and regression techniques to obtain predictions. The weighted-sum method must be scaled by the sum of the similarity values to make the predicted value fall within the defined range. The regression method is similar to the weighted-sum method, but instead of using the ratings of similar items directly, it uses the ratings obtained based on a regression model.

3 Categorization for grouping associatives using data mining

Associative item mining is done using data mining techniques to perform item-based collaborative filtering. This approach also improves accuracy and performance by reducing a high-dimensional feature space using hypergraph partitioning. The feature vectors represent the associative items observed after the preprocessing of the MovieLens datasets [16].

3.1 Selection for associative item mining

The Apriori algorithm can mine association rules using data mining techniques. The process of mining association rules between items consists of two stages. In the first stage, combinations with transaction support in excess of a minimum level are identified to constitute a list of frequent items. In the second stage, the frequent-item list is used to create association rules from the database. For all frequent items (L), find subset instead of all empty sets of frequent items. For each subset (A), if the ratio of support (L) to support (A) is not less than a minimum confidence threshold, a rule of the form A → (L-A) is created. The support of this rule is support (L) [9, 13].

Hypergraph partitioning is used to divide the vertices of a hypergraph into equal partitions such that the number of hyperedges connecting vertices in different partitions is minimized. A hyperedge is a generalization of a graph where the set of edges is replaced by a set of hyperedges. Formally, a hypergraph consists of a set of vertices made up of items and a set of hyperedges representing frequently occurring sets of items, where each hyperedge is a subset of the vertex set. A hypergraph is an extension of a graph in the sense that each hypergraph edge can connect more than two vertices. A coarsening step generates a sequence of hypergraghs that are approximations of the final hypergragh. Then, the initial partitioning step finds a partitioning by exploiting global information in the hypergragh. Iterative refinement in each coarseness-reduction step improves the partitioning quality because progressively smaller subsets of vertices are moved between the partitions. In this paper, the vertex set corresponds to the distinct items in the MovieLens dataset [16], and the hyperedges correspond to the k most frequent item sets. The weights in the hypergraph partitioning process are used as the confidence level of the association rules when using the Apriori algorithm. A transaction belongs to the group that has the highest score with respect to the transaction.

3.2 Similarity coefficient for associative item mining

The grouping required for associative item mining can be performed using some of the categorization methods used in machine learning. These methods are the Jaccard coefficient, dice coefficient, cosine coefficient, and multiplicity coefficient methods [9]. The process of calculating the similarity coefficients for the associative items obtained by data mining is presented in Table 1. Let {ni} be an item that has just been added to the associative item group, which means that group j (i) needs to be changed. Let {group 1 (i), group 2 (i),…, group itemnum (i)} be groups of associative items. Each element of the matrix contains the probability of the associative items in a class compared to the associative items in all classes.

Among the methods examined, the JC, DC, and CC methods improved categorization accuracy significantly. To evaluate the performance of the similarity coefficient using the associative item mining method designed in this research, the similarity coefficient method proposed was compared with JC, DC, and CC using the similarity coefficient in the CGAIM algorithm. Table 2 represents the response time (in seconds) and the F-score of the similarity coefficient obtained using the associative item mining process. The JC method exhibited poor performance. However, the accuracy of the process for obtaining similarity coefficient JC is higher than that for CC, but lower than that for DC. The process for obtaining similarity coefficient DC is much more accurate than the other methods. In addition, the DC case exhibited relatively good performance, while the CC case exhibited poor performance. However, the accuracy of the CC similarity coefficient was far lower than the others. Therefore, in this research, it was judged appropriate to use the dice coefficient as a similarity measure for associative item mining.

3.3 Categorization for grouping associative items from data mining

The main steps of the CGAIM method are presented in Table 3. The CGAIM method assigns each item to group j (i). If a new item arrives, the CGAIM method must assign it to group j (i). To start with, group j (i) is defined to have a value of one. Then, to determine group j (i), the CGAIM method calculates the similarity coefficients between the new item and the associative items already in the group.

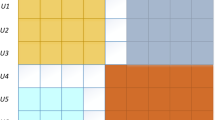

As shown in Fig. 1, there is a second case in which group j (i) of existing associative items has been newly assigned. When these associative items are first retrieved, an item’s group j (i) membership can change. In this case, before the associative item moves to another group, the similarity coefficient is calculated between the incoming item and the others in the current group, using the dice coefficient. If the similarity coefficient between the item and the others in the current group is less than the similarity threshold, the item’s group j (i) membership remains unchanged; otherwise, the item moves to the next group [8].

Figure 2 demonstrates the effect on the number of groups of varying the threshold (T). Appropriate thresholds such as 0.2, 0.5, and 0.8 for the MovieLens datasets are examined by changing one threshold while holding the others fixed. The high threshold value (T = 0.8) gives the greatest number of groups. However, when the number of rating data points is few, the effects of small group size are visible. The small threshold value (T = 0.2) yields only a small number of groups. As a result, the medium threshold value (T = 0.5) was used to distribute items into clusters.

3.4 Example of categorization for grouping associative items by data mining

An example of item categorization and grouping of associative items obtained by data mining is presented in this section. 450 items were selected from the MovieLens datasets. All items were classified into ten categories: action, classic, comedy, drama, family, horror, romance, thriller, animation, and foreign art films; an item could belong to more than one category. In this example, the target categorization for items is ten classes. Table 4 presents a selection of items categorized into the ten classes. Table 5 shows a mapping of the items using ten classes.

Item-based collaborative filtering uses preference ratings for specific items. The preference levels are represented as integers from 0 to 5, that is, 0, 1, 2, 3, 4, and 5. The preference ratings expressed as one of these six levels are shown as a mapping of the binary data. As a matter of convenience of calculation, scalability, and economy of memory, this study uses the following ratings data representation scheme: (0 → [0 0 0] || 1 → [0 0 1] || 2 → [0 1 0] || 3 → [0 1 1] || 4 → [1 0 0] || 5 → [1 1 0]). Table 5 can be reconstituted through the probability that the associative items occur in ten classes. Table 6 shows the results, which demonstrate that the numbers of associative items have been transformed into probabilities.

The CGAIM method can assign items to group j (i). Initially, group j (i) can be created by grouping associative items into groups of similar interest. To retrieve the visual group of associative items in Table 6, the CGAIM method calculates the similarity coefficients among associative items. The results of these calculations are presented in Table 7. When the similarity coefficient between items is greater than or equal to 0.5, the CGAIM method can group these items into the same group j (i). Here, the similarity threshold value used was 0.5.

In Table 7, when searching for {item-item} pairs with a similarity threshold greater than or equal to 0.5, eight pairs, {(item 2 , item 3 ), (item 2 , item 4 ), (item 3 , item 4 ), (item 4 , item 8 ), (item 5 , item 14 ), (item 9 , item 18 ), (item 14 , item 17 ), (item 5 , item 17 )}, can be found. Therefore, the CGAIM method creates three groups. The first is group 1 = {item 2 , item 3 , item 4 , item 8 }, and the others are group 2 = {item 9 , item 18 } and group 3 = {item 5 , item 14 , item 17 }. After the CGAIM method has created these groups of associative items, the associative item groups can change frequently as a result of two factors. The first factor is that a new item may enter the set of associative items. The second is that the interest level of existing associative items in a group can change frequently. In Table 8, the entry of a new {item 22 } into the set of associative items is described.

The CGAIM method can calculate the similarity between {item 22 } and its associative items in all groups. The resulting similarity values were 0.5421 for group 1 , 0.2106 for group 2 , and 0.4421 for group 3 . In addition, if there are some other groups for which the similarity is greater than or equal to 0.5, the CGAIM method assigns the new item to the group j (i) with the largest similarity. Finally, {item 22 } is categorized into group 1 .

4 Evaluations

Experiments have been performed using data from MovieLens datasets to evaluate different variants of item-based collaborative filtering. More experiments are currently underway using a subset of movie rating data collected from the MovieLens Web-based search [16] and Internet Movie Database.Footnote 5 IMDb produces the information about related film directors and movie titles using collaborative filtering. The site now has over 43,000+ users who have expressed preferences on 3,500+ different movies. The ratings for 1,612 movies were explicitly entered by 20,864 users and are represented as integers ranging from 0 to 5. To evaluate the preferences using varying numbers of training items, training item sets (50, 100, 200, and 300 training items respectively) were selected at random from the items in the MovieLens datasets, and the remaining items were placed into a test item set. Items in the training set are used only for making predictions, while the test items provide the basis for measuring prediction accuracy.

In this paper, the rank score measure (RSM) and mean absolute error (MAE) are used to gauge performance [6, 11–13]. The RSM is used to evaluate the performance of systems that recommend items from ranked lists, while the MAE is used to evaluate single-item recommendation systems. The RSM of an item in a ranked list is determined by user evaluation or user visits. The RSM is measured under the assumption that the probability of choosing an item lower in the list decreases exponentially. Suppose that each item is placed in decreasing order of value j, based on the weight of the preference. Equation 3 calculates the expected utility of a user’s RSM using the ranked list:

In Eq. 3, d is the mid-average value of the item, and α is its half-life. The half-life is the number of items in a list that have a 50/50 chance of being either reviewed or revisited. In the evaluation phase of this paper, a half-life value of 5 was used. In Eq. 4, the RSM is used to measure the accuracy of predictions about the user. If the user has evaluated or visited an item ranking highly in a ranked list, R u(max) is the maximum expected utility of the RSM:

The accuracy of the MAE, expressed as Eq. 5, is determined by the absolute value of the difference between the predicted value and the real value of the user’s evaluation. P a,j is the predicted preference, v a,j the real preference, and m a the number of items that have been evaluated by the new user:

Several well-known clustering algorithms with easily available implementations were chosen for these experiments. There are three methods for item clustering: those based on the average link hierarchical agglomeration (Alink) [3, 5]; robust clustering algorithms for categorical attributes (Robust) [4]; and k-means clustering (KM) [2, 10]. Alink is one of the classic basic clustering algorithms and was chosen to provide a base clustering case. Robust is a clustering algorithm developed at Bell Lab and is supposed to have improved performance on categorical data such as those from the MovieLens dataset. KM has been shown to be effective in producing good clustering results in many practical applications and for non-hierarchical clustering methods. This algorithm initially takes the number of elements in the population equal to the final required number of clusters. The final required number of clusters is chosen, such that the points are mutually farthest apart. Next, each element in the population is examined and assigned to one of the clusters, depending on the minimum distance. The center position is recalculated as an element is added to the cluster, and this process continues until all the elements are grouped into the final required number of clusters [2].

By comparing the proposed CGAIM method with item-based collaborative filtering system with these earlier approaches, Alink, Robust, and KM analyses of predictive accuracy measures such as RSM and MAE can be achieved. Figures 3 and 4 present the RSM and MAE as frequencies in increasing order, where the user evaluates the nth rating number. Figures 3 and 4 show the results obtained using Robust, which are unsatisfactory because of the algorithm’s inability to predict items occurring in small clusters; it also exhibits lower performance when the frequency of evaluations is low. The other method demonstrates higher performance than Robust. As a result, the KM and CGAIM methods, both when solving the cold-start problem and when dealing with noise generated by ratings data, yielded the highest accuracy rates. These results are encouraging and provide empirical evidence that use of the CGAIM method can lead to improved performance on item-based collaborative filtering. In addition, the authors believe that the CGAIM method can also improve recommendation quality for a cold-start item, which is another notable challenge for recommendation systems. Several interesting research issues remain to be addressed before the proposed algorithm can be successfully implemented in a practical environment.

To evaluate the proposed system, CGAIM was compared with Alink, KM, and Robust. T-tests were calculated for the four kinds of recommendation systems. The T-test is a method that uses the T-distribution in a statistical verification process. The T-distribution has bilateral symmetry, like the normal distribution, but the peak of the distribution moves according to the number of cases. In addition, the T-test can be used to verify a possible difference in average values between two target groups. It can also classify groups into cases of independent and dependent sampling.

The researchers generated a set of evaluation data based on the results of a survey performed both online and in person to verify the effectiveness and validity of CGAIM. By surveying 300 users, evaluation data were obtained and analyzed using CGAIM to evaluate the specific level of user satisfaction for a recommended final design style list, where the rating values were integers ranging from 1 (very negative) to 5 (very positive). Note that in the numerical range from 1 to 5, 1 means a very negative evaluation, and 5 means a very positive evaluation. The survey collected 1,200 evaluation data points over 25 days. Figure 5 illustrates the distribution of the rating data for level of satisfaction. In these rating data, the distribution of the satisfaction obtained by the proposed method showed better scores by more than 4 points compared to the distributions obtained from Alink, KM, and Robust. It is apparent that users were positively satisfied with the recommendation system using the proposed CGAIM method compared to systems using other methods.

Tables 9 and 10 show the results of the paired-sample T-test for KM, Alink, Robust, and CGAIM. As a significance level of α = 0.05, the critical value for accepting a hypothesis of difference was T < {0.4284, 0.9293, 1.2222} or T > {0.7249, 1.2173, 1.5378}. Because the values of t were presented as 7.654 > 0.7249, 14.667 > 1.2173, 17.211 > 1.5378 in the T-test of the evaluation data presented in this paper, it can be concluded that the hypothesis, “there is a statistical difference in the level of satisfaction as assessed by CGAIM and by {KM, Alink, Robust}” can be accepted. Furthermore, it was verified that the level of satisfaction assessed for CGAIM showed high values (0.5767, 1.0733, 1.3800), which result from the difference in the average value of the evaluation data compared to those for KM, Alink, or Robust. From these comparison experiments, it can be concluded that the CGAIM method for recommendation systems provides better quality than other methods in the cases of sparse data and cold-start items.

5 Conclusions

Nowadays, most recommendation systems in IT convergence utilize collaborative filtering systems, in order to recommend increasingly appropriate items. Analysis of items’ group attributes is important in a marketplace that is becoming more and more customer-oriented. Collaborative filtering based on a ratings matrix, which recommends items to users based on the preference of other users, has become a widely used approach in recent years for building personalized recommendation systems. Therefore, various efforts to overcome its drawbacks have been made to improve prediction quality. In this paper, a categorization for grouping associative items obtained by data mining is proposed to improve accuracy and performance in e-commerce systems. It is possible that if each associative item is required to be simultaneously associative with all other groups in which it occurs, the proposed method is capable of classifying all the associative items into their relevant groups. The results obtained are encouraging and provide empirical evidence that use of categorization for grouping associative items obtained from data mining can lead to improved predictive performance, particularly with sparse data and under cold-start conditions that can occur when starting collaborative filtering with only a small number of items. Moreover, this method can increase prediction accuracy and scalability because it removes the noise generated by ratings on items of dissimilar content or interest. In the future, the availability of associative rules for feature-space reduction may significantly ease the application of more powerful and computationally intensive learning methods.

Notes

Grouplens project (http://www.grouplens.org)

Firefly (http://www.firefly.com)

Moviecritic (http://www.moviecritic.com)

Amazon (http://www.amazon.com)

The Internet Movie Database is a good example of a movie recommendation system (http://www.imdb.com)

References

Connor MO, Herlocker J (1999) “Clustering items for collaborative filtering”, Proc. of the ACM SIGIR Workshop on Recommender Systems, Berkeley, CA

Ding C, He X (2004) “K-means clustering via principal component analysis”, Proc. of the 21th Int. Conf. on Machine Learning, pp 225–232

Gose E, Johnsonbaugh R, Jost S (1996) Pattern recognition and image analysis, Prentice Hall

Guha S, Rastogi R, Shim K (2000) “ROCK: a robust clustering algorithm for categorical attributes”, Elsevier Science Ltd., vol. 25, No. 5, pp 345–366

Han EH, Karypis G, Kumar V (1997) “Clustering based on association rule hypergraphs”, Proc. of the SIGMOD’97 Workshop on Research Issues in Data Mining and Knowledge Discovery, pp 9–13

Herlocker JL, Konstan JA, Terveen LG, Riedl JT (2004) Evaluating collaborative filtering recommender systems. J ACM Trans Inform Syst 22(1):5–53

Jalali M, Mustapha N, Sulaiman Md N, Mamat A (2010) WebPUM: a web-based recommendation system to predict user future movements. J Expert Syst Appl 37(Issue 9):6201–6212

Jung KY (2006) “Automatic classification for grouping designs in fashion design recommendation agent system”, LNAI 4251, Springer-Verlag, pp 310–317

Jung KY, Lee JH (2004) User preference mining through hybrid collaborative filtering and content-based filtering in recommendation System. IEICE Trans Inform Syst E87-D(12):2781–2790

Kangas S (2001) “Collaborative filtering and recommendation systems,” Technical Report TTE4-2001-35, VTT Information Technology

Kim TH, Yang SB (2005) An effective recommendation algorithm for clustering-based recommender systems. J Adv Artif Intell 3809:1150–1153

Kim HN, Jia AT, Haa IA, Joa GS (2010) Collaborative filtering based on collaborative tagging for enhancing the quality of recommendation. J Electron Commerce Res Appl 9(1):73–83

Ko SJ, Lee JH (2002) “User preference mining through collaborative filtering and content based filtering in recommender system”, Proc. of the Int. Conference on E-Commerce and Web Technologies, pp 244–253

Li Q, Kim BM (2003) “Clustering approach for hybrid recommender system”, Proc. of the IEEE/WIC International Conference on Web Intelligence, pp 33–38

Michael T (1997) Machine learning, McGraq-Hill, pp 154–200

MovieLens Data Set, http://www.cs.umn.edu/research/GroupLens/, Grouplens Research Project, 2000

Wang J, de Vries AP, Reinders MJT (2006) “A user-item relevance model for log-based collaborative filtering”, Proc. of the European Conference on Information Retrieval, pp 37–48

Acknowledgment

This research was supported by Basic Science Research Program through the National Research Foundation of Korea funded by the Ministry of Education, Science and Technology. (No. 2011–0008934)

Author information

Authors and Affiliations

Corresponding author

Additional information

This paper is significantly revised from an earlier version presented at the International Conference on Information Science and Applications 2011.

Rights and permissions

About this article

Cite this article

Chung, KY., Lee, D. & Kim, K.J. Categorization for grouping associative items using data mining in item-based collaborative filtering. Multimed Tools Appl 71, 889–904 (2014). https://doi.org/10.1007/s11042-011-0885-z

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-011-0885-z