Abstract

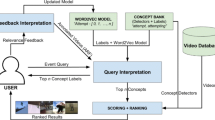

Automatic video annotation aims to bridge the semantic gap and facilitate concept-based video retrieval by detecting high-level concepts from video data. Recently, exploiting context information has emerged as an important direction in this domain. In this paper, we present a novel video annotation refinement approach that utilizes extrinsic semantic context extracted from video subtitles and intrinsic context among candidate annotation concepts. The extrinsic semantic context is formed by identifying a set of key terms from the video subtitles. The semantic similarity between these key terms and the candidate annotation concepts is then exploited to refine the initial annotation results, whereas most existing approaches utilize textual information heuristically. Similarity measures including Google distance and WordNet distance have been investigated for this refinement, which differs from approaches that derive semantic relationships among concepts from given training datasets. Visualness is also utilized to discriminate among individual terms for further refinement. In addition, the Random Walk with Restarts (RWR) technique is employed to perform a final refinement of the annotation results by exploring the inter-relationships among annotation concepts. Comprehensive experiments on the TRECVID 2005 dataset have been conducted to demonstrate the effectiveness of the proposed annotation approach and to investigate the impact of various factors.
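
The extrinsic-context step can be illustrated with Google distance, one of the similarity measures named above. The following is a minimal sketch under several assumptions:

- Similarity is the Normalized Google Distance of Cilibrasi and Vitanyi, computed from page-hit counts and mapped to a score with exp(-NGD).
- Visualness acts as a per-term weight.
- The refined score is a linear blend controlled by a blending weight `alpha`.

The function names (`ngd`, `similarity`, `refine_with_context`), the weighting scheme, `alpha`, and all hit counts are hypothetical illustrations, not the paper's implementation.

```python
import math


def ngd(fx: float, fy: float, fxy: float, n: float) -> float:
    """Normalized Google Distance (Cilibrasi and Vitanyi) from hit counts.

    fx, fy: pages containing each term; fxy: pages containing both terms;
    n: total number of indexed pages (assumed larger than fx and fy).
    """
    if fx <= 0 or fy <= 0 or fxy <= 0:
        return math.inf  # no (co-)occurrence evidence: treat as maximally distant
    lx, ly, lxy = math.log(fx), math.log(fy), math.log(fxy)
    return (max(lx, ly) - lxy) / (math.log(n) - min(lx, ly))


def similarity(fx: float, fy: float, fxy: float, n: float) -> float:
    # Assumed distance-to-similarity mapping; exp(-inf) evaluates to 0.0.
    return math.exp(-ngd(fx, fy, fxy, n))


def refine_with_context(initial, key_terms, hits, pair_hits, n, visualness, alpha=0.3):
    """Blend each concept's detector score with its subtitle-context score.

    initial: {concept: detector score}; key_terms: terms from the subtitles;
    hits: {term: hit count}; pair_hits: {frozenset({a, b}): joint hit count};
    visualness: {term: weight in [0, 1]} (hypothetical weighting scheme).
    """
    refined = {}
    for concept, score in initial.items():
        num = den = 0.0
        for term in key_terms:
            w = visualness.get(term, 0.0)
            joint = pair_hits.get(frozenset((concept, term)), 0)
            num += w * similarity(hits[concept], hits[term], joint, n)
            den += w
        # Visualness-weighted mean similarity to the subtitle key terms.
        context = num / den if den > 0 else 0.0
        refined[concept] = (1 - alpha) * score + alpha * context
    return refined


# Toy, invented hit counts for two candidate concepts and two subtitle key terms.
hits = {"car": 1000, "road": 800, "traffic": 500, "flower": 900}
pair_hits = {
    frozenset(("car", "road")): 300,
    frozenset(("car", "traffic")): 200,
    frozenset(("flower", "road")): 5,
}
refined = refine_with_context(
    {"car": 0.5, "flower": 0.5}, ["road", "traffic"],
    hits, pair_hits, 1e7, {"road": 0.8, "traffic": 0.6},
)
# "car" rises (about 0.61) and "flower" falls (about 0.45), because "car"
# co-occurs far more often with the subtitle key terms.
```

A WordNet-based distance could replace `similarity` without changing the blending logic. Only the source of the concept-to-term score differs.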

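The final RWR stage can be sketched as follows. The sketch assumes that candidate concepts form a graph whose edge weights are pairwise concept similarities, such as the Google- or WordNet-based similarities mentioned in the abstract. It uses a generic RWR update, r ← (1 − c)·W·r + c·v, where W is the column-normalized similarity matrix, v the normalized initial scores and c the restart probability. The function name `rwr`, its parameters and the default values are hypothetical. This is a generic formulation, not necessarily the exact update or settings used in the paper.

```python
def rwr(scores, sim, restart=0.3, tol=1e-9, max_iter=1000):
    """Random Walk with Restarts over a concept graph (illustrative sketch).

    scores: initial annotation scores, one per concept (positive sum required).
    sim: symmetric, non-negative concept-to-concept similarity matrix.
    restart: probability c of jumping back to the initial distribution v.
    Iterates r <- (1 - c) * W r + c * v, with W the column-normalized sim.
    """
    k = len(scores)
    total = sum(scores)
    if total <= 0:
        raise ValueError("initial scores must have a positive sum")
    v = [s / total for s in scores]
    col_sums = [sum(sim[i][j] for i in range(k)) for j in range(k)]
    # Column-normalize; an isolated concept (zero column) passes on no mass.
    w = [[sim[i][j] / col_sums[j] if col_sums[j] > 0 else 0.0
          for j in range(k)] for i in range(k)]
    r = v[:]
    for _ in range(max_iter):
        nxt = [(1 - restart) * sum(w[i][j] * r[j] for j in range(k)) + restart * v[i]
               for i in range(k)]
        if max(abs(a - b) for a, b in zip(nxt, r)) < tol:
            return nxt
        r = nxt
    return r


# Two mutually related concepts with skewed initial scores.
r = rwr([0.9, 0.1], [[0.0, 1.0], [1.0, 0.0]], restart=0.5)
# r is approximately [0.633, 0.367]: the weaker concept gains support from its neighbour.
```

A larger restart probability keeps the result closer to the initial annotation scores. A smaller one lets inter-concept relationships dominate.
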
Acknowledgements

We would like to thank the anonymous reviewers for their constructive comments. The work presented in this paper is partially supported by grants from ARC, Hong Kong Polytechnic University, the Natural Science Foundation of China (60772069), the 863 High-Tech Project (2009AA12Z111), and the Natural Science Foundation of Beijing (4102008).

Cite this article

Wang, Z., Guan, G., Qiu, Y. et al. Semantic context based refinement for news video annotation. Multimed Tools Appl 67, 607–627 (2013). https://doi.org/10.1007/s11042-012-1060-x
