Abstract

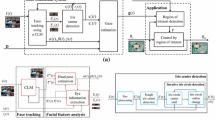

Eye detection and gaze estimation play an important role in many applications, such as eye-controlled mice in assistive systems for disabled or elderly people, the analysis of eye fixations and saccades in psychology, and iris recognition in security systems. Traditionally, eye tracking has relied on intrusive infrared-based techniques or expensive eye trackers. Increasingly, however, general-purpose applications need to analyze user behavior by tracking eye attention, and their users typically have only a consumer-grade computer, or even a laptop, with an inexpensive webcam. To keep pace with the rapid development of such applications and to reduce cost, intrusive techniques and expensive or specialized equipment are no longer practical. In this paper, we propose a real-time eye-gaze estimation system that uses a general low-resolution webcam and estimates eye gaze accurately without expensive or specialized equipment and without an intrusive detection process. An illuminance filtering approach is designed to remove the influence of lighting changes so that the eyes can be detected correctly in low-resolution webcam video frames. A hybrid model that combines a position criterion with an angle-based eye detection strategy is also derived to locate the eyes accurately and efficiently. In the eye-gaze estimation stage, we employ Fourier descriptors to represent the appearance-based features of the eyes compactly, and a support vector machine (SVM) then determines the eye-gaze position. The proposed algorithms achieve high performance with low computational complexity, and the experimental results demonstrate the feasibility of the proposed methodology.
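The abstract does not say which contour signature or normalization the Fourier descriptor uses. As a minimal sketch, assuming the eye region has already been segmented into an ordered list of boundary points, the hypothetical function `centroid_distance_fd` below computes one common variant: the discrete Fourier transform of the centroid-distance signature, keeping coefficient magnitudes normalized by the DC term so that the descriptor does not change under translation, rotation, uniform scaling, or a different starting point on the contour. It illustrates the general technique and is not the authors' implementation.

```python
import cmath
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def centroid_distance_fd(contour: Sequence[Point], num_coeffs: int = 8) -> List[float]:
    """Fourier descriptors of a closed contour's centroid-distance signature.

    Assumption: `contour` lists boundary points in order around the shape,
    sampled roughly uniformly. Returns |F_u| / |F_0| for u = 1 .. num_coeffs.
    """
    n = len(contour)
    if n < 2 * num_coeffs + 1:
        raise ValueError("need at least 2 * num_coeffs + 1 contour points")

    # Translation invariance: measure every radius from the mean boundary point.
    cx = sum(x for x, _ in contour) / n
    cy = sum(y for _, y in contour) / n
    r = [math.hypot(x - cx, y - cy) for x, y in contour]

    def dft(u: int) -> complex:
        # Naive evaluation of one DFT coefficient of the real signal r.
        return sum(r[k] * cmath.exp(-2j * math.pi * u * k / n) for k in range(n))

    f0 = abs(dft(0))  # n times the mean radius
    if f0 == 0.0:
        raise ValueError("degenerate contour: all points coincide")

    # Rotating the shape leaves r unchanged; changing the starting point only
    # shifts r circularly, which alters phases but not magnitudes. Dividing
    # by |F_0| removes the effect of uniform scaling.
    return [abs(dft(u)) / f0 for u in range(1, num_coeffs + 1)]


if __name__ == "__main__":
    # Hypothetical eye-like contour: an ellipse with semi-axes 3 and 1.
    n_pts = 64
    ellipse = [
        (3 * math.cos(2 * math.pi * k / n_pts), math.sin(2 * math.pi * k / n_pts))
        for k in range(n_pts)
    ]
    print([round(v, 4) for v in centroid_distance_fd(ellipse)])
```

Each returned vector is a compact, fixed-length feature of the kind the abstract says is passed to the SVM for the gaze decision. The choice of 8 coefficients is arbitrary here, and a working pipeline would also need to extract and resample the eye contour from each webcam frame, steps the abstract does not detail.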

Cite this article

Lin, YT., Lin, RY., Lin, YC. et al. Real-time eye-gaze estimation using a low-resolution webcam. Multimed Tools Appl 65, 543–568 (2013). https://doi.org/10.1007/s11042-012-1202-1