Abstract

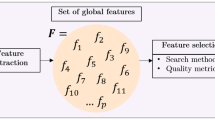

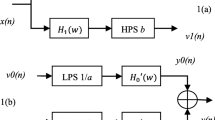

Multimedia database retrieval is growing rapidly, and its popularity in online retrieval systems is increasing accordingly. Large datasets pose major challenges for searching, retrieving, and organizing music content. Therefore, a robust automatic music-genre classification method is needed to organize this music data into different classes based on the information it carries. Two fundamental components must be considered for genre classification: audio feature extraction and classifier design. In this paper, we propose diverse audio features to characterize music content precisely. The feature sets belong to four groups: dynamic, rhythmic, spectral, and harmonic. For each feature, five statistical parameters are computed as representatives, including moments up to the fourth order as well as covariance components. Insignificant representative parameters are then filtered out with the minimum-redundancy maximum-relevance (mRMR) algorithm, which scores all feature attributes and ranks them; only high-scoring audio features are used for genre classification. Moreover, this lets us identify which audio features, and which statistical parameters derived from them, are important for genre classification. Among them, the statistical parameters of the mel-frequency cepstral coefficients (MFCCs), such as covariance components and variance, are selected more frequently than the attributes of the other feature groups. Unlike principal component analysis (PCA) and linear discriminant analysis (LDA), this approach does not transform the original features. Other feature reduction methods, such as locality-preserving projection (LPP) and non-negative matrix factorization (NMF), are also considered. The performance of the proposed system is measured on the features retained from the feature pool by each of these reduction techniques.
The results indicate that the overall classification performance is competitive with existing state-of-the-art frame-based methods.
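
As a rough illustration of how frame-level features can be collapsed into clip-level statistical representatives, the sketch below computes, for each feature track, the mean, variance, skewness, and kurtosis (moments up to fourth order), followed by the pairwise covariances between tracks. It assumes that the five parameters are exactly these, that population (1/n) estimators are used, that skewness and kurtosis are standardized (non-excess kurtosis), and that "covariance components" means the off-diagonal entries of the feature covariance matrix; the paper's exact definitions may differ, and the function names are hypothetical.

```python
from typing import List, Sequence


def moments(track: Sequence[float]) -> List[float]:
    """Mean, variance, skewness and kurtosis of one frame-level feature track.

    Assumption: population (1/n) estimators; skewness and kurtosis are the
    third and fourth central moments standardized by powers of the variance.
    """
    n = len(track)
    mean = sum(track) / n
    m2 = sum((v - mean) ** 2 for v in track) / n
    m3 = sum((v - mean) ** 3 for v in track) / n
    m4 = sum((v - mean) ** 4 for v in track) / n
    if m2 == 0.0:
        # A constant track has no spread, so shape statistics are undefined; report 0.
        return [mean, 0.0, 0.0, 0.0]
    return [mean, m2, m3 / m2 ** 1.5, m4 / m2 ** 2]


def summarize_frames(frames: Sequence[Sequence[float]]) -> List[float]:
    """Collapse a (num_frames x num_features) matrix into one clip-level vector.

    Layout (assumed): 4 moments per feature, then the off-diagonal
    covariances cov(i, j) for i < j, in row-major order.
    """
    n = len(frames)
    num_features = len(frames[0])
    columns = [[row[j] for row in frames] for j in range(num_features)]
    vector: List[float] = []
    for col in columns:
        vector.extend(moments(col))
    means = [sum(col) / n for col in columns]
    for i in range(num_features):
        for j in range(i + 1, num_features):
            cov = sum(
                (columns[i][t] - means[i]) * (columns[j][t] - means[j])
                for t in range(n)
            ) / n
            vector.append(cov)
    return vector
```

For example, `summarize_frames([[1.0, 2.0], [2.0, 4.0], [3.0, 6.0]])` returns a 9-element vector: four moments for each of the two tracks plus their single covariance. With F features the vector has 4F + F(F - 1)/2 entries, which is why a selection step over the resulting feature pool is useful.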

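The mRMR ranking step can be sketched as a greedy loop. This sketch assumes the difference form of the criterion (relevance to the genre label minus mean redundancy with already-selected features), a plug-in mutual information estimate on discrete values, and equal-width binning through a hypothetical `discretize` helper for continuous statistics. The paper's discretization scheme and scoring variant are not stated here, and all names are illustrative.

```python
import math
from collections import Counter
from typing import Hashable, List, Sequence


def mutual_information(x: Sequence[Hashable], y: Sequence[Hashable]) -> float:
    """Plug-in estimate of I(X;Y) in nats for two equal-length discrete sequences."""
    n = len(x)
    px, py, pxy = Counter(x), Counter(y), Counter(zip(x, y))
    mi = 0.0
    for (a, b), count in pxy.items():
        # p(a,b) * log(p(a,b) / (p(a) * p(b))), written with raw counts.
        mi += (count / n) * math.log(count * n / (px[a] * py[b]))
    return mi


def discretize(values: Sequence[float], bins: int = 3) -> List[int]:
    """Hypothetical helper: equal-width binning so continuous statistics can feed the MI estimate."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0] * len(values)
    width = (hi - lo) / bins
    return [min(int((v - lo) / width), bins - 1) for v in values]


def mrmr_rank(
    features: Sequence[Sequence[Hashable]], labels: Sequence[Hashable], k: int
) -> List[int]:
    """Greedy mRMR (difference form): return the indices of the k top-ranked features.

    features[j] holds the discretized values of feature j over all clips.
    Score = I(f_j; labels) - mean over selected s of I(f_j; f_s).
    """
    relevance = [mutual_information(f, labels) for f in features]
    selected: List[int] = []
    remaining = set(range(len(features)))
    while remaining and len(selected) < k:
        best, best_score = -1, -math.inf
        for j in sorted(remaining):  # sorted + strict ">" so ties go to the lower index
            redundancy = 0.0
            if selected:
                redundancy = sum(
                    mutual_information(features[j], features[s]) for s in selected
                ) / len(selected)
            score = relevance[j] - redundancy
            if score > best_score:
                best, best_score = j, score
        selected.append(best)
        remaining.remove(best)
    return selected
```

If two features are exact copies, the second copy's redundancy cancels its relevance, so an equally relevant but non-redundant feature is ranked ahead of it. Because the returned indices point at original feature columns rather than projected components (as PCA or LDA would produce), each selected statistic can be traced back to a named audio feature.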

Acknowledgments

This paper was supported by research funds of Chonbuk National University in 2013 and also partially supported by the National Research Foundation of Korea grant funded by the Korean government (2011-0022152).

About this article

Cite this article

Baniya, B.K., Lee, J. Importance of audio feature reduction in automatic music genre classification. Multimed Tools Appl 75, 3013–3026 (2016). https://doi.org/10.1007/s11042-014-2418-z

DOI: https://doi.org/10.1007/s11042-014-2418-z