Abstract

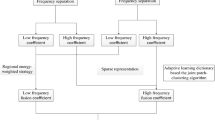

Sparse coding theory combined with a sliding-window technique can be used to fuse multi-exposure images effectively. However, when the source images are large, this process is time-consuming. To solve this problem, we propose a method that uses low-frequency sub-images of the source images as the input to the sparse coding fusion framework. These low-frequency sub-images, which are far smaller than the full images, provide a coarse representation of the originals. As for the multi-scale decomposition itself, the high redundancy ratio of some transforms limits their applicability to image fusion, especially multi-exposure image fusion, which usually involves more than two source images. We therefore employ a novel shiftable complex directional pyramid, which offers shift-invariance and a low redundancy ratio, to obtain the low- and high-frequency sub-images. For the high-frequency sub-images, we introduce a novel fusion rule based on the entropy of each segmented block, which allows more details of the source images to be preserved. Experiments show that our method attains results that are comparable to or better than those of existing methods.
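The abstract does not spell out the entropy-based rule for the high-frequency sub-images, so the following is only a minimal sketch of one plausible reading: split each high-frequency sub-image into blocks and, for every block position, keep the block whose coefficient magnitudes have the highest Shannon entropy. The function names `block_entropy` and `fuse_high_frequency`, the 16-bin histogram, the per-block normalization, and the default block size are all assumptions made for illustration and are not taken from the paper.

```python
import math


def block_entropy(block, bins=16):
    """Shannon entropy (in bits) of the histogram of coefficient magnitudes.

    Assumption: magnitudes are normalized by the block's own maximum and
    counted in `bins` equal-width bins.
    """
    values = [abs(v) for row in block for v in row]
    top = max(values)
    if top == 0:
        return 0.0  # an all-zero block carries no detail
    counts = [0] * bins
    for v in values:
        counts[min(int(v / top * bins), bins - 1)] += 1
    n = len(values)
    return -sum(c / n * math.log2(c / n) for c in counts if c)


def fuse_high_frequency(subbands, block_size=8):
    """Fuse same-sized high-frequency sub-images block by block.

    `subbands` is a list of 2-D lists, one per source image. For each block
    position, the block with the highest entropy is copied to the output.
    """
    rows, cols = len(subbands[0]), len(subbands[0][0])
    fused = [[0.0] * cols for _ in range(rows)]
    for r in range(0, rows, block_size):
        for c in range(0, cols, block_size):
            # Cut the same block out of every source sub-image.
            candidates = [
                [row[c:c + block_size] for row in band[r:r + block_size]]
                for band in subbands
            ]
            best = max(candidates, key=block_entropy)
            for i, row in enumerate(best):
                fused[r + i][c:c + len(row)] = row
    return fused
```

With two sources, a flat (all-zero) sub-image and a textured one, this sketch copies every block from the textured source, which matches the stated intent of preserving more detail. A real implementation would operate on the complex directional sub-bands produced by the pyramid and would likely use a different block segmentation.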

Acknowledgments

This work is partly supported by the National Natural Science Foundation of China (Nos. 61202245, 61271370, 61271369, 61372148, 91420202, and 61370138), the Project of Construction of Innovative Teams and Teacher Career Development for Universities and Colleges under Beijing Municipality (CIT&TCD20130513), the importation and development of High-Caliber Talents Project of Beijing Municipal Institutions (CIT&TCD20130320), the Beijing Education Commission Science and Technology Project, and Research on Image Recognition of Seal in Chinese Painting and Calligraphy on Multi-features Fusion (KM201311417015).

About this article

Cite this article

Wang, J., Wang, W., Li, B. et al. Exposure fusion via sparse representation and shiftable complex directional pyramid transform. Multimed Tools Appl 76, 15755–15775 (2017). https://doi.org/10.1007/s11042-016-3868-2

DOI: https://doi.org/10.1007/s11042-016-3868-2