Abstract

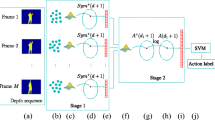

Feature extraction and encoding are two of the most crucial steps in an action recognition system. To build a powerful action recognition pipeline, both steps must be efficient and, at the same time, provide reliable performance. This work proposes a new approach for feature extraction and encoding that allows an action recognition system to process video at a real-time frame rate. Motion is an important source of information in video, and the common approach to extracting it is to compute the optical flow. However, optical flow estimation is computationally very demanding; in many cases it is the most costly processing step in the overall pipeline of the target video analysis application. In this work we propose an efficient approach to capture the motion information within the video. Our proposed descriptor, Histograms of Motion Gradients (HMG), is based on a simple temporal and spatial derivation that captures the changes between two consecutive frames. For the encoding step, a widely adopted method is the Vector of Locally Aggregated Descriptors (VLAD), which is efficient but considers only the differences between local descriptors and their centroids. In this work we propose Shape Difference VLAD (SD-VLAD), an encoding method that brings complementary information by using the shape information of the descriptors within the encoding process. We validated our proposed action recognition pipeline on three challenging datasets, UCF50, UCF101 and HMDB51, and we also propose a real-time framework for action recognition.
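The temporal-then-spatial derivation behind HMG can be illustrated with a short NumPy sketch. It is a minimal illustration under stated assumptions, not the authors' implementation: the function name `motion_gradient_histograms`, the 8×8-pixel cells, the 8 orientation bins, hard binning and per-cell L2 normalisation are all choices made here for illustration. The temporal derivative of two consecutive grayscale frames is differentiated spatially, and the resulting gradient orientations are accumulated into magnitude-weighted histograms, in the spirit of HOG applied to the frame difference.

```python
import numpy as np


def motion_gradient_histograms(prev_frame, next_frame, cell=8, n_bins=8):
    """HMG-style sketch for one pair of consecutive grayscale frames.

    Assumptions (illustrative, not from the paper): cell size, number of
    orientation bins, hard binning and per-cell L2 normalisation.
    Returns an array of shape (rows, cols, n_bins).
    """
    prev = prev_frame.astype(np.float64)
    nxt = next_frame.astype(np.float64)
    # Temporal derivative: the change between two consecutive frames.
    dt = nxt - prev
    # Spatial derivatives of the temporal derivative (central differences);
    # np.gradient returns the derivative along axis 0 (y) first, then axis 1 (x).
    gy, gx = np.gradient(dt)
    mag = np.hypot(gx, gy)
    # Orientation in [0, 2*pi), quantised into n_bins equal sectors.
    ang = np.mod(np.arctan2(gy, gx), 2 * np.pi)
    bins = np.minimum((ang / (2 * np.pi) * n_bins).astype(int), n_bins - 1)

    h, w = dt.shape
    rows, cols = h // cell, w // cell
    hist = np.zeros((rows, cols, n_bins))
    for r in range(rows):
        for c in range(cols):
            sl = (slice(r * cell, (r + 1) * cell), slice(c * cell, (c + 1) * cell))
            # Magnitude-weighted orientation histogram for this cell.
            hist[r, c] = np.bincount(bins[sl].ravel(),
                                     weights=mag[sl].ravel(),
                                     minlength=n_bins)
    # L2-normalise each cell histogram; the epsilon keeps static cells at zero.
    norms = np.linalg.norm(hist, axis=2, keepdims=True)
    return hist / (norms + 1e-12)
```

For two 32×48 frames this yields a 4×6 grid of 8-bin histograms, and two identical frames give an all-zero descriptor because nothing moved. Only a frame difference and two finite differences are computed per pixel, with no iterative optimisation of the kind most optical flow estimators require.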
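The contrast between plain VLAD and an encoding that also uses descriptor shape can be sketched in NumPy. Standard VLAD accumulates, for each codebook centroid c_k, the residuals x - c_k of the descriptors assigned to it. The `shape_difference` term below is a hypothetical stand-in assumed for illustration, not the paper's exact formulation: for each centroid it takes the per-dimension standard deviation of the assigned descriptors minus a reference standard deviation `ref_std[k]` (for example, one estimated on training data). The hard nearest-centroid assignment, the signed square-root plus L2 normalisation, and the concatenation in `sd_vlad_sketch` are likewise assumptions; the codebook itself (typically learned with k-means) is taken as given.

```python
import numpy as np


def assign(descriptors, centroids):
    """Hard-assign each descriptor to its nearest centroid (squared L2)."""
    d2 = ((descriptors[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)


def power_l2(v, alpha=0.5):
    """Signed power normalisation followed by global L2 normalisation."""
    v = np.sign(v) * np.abs(v) ** alpha
    n = np.linalg.norm(v)
    return v / n if n > 0 else v


def vlad(descriptors, centroids):
    """Standard VLAD: per-centroid sum of residuals x - c_k, concatenated."""
    labels = assign(descriptors, centroids)
    v = np.zeros_like(centroids, dtype=np.float64)
    for k in range(len(centroids)):
        members = descriptors[labels == k]
        if len(members):
            v[k] = (members - centroids[k]).sum(axis=0)
    return power_l2(v.ravel())


def shape_difference(descriptors, centroids, ref_std):
    """HYPOTHETICAL shape term: std of the descriptors assigned to centroid k
    minus a reference std ref_std[k]; empty clusters contribute zeros."""
    labels = assign(descriptors, centroids)
    s = np.zeros_like(centroids, dtype=np.float64)
    for k in range(len(centroids)):
        members = descriptors[labels == k]
        if len(members):
            s[k] = members.std(axis=0) - ref_std[k]
    return power_l2(s.ravel())


def sd_vlad_sketch(descriptors, centroids, ref_std):
    """Assumed combination: VLAD residuals concatenated with the shape term."""
    return np.concatenate([vlad(descriptors, centroids),
                           shape_difference(descriptors, centroids, ref_std)])
```

As a worked example, with a single centroid at the origin and descriptors (1, 0) and (3, 0), the residual sum is (4, 0), which normalises to (1, 0). The per-dimension standard deviation of the same descriptors is (1, 0): two clusters with identical residual sums can differ in this spread, which is the kind of complementary information a shape term adds.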


Acknowledgments

Part of this work was funded under research grant PN-III-P2-2.1-PED-2016-1065, agreement 30PED/2017, project SPOTTER. This material is based in part on work supported by the National Science Foundation (NSF) under grant number IIS-1251187.

About this article

Cite this article

Duta, I.C., Uijlings, J.R.R., Ionescu, B. et al. Efficient human action recognition using histograms of motion gradients and VLAD with descriptor shape information. Multimed Tools Appl 76, 22445–22472 (2017). https://doi.org/10.1007/s11042-017-4795-6

DOI: https://doi.org/10.1007/s11042-017-4795-6