Abstract

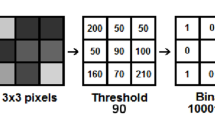

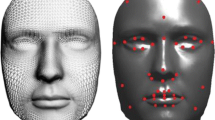

Facial expression synthesis has received widespread attention over the past several years because of its multimedia applications. Most earlier research works require example images of the target expressions to produce synthesized facial expressions. This paper aims to generate six basic and twelve blended facial expressions from a static RGB neutral face image without any exemplar expressive face images. The proposed automatic expression generation system consists of four sub-systems: a knowledge-based system, a module for the symbolic formulation of basic and blended facial expressions, an expressive facial components generator, and an expressive face generator. The knowledge-based system stores normalized facial feature parameter values. The symbolic formulations use the parameters stored in the knowledge base to reconstruct facial expressions from a static neutral face image. The expressive facial components generator performs automatic expressive facial feature generation as well as automatic facial feature extraction and landmark annotation. Finally, the expressive face generator combines the expressive facial components to produce an expressive face. The system-generated expressive face images are validated in four ways: (i) an inter-rater reliability measure, (ii) similarity measurement in the frequency domain, (iii) similarity measurement using SSIM, FSIM and HOG features, and (iv) recognition accuracy measurement using both appearance-based and geometry-based feature extraction methods. The geometry-based feature extraction method achieves 90% recognition accuracy on the system-generated face images.
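
The abstract names an inter-rater reliability measure as the first validation step, and the reference list includes Fleiss (1971). Assuming that measure is Fleiss' kappa (the abstract itself does not say so), the following is a minimal Python sketch of how agreement among several human raters labelling generated faces could be computed. The function name `fleiss_kappa` and the layout of the count table are illustrative assumptions, not the authors' code.

```python
from typing import Sequence


def fleiss_kappa(counts: Sequence[Sequence[int]]) -> float:
    """Fleiss' kappa for an N x k table of rating counts (hypothetical helper).

    counts[i][j] is the number of raters who assigned image i to expression
    category j. Every row must have k entries and sum to the same rater count n.
    """
    n_subjects = len(counts)
    if n_subjects == 0:
        raise ValueError("need at least one subject")
    n_categories = len(counts[0])
    n_raters = sum(counts[0])
    if n_raters < 2:
        raise ValueError("need at least two raters per subject")
    if any(len(row) != n_categories for row in counts):
        raise ValueError("every row must have the same number of categories")
    if any(sum(row) != n_raters for row in counts):
        raise ValueError("every subject must be rated by the same number of raters")

    # p_j: share of all assignments that fell into category j.
    p = [sum(row[j] for row in counts) / (n_subjects * n_raters)
         for j in range(n_categories)]

    # P_i: observed pairwise agreement for subject i; P_bar is their mean.
    p_i = [(sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
           for row in counts]
    p_bar = sum(p_i) / n_subjects

    # P_e: agreement expected if raters chose categories at random with rates p_j.
    p_e = sum(pj * pj for pj in p)
    if p_e == 1.0:
        raise ValueError("kappa is undefined when every rating is in one category")
    return (p_bar - p_e) / (1.0 - p_e)
```

For example, if four raters each agree unanimously on three images that belong to three different expression categories, `fleiss_kappa([[4, 0, 0], [0, 4, 0], [0, 0, 4]])` returns 1.0; values near 0 indicate agreement no better than chance.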


References

Abboud B, Davoine F (2005) Bilinear factorization for facial expression analysis and synthesis. IEE Proc Vis Image Signal Process 152(3):327–333

Adams R (1993) Radial decomposition of discs and spheres. CVGIP Graph Model Image Process 55(5):325–332. https://doi.org/10.1006/cgip.1993.1024

Agarwal S, Chatterjee M, Mukherjee DP (2012) Synthesis of emotional expressions specific to facial structure. Proc 8th Indian Conf Computer Vision, Graphics and Image Processing. https://doi.org/10.1145/2425333.2425361

Agarwala A (2007) Efficient gradient-domain compositing using Quadtrees. ACM Trans Graph. https://doi.org/10.1145/1276377.1276495

Bhattacharjee D, Halder S, Nasipuri M, Basu DK, Kundu M (2009) Construction of human faces from textual descriptions. Soft Comput 15:429–447. https://doi.org/10.1007/s00500-009-0524-z

Bhowmik MK, Saha K, Saha P, Bhattacharjee D (2014) DeitY-TU face database: its design, multiple cameras capturing, characteristics, and evaluation. Opt Eng 53(10):102106-1–102106-24. https://doi.org/10.1117/1.OE.53.10.102106

Calvin M, Qi R, Raghavan V (2003) A linear time algorithm for computing exact Euclidean distance transforms of binary images in arbitrary dimensions. IEEE Trans Pattern Anal Mach Intell 25(2):265–270. https://doi.org/10.1109/TPAMI.2003.1177156

Chen Y, Lin W, Zhang C, Chen Z, Xu N, Xie J (2013) Intra-and-inter-constraint-based video enhancement based on piecewise tone mapping. IEEE Trans Circuits Syst Video Technol 23(1):74–82. https://doi.org/10.1109/TCSVT.2012.2203198

Contreras V (2005) Artnatomy/Artnatomia. Spain. http://www.artnatomia.net

Dalal N, Triggs B (2005) Histograms of oriented gradients for human detection. Proc Computer Vision and Pattern Recognition, San Diego, pp 886–893

Daugman J (2004) How iris recognition works. IEEE Trans Circuits Syst Video Technol 14(1):21–30. https://doi.org/10.1109/TCSVT.2003.818350

Du S, Tao Y, Martinez AM (2014) Compound facial expressions of emotion. Proc Natl Acad Sci USA 111:E1454–E1462. https://doi.org/10.1073/pnas.1322355111

Ekman P, Friesen WV (1978) Facial action coding system. Consulting Psychologists Press, Palo Alto

Fleiss JL (1971) Measuring nominal scale agreement among many raters. Psychol Bull 76(5):378–382. https://doi.org/10.1037/h0031619

Haralick RM, Shapiro L (1992) Computer and robot vision, vol 1. Addison-Wesley, Boston, pp 174–185

Huang D, Torre FD (2010) Bilinear kernel reduced rank regression for facial expression synthesis. In: Daniilidis K, Maragos P, Paragios N (eds), Lecture notes in computer science, Springer, https://doi.org/10.1007/978-3-642-15552-9_27

Johnson NL, Kotz S, Balakrishnan N (1995) Continuous univariate distributions, 2nd edn. Wiley, New York

King I, Hou HT (1996) Radial basis network for facial expression synthesis. Proc Int Conf Neural Information Processing

Kouzani AZ, Nahavandi S (2001) Constructing artificial images of facial expressions. Seventh Australian and New Zealand Intelligent Information Systems Conf, pp 18–21. https://doi.org/10.1109/ANZIIS.2001.974072

Lankton S (2009) Sparse field methods. Technical report, Georgia Institute of Technology

Li H, Weise T, Pauly M (2010) Example-based facial rigging. ACM Trans Graph 29(4):1–12. https://doi.org/10.1145/1778765.1778769

Li K, Dai Q, Wang R, Liu Y, Xu F, Wang J (2014) A data-driven approach for facial expression retargeting in video. IEEE Trans Multimedia 16(2):299–310. https://doi.org/10.1109/TMM.2013.2293064

Liu Z, Shan Y, Zhang Z (2001) Expressive expression mapping with ratio images. Proc 28th Annual Conf Computer Graphics and Interactive Techniques, pp 271–276. https://doi.org/10.1145/383259.383289

Marr D, Hildreth E (1980) Theory of edge detection. Proc R Soc Lond B Biol Sci 207(1167):187–217. https://doi.org/10.1098/rspb.1980.0020

Otsu N (1979) A threshold selection method from gray-level histograms. IEEE Trans Syst Man Cybern 9(1):62–66. https://doi.org/10.1109/TSMC.1979.4310076

Parker JR (1997) Algorithms for image processing and computer vision. Wiley, New York

Pratt WK (1991) Digital Image Processing. Wiley, New York

Saha P, Bhattacharjee D, De BK, Nasipuri M (2016) Mathematical representations of blended facial expressions towards facial expression modeling. Procedia Comput Sci 84:94–98. https://doi.org/10.1016/j.procs.2016.04.071

Sheu J-S, Hsieh T-S, Shou H-N (2014) Automatic generation of facial expression using triangular geometric deformation. J Appl Res Technol 12(6):1115–1130. https://doi.org/10.1016/S1665-6423(14)71671-2

Smith JO (2007) Mathematics of the discrete Fourier transform (DFT), 2nd edn. W3K Publishing

Soille P (1999) Morphological image analysis: principles and applications. Springer-Verlag, Berlin Heidelberg

Song M, Dong Z, Theobalt C, Wang H, Liu Z, Seidel H (2007) Generic framework for efficient 2D and 3D facial expression analogy. IEEE Trans Multimedia 9(7):1384–1395. https://doi.org/10.1109/TMM.2007.906591

Susskind JM, Hinton GE, Movellan JR, Anderson AK (2008) Generating facial expressions with deep belief nets. In: Or J (ed) Affective computing. InTech, pp 421–440. https://doi.org/10.5772/6167

Tang X, Stewart WK (2000) Optical and sonar image classification: wavelet packet transform vs. Fourier transform. Comput Vis Image Underst 79(1):25–46. https://doi.org/10.1006/cviu.2000.0843

Thies J, Zollhöfer M, Nießner M, Valgaerts L, Stamminger M, Theobalt C (2015) Real-time expression transfer for facial reenactment. ACM Trans Graph 34(6):183:1–183:14. https://doi.org/10.1145/2816795.2818056

Tian YL, Kanade T, Cohn JF (2001) Recognizing action units for facial expression analysis. IEEE Trans Pattern Anal Mach Intell 23:97–115. https://doi.org/10.1109/34.908962

Tsai Y, Lin HJ, Yang FW (2012) Facial expression synthesis based on imitation. In: Dornaika F (ed) International journal of advanced robotic systems. InTech, pp 1–6. https://doi.org/10.5772/51906

Viola P, Jones MJ (2001) Robust real time object detection. Int J Comp Vis 57(2):137–154. https://doi.org/10.1023/B:VISI.0000013087.49260.fb

Wan X, Jin X (2012) Data-driven facial expression synthesis via Laplacian deformation. Multimed Tools Appl 58:109–123. https://doi.org/10.1007/s11042-010-0688-7

Wang Z, Bovik AC, Sheikh HR, Simoncelli EP (2004) Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process 13(4):600–612

Wu J, Chi H, Chi L (2011) A cloud model-based approach for facial expression synthesis. J Multimed 6(2):217–224. https://doi.org/10.4304/jmm.6.2.217-224

Xavier I, Pereira M, Giraldi G, Gibson S, Solomon C, Rueckert D, Gillies D, Thomaz C (2015) A photo-realistic generator of most expressive and discriminant changes in 2D face images. Proc Sixth Int Conf Emerging Security Technologies, pp 80–85. https://doi.org/10.1109/EST.2015.17

Xie W, Shen L, Yang M, Jiang J (2017) Facial expression synthesis with direction field preservation based mesh deformation and lighting fitting based wrinkle mapping. Multimed Tools Appl. https://doi.org/10.1007/s11042-017-4661-6

Xiong L, Zheng N, Du S, Wu L (2009) Extended facial expression synthesis using statistical appearance model. 4th IEEE Conf Industrial Electronics and Applications, pp 1582–1587. https://doi.org/10.1109/ICIEA.2009.5138461

Yang C-K, Chiang W-T (2008) An interactive facial expression generation system. Multimed Tools Appl 40(1):41–60. https://doi.org/10.1007/s11042-007-0184-x

Zhang Y, Ji Q (2005) Active and dynamic information fusion for facial expression understanding from image sequences. IEEE Trans Pattern Anal Mach Intell 27(5):699–714. https://doi.org/10.1109/TPAMI.2005.93

Zhang Q, Liu Z, Guo B, Terzopoulos D, Shum H-Y (2006) Geometry-driven photorealistic facial expression synthesis. IEEE Trans Vis Comput Graph 12(1):48–60. https://doi.org/10.1109/TVCG.2006.9

Zhang L, Zhang D, Mou X (2011) FSIM: a feature similarity index for image quality assessment. IEEE Trans Image Process 20(8):2378–2386

Zhang Y, Lin W, Sheng B, Wu J, Li H, Zhang C (2012) Facial expression mapping based on elastic and muscle-distribution-based models. IEEE Int Symp Circuits Syst:2685–2688. https://doi.org/10.1109/ISCAS.2012.6271860

Zhang Y, Lin W, Zhou B, Chen Z, Sheng B, Wu J (2014) Facial expression cloning with elastic and muscle models. J Vis Commun Image Represent 25(5):916–927. https://doi.org/10.1016/j.jvcir.2014.02.010

Zhe X, John D, Boucouvalas AC (2004) Emotion extraction engine: expressive image generator. Proc HCI:367–381. https://doi.org/10.1007/978-1-4471-3754-2_23

Zhu X, Li X, Song L (2013) A 2D personalized facial expression generation approach. Proc 2nd Int Conf Innovative Computing and Cloud Computing, China, pp 78–82. https://doi.org/10.1145/2556871.2556889

Acknowledgements

The work presented here is being conducted under a research project supported by Grant No. 12(2)/2011-ESD, dated 29/03/2011, from DeitY, MCIT, Government of India. The first author is grateful to the Department of Science and Technology (DST), Government of India, for providing her Junior Research Fellowship-Professional (JRF-Professional) under the DST-INSPIRE fellowship program (No. IF131067).


About this article

Cite this article

Saha, P., Bhattacharjee, D., De, B.K. et al. Facial component-based blended facial expressions generation from static neutral face images. Multimed Tools Appl 77, 20177–20206 (2018). https://doi.org/10.1007/s11042-017-5436-9


DOI: https://doi.org/10.1007/s11042-017-5436-9