Abstract

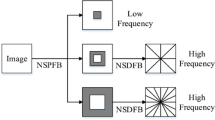

Multi-source image fusion has attracted considerable attention in recent years because it can achieve higher detection rates. To improve the accuracy of multi-feature detection on pig bodies, multi-source image fusion has been adopted in this field. However, traditional multi-source image fusion methods cannot produce fused images with good contrast and rich detail. To better detect the shape and temperature features of the pig body, a novel infrared and visible image fusion method in the non-subsampled contourlet transform (NSCT) domain, named NSCT-GF-IAG, was proposed. In this method, the visible and infrared images were first decomposed by NSCT into a series of multi-scale, multi-directional sub-bands. Then, to better represent fine-scale texture information and coarse-scale detail information, an even-symmetric Gabor filter and an improved average gradient (IAG) were employed to fuse the low-frequency and high-frequency sub-bands, respectively. Next, the fused coefficients were reconstructed into the final fused image by inverse NSCT. Finally, the pig-body shape was obtained by automatic threshold segmentation and refined by morphological processing, and the highest temperature was extracted within the segmented pig-body region. Experimental results showed that the proposed method achieved an average segmentation rate 2.175–5.129% higher than prevailing conventional methods for multi-feature detection. The proposed method also reduced time consumption.
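The fusion and segmentation steps described in the abstract can be sketched in plain Python. This is a hedged illustration under stated assumptions, not the authors' implementation: NSCT decomposition and reconstruction are omitted (the functions operate on sub-bands assumed to come from some NSCT implementation), the Gabor parameters and the response-weighted averaging for the low-frequency band are assumptions, the standard average gradient stands in for the paper's improved average gradient (IAG), Otsu's method is assumed for the "automatic threshold", and a 3×3 morphological opening is assumed for the morphological processing. All function names are hypothetical.

```python
import math

# Hypothetical sketch. Sub-bands/images are equal-shaped lists of lists of numbers.
# NSCT itself is NOT implemented; kernel parameters, window sizes and the weighting
# scheme are illustrative assumptions; the standard average gradient replaces the IAG.

def gabor_even_kernel(size=5, sigma=1.0, theta=0.0, wavelength=4.0):
    """Even-symmetric (cosine-phase) Gabor kernel; symmetric, so correlation == convolution."""
    r = size // 2
    kernel = []
    for y in range(-r, r + 1):
        row = []
        for x in range(-r, r + 1):
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr * xr + yr * yr) / (2 * sigma * sigma))
            row.append(envelope * math.cos(2 * math.pi * xr / wavelength))
        kernel.append(row)
    return kernel

def filter_abs(img, kernel):
    """Magnitude of img filtered by kernel, borders replicated; a local activity map."""
    h, w, r = len(img), len(img[0]), len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            s = 0.0
            for dy in range(-r, r + 1):
                for dx in range(-r, r + 1):
                    y, x = min(max(i + dy, 0), h - 1), min(max(j + dx, 0), w - 1)
                    s += img[y][x] * kernel[dy + r][dx + r]
            out[i][j] = abs(s)
    return out

def fuse_low(low_ir, low_vis, eps=1e-12):
    """Assumed low-frequency rule: average weighted by Gabor response magnitude."""
    k = gabor_even_kernel()
    g_ir, g_vis = filter_abs(low_ir, k), filter_abs(low_vis, k)
    fused = []
    for i in range(len(low_ir)):
        row = []
        for j in range(len(low_ir[0])):
            a, b = g_ir[i][j] + eps, g_vis[i][j] + eps  # eps: plain mean if both are 0
            row.append((a * low_ir[i][j] + b * low_vis[i][j]) / (a + b))
        fused.append(row)
    return fused

def local_avg_gradient(band, r=1):
    """Mean of sqrt((gx^2 + gy^2) / 2) over a (2r+1)x(2r+1) window (forward differences)."""
    h, w = len(band), len(band[0])
    g = [[math.sqrt(((band[i][min(j + 1, w - 1)] - band[i][j]) ** 2
                     + (band[min(i + 1, h - 1)][j] - band[i][j]) ** 2) / 2)
          for j in range(w)] for i in range(h)]
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            vals = [g[y][x] for y in range(max(i - r, 0), min(i + r + 1, h))
                    for x in range(max(j - r, 0), min(j + r + 1, w))]
            out[i][j] = sum(vals) / len(vals)
    return out

def fuse_high(high_ir, high_vis):
    """Keep the coefficient whose neighbourhood has the larger average gradient (ties: IR)."""
    a, b = local_avg_gradient(high_ir), local_avg_gradient(high_vis)
    return [[high_ir[i][j] if a[i][j] >= b[i][j] else high_vis[i][j]
             for j in range(len(a[0]))] for i in range(len(a))]

def otsu_threshold(img):
    """Otsu's threshold on gray levels clamped to 0..255; foreground is v > threshold."""
    hist = [0] * 256
    for row in img:
        for v in row:
            hist[min(max(int(v), 0), 255)] += 1
    total, sum_all = sum(hist), sum(t * n for t, n in enumerate(hist))
    best_t, best_var, w0, sum0 = 0, -1.0, 0, 0.0
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        m0, m1 = sum0 / w0, (sum_all - sum0) / w1
        var = w0 * w1 * (m0 - m1) ** 2  # between-class variance (unnormalised)
        if var > best_var:
            best_var, best_t = var, t
    return best_t

def morph(mask, erode):
    """3x3 binary erosion (erode=True) or dilation; the window is truncated at borders."""
    h, w = len(mask), len(mask[0])
    op = all if erode else any
    return [[op(mask[y][x] for y in range(max(i - 1, 0), min(i + 2, h))
                for x in range(max(j - 1, 0), min(j + 2, w)))
             for j in range(w)] for i in range(h)]

def segment_and_max_temp(fused_gray, temp_map):
    """Otsu mask cleaned by opening (erode, then dilate); returns (mask, max temperature)."""
    t = otsu_threshold(fused_gray)
    mask = morph(morph([[v > t for v in row] for row in fused_gray], True), False)
    temps = [temp_map[i][j] for i, row in enumerate(mask) for j, m in enumerate(row) if m]
    return mask, (max(temps) if temps else None)
```

For example, on a fused gray image containing a 3×3 bright block plus one isolated bright pixel, `segment_and_max_temp` keeps the block and discards the isolated pixel through the opening, so a hot speck outside the body region does not set the maximum temperature. A real pipeline would run `fuse_low` and `fuse_high` on NSCT sub-bands and reconstruct with the inverse NSCT before segmentation.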



Acknowledgements

The authors would like to thank their colleagues for their support of this work. The detailed comments from the anonymous reviewers are gratefully acknowledged. This work was supported by the Key Research and Development Project of Shandong Province (Grant No. 2019GNC106091) and the National Key Research and Development Program (Grant No. 2016YFD0200600-2016YFD0200602).


Cite this article

Zhong, Z., Gao, W., Khattak, A.M. et al. A novel multi-source image fusion method for pig-body multi-feature detection in NSCT domain. Multimed Tools Appl 79, 26225–26244 (2020). https://doi.org/10.1007/s11042-020-09044-9
