Abstract

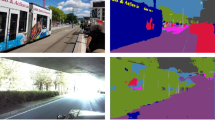

Robust multimodal fusion is a challenging research problem in semantic scene understanding. In real-world applications, a fusion system can overcome the drawbacks of individual sensors by exploiting the different feature representations and statistical properties of multiple modalities (e.g., RGB-depth cameras, multispectral cameras). In this paper, we propose a novel central multimodal fusion framework for semantic image segmentation of road scenes, which aims to learn joint feature representations effectively and to combine deep neural networks optimally with statistical priors. More specifically, the proposed framework automatically generates a central branch by sequentially mapping multimodal features, both low-level and high-level, into a common space. In addition, to reduce model uncertainty, we employ statistical fusion to compute the final prediction, which leads to a significant performance improvement. We conduct extensive experiments on various outdoor scene datasets. Both qualitative and quantitative results demonstrate that our central fusion framework achieves competitive performance against existing multimodal fusion methods.
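The abstract does not give the exact update rules, so the following is only a minimal sketch under stated assumptions. Part (a) is a central-branch update in the style of CentralNet (Vielzeuf et al., ECCV 2018). At each depth, the next central feature is a weighted sum of the previous central feature and every modality's feature, roughly $h_C^{i+1} = \alpha_C h_C^{i} + \sum_m \alpha_m h_m^{i}$. Part (b) is one plausible form of "statistical fusion": a naive-Bayes combination of per-branch class posteriors with a class prior, $p(y \mid x_1,\dots,x_n) \propto \prod_k p(y \mid x_k) / p(y)^{n-1}$. The function names, the fixed scalar weights (which would be learned in a real network), the omission of the convolution that follows each central sum, and the conditional-independence assumption are all hypothetical. None of this is the authors' implementation.

```python
import math
from typing import List, Sequence

Vector = List[float]


def central_step(central: Vector, modal_feats: Sequence[Vector],
                 alpha_central: float, alpha_modal: Sequence[float]) -> Vector:
    """Hypothetical CentralNet-style step: weighted sum of the previous central
    feature and each modality's feature at the same depth. In a real network the
    alphas are learned and the sum feeds the next central layer (omitted here)."""
    if len(modal_feats) != len(alpha_modal):
        raise ValueError("need one weight per modality")
    fused = [alpha_central * c for c in central]
    for w, feat in zip(alpha_modal, modal_feats):
        if len(feat) != len(fused):
            raise ValueError("features must live in a common space (same length)")
        fused = [f + w * x for f, x in zip(fused, feat)]
    return fused


def softmax(logits: Vector) -> Vector:
    """Numerically stable softmax over class scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def bayes_fuse(posteriors: Sequence[Vector], prior: Vector) -> Vector:
    """Assumed naive-Bayes fusion of per-branch posteriors for one pixel:
    p(y | x_1..x_n) is proportional to prod_k p(y | x_k) / p(y)^(n-1).
    All probabilities must be strictly positive (log is taken)."""
    n = len(posteriors)
    log_scores = [
        sum(math.log(p[c]) for p in posteriors) - (n - 1) * math.log(p_c)
        for c, p_c in enumerate(prior)
    ]
    # softmax(log_scores) == exp(log_scores) normalized to sum to 1
    return softmax(log_scores)


if __name__ == "__main__":
    rgb_feat, depth_feat = [0.2, -0.1, 0.5], [0.4, 0.3, -0.2]
    central = central_step([0.0] * 3, [rgb_feat, depth_feat], 1.0, [0.5, 0.5])
    print("central feature:", central)
    # Per-branch class posteriors for one pixel (illustrative logits only)
    branch_posts = [softmax([2.0, 0.5, 0.1]), softmax([1.5, 1.0, 0.2]),
                    softmax([2.2, 0.4, 0.1])]
    print("fused posterior:", bayes_fuse(branch_posts, prior=[1 / 3] * 3))
```

With a uniform prior the division by $p(y)^{n-1}$ is a constant and drops out, so branches that agree simply sharpen the fused posterior. A non-uniform prior (for example, class frequencies estimated on the training set) would reweight rare and common classes. That is one way to read "statistical priors" in the abstract, but it remains an assumption.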



Acknowledgements

This research was supported by the French Agence Nationale de la Recherche (ANR) under grant ANR-17-CE22-0011 (project ICUB). We gratefully acknowledge the support of NVIDIA Corporation with the donation of the GPUs used in this research.


About this article

Cite this article

Zhang, Y., Morel, O., Seulin, R. et al. A central multimodal fusion framework for outdoor scene image segmentation. Multimed Tools Appl 81, 12047–12060 (2022). https://doi.org/10.1007/s11042-020-10357-y


DOI: https://doi.org/10.1007/s11042-020-10357-y