Abstract

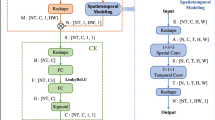

Video-based action recognition is a challenging task in computer vision that has attracted extensive attention from the academic community. Most existing methods for action recognition treat all spatial or temporal input features equally and thus ignore the differences in contribution among features. To address this problem, we propose a spatial-temporal channel-wise attention network (STCAN) that effectively learns discriminative features of human actions by adaptively recalibrating channel-wise feature responses. Specifically, the STCAN is built on a two-stream structure, which extracts spatial and temporal information, and incorporates a channel-wise attention unit (CAU) module that we design. The CAU module models the interdependencies between channels and generates a weight distribution that selectively enhances informative features. We evaluate STCAN on two standard action recognition datasets, UCF101 and HMDB51, and perform comparative experiments to demonstrate its effectiveness.
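To make the idea of channel-wise recalibration concrete, the following is a minimal sketch in plain Python. The abstract does not specify the internal layers of the CAU, so this sketch assumes a squeeze-and-excitation style design: global average pooling per channel, a small bottleneck of two fully connected layers, and a sigmoid that yields one weight per channel. The function names, the reduction ratio `r`, and the random weights are all hypothetical and are not taken from the paper.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(matrix, vector):
    # Plain matrix-vector product: one output value per matrix row.
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def channel_attention(features, w_reduce, w_expand):
    """Assumed SE-style channel attention (not the paper's exact CAU).

    features: list of C channels, each an H x W list of lists.
    w_reduce: (C // r) x C weight matrix, w_expand: C x (C // r) matrix.
    Returns (recalibrated features, per-channel weights).
    """
    # Squeeze: summarise each channel by its global average.
    squeezed = [
        sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in features
    ]
    # Excitation: bottleneck layer with ReLU, then expansion with sigmoid,
    # so each channel weight depends on all channel summaries.
    hidden = [max(0.0, h) for h in matvec(w_reduce, squeezed)]
    weights = [sigmoid(z) for z in matvec(w_expand, hidden)]
    # Recalibration: scale every value in a channel by that channel's weight.
    scaled = [
        [[weights[c] * x for x in row] for row in ch]
        for c, ch in enumerate(features)
    ]
    return scaled, weights


# Toy input: C=4 channels of size 2x2, reduction ratio r=2 (illustrative values).
random.seed(0)
C, H, W, r = 4, 2, 2, 2
features = [[[random.random() for _ in range(W)] for _ in range(H)] for _ in range(C)]
w_reduce = [[random.uniform(-1, 1) for _ in range(C)] for _ in range(C // r)]
w_expand = [[random.uniform(-1, 1) for _ in range(C // r)] for _ in range(C)]

scaled, weights = channel_attention(features, w_reduce, w_expand)
print("channel weights:", weights)
```

In a two-stream setting, a unit of this kind would presumably be applied to the feature maps of both the spatial and the temporal stream; in a trained network the two weight matrices are learned rather than sampled at random.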

Acknowledgements

This research is supported by the National Natural Science Foundation of China (62033007, 61873146, 61973186, 61821004 and 62073192), the Key and Development Plan of Shandong Province (Grant No. 2019JZZY010433) and the Taishan Scholars Climbing Program of Shandong Province.

Cite this article

Chen, L., Liu, Y. & Man, Y. Spatial-temporal channel-wise attention network for action recognition. Multimed Tools Appl 80, 21789–21808 (2021). https://doi.org/10.1007/s11042-021-10752-z

DOI: https://doi.org/10.1007/s11042-021-10752-z