Abstract

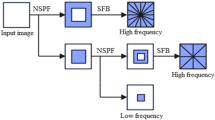

Multimodal medical image fusion can help doctors diagnose diseases accurately and efficiently. However, fusion methods based on multi-scale decomposition suffer from low contrast and energy loss, and fusion methods based on sparse representation suffer from weak expressive ability, caused by the use of a single dictionary, and from spatial inconsistency. To solve these problems, this paper proposes a novel multimodal medical image fusion method based on the nonsubsampled shearlet transform (NSST) and convolutional sparse representation (CSR). First, the registered source images are decomposed into multi-scale, multi-directional sub-images, and a separate sub-dictionary is learned from each set of sub-images using the alternating direction method of multipliers (ADMM). Second, the sub-images at each scale are encoded by convolutional sparse representation to obtain the sparse coefficients of the low-frequency and high-frequency components, respectively. Third, the low-frequency coefficients are fused using the regional energy and the average \({l}_{1}\) norm, while the high-frequency coefficients are fused using the improved spatial frequency and the average \({l}_{1}\) norm. Finally, the fused image is reconstructed by the inverse NSST. Experimental results on a series of multimodal brain images, including CT, MR-T2, PET, and SPECT, demonstrate that the proposed method achieves state-of-the-art performance compared with other popular medical image fusion methods in both objective and subjective assessments.
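
To make the coefficient-fusion step more concrete, the following is a minimal sketch of one ingredient only: a choose-max rule driven by the window-averaged \({l}_{1}\) norm of the sparse coefficient maps. It is an illustration under stated assumptions, not the authors' implementation. The function names `box_mean` and `fuse_by_avg_l1`, the window radius `r`, and the coefficient layout `(M, H, W)` (M filters, H x W pixels) are all hypothetical. The regional energy and improved spatial frequency terms are not defined in the abstract, so they are left out.

```python
import numpy as np


def box_mean(a, r):
    """Mean of `a` over a (2r+1) x (2r+1) window, replicating the edges."""
    padded = np.pad(a, r, mode="edge")
    k = 2 * r + 1
    out = np.zeros_like(a, dtype=float)
    # Sum the k*k shifted copies of the padded array, then normalise.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + a.shape[0], dx:dx + a.shape[1]]
    return out / (k * k)


def fuse_by_avg_l1(coef_a, coef_b, r=1):
    """Choose-max fusion of two coefficient stacks of shape (M, H, W).

    Assumption: the activity level at each pixel is the l1 norm taken
    across the M coefficient maps, averaged over a local window.
    """
    act_a = box_mean(np.abs(coef_a).sum(axis=0), r)
    act_b = box_mean(np.abs(coef_b).sum(axis=0), r)
    mask = act_a >= act_b  # True where source A is at least as active
    fused = np.where(mask[None, :, :], coef_a, coef_b)
    return fused, mask
```

Calling `fuse_by_avg_l1(coef_a, coef_b)` on two coefficient stacks of the same shape returns the fused stack together with the decision mask. Averaging the activity map before comparison is a common way to make the decision less sensitive to isolated coefficients.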

Funding

Natural Science Foundation of Shanxi Province: Research on texture and edge information in medical image fusion of cancer and brain tumor, China (201901D111152).

About this article

Cite this article

Wang, L., Dou, J., Qin, P. et al. Multimodal medical image fusion based on nonsubsampled shearlet transform and convolutional sparse representation. Multimed Tools Appl 80, 36401–36421 (2021). https://doi.org/10.1007/s11042-021-11379-w

DOI: https://doi.org/10.1007/s11042-021-11379-w