Abstract

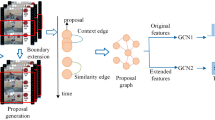

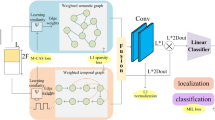

Graph convolutional networks have been applied to temporal action detection. However, they operate on temporal context from the local view of segments that contain incomplete actions, which weakens their ability to learn a global representation. To alleviate this problem, we propose a novel mask attention-guided graph convolution layer for weakly supervised temporal action detection, in which local and global views cooperate. The graph weighted by feature similarity between segments serves as the local view, while the global view uses the overall action distribution of the video as a guide to establish the relevance of all actions in the video. With this global guide, the graph convolutional network can learn a more discriminative spatio-temporal correlation representation. Taking the segment features as graph nodes, we construct a mask attention-guided foreground–background graph and a transition-aware temporal mask graph, from which segment association features and temporal context features are obtained; these features are then cascaded and used for action detection. Experiments on the THUMOS14 and ActivityNet1.2 datasets achieve a mean average precision of 29.7% and 32.7%, respectively, at a tIoU threshold of 0.5. The results show that the proposed approach effectively improves action detection performance.
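The cooperation of a local and a global view can be illustrated with a minimal NumPy sketch. It is not the layer defined in the paper: the abstract gives no formulas, so the cosine-similarity adjacency, the outer-product attention mask, the element-wise combination, the row normalization, and all function names below are assumptions chosen only to make the idea concrete.

```python
import numpy as np


def cosine_similarity_graph(features):
    """Local view: edges weighted by cosine similarity between segment features."""
    norms = np.linalg.norm(features, axis=1, keepdims=True)
    unit = features / np.maximum(norms, 1e-8)
    # Negative similarities are clipped so that every edge weight is non-negative.
    return np.maximum(unit @ unit.T, 0.0)


def attention_mask_graph(attention):
    """Global view (assumed form): an edge is strong when both segments
    have high foreground attention over the whole video."""
    return np.outer(attention, attention)


def row_normalize(adj):
    """Scale each row to sum to 1 so aggregation is a weighted average."""
    return adj / np.maximum(adj.sum(axis=1, keepdims=True), 1e-8)


def guided_graph_conv(features, attention, weight):
    """One graph convolution step: the local graph is modulated by the
    global attention mask, then features are aggregated and projected."""
    adj = row_normalize(cosine_similarity_graph(features) * attention_mask_graph(attention))
    return np.maximum(adj @ features @ weight, 0.0)  # ReLU activation


# Hypothetical toy input: 6 segments with 8-dimensional features.
rng = np.random.default_rng(0)
features = rng.normal(size=(6, 8))
attention = rng.uniform(0.1, 1.0, size=6)  # assumed per-segment foreground scores
weight = rng.normal(size=(8, 4))           # assumed learnable projection
out = guided_graph_conv(features, attention, weight)
print(out.shape)  # (6, 4)
```

In this sketch the attention mask down-weights edges that touch likely background segments, so each node aggregates mostly from segments that the video-level attention marks as action. In a trained model the attention scores and the projection matrix would be learned rather than sampled at random.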

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grants 61771420 and 62001413, and by the Natural Science Foundation of Hebei Province under Grant F2020203064.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Zhao, M., Hu, Z., Li, S. et al. Mask attention-guided graph convolution layer for weakly supervised temporal action detection. Multimed Tools Appl 81, 4323–4340 (2022). https://doi.org/10.1007/s11042-021-11768-1

DOI: https://doi.org/10.1007/s11042-021-11768-1