Abstract

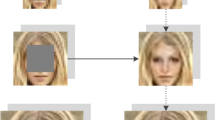

At present, most existing image inpainting methods cannot reconstruct a plausible image structure, especially when important parts of the image are missing. Some methods focus on reconstructing a continuous and plausible structure between the missing and undamaged areas, but when restoring the texture they generate blurry textures that are inconsistent with the surrounding area. To give the inpainted image both a continuous structure and vivid texture, this paper proposes a three-stage model. In the first stage, an edge generator trained on image edge maps inpaints the missing edge structure of the input. In the second stage, a structure reconstructor trained on smoothed images and guided by the predicted edge map completes the overall structure of the image. In the third stage, a texture generator that uses an appearance flow operation generates the texture details of the image on top of the reconstructed structure. We conducted experiments on multiple datasets. Compared with state-of-the-art methods, images inpainted by our method have more plausible structure and more vivid texture, and our method achieves better performance.
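The third stage relies on appearance flow: instead of synthesizing hole pixels from scratch, the generator predicts, for each target location, an offset into the known region and copies bilinearly sampled content from there. The Python sketch below illustrates only that sampling step, under stated assumptions: a single-channel grid, hand-written offsets in pixel units, coordinates clamped to the image border, and a simple mask composite that keeps known pixels. The helper names `bilinear_sample`, `appearance_flow_warp` and `fill_hole` are hypothetical. In the paper's model the flow would be predicted by the texture generator and may be applied to feature maps rather than raw pixels; the abstract does not specify these details.

```python
import math
from typing import List, Tuple

Grid = List[List[float]]
Flow = List[List[Tuple[float, float]]]  # per-pixel (dy, dx) offsets in pixels (assumed convention)


def bilinear_sample(src: Grid, y: float, x: float) -> float:
    """Sample src at a fractional (y, x), clamping coordinates to the border."""
    h, w = len(src), len(src[0])
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    wy, wx = y - y0, x - x0
    # Interpolate horizontally on the two neighbouring rows, then vertically.
    top = (1 - wx) * src[y0][x0] + wx * src[y0][x1]
    bottom = (1 - wx) * src[y1][x0] + wx * src[y1][x1]
    return (1 - wy) * top + wy * bottom


def appearance_flow_warp(src: Grid, flow: Flow) -> Grid:
    """out[y][x] = src sampled at (y + dy, x + dx), where (dy, dx) = flow[y][x]."""
    return [
        [bilinear_sample(src, y + dy, x + dx) for x, (dy, dx) in enumerate(row)]
        for y, row in enumerate(flow)
    ]


def fill_hole(image: Grid, mask: List[List[int]], flow: Flow) -> Grid:
    """Keep known pixels (mask == 0); replace hole pixels (mask == 1) with warped values."""
    warped = appearance_flow_warp(image, flow)
    return [
        [warped[y][x] if mask[y][x] else image[y][x] for x in range(len(image[0]))]
        for y in range(len(image))
    ]


# A 3x4 image whose right column is missing (hole pixels set to 0.0).
image = [
    [1.0, 2.0, 3.0, 0.0],
    [4.0, 5.0, 6.0, 0.0],
    [7.0, 8.0, 9.0, 0.0],
]
mask = [[0, 0, 0, 1] for _ in range(3)]
# Hand-written flow (a network would predict this): each hole pixel samples 1.5 pixels to its left.
flow = [[(0.0, -1.5) if m else (0.0, 0.0) for m in row] for row in mask]
filled = fill_hole(image, mask, flow)
print(filled)
# → [[1.0, 2.0, 3.0, 2.5], [4.0, 5.0, 6.0, 5.5], [7.0, 8.0, 9.0, 8.5]]
```

Because the offsets are fractional, each filled pixel blends two known neighbours; this differentiable sampling is what lets a network learn where to copy texture from, rather than hallucinating a blurry average.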




Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.



About this article

Cite this article

Liu, Q., Ji, H. & Liu, G. Generative image inpainting using edge prediction and appearance flow. Multimed Tools Appl 81, 31709–31725 (2022). https://doi.org/10.1007/s11042-022-12486-y

