Abstract

Mathematical models of Ca2+ release sites derived from Markov chain models of intracellular Ca2+ channels exhibit collective gating reminiscent of the experimentally observed phenomenon of stochastic Ca2+ excitability (i.e., puffs and sparks). Ca2+ release site models are composed of a number of individual channel models whose dynamic behavior depends on the local Ca2+ concentration which is influenced by the state of all channels. We consider this application area to illustrate how stochastic Petri nets and in particular stochastic activity networks can be used to model dynamical phenomena in cell biology. We highlight how state-sharing composition operations as supported by the Möbius framework can represent both mean-field and spatial coupling assumptions in a natural manner. We investigate how state-of-the-art techniques for the numerical and simulative analysis of Markov chains associated with stochastic Petri nets scale when modeling Ca2+ signaling complexes of physiological size and complexity.

Similar content being viewed by others

1 Introduction

Monte Carlo Markov chain (MCMC) simulation and Gillespie’s method are frequently applied in the analysis of stochastic models of biophysical and biochemical phenomena, in particular for those aspects that are discrete in nature and where traditional approaches based on differential equations find their limits. Most challenges in working with Markov chains are related to largeness, be it largeness of model descriptions or largeness of state spaces. While largeness of descriptions is primarily a challenge for a human–computer interface that can be resolved using modeling formalisms that are expressive, intuitive, and easy to communicate, largeness of state spaces relates to the amount of computation time and memory necessary to compute the required results, i.e., the properties of interest for a given model.

Many modeling formalisms that allow a modeler to work with Markov chains at a higher level of abstraction have been developed and discussed in the last decades. Those can be seen as almost canonical extensions of an originally untimed formalism that describes a state transition system and then adds transition rates to those elements of the formalism that perform changes in the system’s state. Examples for such endeavors include (generalized) stochastic Petri nets (GSPNs) (Marsan et al. 1995) as an extension of Petri nets, Markovian process algebras like PEPA (Hillston 2005) and process algebras like Milner’s calculus of communicating systems (CCS), pi calculus and stochastic pi calculus (Priami 1995), and state charts and stocharts (Jansen and Hermanns 2004).

GSPNs have a number of positive aspects for modeling biological systems: they provide a clear yet simple separation of states and transitions with easy to grasp and formally well-defined semantics; and they come with a graphical representation (with the usual limits of scalability for any graphical formalism). GSPNs are particularly suitable to describe entities that act asynchronously yet occasionally synchronize. GSPNs allow a modeler to describe extremely large continuous time Markov chains (CTMCs) in a concise manner using a few powerful constructs. As for Petri nets, GSPNs can have associated CTMCs that have state spaces whose size in general cannot even be bounded by a primitive recursive function (Priese and Wimmel 2003), a phenomenon that is called state space explosion. Although special cases like CTMCs that are amenable to matrix geometric solutions exist, in general, numerical analysis techniques to compute transient or steady-state distributions are restricted to finite CTMCs, i.e., GSPNs that have a finite set of reachable states. The model-based evaluation of biophysical and biochemical phenomena often takes place by MCMC simulation and not by numerical solution of a CTMC, but there are existing numerical techniques (Stewart 1994). The efficiency of simulation depends on the level of detail considered by reward measures, the required precision of the results (width of confidence intervals), and the frequency with which relevant events can be observed (rare events are a well-known borderline case for efficient simulation). In many cases, the desired results could, in principle, also be derived through analysis of the underlying CTMCs; however, in practice, the size of the system of equations that needs to be solved is prohibitive. This “largeness problem” has motivated much research in the construction and numerical solution of CTMCs.

Thus, we face a quest for scalability on both ends, for a graphical model description as well as for scalability of numerical analysis techniques to compute steady-state or transient distributions. Among possible ways to make Petri net descriptions scale, some approaches improve the scalability of descriptions, while others provide structural information that is valuable for a subsequent analysis. The classical approach of place and transition refinement only serves the first purpose and helps to describe large Petri nets in an organized manner. However, compositional operators like synchronization of transitions as for superposed GSPNs (Donatelli 1994), sharing places (state variables) in Replicate/Join and Graph composition operations as supported in Möbius (Deavours et al. 2002), as well as GSPN extensions towards stochastic well-formed nets (SWN) (Chiola et al. 1993) provide ways to structure large models as well as give rise to a model decomposition that may reduce the underlying CTMC based on lumpability. We consider the latter approaches more valuable since they provide a modeler with means to describe large Markov models in a well-structured manner, while simultaneously allowing advanced analysis techniques to apply.

In what follows, we first discuss existing work on the use of timed and stochastic Petri nets in modeling aspects of biological systems. In Sect. 3, we briefly introduce Markovian models of Ca2+ signaling complexes as a particular application area. In Sect. 4, we recall stochastic Petri nets and composition operators in order to cope with largeness of descriptions. We focus in particular on operations implemented in the Replicate/Join and Graph composer functionality of Möbius. Section 5 is devoted to a survey of common analysis techniques for Markovian models. In Sect. 6, we show different ways to model Ca2+ signaling complexes with stochastic Petri nets when the mean-field coupling assumption applies (e.g., identical and indistinguishable channels). Finally, in Sect. 7, we include spatial considerations into a model Ca2+ signaling complex using the Graph composer. We conclude in Sect. 8.

2 Related work

Petri nets have been used in many areas including software design and performance analysis, data analysis, reliability engineering, and biological systems. Our focus here is on biological applications of Petri nets.

Most previous papers on Petri nets and biological systems focus on how and when to use Petri nets to model biological systems. Several have discussions of how to construct and use Petri nets to solve problems in computational biology (Chaouiya 2007; Schaub et al. 2007). Others relate Petri net formalisms to the modelings, simulations, and analysis of biological pathways (Matsuno et al. 2006; Hardy and Robillard 2007). To mention just a few others, Peleg et al. (2002) developed a model of biological processes solved by combining a Workflow/Petri Net and the biological concept model TAMBIS. Steggles et al. (2007) describe a technique to construct and analyze qualitative models of genetic regulatory networks using a Petri net formalism. Heiner and Koch (2004) discuss model validation of qualitative models of biological pathways. Srivastava et al. (2002) give a comparison of the results of implementing a model of intracellular kinetics of a generic virus both deterministically and stochastically. Griffith et al. (2006) present an algorithm to do a hybrid simulation of a well-mixed chemical system by dynamically partitioning fast and slow reactions into discrete and continuous reactions, respectively.

There has also been some previous work on using different tools to model and solve Petri nets. One such study compares different Petri net tools (including Möbius) based on the mathematical capabilities and the ability to model specific features of biological systems and answer a set of biological questions relating to process activation, network regulation, and state reachability (Peleg et al. 2005). The test cases used for this study involved malaria invasion, tRNA mutations, and drug pathways in a human cell. The work in Materi and Wishart (2007) is a summary and assessment of several techniques used to model complex temporal and spatiotemporal processes, with a focus on using these methods for drug discovery and development. Another gives an approach to defining molecular interaction networks as stochastic Petri nets, with results from models solved with UltraSAN (Goss and Peccoud 1998), the predecessor of Möbius. A brief overview of the features of Möbius in terms of stochastic Petri nets can be found in Peccoud et al. (2007). In contrast to existing work, this paper emphasizes scalability issues that evolve in the use of GSPNs for stochastic models of Ca2+ signaling complexes (Nguyen et al. 2005).

3 Markov models of Ca2+ release sites

Cell signal transduction is often mediated by molecular assemblies composed of multiple interacting transmembrane receptors; systems biologists investigate the rules that govern the emergent behavior of these signaling complexes (Gomperts et al. 2002; Krauss 2003). Examples for such problems include coliform bacteria where the high sensitivity of lattices of plasma membrane proteins to chemoattractants is due to allosteric protein-protein interactions which leads to conformational spread through the receptor array (Bray 1998; Duke and Bray 1999; Duke et al. 2001). Signaling complexes are not restricted to the plasma membrane, but are also found on mitochondrial and nuclear membranes and on the endoplasmic or sarcoplasmic reticulum (Berridge 1997; Cho 2006; Berridge 2006). Clusters of 5–50 IP3Rs on both the cortical endoplasmic reticulum (Yao et al. 1995; Sun et al. 1998) and outer nuclear membrane (Mak and Foskett 1997) of immature Xenopus laevis oocytes exhibit coordinated gating that gives rise to spatially localized Ca2+ elevations known as “Ca2+ puffs.” During cardiac excitation–contraction coupling, plasma membrane depolarization leads to Ca2+ influx via L-type Ca2+ channels that triggers “Ca2+ sparks,” i.e., the release of sarcoplasmic reticulum Ca2+ from two-dimensional arrays of ryanodine receptors.

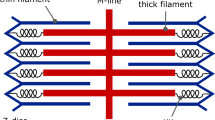

In this paper, we consider clusters of Ca2+-regulated channels whose stochastic gating depends on Ca2+. The local Ca2+ concentration experienced by a particular channel depends on its own state (open or closed) and the state of other channels (to varying degrees dependent on the distance), that is, open channels increase the Ca2+ concentration experienced by neighboring channels. Model Ca2+ release sites often consist of multiple single channel models in a hypothetical spatial arrangement. Figure 1a shows two representative Ca2+ release site models with 10 randomly positioned channels. Figure 1b displays the change in the number of open channels as time progresses—Fig. 1c shows the probability distribution of the number of open channels at each model Ca2+ release site.

From DeRemigio et al. (2008b): a Local Ca2+ near 3 × 3 μm ER membrane with 10 Ca2+-regulated Ca2+ channels modeled as 0.05 pA point sources with positions randomly chosen from a uniform distribution on a disc radius of 2 μm. Buffered Ca2+ diffusion is modeled as in Nguyen et al. (2005). Top and bottom show two different possible channel positions. b Top Stochastic dynamics of the number of open channels at the release site (N O ) that does not include puffs/sparks. Bottom Stochastic Ca2+ excitability reminiscent of Ca2+ puffs/sparks. c Probability distribution of the number of open channels leading to a puff/spark Score of 0.19 for the top and 0.39 for the bottom

Because the spatial arrangement of intracellular channels is often unknown and not necessarily regular, simplifying modeling assumptions may be made. One common modeling assumption is that each channel experiences a local Ca2+ concentration that depends only on the number of open channels (i.e., mean-field coupling). For a more detailed model that takes location of channels on the endoplasmic reticulum membrane into account, one needs to specify those locations, the pair-wise distances between channels, and the influence of inter-channel communication via elevated Ca2+ concentration. DeRemigio et al. (2008a) showed that the spatial arrangement can have an impact on the stochastic activity of Ca2+ release site models.

Single channel techniques such as patch clamp and planar lipid bilayer recording reveal the stochastic gating of voltage- and ligand-gated ion channels in biological membranes. There is a long history of modeling the stochastic gating of single channels using CTMCs. These models vary in their complexity—from aggregated, abstract ones with only two physicochemically distinct states to very detailed ones with hundreds of states (Colquhoun and Hawkes 1995). Several recent publications (Nguyen et al. 2005; Groff et al. 2009) present CTMC models of Ca2+ release sites composed of instantaneously coupled intracellular Ca2+ channels.

In order to understand the structure of such Ca2+ release site models, we briefly recall definitions related to CTMCs for clarification. A CTMC for a state space S of n states is described by an n × n matrix Q of real values where Q ij ≥ 0 for i ≠ j and Q ii = −∑j,j ≠ i Q ij such that its row sums are zero and an initial distribution \(\pi(0) \in {\rm I\kern-0.25em R}^n\) with ∑ i π i (0) = 1. An entry Q ij = λ denotes a state transition from s i to s j that has a stochastic delay given by an exponential distribution with rate λ. If the current state is s i the subsequent state is s j with probability p ij = λ/|Q ii | and the value of 1/|Q ii | gives the mean sojourn time the process stays in state s i . For a given CTMC, we can compute the value for the probability of a certain state s ∈ S at some point of time t ≥ 0, denoted by π s (t) by solving π(t) = π(0) eQt. For t → ∞ and an irreducible CTMC, we can compute the so-called steady-state distribution π as a solution of π Q = 0 such that ∑ s∈S π s = 1. Hence we can study a CTMC model of a Ca2+ release site by transient analysis (the specific pattern of channel openings and closings during a given time period) and by steady-state analysis (how many channels are open on average). An important property of CTMCs is that this analysis can be done by either simulation or numerical analysis (see Sect. 5). The measures of primary interest for a Ca2+ release site model are: (1) is the model configuration of the kind that shows puffs and sparks? and (2) if so, what is the average amplitude and duration of a spark? The Score of a model indicates the presence of puffs and sparks observed in the Ca2+ release site model. Let N O denote the number of open channels, N the total number of channels. The puff/spark Score is the index of dispersion of the fraction of open channels (f O ≡ N O /N),

a simple measure that agrees with subjective evaluations of how puff-like simulated Ca2+ release site dynamics appear (Nguyen et al. 2005). While several different statistical properties of sparks are of interest, the one we focus on here is to select an initial state where k out of n channels are open and compute the time until all channels are closed (i.e., the spark duration). This calculation is performed by modifying the original model so that the state where all channels are closed is an absorbing state and then evaluating the time to absorption. This artificial change to have an absorbing state is made to the model in order to more easily observe the measure of interest.

In the following, we present a sequence of CTMC models of increasing complexity and start off with the simplest possible model of a Ca2+-regulated channel, namely that of a single two-state model. Our presentation closely follows that in Nguyen et al. (2005).

3.1 Single channel models

3.1.1 Two-state channel

A CTMC of a two-state single channel activated by Ca2+ is derived from the transition diagram

where \(k^+c^{\eta}_{\infty}\) and k − are transition rates with units of reciprocal time, k + is an association rate constant with units of \(\hbox{conc}^{-\eta}\hbox{time}^{-1},\) η is the cooperativity of Ca2+ binding, and c ∞ is the background [Ca2+]. For Eq. 2, the infinitesimal generator matrix (often called the Q matrix) is,

While this two-state model of a Ca2+-activated channel is minimal in nature, additional aspects of the Ca2+ regulation of intracellular Ca2+ channels (e.g., slow Ca2+-inactivation resulting in a bell-shaped steady-state open probability curve as a function of [Ca2+]) can be represented by increasing the number of states and transitions (De Young and Keizer 1992; Sneyd and Falcke 2005). Further explanation of the Ca2+-inactivation can be found in Groff and Smith (2008).

3.1.2 Four-state channel

The four-state Ca2+-regulated Ca2+ channel considered here includes both Ca2+ activation (horizontal) and Ca2+ inactivation (vertical) and has the transition state diagram

with the inactivation and de-inactivation processes assumed slow compared to activation and de-activation (\(k_b^{\pm}, k_d^{\pm} \ll k_a^{\pm}, k_c^{\pm}\)). Furthermore, the parameters are chosen to satisfy the thermodynamic constraint (Hill 1977)

where the K i are dissociation constants and \(K_i^{\eta}=k_i^-/k_i^+\) for i ∈ {a, b, c, d}. Thus, Eq. 4 has eight rate constraints, but only seven are free parameters because

The Q matrix for Eq. 4is defined similar to the Q matrix for Eq. 2 (not shown).

3.1.3 n-State channel

If we define u to be a M × 1 column vector, where M is the number of states in a channel, to indicate open states, the Q matrix for both the two-state and four-state models can be written as

where K − and K + involve the dissociation and association rate constants, respectively. For the two-state channel, u = (0, 1)T and for the four-state channel u = (0, 1, 0, 0)T, where the states are ordered C 1, O, C 2, C 3.

3.2 Q-matrix expansion for instantaneously-coupled channels

For release sites composed of multiple Ca2+-regulated Ca2+ channels, interaction between channels must be accounted for. The first case to consider is the Q matrix for two interacting two-state channels,

where a diamond (⋄) indicates a diagonal element leading to a zero row sum in Q. The c ij values represent the effect experienced by channel j when channel i is open under the assumption of a single high concentration of Ca2+ buffer (Nguyen et al. 2005).

Instead of considering the Q-matrix expansion for four-state channels, we skip to the general case. While single-channel Q matrix in the form of Eq. 6 is

the expanded Q matrix, coupled through the interaction matrix C, for N channels is given by

where Q (N)− and Q (N)+ are as follows,

In this expression, \(\Upgamma_{\ast n}\) is the nth column of the M N × N matrix \(\Upgamma\) given by

where C is an N × N ‘coupling matrix’ that summarizes the channel interactions and U is an M N × N matrix indicating the open channels for every state of the release site. Further explanation can be found in Nguyen et al. (2005).

Expansion on Eq. 9 gives us the Q matrix for N channels, with M states per channel, which results in |S| = M N states in total. Note that this matrix will quickly get large as N and M are increased. A method to reduce the largeness of the model is discussed in Sect. 3.4.

3.3 A spatial model and its coupling matrix

If the position of individual channels is to be explicitly accounted for, we need to consider N M-state channels, which yields a state space of M N states. Following Nguyen et al. (2005), channel coupling scales the rate of Ca2+-mediated transitions and a concise representation of that relation is formalized as an N × N coupling matrix C where c ij gives scaling factor (see Eq. 7). Assuming that all channels are identical, c ii = c d for all \(i=1,\ldots, N\) and some \(c_d \in {\rm I\kern-0.25em R},\) independently of the spatial relationship of the channels. If the chosen spatial arrangement is irregular, C is a symmetric matrix with c ij = c ji because the inter-channel distance is undirected. For an arbitrary spatial arrangement, one can select up to \({\frac{N(N-1)}{2}}\) distances (for the number of ways to choose two out of N channels). If the spatial arrangement is regular, this number is reduced accordingly.

In Sect. 7, we consider examples with a grid arrangement and a hexagonal lattice. With a grid arrangement, the distance between pairs of channels would match for each level of the grid. For instance, with the four channel grid arrangement,

the pairs (1,2), (2,3), (3,4), and (4,1) would all have the same distance since they are all neighboring pairs. The pairs (1,3) and (2,4) would both be the same distance apart since they are each one channel removed from each other. So in this case there are only two different values for the distance between any pair of channels. For any fixed release site size, we obtain a matrix C with only three different values

Study of this matrix shows that the distinct values are c 0 on the diagonal for the domain Ca2+ concentration, and c 1 > c 2 on the off-diagonal. In Sect. 7, we discuss how to make use of the symmetries imposed by regular spatial arrangements to achieve a reduction of the CTMC based on lumpability.

3.4 Mean-field Ca2+ release site model

As N and/or M is increased, a common simplification is to assume mean-field coupling, where every channel experiences an average Ca2+ concentration and, consequently, there is no need to differentiate between channels. In this case, one need only keep track of how many of the N channels are in each of the M states. With a single, replicated model the interactions are reduced to a count of how many are doing a certain activity, a linear function of the number of atomic models instead of a factorial function. This leads to a state space reduction from M N to

A consequence of using mean-field approximation is the need to find a feasible approximation for the Ca2+ concentration if x of the N channels are open. As discussed in Nguyen et al. (2005), using c = c ∞ + N O c*, where c* is the average of the off-diagonal entries of C, often works well. The approximation used for the experiments done in this paper follows DeRemigio and Smith (2005), where the c* value used with N channels was

where N ≥ K and \(c^{\ast}_{\rm opt}\) was the ‘optimal’ value of c* for K channels (i.e., the value of c* that maximized the puff/spark Score).

4 Modeling with stochastic Petri nets

In this section, we outline essential steps to transform the model characteristics given in the previous section into a model-based study of Ca2+ release sites using stochastic Petri nets and a modeling framework like Möbius.

4.1 Modeling with stochastic Petri nets

For a generalized SPN model, we use the following formal definition, where we allow for enabling, firing and weight functions being state/marking-dependent. State-dependent functions are considered in Ciardo (1989) and exceed the classic definition of GSPNs, for instance, in Marsan et al. (1995):

Definition 1

A GSPN is a 7-tuple (P, T, m 0, e, f, w, p) where P and T are non-empty finite sets of places and transitions, \(m_0 \in {\rm I\kern-0.25em N}_0^P\) is the initial state (or marking) of places, functions e,f,w,p describe the enabling \(e: T \times {\rm I\kern-0.25em N}_0^P \rightarrow {\rm I \kern-0.25em B }\) of a transition for a transition t ∈ T in given state in \({\rm I\kern-0.25em N}_0^P,\) the successor state reached by firing of a transition \(f: T \times {\rm I\kern-0.25em N}_0^P \rightarrow {\rm I\kern-0.25em N}_0^P,\) the weight of that transition \(w:T\times {\rm I\kern-0.25em N}_0^P \rightarrow {\rm I \kern-0.25em R }_0^+\) and its priority of any transition \(P: T \rightarrow {\rm I\kern-0.25em N}_0.\)

In any given state (or marking in Petri net terminology) \(m \in {\rm I\kern-0.25em N}_0^P,\) a transition t ∈ T is enabled if e(t, m) is true. Priorities impose a constraint on the possible ways to define e in the following sense: let e(t, m) = true then there is no transition t′ ∈ T with p(t′) > p(t) for which e(t′, m) = true. Let \(E(m) \subseteq T\) be the set of transitions that are enabled in a marking m. Any enabled transition t ∈ E(m) can fire and yield a successor marking m′ = f(t, m). A transition with p(t) = 0 is called timed and its firing (if enabled at m) takes place after an exponentially distributed random delay with rate w(t, m) if p(t) = 0. A transition with p(t) > 0 is called immediate, it fires with no delay. If several transition are enabled at a marking m, then t ∈ E(m) fires with probability \(w(t,m)/\sum_{{t^{\prime}}\in E(m)}w(t^{\prime},m).\) Probabilities are calculated irrespectively of the priority of t (which is the same for all transitions in E(m)). With the help of the firing rule, we can explore all reachable states S for a given initial state m 0. States that enable immediate transitions are called vanishing and if present imply that the resulting state space describes a semi-Markov chain and not a CTMC. The reduction towards a CTMC is a well-known procedure called elimination of vanishing states in the literature (Marsan et al. 1995). Immediate transitions are a mean to handle models that operate with different time scales, i.e., models that include extremely fast transitions and very slow transitions. If one assumes that the extremely fast transitions are irrelevant for the fraction of time the stochastic process spends in them, one can treat them as immediate and thus reduce their sojourn times to zero as well as their steady-state probabilities which also justifies their elimination. We introduced priorities only for the sake of consistency with GSPNs and the SAN variant of GSPNs supported in Möbius, we will not make use of priorities to model Ca2+ signaling complexes.

So a GSPN has a finite number of integer state variables as places and transitions that modify a marking vector (state) by subtraction and addition of possibly marking dependent integer vectors of appropriate dimension. To encode a single channel with M states is straightforward if we use M places, one per state, put one token into the place that corresponds to the initial state and leave all other places empty. Every possible change from one state s i to another s j is represented by a transition that has the corresponding place p i as input place and p j as output place. In order to express multiple channels, e.g., N channels, we can either generate N instances (copies) of the single channel net or use the single channel net and assign N tokens as the initial marking instead of 1. With the first variant, we are able to distinguish states of individual channels and are able (at least in principle) to take spatial aspects into account when we define transition rates. For the second variant, we cannot distinguish among channels, such that any possible definition of transition rates can only rely on the number of channels being in a particular state (e.g., open versus closed). In both cases, we need to make use of marking dependent transitions rates to take into account that in the CTMC transition rates depend on the states of all channels. If a single channel model includes a lot of states (M large) or if we wish to distinguish among instances of a single channel model, we need some form of composition operation for GSPNs. A GSPN has two basic concepts—places/state variables and transitions—that can serve as building blocks for a composition operations. If a composition is based on transitions, then two or more separate GSPNs are composed so that they perform certain dedicated transitions only in a jointly manner; such transitions fire in a synchronized manner, the synchronization is of a rendez-vous type. Formally, this is often achieved by merging transitions of the same name t across several GSPNs \(i=1,\ldots,n\) in that their enabling function becomes a logical AND, \(e(t,M)=\bigwedge e_i(t_i,M_i),\) firing function f(t,M) = M′ with \(M^{\prime}_i ={\sum}f_i(t_i,M_i).\) There are a number of ways to define a joint weight function, one way is \(w(t,M) = w_t \prod w_i(t_i,M_i).\) Note that synchronized or shared transitions are usually restricted to timed transitions. The resulting class of nets is called superposed GSPNs in Donatelli (1994). Synchronization by shared transitions is similar to the parallel composition operator of process algebras like PEPA and that of stochastic automata. Transition sharing is known to be a congruence for a performance bisimulation that matches with lumpability for CTMCs, which means that for a model composed of multiple GSPNs, we can first generate and minimize CTMCs associated with each individual CTMC with the help of lumpability and then compose the reduced CTMC into a large CTMC of the composed model.

As an alternative to sharing transitions, one can consider sharing of state variables or places in order to build large models by composing a set of smaller models. Both types of composition are currently supported by Möbius. For the following discussion of composition based on shared variables we focus on Möbius-related concepts since those will result in composed models that can be reduced according to lumpability. The two types of composition based on sharing variables in Möbius are called Replicate/Join composition and Graph composition. Individual basic models that are used to compose large models are called atomic models. Möbius supports several formalisms to describe atomic models; the one that extends GSPNs and that we consider here is called stochastic activity networks (SANs) (Sanders and Meyer 2001). In addition to common generalized SPN concepts of places and transitions with different levels of priority, SANs provide for input and output gates which are graphically denoted by small triangles and allow a modeler to specify state-dependent functions e, f, and w in a general manner (i.e., as C++ code fragments in Möbius). Timed activities have an activity time distribution function, which can be a generally distributed random variable and state-dependent. Activities also have case probabilities to represent different possible outcomes after an activity completes. Edges between an input (output) gate and a place graphically indicate that a function e, f, or w depends on the place p. However, to avoid the cluttering of large graphs, the Möbius implementation does not enforce the existence of such edges.

Sharing state variables is supported by the Replicate/Join composition, which particularly facilitates instantiation of multiple copies of the same atomic model and results in a tree type model structure. State variable sharing is also supported in the Graph composition which results in a model structure of an undirected graph.

The formalism for the Replicate/Join composition was first defined for SAN models in Sanders and Meyer (1991). It uses two separate operations, Replicate and Join, to achieve composed models that have the form of a tree. Each leaf is a predefined atomic model or a composed model, and each non-leaf is either a Join or Rep node. The Join node is used to connect multiple submodels (composed or atomic) by merging (sharing) a set of variables that is specified by the modeler. This implies that all children of the Join node can read and write to a state variable they share and thus can communicate with each other. The Rep node identifies a special case of the Join where all children are instances of the same model. This simplifies the specification, i.e., only a single submodel needs to be identified together with the number of instances that shall be created. As for the Join, the replicated submodels only share those variables that are selected as shared. The key advantage of the Rep node is that it imposes lumpability for the associated CTMC which can be used for an automated reduction of the resulting CMTC; this works even in a nested manner with tree-type structures of multiple Replicate and Join nodes. With both types of nodes, the modeler can specify the state variables that are to be shared between the submodels below the node.

The second composer, Graph Composer, uses a graph structure, rather than the tree structure of the Replicate/Join Composer, and only the Join operator. Again, the Join node allows the modeler to specify state variables that are to be shared between submodels connected to the Join.

One motivation for looking into composition operations is to identify a natural way to represent the fact that certain parts of a biological model result from instantiating a certain type multiple times. The composition operations supported in Möbius and in particular the ones based on shared variables serve this purpose very well as we will see in Sect. 6. However, we would like to note that these are not the only concepts one could discuss at this point. For instance, in stochastic well-formed nets (SWNs), tokens are colored to exploit behavioral symmetries in the model (Chiola et al. 1993). For SWNs, reduction operations based on lumpability are also known. For object-oriented Petri nets (OOPN), tokens are object-oriented, meaning that a token can itself be an OOPN. Nevertheless, we decided to focus on state variable composition, since we believe those are the most suitable to support mean-field coupled and spatial models of Ca2+ signaling complexes.

4.2 Defining rewards

Analysis of a system begins with a decision of what is to be measured. The dynamic behavior of a stochastic model results in being in a certain state at some point of time and performing certain transitions to move from one state to another. In terms of the Möbius model, the state of a system is defined as the value of the places. In close correspondence to this, rate and impulse rewards are defined. A rate reward is a function \(rr: S \rightarrow {\rm I \kern-0.25em R },\) a function of the state of the system evaluated at an instant of time and rr(s) gives the rate at which a reward is earned while being in state s over time. An impulse reward is a function of the state of the system and the identity of a transition that completes, and is evaluated when a particular transition completes, so \(ir: S \times T \rightarrow {\rm I \kern-0.25em R }\) where T denotes the set of transitions. Computation of rewards also needs a decision on what timing to use for reward collection. The time can be chosen as an instant of time, a steady-state, an accumulation over an interval of time, or an accumulation over a time-averaged interval. With Möbius, all of these options are available plus the possibility to select the characteristics of the resulting values of interest: mean, variance or distribution of the measure. For example, to measure the duration of puff/spark in a CTMC model of a signaling complex, we can choose the initial state such that k out of n channels are open and modify the opening transitions such that once all channels are closed the model stays in that state. In that way, we obtain an absorbing Markov chain and can measure the duration of a spark as the time to absorption (DeRemigio and Smith 2005). To do so, we define a rate reward rr(s) = 1 if s is a state where at least one channel is open and 0 otherwise. The accumulated reward over an interval of time (of sufficient length) gives the time to absorption and, for example, the mean and variance of this random variable may be of interest.

5 Analysis techniques

In this section, we briefly outline analysis techniques for a CTMC derived from a GSPN model. We start off with discrete event simulation that applies to stochastic models in general and then focus on numerical methods to compute transient and steady-state distributions, π(t) and π.

5.1 Simulation

Simulation applies to stochastic models of discrete event systems in general, including GSPNs. The key of discrete event simulation is to exercise a given model to observe its dynamic behavior, such that those observations result in samples for a statistical evaluation of rewards (much in the same manner as observations of the real system would be statistically evaluated). Simulation is a process where the behavior of a system is represented as a sequence of events (Fishman 1978). Simulation usually generates a set of samples from performing multiple independent runs that start from the same initial state but with different settings of values for the seed of a random generator. This approach is straightforward to parallelize; one can distribute computations over a network with good speedup and scalability. In case of steady-state analysis, one can alternatively compute a single but very long simulation run and obtain approximately independent samples from batch means. This approach avoids the repeated simulation of an initial transient phase that is required with replicated simulation runs.

The efficiency of simulation depends in part on the desired measurements and the dynamics of the model, i.e., on the ratio of events that need to be computed to obtain a single sample for the statistical evaluation. If this ratio is low, i.e., few simulated events are needed to generate a sample, then this is to the advantage of a discrete event simulation. For example, estimating the mean and variance of impulse rewards for transitions that fire frequently requires less computation time than doing so for transitions that occur rarely. To give another example, a reward defined for an early instant of time can be calculated faster than one at a later instant. Likewise, the size of the interval can also increase the amount of work needed to be done for a simulation to solve for the reward. Estimating the distribution of a variable with few possible values and high probabilities per value requires less effort than for a variable with many possible values or values that have very small probabilities (and thus are rarely seen in a simulation run).

In addition, to estimate the mean, variance, or distribution of a reward R, one uses simulation to also produce confidence intervals for these estimates. Let \(\bar{R}\) denote the estimated mean reward, \(\bar{\sigma}\) the estimated standard deviation of R, then the confidence interval for E[R] is

where n is the number of samples and α1,α2 are values obtained from either a standard normal distribution or a student t distribution for a given confidence level (Law and Kelton 2000). Assigning an upper bound for the width of a confidence intervals for every reward is a common way to tell a simulation framework when to stop since confidence intervals indicate the accuracy of the computed estimates of rewards. This information is important to be able to recognize if the amount of simulated samples is sufficient. Note that this formula also gives rise to a classical rule in simulation: in order to reduce confidence intervals to half of its size, one needs to generate four times its samples (provided \(\bar{R}\) and \( \bar{\sigma}\) stay the same).

Discrete event simulation of stochastic models is common in many areas including computer systems and networks, manufacturing and production systems, and in the biological sciences. In the later case, Gillespie’s method is often employed to simulate CTMCs (Gillespie 1977). This method was developed for stochastic simulation of coupled ordinary differential equations that describe the process of changes in a molecular population via chemical reactions. Gillespie’s method can be computationally expensive but has been adapted to obtain results in a more reasonable time frame (Lecca (2006) and many others).

Simulation can be broadly applied because of its few constraints. The key challenge in simulation is not its applicability but to obtain results of good quality. Due to its random nature, there is no guarantee that a simulation visits relevant parts of the state space of a given CTMC sufficiently often to obtain accurate results.

5.2 Numerical analysis

As alternative methods to simulation, one can use numerical analysis techniques to compute π(t) and π for a given finite CTMC, (discussed below). As a first step, CTMC analysis requires to generate the underlying CTMC from a given GSPN model with the help of a state space exploration.

5.2.1 State space exploration

For a given GSPN with an initial marking s 0, we can compute the overall state space of reachable states S as the transitive closure of the reachability relation. Much research has gone into the development of data structures and algorithms that enable exploration of extremely large, yet finite sets S. If the GSPN models makes use of immediate transitions (or priorities), then those give rise to so-called vanishing states that can be eliminated such that the resulting state space (of tangible states that enable timed transitions), and thus the dimensions of Q, becomes much smaller. Symbolic data structures like multi-terminal binary decision diagrams (MTBDDs), matrix diagrams (MDs) and interval decision diagrams (IDDs, as in Schwarick 2008), as well as Kronecker representations, are suitable for representing Q in a space-efficient manner for a subsequent numerical analysis. In particular, MD representations of Q (Ciardo and Miner 1999) can be derived directly from many formalisms with the help of a symbolic state-space exploration as well as from a given sparse matrix or Kronecker representation of Q; see Miner and Parker (2004) for a recent overview paper on symbolic representations. Generation of an MD for models with state spaces in the order of 101000 states can be achieved, thanks to symbolic data structures and the so-called “saturation technique” (Ciardo et al. 2003). Symbolic or Kronecker representations help to reduce the memory requirements for Q to an extent that the bottleneck for the numerical methods described below is the space needed to store iteration vectors for π(t) and π.

5.2.2 Transient analysis

For given π(0) and Q, the distribution π(t) at time t ≤ 0 is given by

where α ≥ max|Q(s, s)| and \( P = {\frac{1}{\alpha}} Q+I.\) The right hand side of this equation is the basis of the uniformization method (also called randomization or Jensen’s method) which is the one that is frequently deployed for its numerical robustness and efficiency. Uniformization computes π(t) from

where l < r are appropriate constants (determined as described in Fox and Glynn (1988) to avoid numerical instabilities for high values of αt). Uniformization is based on the observation that p k is the distribution after exactly k steps of a corresponding discrete-time Markov chain (DTMC), which is given by matrix P, and w k is the probability of observing exactly k steps in a time interval [0,t] (a Poisson distribution). The advantage of uniformization is that it results in an accurate computation of π(t) which is then used to evaluate rate and impulse rewards. The fundamental and computationally most expensive calculation is the sequence of vectors p k for \(k=1,2, {\ldots},r.\) Since this distribution may converge towards the steady-state distribution of the DTMC for high enough values of k, recognizing a fix point where p k+1 = p k helps to compute results for high values of t since for p k+1 = p k the summation for π(t) becomes trivial for all p k+i, i > 0. Given a rate reward rr() that one evaluates for π(t) as rr t = ∑ s∈S rr(s) π s (t), it is well known that one can derive results for a series of values of t by one single computation of p k based on rr t = ∑ rk = l w k x k where x k = ∑ s∈S rr (s) π s (t). Uniformization gets ineffective if α is relatively high with respect to t, i.e., the uniformization rate makes the process proceed with small steps and t is large enough such that a large number of such steps fit into the interval from 0 to t. While the former difficulties impose numerical problems and high computation times, a bottleneck in terms of space is the representation vectors π(t), p k of lengths |S| (given that Q is represented efficiently by a symbolic or Kronecker representation). For large values of t and an irreducible CTMC, the process may reach its steady state whose distribution, which we can compute in a different manner.

5.2.3 Steady-state analysis

As t goes towards infinity, an irreducible CTMC will reach a unique steady state π. Because this steady-state can be found by solving the linear system

a large number of different approaches exist to numerically evaluate π (Stewart 1994). Among those approaches, iterative methods that retain sparsity of Q and that allow for symbolic or structured representations of Q are the ones that are best for extremely large CTMCs. Commonly applied and simple iterative methods are the Power method, the method of Jacobi and Gauss-Seidel. We recall the method of Jacobi to illustrate the point. Let Q = D−R be a decomposition of Q into a diagonal matrix D, a R with 0 values on its main diagonal. The method of Jacobi is a fix point method that computes x (k+1) = x (k) R D −1 starting with π(0) = x (0) and terminating at some k where x (k+1) = x (k) which then gives π. Advanced techniques include some form of preconditioning and projection methods (Krylov subspace methods), for details see the textbook of Stewart (1994). For signaling complexes, iterative Multi-level methods (Buchholz and Dayar 2004) are the most promising according to an experimental evaluation in DeRemigio et al. (2007).

There are also many approximate techniques that can be used for analysis, including approximate vector representations (Buchholz 2004) and iterated fix point methods (Ciardo and Trivedi 1993). For exact solution methods, the bottleneck for applications is the computation time and space needed to represent iteration vectors, which directly relates to the size of S. However, for CTMCs with certain regularities it is possible to perform state space reduction.

5.2.4 Lumping techniques

State space lumping (e.g., Buchholz 1994; Kemeney and Snell 1960) is a well-known approach that reduces the size of a CTMC by considering the quotient of the CTMC with respect to an equivalence relation that yields a generator matrix \(\widetilde{Q },\) preserves the Markov property and supports the desired reward measures defined on the CTMC. By solving the smaller lumped CTMC, exact results for the larger CTMC, and therefore measures of interest for the model, can be computed. We classify the many publications on lumping into three categories.

State-level lumping applies directly to a given generator matrix Q of a CTMC and computes \(\widetilde{Q}\) of the lumped CTMC. It yields an optimal partition, i.e., the smallest possible lumped CTMC. However, the size of Q limits its application. Efficient algorithms have been designed, e.g., see Derisavi et al. (2003) for the fastest known algorithm.

Model-level lumping is used to generate \(\widetilde{Q}\) directly from a model description. Hence, it is specific to a modeling formalism. The approach is based on symmetry detection among components of a compositional model. Results are known for a variety of Petri net related formalisms, such as stochastic well-formed networks (SWN) (Chiola et al. 1993; Colquhoun and Hawkes 1995), stochastic activity networks (Sanders and Meyer 1991), general state-sharing composed models (Obal II 1998), stochastic automata networks (Benoit et al. 2004), and Kronecker representations, among others. The Replicate/Join and Graph composition operations considered in Sect. 4 fall into this category as well and Derisavi et al. (2004) and McQuinn et al. (2007) describe how to apply those for Markov chains that are represented by a symbolic data structure like a MD. While this approach manages to avoid processing a large matrix Q, it is limited to those symmetries that can be identified from a given model description. Hence, in general, it does not obtain an optimal lumping as the first approach.

Compositional lumping applies the state-level lumping approach to individual components of a compositional model. The original components are replaced by lumped and “equivalent” components during generation of \(\widetilde{Q }.\) Like model-level lumping, this approach is formalism-dependent; specifically, it relies on properties of the composition operators. For instance, based on the fact that lumping is a congruence with respect to parallel composition in a number of process algebra formalisms and stochastic automata networks (SANs), compositional lumping can be used in those formalisms (e.g., Hermanns 2002; Buchholz 1995).

Note that in principle, approaches from different categories can be combined. For example, a compositional approach may yield smaller lumped components that can then be fed to a model-level technique for further reduction of the CTMC. Then state-level lumping can be applied to obtain optimal reduction of the resulting matrix. Finally, the approach in Derisavi et al. (2005) is useful for exact and ordinary lumping of Markov chains represented as MDs without knowledge of the modeling formalism from which the MDs were generated. Obviously these approaches are involved and should be well-encapsulated in a software tool such that a modeler can take advantage of these capabilities without necessarily being aware of them.

6 Mean-field coupling

In models of Ca2+ signaling complexes, a common simplifying assumption is to abstract from the details of a spatial layout of individual channels and assume that a single channel experiences an average Ca2+ concentration that depends only on the number of open channels.

6.1 A straightforward stochastic Petri net model

To begin our discussion and exploration of coupled Ca2+-regulated Ca2+ channels modeled through Möbius, we first implemented the model as a stochastic Petri net with marking dependent rates and for the simplest possible Ca2+-regulated channel with only two states, namely Open and Closed. Figure 2 shows the corresponding atomic Möbius model, which has an Open and Closed place, connected through timed activities, Opening and Closing. The transition rates are as defined in Eq. 2 with the expansion for instantaneously-coupled channels defined in Eqs. 6 and 7. With this model, we heavily rely on the mean-field coupling assumption since we cannot distinguish among different tokens which in turn helps us to keep the model simple. Specifically, the opening rate is

where \(N_C\in\{0,\ldots,N\}\) is the marking, or value, of the Closed place. The closing rate is

where \(N_O\in\{0,\ldots,N\}\) is the marking of the Open place. Note that the N C and N O occurring as the last factor in these expressions accounts for the number of channels that can potentially make a C → O or O → C transition, respectively. The marking of the Closed place is initialized to the total number of channels in the system, while the Open place has an initial marking of 0. The number of distinguishable states is

where N is the number of channels and M is the number of states in each channel. For the mean field model with N channels and M = 2 states per channel, this yields |S| = N + 1 which is far less than M N states in a model where we could distinguish each channel.

In Möbius, measures of interest (such as the number of open channels) are defined through the reward variables. We defined a performance variable, numopen, to return the value of the place Open at a specific moment in time. For a range of values of N and c*, we report the mean and variance for the number of open channels, the resulting Score and the wall clock time in seconds used to compute these values with different analysis techniques (parameters k −, k +, η, and c ∞ are fixed). In Table 1a, the first column reports on results for the state of the model at time t = t(N) ms, where t(N) = {200, 2000, 20000, 200000, 2000000, 20000000}, for N = {19, 50, 100, 150, 200, 250}, respectively. These times are chosen to be far enough into the simulation that steady-state behavior can be expected. Such transient results are obtained through Möbius by simulation or transient CTMC analysis based on randomization, i.e., the Möbius TRS solver. However, since the only difference between the two methods was the time taken to solve for the reward, only the numerical analysis results are included here. The simulation times for transient results ranged from about 2 s for a 19 channel system, to about 3 h for a 150 channel system, and to about 2 days for a 200 channel system. For steady-state analysis, the second column of Table 1a gives results from a numerical computation of the steady-state distribution with ISS, an iterative steady-state solver performing Gauss-Seidel iterations with successive over-relaxation (SOR). The steady-state distribution was also calculated by simulation, but these results are not included here because the only difference was the time taken to solve for the reward. The simulation times for steady-state results ranged from about 3 s for a 19 channel system, to 260 s for a 150 channel system, and to about 520 s for a 200 channel system.

The GSPN approach is appropriate and straightforward to model mean-field coupled channel models when the number of states per channel is not too large for a clear graphical presentation. Considering computation times, we see that for CTMCs of small size, transient and steady-state numerical solvers obtain results much faster than a discrete event simulation. However, numerical solvers are currently limited to CTMCs in the order of 106–107 states and for such large models their performance depends on a number of factors. We note that DeRemigio et al. (2007) report that multi-level solution methods for steady-state computations are competitive with simulation for Markovian models of Ca2+ signaling complexes.

6.2 A Replicate/Join composed stochastic Petri net model

We derive an N channel release site model by composing N single channel models. In the previous section, we derived the same model using only the atomic model in Möbius. Now, we use the composition methods in Möbius in order to get a more robust model. A single two-state channel model is described as an atomic SAN model in Möbius as shown in Fig. 3a. The channel can be in either the open or closed state, the same as for the previous section. Because there is interaction between the channels which now needs to be shared among different instances of the atomic model, an extra place is added to keep a count of how many states are open, NumOpen. This place is shared among all instances of this atomic model and used to define transition rates that depend on the number of open channels. It is common practice to graphically represent dependencies with edges; in SANs, it is possible to omit edges between input/output gates and places. The motivation is to avoid cluttering the graphs of large SANs. Input and output gates allow a modeler to define state-dependent enabling and firing functions in a very general manner (Möbius supports C++ code fragments to describe e, f, and w). The right-pointing arrows, OG_Open and OG_Close, are output gates. These are used to change the marking of the place NumOpen which is incremented upon firing transition Opening, and decremented upon firing transition Closing. These transitions use rates from Eq. 2 with the expansion for instantaneously-coupled channels as defined in Eq. 6 and Eq. 7 for the rates to transition between the Open and Closed states.

The Möbius atomic SAN model for a a two-state channel and b a four-state channel under the REP composition when position is not considered. NumOpen is the place used to store the information of how many channels in the system are in the open state. The output gates (right pointing triangles) are used to increment and decrement the value of NumOpen

We consider the two-state model as the most simple one for illustrating purposes, while extensions to more complex single channel models with more states are straightforward. Therefore, some experiments have also been done using the four-state channel model shown in Fig. 3b. Transitions Opening[x] and Closing[x] in Fig. 3b use transition rates from Eq. 4 with the expansion for instantaneously-coupled channels as defined in Eq. 6 and 7. The output gates are used in a manner similar to the two-state model of Fig. 3a.

When reaching a certain number of places and transitions, we are in need of some structuring mechanism or composition operations for SANs. In addition to this, we are in need of a composition operation to construct the single channel model into a release site model with N channels. We make use of the Möbius Replicate/Join composer that provides a Replicate composition operator which instantiates N copies of a given model. All N instances can share a user-given set of state variables/places, as it is the case for NumOpen in our model. This restricted communication and the symmetry among the N instances of the same atomic model implies lumpability, and Möbius leverages on this to derive a reduced lumped CTMC in an automated manner. It is this reduction that reduces the state space from an otherwise M N states for an M-state N channel model to the size of Eq. 18 (as in the plain GSPN model discussed in Sect. 6.1). Figure 4a shows the composed model in the graphical notation for the Replicate/Join composer.

a The Möbius composed model using the REP operator. b The Möbius composed model using the REP and JOIN operator. With the addition of the JOIN node, the modeler can study the movement and behavior of a single “tagged” channel. c The Möbius composed model using a JOIN operator to tag a channel in the GSPN model discussed in Sect. 6.1. For all compositions, The Submodel can be either of the atomic models shown in Fig. 3

To measure the number of open channels, we again defined a performance variable, numopen, to return the value of the place NumOpen at a specific moment in time. Using the Replicate composed model, we ran one simulation of 50 two-state channels for 2,000 ms. The results from this experiment can be seen in Fig. 5. These results are similar to Fig. 1. Figure 5a shows stochastic Ca2+ excitability reminiscent of that of Ca2+ puffs/sparks, while Fig. 5b shows that most of the time very few channels are open.

a Ca2+ release site simulation with 50 two-state channels leading to a Score of 0.38. b Probability distribution of the number of open channels for the same experiment. Parameters as in Table 1

As before, we performed both transient analysis and steady-state analysis. Table 1b shows results for the same type of experiments as for the GSPN model discussed in the previous section. Both sets of experiments give consistent results. The state space generation times for the composed model are higher due to the automated reduction that is necessary to achieve a CTMC of same dimension as for the plain GSPN model. Since the resulting lumped CTMCs are of size |S| = N + 1 only, the automated reduction induces overhead if it is used to this extent. Note also that the simulator, which does not apply any lumping but simulates the unreduced model, gives significantly higher computation times than for the numerical analysis; for N ≥ 100 the overhead is in the range of hours of computation time. So the use of the Replicate operator should take this effect into account.

We investigated this issue further for N = 1,000 and N = 2,000 and observed computation times for a numerical steady-state analysis of 0.5 s for the smaller, 1.7 s for the larger configuration with a Score of 0.46. It should be noted that the rate of convergence can vary by orders of magnitude depending on model parameters, even when N is fixed. The required precision also influences the convergence time.

Further analysis was done on how much time the model spends in states with a certain number of channels being open. We recorded the number of open channels at time t = 2,000 ms with 50 two-state channels, both by numerical analysis and from 10,000 simulation batches. The results from this experiment can be seen in Fig. 6. Because simulation produces similar results, only the numerical analysis results are shown. The mean value for N O from the numerical analysis was 5.63 with a variance of 130.73, which gives a Score of 0.46. The mean value of N O from 10,000 simulation batches is 5.77 with a variance of 133.78, which also gives a Score of 0.46. Numerical analysis finished in 0.34 s, while the simulation took 1943.74 s. The highest probability is for the smallest number of open channels, in agreement with previous results showing a small average value for the number of channels in the open state (Nguyen et al. 2005; DeRemigio and Smith 2005). There is a slight increase in the proportion for N≈34, which occurs because of the occasional synchronous opening of a significant fraction of channels (cf. Fig. 5). This increase can be seen more clearly in Fig. 6b, which is a rescaling of Fig. 6a.

a Probability distribution of the number of open channels for a two-state 50 channel model from the Replicate composed model solved by numerical analysis. Score and parameters as in Table 1. The GSPN and Replicate composed model produce the same results both through simulation of 10,000 batches and numerical analysis; only one result is shown for readability. b The figure is rescaled to see the slight increase in the distribution for N ≈ 34

Note that the Replicate/Join composer allows for nested, tree-type structures to compose atomic models with replicate and join operators. Hence a simple modification would allow study of the behavior of a single channel. Reduce the number of replications for the Replicate node by 1 and use a Join node to connect this last node to the rest (see Fig. 4b and c). In this manner, one can collect the same data for this individual channel, as for the rest of the group, thereby seeing the movement and behavior of a single channel. This construction also facilitates consideration of the composition of a heterogeneous set of single channel models, for instance, a single complicated M state model with a large cluster of simple two-state models. The concept follows what is known as the “tagged customer” approach in performance modeling with queuing networks, which is also known to be tricky to implement with Petri nets.

We exercised some experiments for a homogeneous cluster of four-state models as in Fig. 3b. Figure 7 shows the probability distribution for the channel of interest to be in one of its four states at t = 2,000 ms based on transient numerical analysis. As expected, it can be seen that a channel will spend most of its time in either the closed1 or closed3 state, since this is where the fastest transitions lead. If the channel of interest is given a different initial condition than closed1 for the starting state, the probability changes slightly between closed1/open and closed2/closed3.

Probability distribution of the state of a single 4-state channel. O is the open state and C1, C2, and C3 are the closed1, closed2, and closed3 states, respectively. The left, lighter, bar is for when the tagged channel is initialized to be in either the closed1 or open states and the right, darker, bar is for when the tagged channel is initialized to be in either the closed2 or closed3 states

Table 2 presents results from the analysis of a four-state channel model in the same way as Table 1 but only for transient analysis, so we included both the simulation and numerical analysis results. This model results in much larger CTMCs with |S| = (N + 3)(N + 2)(N + 1)/6 states. The results show that the simulator is faster than the transient solver TRS, which is based on a sparse matrix representation of Q and reaches its limit at |S| = 585, 276 for N = 150.

So far, we mainly focused on the computation of Score values for channel models through the measurement of the number of open channels. However, there is also interest in the probability distribution for the amplitude of puffs and sparks as well as their duration. We can measure these values by adjusting the atomic model to select an appropriate starting situation of a puff or spark, i.e, k of N channels are open, and by changing the dynamics of the model to make all channels remain closed once all of them are closed. With this modification, we can measure the amplitude as the maximal value of NumOpen. In addition, we can measure the duration of a spark as the mean time to absorption in the transformed CTMC. This new model can be seen in Fig. 8. The place Start is used to flag when k channels open, indicating a peak worth measuring. The place Finish is used to flag when the model returns to 0 channels open, but only if Start has also been flagged. Finally, the place MaxOpen is used to store the maximum value of the number of open channels, again, only if Start has been flagged. To calculate the amplitude, we can simply return the value of MaxOpen at a time after the absorbing state is reached. To calculate the duration, the reward value returns the marking of Finish every 1 ms, which gives the cumulative distribution of the time until absorption, which is the time that Finish changes from a mark of 0 to a mark of 1. For the experiments included here, the number of channels chosen was 50, as a large enough value to be able to see interesting behavior, yet small enough to be easily processed. The threshold of four was chosen as large enough to ensure some Ca2+ stochastic excitement.

The new Möbius atomic SAN model used for transient analysis. The place Start flags when the place NumOpen becomes greater than the threshhold; the place Finish flags when NumOpen returns to 0 (after passing the threshhold); and the place MaxOpen stores the maximal value of NumOpen. The input gates (left pointing triangles) are used to stop the model when NumOpen returns to 0 while the output gates (right pointing triangles) are used to change the marks of Start and Finish as needed, as well as increment and decrement NumOpen as before

The probability distribution of the maximum number of open channels is shown in Fig. 9. As before, numerical analysis and simulation produce similar results so only the former is shown. The mean value for MaxOpen from the numerical analysis was 7.91 and from 10,000 simulation batches was 7.56. Numerical analysis finished in 0.93 s, while the simulation took 60.49 s. Figure 9 shows that the probability distribution for the number of open channels is bimodal, with one peak at the chosen threshold (N = 4) and another around N = 45.

a Probability distribution of the maximum number of open channels for a two-state 50 channel model and an initial threshold of four open channels from numerical analysis. Score and parameters are as Fig. 6. Simulation produces similar results and are is not included here for readability. b The figure is rescaled to see the slight increase in the proportion for N > 40

The probability distribution of the mean time to absorption is shown in Fig. 10. Again, results from numerical analysis and simulation are similar so only numerical analysis results are included for readability. The average time to absorption from numerical analysis is 65.49 ms, with an average time of 65.31 ms from simulation. Numerical analysis completed in 0.04 s while simulation completed in 63.58 s.

a Probability distribution of the mean time to absorption (all channels closed) given 50 channels and an initial threshhold of four open channels. Each bar is a 20 ms interval. Score and parameters are as Table 1. Results are from numerical analysis; simulation produces similar results and are not included for readability. b The cumulative probability distribution shows that there is an 80% probability of all channels being closed by approximately 100 ms and 90% probability to close all channels by approximately 150 ms

These results show that there is greater than 50% probability all channels close in approximately 50 ms or less, and greater than 90% probability the absorbing state is reached within 150 ms.

7 Spatial arrangement and Graph composer

When considering spatial aspects of Ca2+ release site dynamics, we need to incorporate for each of the N channels information on its distance to other channels and access to the state of any other channel to formulate state-dependent transition rates. In Sect. 3, the impact of distances is represented by a N × N coupling matrix C. Among the three composers of Möbius (Replicate/Join, Graph composition and Action Synchronization), the Graph composer is the most natural fit. The Graph composer allows us to compose instances of atomic (or composed models) with the same Join composition as in the Replicate/Join composer, however the resulting graph of composed models need not be a tree as for the Replicate/Join composer. The Graph composer is able to leverage on symmetries in the model composition graph to reduce the associated CTMC with the help of lumpability. Möbius employs canonical labeling and automorphism groups to automatically detect suitable symmetries (Obal II et al. 2007) and to achieve a reduced CTMC. This mitigates the state space explosion problem and helps to enable numerical methods to solve models with very large CTMCs. Using the Graph composer, a modeler can maintain the atomic model used with a Replicate/Join composer, with only minor changes. This means that experimentation can be done with both a spatial arrangement of the channels, as well as the mean-field coupling, with very little extra work.

For this paper, we model a cluster of four two-state channels arranged in a square, with a minimum inter-channel distance of 0.03 μm, giving a C matrix in the form of Eq. 13:

The values of this matrix are as defined in Nguyen et al. (2005) with parameters D = 250 μm−2 s−1 and λ = 5 μm. Examination of this matrix shows there are only 3 distinct values (c 0 = 3.2664 μM, c 1 = 0.1637 μM, and c 2 = 0.1080 μM) that we can declare as global variables in Möbius to simplify storage and referencing. However, with a larger system, and a different arrangement of channels, it may not be obvious how many unique values are contained in the C matrix. Fortunately, Möbius has the capability to allow a modeler to incorporate outside C++ code to calculate, access, and store these values.

Because each channel is affected differently by other channels based on how far apart they are, each channel needs to know which of the other channels are open. The atomic model is then changed slightly from the one used under the Rep Composer, to allow for the storage of the extra information. The challenge is to have a generic atomic model that is then configured with respect to its location. The new atomic SAN model can be seen in Fig. 11a. It has N = 4 new places LeftStatus, RightStatus, OppStatus, and SelfStatus to track which channel is open. During the composition this information is shared in a manner that gives all channels the correct information. When more channels are added to the system, more places are added to the atomic model. Figure 11b shows the atomic model that is used for a 19 channel system. The 19 channel system has a spatial arrangement of a hexagonal lattice; we add three additional places: CenterStatus is for the channel in the middle of the lattice, while NumOpen has been divided into NumInnerOpen and NumOuterOpen to reflect that the channels are now arranged in two tiers.

In the composition, the Join operator is used to share variables among the atomic models—for instance, NumOpen is shared by all channels since each channel affects how many are open. The information is shared through the Join node by carefully matching the status places between every pair. There are two ways to proceed. One alternative is to use absolute names like StatusAtOne to encode the state of the channel at the first location and consistently use it this way throughout the model. This implies that the associated distance and transition rates change in each instance of that atomic model, which in turn implies that the atomic model itself is changed. The other alternative is to use relative names like LeftStatus for the state of the neighboring channel to the left. This allows us to take advantage of regular arrangements like the grid arrangement or the hexagonal lattice and to encode the arrangement solely in the composition operation of the Join, namely by carefully matching which variables are shared and merged among models. For instance, in Fig. 12a, channel 1’s RightStatus is shared with channel 4’s SelfStatus, channel 3’s LeftStatus and channel 2’s OppStatus. This information is then used to add (or not add) the effect, c 1 or c 2, of that channel to the transition rate. If done appropriately, the symmetries of regular spatial arrangements that imply lumpability for the underlying CTMC are carried over to symmetries in the Graph composed model, which in turn can be detected and used by Möbius for an automated reduction of the CTMC.

7.1 Results and analysis of Graph composition experiments

The composed model can then be solved in Möbius either by simulation or by numerical analysis where only the latter leverages on lumpability and symmetries. Table 3 shows results comparing experiments performed using the Graph and Replicate composition approaches. As with Table 1, only the results from the numerical analysis are included, since the simulation produces nearly matching results. The simulation times for the Replicate composed models were about 3 and 260 s for the steady-state analysis for N = 4 and 19, respectively. With the Graph composed models, the times were about 5 s and 38 s for N = 4 and 19, respectively. The Graph composed models were also solved using the symmetry detection and state-space reduction option included with Möbius. The calculated mean and variance values for the reward variable matched the results already reported for N = 4. For N = 19, results for a mean-field coupled model and the spatial model differ, illustrating that the spatial arrangement may have an impact, as discussed in Groff et al. (2009).

A limitation with using Möbius to solve a positional system for a larger number of channels (N) is the increase in the amount of work needed to define the model. With a positional system, all interactions between channels must be included in the model. As the model description grows with the value of N, it would be preferable to have a simple expression of the model analogous to the Replicate/Join composition. Further research could explore ways to do positional modeling without the need to explicitly state every channel interaction. Another limitation of using Möbius for the numerical analysis of CTMCs is the size of the generated state space. This restriction can be mitigated with advanced lumpability techniques specifically designed for the reduction of CTMC models of Ca2+ signaling complexes, which is an important topic for further research.

8 Conclusion

In this paper, we discussed ways to use stochastic Petri nets and in particular SANs supported by Möbius to express and subsequently analyze Markovian models of Ca2+ signaling complexes. We selected this application area since it highlights two characteristics of complex stochastic models in biology: (1) models are structured by their compositional formulation, and (2) models have a spatial aspect that a modeling formalism should be able to take into account. We showed how to use stochastic Petri nets and composition operations in a way that naturally corresponds to the structure of model Ca2+ release sites composed of instantaneously coupled Ca2+-regulated channels. Composition can be used to make Petri net modeling scale with the size of models, and to intuitively express regularities that are present in a model to the advantage of subsequent numerical analysis. For example, Möbius can reduce the associated Markov chain based on lumpability that is deduced from the model’s compositional structure in an automated manner. More concretely, we demonstrated how to use the Möbius Replicate/Join composer to model mean-field coupled Ca2+ release sites. We also showed how more detailed release site models in which the spatial arrangement of channels is important can be constructed using the Graph composer. Representative models of both types were analyzed with respect to their ability to exhibit puffs and sparks, and the amplitude and duration of these events. We demonstrated multiple methods of analysis including simulation and both transient and steady-state analysis of Markov chains, and called attention to the advantages and disadvantages of different modeling and analysis techniques.

References

Benoit A, Brenner L, Fernandes P, Plateau B (2004) Aggregation of stochastic automata networks with replicas. Linear Algebra Appl 386:111–136

Berridge MJ (1997) Elementary and global aspects of calcium signalling. J Exp Biol 499(2):291–306

Berridge MJ (2006) Calcium microdomains: organization and function. Cell Calcium 40:405–412

Bray D (1998) Signaling complexes: biophysical constraints on intracellular communication. Annu Rev Biophys Biomol Struct 27(1):59–75

Buchholz P (1994) Exact and ordinary lumpability in finite Markov chains. J Appl Probab 31(1):59–74

Buchholz P (1995) Equivalence relations for stochastic automata networks. In: Stewart WJ (ed) Computation with Markov chains. Kluwer, Boston, MA, pp 197–216

Buchholz P (2004) Adaptive decomposition and approximation for the analysis of stochastic Petri nets. Perform Eval 56(1–4):23–52

Buchholz P, Dayar T (2004) Comparison of multilevel methods for Kronecker-based Markovian representations. Computing 73(4):349–371

Chaouiya C (2007) Petri net modelling of biological networks. Brief Bioinform 8(4):210–219

Chiola G, Dutheillet C, Franceschinis G, Haddad S (1993) Stochastic well-formed colored nets and symmetric modeling applications. IEEE Trans Comput 42(11):1343–1360

Cho W (2006) Building signaling complexes at the membrane. Science’s STKE 2006(321):pe7

Ciardo G (1989) Analysis of large stochastic Petri net models. PhD thesis, Duke University

Ciardo G, Miner AS (1999) A data structure for the efficient Kronecker solution of GSPNs. In: Proceedings of the 8th international workshop on Petri nets and performance models. IEEE CS, pp 22–31

Ciardo G, Trivedi KS (1993) A decomposition approach for stochastic reward net models. Perform Eval 18(1):37–59

Ciardo G, Marmorstein RM, Siminiceanu R (2003) Saturation unbound. In: 9th international conference on tools and algorithms for the construction and analysis of systems, vol 2619. Springer, Berlin, pp 379–393