Abstract

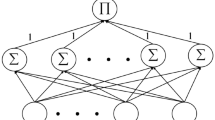

Sigma-Pi (Σ-Π) neural networks (SPNNs) are known to provide more powerful mapping capabilities than traditional feed-forward neural networks. A unified convergence analysis of the batch gradient algorithm for SPNN learning is presented, covering three classes of SPNNs: Σ-Π-Σ, Σ-Σ-Π and Σ-Π-Σ-Π. The monotonicity of the error function over the iterations is also guaranteed.
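
The abstract does not give the network equations, so the following is only a minimal Python sketch of what a batch gradient algorithm with a monotonically decreasing error looks like for one Σ-Π-Σ network. Assumptions not taken from the paper: the first-layer summing units are linear, with the bias supplied as a constant input of 1; each product unit multiplies a fixed set of distinct summing units; the output unit applies a logistic sigmoid; and the error E is half the sum of squared errors over the whole training set. In batch mode the gradient is accumulated over all samples before one update w(k+1) = w(k) - η ∇E(w(k)). The function names `forward`, `batch_error`, `batch_gradient_step` and `train` are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(W, v, products, x):
    """Assumed Σ-Π-Σ forward pass: linear sums -> products of sums -> sigmoid(weighted sum)."""
    h = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in W]   # first Σ layer
    P = [math.prod(h[j] for j in S) for S in products]         # Π layer
    y = sigmoid(sum(v_k * p_k for v_k, p_k in zip(v, P)))      # output Σ unit
    return h, P, y


def batch_error(W, v, products, data):
    """E = 1/2 * sum over ALL training samples of (y - t)^2."""
    return 0.5 * sum((forward(W, v, products, x)[2] - t) ** 2 for x, t in data)


def batch_gradient_step(W, v, products, data, eta):
    """One batch update: accumulate dE/dW and dE/dv over every sample, then step once."""
    gW = [[0.0] * len(w) for w in W]
    gv = [0.0] * len(v)
    for x, t in data:
        h, P, y = forward(W, v, products, x)
        delta = (y - t) * y * (1.0 - y)  # dE/dnet for this sample
        for k, S in enumerate(products):
            gv[k] += delta * P[k]
            for j in S:
                # d(prod_{m in S} h_m)/dh_j, valid because the indices in S are distinct
                others = math.prod(h[m] for m in S if m != j)
                for i, x_i in enumerate(x):
                    gW[j][i] += delta * v[k] * others * x_i
    W_new = [[w_ji - eta * g for w_ji, g in zip(w, g_row)] for w, g_row in zip(W, gW)]
    v_new = [v_k - eta * g for v_k, g in zip(v, gv)]
    return W_new, v_new


def train(data, products, n_sum, eta=0.05, epochs=200, seed=0):
    """Run batch gradient descent from a random start; return weights and the error history."""
    rng = random.Random(seed)
    dim = len(data[0][0])
    W = [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(n_sum)]
    v = [rng.uniform(-0.5, 0.5) for _ in range(len(products))]
    errors = [batch_error(W, v, products, data)]
    for _ in range(epochs):
        W, v = batch_gradient_step(W, v, products, data, eta)
        errors.append(batch_error(W, v, products, data))
    return W, v, errors


if __name__ == "__main__":
    # XOR with a constant bias input of 1 as the last component (illustrative data only)
    data = [((0.0, 0.0, 1.0), 0.0), ((0.0, 1.0, 1.0), 1.0),
            ((1.0, 0.0, 1.0), 1.0), ((1.0, 1.0, 1.0), 0.0)]
    products = [(0,), (1,), (0, 1)]  # two summing units and their pairwise product
    _, _, errors = train(data, products, n_sum=2, eta=0.01, epochs=100)
    print(errors[0], errors[-1])
```

With a small learning rate such as η = 0.01, the recorded error sequence should be non-increasing from epoch to epoch. A large η can break this, which is why monotonicity results of this kind are typically stated under an upper bound on the learning rate; the paper's precise conditions are not reproduced here.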

Abbreviations

- SPNN: Sigma-Pi neural network

About this article

Cite this article

Zhang, C., Wu, W. & Xiong, Y. Convergence Analysis of Batch Gradient Algorithm for Three Classes of Sigma-Pi Neural Networks. Neural Process Lett 26, 177–189 (2007). https://doi.org/10.1007/s11063-007-9050-0

DOI: https://doi.org/10.1007/s11063-007-9050-0

Keywords

- Convergence

- Sigma-Pi-Sigma neural networks

- Sigma-Sigma-Pi neural networks

- Sigma-Pi-Sigma-Pi neural networks

- Batch gradient algorithm

- Monotonicity