Abstract

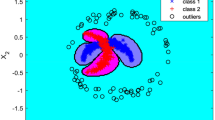

This paper presents a new model that merges a non-parametric k-nearest-neighbor (kNN) preprocessor into an underlying support vector machine (SVM) in order to shelter meaningful training examples, especially stray examples that lie scattered among examples of a different class. The model is motivated by the idea of imposing a heavier penalty on each stray example, which yields a stricter loss function for the optimization. It consists of two stages: a filtering kNN emphasizer stage and a classical classification stage. First, the kNN emphasizer collects information from the training examples and assigns weights to the stray examples. Then, an underlying SVM with parameterized real-valued class labels carries those weights, which represent the level of emphasis placed on each example, into the classification. Applied as heavier penalties, the emphasized weights change the regularization in the quadratic programming problem of the SVM and give the resulting decision function a higher training accuracy. The novel idea of using real-valued class labels to convey the emphasized weights provides an effective way to let this additional information guide the solution of the classification problem. The kNN preprocessor is effective as a filtering stage because it is independent of the SVM used in the classification stage. Because it estimates density locally, the kNN method can distinguish stray examples from regular examples merely by considering their surroundings in the input space. Detailed experimental results and a simulated application are given to examine these properties. The results indicate that the model is promising with respect to its original goals.
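The two-stage idea can be illustrated with a minimal sketch. It is not the authors' formulation: the paper conveys the weights through real-valued class labels inside the SVM, whereas this sketch assumes a simpler stand-in, a per-example penalty in a linear hinge-loss objective. The function names (`stray_weights`, `per_example_penalty`, `weighted_hinge_loss`), the `boost` parameter, the weighting rule (fraction of the k nearest neighbors with a different label), and the toy data are all hypothetical.

```python
import math

def stray_weights(X, y, k=3):
    """Stage 1 (assumed rule): weight = fraction of the k nearest
    neighbors, excluding the example itself, with a different label."""
    weights = []
    for i, (xi, yi) in enumerate(zip(X, y)):
        # Euclidean distances to every other training example.
        dists = sorted((math.dist(xi, xj), j) for j, xj in enumerate(X) if j != i)
        neighbors = [j for _, j in dists[:k]]
        disagree = sum(1 for j in neighbors if y[j] != yi)
        weights.append(disagree / k)
    return weights

def per_example_penalty(weights, C=1.0, boost=2.0):
    """Turn emphasis weights into per-example penalties C_i >= C.
    `boost` is a hypothetical knob controlling how much strays are emphasized."""
    return [C * (1.0 + boost * w) for w in weights]

def weighted_hinge_loss(w, b, X, y, penalties):
    """Stage 2 objective (linear stand-in):
    0.5 * ||w||^2 + sum_i C_i * max(0, 1 - y_i * (w . x_i + b))."""
    total = 0.5 * sum(wj * wj for wj in w)
    for xi, yi, ci in zip(X, y, penalties):
        margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
        total += ci * max(0.0, 1.0 - margin)
    return total

# Toy data: two clusters plus one positive example stranded among negatives.
X = [(0, 0), (0, 1), (1, 0), (1, 1),      # negative cluster
     (5, 5), (5, 6), (6, 5), (6, 6),      # positive cluster
     (0.5, 0.5)]                          # stray positive example
y = [-1, -1, -1, -1, 1, 1, 1, 1, 1]

weights = stray_weights(X, y, k=3)
penalties = per_example_penalty(weights, C=1.0, boost=2.0)

# The stray example receives the largest weight and therefore the largest penalty.
print(max(range(len(X)), key=lambda i: weights[i]))
```

In this sketch a misclassified stray example costs more than a misclassified regular example, so a minimizer of the objective is pushed to fit it. With a library SVM, a comparable effect could be approximated by passing the penalties as per-sample weights, for example through the `sample_weight` argument of scikit-learn's `SVC.fit`, which rescales `C` per example.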

Cite this article

Yang, CY., Hsu, CC. & Yang, JS. Stray Example Sheltering by Loss Regularized SVM and kNN Preprocessor. Neural Process Lett 29, 7–27 (2009). https://doi.org/10.1007/s11063-008-9092-y

DOI: https://doi.org/10.1007/s11063-008-9092-y