Abstract

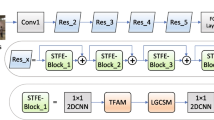

Learning spatiotemporal information is fundamental to action recognition. In this work, we aim to extract spatiotemporal information efficiently for video representation through a novel architecture, termed SpatioTemporal Fusion Networks (STFN). STFN extracts spatiotemporal information by introducing connections between the spatial and temporal streams of a two-stream network through fusion blocks, called Compactly Fuse Spatial and Temporal information (CFST) blocks, whose goal is to integrate spatial and temporal information at little computational cost. The CFST block is built upon Compact Bilinear Pooling, which captures multiplicative interactions at corresponding locations. To better integrate the two streams, we explore fusion configurations, namely where to insert the fusion block and how to combine the CFST block with additive interaction. We evaluate the proposed architecture on UCF-101 and HMDB-51 and obtain comparable performance.
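To make the idea of multiplicative interaction at corresponding locations concrete, the following is a minimal NumPy sketch of generic Compact Bilinear Pooling in its Tensor Sketch form (Count Sketch projections combined through an FFT-based circular convolution). It is an illustration under stated assumptions, not the CFST block itself: the function names `sketch_matrix` and `compact_bilinear_fuse`, the `(H, W, C)` feature-map layout, and the output dimension are all hypothetical choices made here, and the abstract does not specify how CFST wraps this operation.

```python
import numpy as np


def sketch_matrix(channels, out_dim, rng):
    """Build a Count Sketch projection as a dense (channels, out_dim) matrix.

    Each input channel is sent to one random output bucket with a random sign.
    (Hypothetical helper; a dense matrix is used only for readability.)
    """
    h = rng.integers(0, out_dim, size=channels)   # bucket index per channel
    s = rng.choice([-1.0, 1.0], size=channels)    # random sign per channel
    P = np.zeros((channels, out_dim))
    P[np.arange(channels), h] = s
    return P


def compact_bilinear_fuse(spatial, temporal, P_s, P_t):
    """Fuse two (H, W, C) feature maps into one (H, W, D) map.

    At every spatial location, the two Count Sketches are circularly
    convolved (a product in the Fourier domain), which equals a Count
    Sketch of the outer product of the two channel vectors.
    """
    d = P_s.shape[1]
    fs = np.fft.rfft(spatial @ P_s, axis=-1)
    ft = np.fft.rfft(temporal @ P_t, axis=-1)
    return np.fft.irfft(fs * ft, n=d, axis=-1)


# Assumed toy sizes: 2x3 spatial grid, 8 channels per stream, 16 output dims.
rng = np.random.default_rng(0)
P_s = sketch_matrix(8, 16, rng)
P_t = sketch_matrix(8, 16, rng)
spatial = rng.standard_normal((2, 3, 8))
temporal = rng.standard_normal((2, 3, 8))
fused = compact_bilinear_fuse(spatial, temporal, P_s, P_t)
```

The fused map keeps the spatial grid of its inputs, so each output location depends only on the spatial and temporal features at that same location. The output dimension `D` trades approximation quality against cost; a full bilinear (outer-product) feature would instead have `C * C` dimensions per location.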

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grant 61673402, Grant 61273270, and Grant 60802069, in part by the Natural Science Foundation of Guangdong under Grant 2017A030311029 and Grant 2016B010109002, in part by the Science and Technology Program of Guangzhou under Grant 201704020180 and Grant 201604020024, and in part by the Fundamental Research Funds for the Central Universities of China.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Liu, Z., Hu, H. & Zhang, J. Spatiotemporal Fusion Networks for Video Action Recognition. Neural Process Lett 50, 1877–1890 (2019). https://doi.org/10.1007/s11063-018-09972-6

DOI: https://doi.org/10.1007/s11063-018-09972-6