Abstract

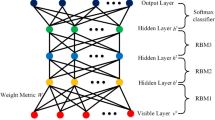

The Convolutional Deep Belief Network (CDBN) is typically classified as a deep generative model. Although the CDBN has demonstrated a powerful capacity for feature extraction in unsupervised learning, robust and high-quality feature extraction still poses diverse challenges. This paper designs an advanced hierarchical generative model to tackle these problems. First, we modify the conventional Convolutional Restricted Boltzmann Machine (CRBM) by introducing Gaussian hidden units that are multiplied point-wise with the original binary spike hidden units, enabling high-order feature extraction from each local patch. We theoretically derive the complete inference procedure for this novel model. Second, we attempt to learn more robust features by minimizing the L2 norm of the Jacobian of the features extracted by the modified model, as a novel regularization trick. This in turn introduces a localized space contraction that benefits robust feature extraction. Finally, this paper constructs a novel deep generative model, the Contractive Slab and Spike Convolutional Deep Belief Network (CssCDBN), based on the modified CRBM, in order to learn deeper and more abstract features. Results on diverse visual tasks indicate that CssCDBN is a more powerful model, achieving impressive results compared with many strong current models.
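To make the Jacobian-based regularizer concrete, the following is a minimal sketch of a contractive penalty for a plain, fully connected sigmoid feature layer. It is an illustration under simplifying assumptions, not the convolutional spike-and-slab model of this paper: the functions `features`, `contractive_penalty`, and `numeric_penalty`, and the parameters `W` and `b`, are hypothetical names introduced here. For such a layer the squared Frobenius norm of the Jacobian has a closed form, which the sketch checks against finite differences.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def features(W, b, x):
    """Sigmoid feature map h_j = sigmoid(sum_i W[j][i] * x[i] + b[j]).

    Assumption: a dense layer stands in for the paper's convolutional model.
    """
    return [sigmoid(sum(w * xi for w, xi in zip(row, x)) + bj)
            for row, bj in zip(W, b)]


def contractive_penalty(W, b, x):
    """Squared Frobenius norm of the Jacobian dh/dx, in closed form.

    For a sigmoid layer, dh_j/dx_i = h_j * (1 - h_j) * W[j][i], so
    ||J||_F^2 = sum_j (h_j * (1 - h_j))**2 * sum_i W[j][i]**2.
    """
    h = features(W, b, x)
    return sum((hj * (1.0 - hj)) ** 2 * sum(w * w for w in row)
               for hj, row in zip(h, W))


def numeric_penalty(W, b, x, eps=1e-6):
    """The same quantity via central finite differences, as a sanity check."""
    total = 0.0
    for i in range(len(x)):
        x_plus = list(x)
        x_minus = list(x)
        x_plus[i] += eps
        x_minus[i] -= eps
        h_plus = features(W, b, x_plus)
        h_minus = features(W, b, x_minus)
        total += sum(((p - m) / (2.0 * eps)) ** 2
                     for p, m in zip(h_plus, h_minus))
    return total


if __name__ == "__main__":
    W = [[0.5, -1.0], [2.0, 0.3]]  # illustrative weights, not from the paper
    b = [0.1, -0.2]
    x = [1.0, 0.5]
    print(contractive_penalty(W, b, x), numeric_penalty(W, b, x))
```

Adding a term of this kind to a training objective penalizes features that change sharply under small input perturbations, which is the localized contraction effect the abstract refers to.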

Acknowledgements

This work is financially supported by the International S&T Cooperation Program of China (Grant No. 2015DFG12150), named "Key Technology Elements and Demonstrator for Cloud-Assisted, Wireless Networked Ambulatory Supervision (C-Nurse)", and the National Natural Science Foundation of China (Grant No. 61175126). The authors would like to thank the handling Editor and the anonymous reviewers for their careful reading and helpful remarks, which have contributed to improving the quality of this paper.

Cite this article

Wang, H., Bi, X. Contractive Slab and Spike Convolutional Deep Belief Network. Neural Process Lett 49, 1697–1722 (2019). https://doi.org/10.1007/s11063-018-9897-2

DOI: https://doi.org/10.1007/s11063-018-9897-2