Abstract

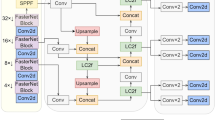

Onboard multi-modality sensors significantly expand the perception ability of an Unmanned Surface Vehicle (USV). This paper aims to make full use of the various onboard sensors to enhance the USV’s object detection performance. We address several challenges unique to applying a USV multi-modality sensor system in the complex maritime environment, and use deep learning networks to achieve accurate object detection on the water surface. We first propose a multi-modality sensor calibration method. The well-calibrated image and point clouds are then input to our deep object detection network, which fuses RGB images with multiple point clouds from various sensors and performs 3D detection through a proposal generation network and an object detection network. We also made a series of improvements to the system framework that accelerate the detection procedure. We collected two datasets, one from a real-world offshore field and one from simulated scenes. Experiments on both datasets showed valid calibration results. On this basis, our object detection network achieves better accuracy than other methods. The performance of the proposed multi-modality sensor system meets the application requirements of our prototype USV platform.
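To illustrate why calibration must precede image and point cloud fusion, the following minimal sketch projects 3D sensor points into the image plane using a standard pinhole camera model. It is not the calibration method or the detection network of this paper. The function name `project_points`, the intrinsic matrix `K`, the extrinsic rotation `R` and translation `t`, and all numeric values are hypothetical and chosen only for illustration.

```python
import numpy as np


def project_points(points_sensor, R, t, K):
    """Project Nx3 points from a range sensor frame into pixel coordinates.

    R (3x3) and t (3,) are assumed extrinsics mapping the sensor frame to
    the camera frame; K (3x3) is an assumed pinhole intrinsic matrix.
    Returns the Mx2 pixel coordinates of the points in front of the camera
    and a boolean mask of length N marking which input points were kept.
    """
    # Rigid transform into the camera frame.
    points_cam = points_sensor @ R.T + t
    # Only points with positive depth can appear in the image.
    in_front = points_cam[:, 2] > 0
    # Perspective projection followed by division by depth.
    uvw = points_cam[in_front] @ K.T
    pixels = uvw[:, :2] / uvw[:, 2:3]
    return pixels, in_front


# Hypothetical calibration values (illustration only).
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)
t = np.zeros(3)

points = np.array([[0.0, 0.0, 10.0],   # on the optical axis
                   [1.0, -0.5, 5.0],   # off-axis, in front of the camera
                   [0.0, 0.0, -2.0]])  # behind the camera, discarded

pixels, mask = project_points(points, R, t, K)
print(pixels)
print(mask)
```

With accurate extrinsics, each projected point lands on the pixel that observes the same physical surface, which is what allows image features and point features to be associated. An error in `R` or `t` shifts every projected point and misaligns the two modalities.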

Acknowledgements

This work was financially supported by the Aoshan Innovation Project in Science and Technology of Qingdao National Laboratory for Marine Science and Technology (No. 2016ASKJ07), the Key R&D Plan of Shandong Province (2016ZDJS09A01), and the Qingdao Science and Technology Plan (17-1-1-3-jch). We gratefully acknowledge the support of NVIDIA Corporation with the donation of the Titan X Pascal GPU used for this research. The authors thank all anonymous reviewers for their valuable comments and suggestions.

Cite this article

Liu, H., Nie, J., Liu, Y. et al. A Multi-modality Sensor System for Unmanned Surface Vehicle. Neural Process Lett 52, 977–992 (2020). https://doi.org/10.1007/s11063-019-09998-4

DOI: https://doi.org/10.1007/s11063-019-09998-4