Abstract

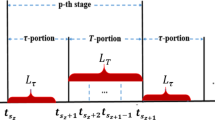

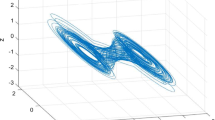

This paper investigates output-feedback optimal control of partially unknown nonlinear systems with constrained inputs. First, a neural network observer is proposed to estimate the unmeasurable system states. Second, a synchronous integral reinforcement learning (SIRL) algorithm is used to solve the Hamilton–Jacobi–Bellman (HJB) equation associated with a non-quadratic cost function, and the optimal controller is obtained without knowledge of the system drift dynamics. The algorithm is implemented with a critic-actor structure, for which novel weight update laws are designed so that the neural networks are tuned simultaneously. Moreover, the weight estimation errors of all neural networks are proven to be uniformly ultimately bounded (UUB), and the stability of the whole closed-loop system is guaranteed. Finally, two numerical simulation examples demonstrate the effectiveness of the proposed method.
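
The abstract does not state the cost function or the learning equation, so the block below is only a sketch of the forms commonly used in the constrained-input integral reinforcement learning literature; the paper's own formulation may differ. All symbols are assumptions introduced for illustration: an input-affine system with drift \(f\), input gain \(g\), output matrix \(C\), saturation bound \(\lambda\), state penalty \(Q\), diagonal positive-definite weight \(R\), and reinforcement interval \(T\).

```latex
% Assumed plant: input-affine dynamics, measured output, bounded input
\dot{x} = f(x) + g(x)\,u, \qquad y = Cx, \qquad |u_i| \le \lambda

% Assumed non-quadratic cost that encodes the input bound
V(x(t)) = \int_t^{\infty} \bigl( Q(x(\tau)) + U(u(\tau)) \bigr)\, d\tau,
\qquad
U(u) = 2 \int_0^{u} \bigl( \lambda \tanh^{-1}(v/\lambda) \bigr)^{\top} R \, dv

% Stationarity of the HJB equation yields a control that is bounded by construction
u^{*}(x) = -\lambda \tanh\!\Bigl( \tfrac{1}{2\lambda} R^{-1} g^{\top}(x)\, \nabla V^{*}(x) \Bigr)

% Integral reinforcement form of the Bellman equation over an interval T
V(x(t-T)) = \int_{t-T}^{t} \bigl( Q(x(\tau)) + U(u(\tau)) \bigr)\, d\tau + V(x(t))
```

In this sketch the drift \(f\) does not appear in the integral Bellman equation, which is the usual reason an integral reinforcement learning scheme can be run without knowing the drift dynamics, while \(g\) is still needed to evaluate \(u^{*}\).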

This work was supported by the National Natural Science Foundation of China under Grants 61473202 and 61773284.

Cite this article

Ren, L., Zhang, G. & Mu, C. Optimal Output Feedback Control of Nonlinear Partially-Unknown Constrained-Input Systems Using Integral Reinforcement Learning. Neural Process Lett 50, 2963–2989 (2019). https://doi.org/10.1007/s11063-019-10072-2

DOI: https://doi.org/10.1007/s11063-019-10072-2