Abstract

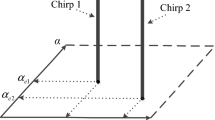

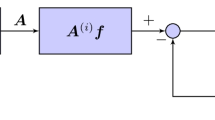

The block-sparse structure is shared by many types of signals, including audio, image, and radar-emitted signals. Exploiting this structure can considerably improve compressive sensing (CS) performance, and it has attracted much attention in recent years. In practical applications, however, the nonzero blocks are always separated by one or more zero blocks to avoid interference between active emitters (generally, each block is occupied by one emitter). In this paper, we coin the term ‘nonadjacent block sparse’ (NBS) to describe this structure. Our contributions are threefold. First, from a statistical perspective, we evaluate the mean value and variance of the block sparsity and use them to describe an NBS signal. Second, by employing the block discrete chirp matrix (BDCM), we propose and prove a condition, stated in terms of the mean value and variance of the block sparsity, under which an NBS signal can be recovered from its linear measurements with high probability. Third, we report extensive simulation experiments and discuss their theoretical implications. The results demonstrate the progress made toward block-sparse CS.

References

Huang, R., Rhee, K.H., Uchida, S.: Multimed. Tools Appl. 72, 71 (2014). https://doi.org/10.1007/s11042-012-1337-0

Chen, J., Chen, Y., Qin, D., Kuo, Y.: An elastic net-based hybrid hypothesis method for compressed video sensing. Multimed. Tools Appl. 74(6), 2085–2108 (2015)

Stimson, G.W.: Introduction to airborne radar, 2nd edn. SciTech Publishing Inc, Mendham (1998)

Vakin, S.A., Shustov, L.N., Dunwell, R.H.: Fundamentals of electronic warfare. Artech House, Boston (2001)

Sun, S.: A survey of multi-view machine learning. Neural Comput. Appl. 23(7–8), 2031–2038 (2013)

Saudagar, A.K.J., Syed, A.S.: Image compression approach with ridgelet transformation using modified neuro modeling for biomedical images. Neural Comput. Appl. 24(7–8), 1725–1734 (2014)

Candès, E.J., Romberg, J., Tao, T.: Robust uncertainty principles: exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 52(2), 489–509 (2006)

Wright, J., Yang, A.Y., Ganesh, A., et al.: Robust face recognition via sparse representation. IEEE Trans. Pattern Anal. Mach. Intell. 31(2), 210–227 (2009)

Elhamifar, E., Vidal, R.: Sparse subspace clustering: algorithm, theory, and applications. IEEE Trans. Pattern Anal. Mach. Intell. 35(11), 2765–2781 (2013)

Ivana, T., Pascal, F.: Dictionary learning: what is the right representation for my signal. IEEE Signal Process. Mag. 4(2), 27–38 (2011)

Chen, Y., Oh, H.S.: A survey of measurement-based spectrum occupancy modeling for cognitive radios. IEEE Commun. Surv. Tutor. 18(1), 848–859 (2016)

Sahai, A.: Spectrum sensing: fundamental limits and practical challenges. Tutorial Document on IEEE Dyspan (2005)

Tian, Z., Giannakis, G.B.: Compressed sensing for wideband cognitive radios. In: International Conference on Acoustic, Speech, and Signal Processing (ICASSP) (2007)

Axell, E., Leus, G., Larsson, E.G., et al.: Spectrum sensing for cognitive radio: state-of-the-art and recent advances. IEEE Signal Process. Mag. 29(3), 101–116 (2012)

Sun, H., Nallanathan, A., Wang, C.X., et al.: Wideband spectrum sensing for cognitive radio networks: a survey. IEEE Wirel. Commun. 20(2), 74–81 (2013)

Hattab, G., Ibnkahla, M.: Multiband Spectrum Sensing: Challenges and Limitations. arXiv preprint arXiv:1409.6394 (2014)

De, P., Satija, U.: Sparse representation for blind spectrum sensing in cognitive radio: a compressed sensing approach. Circuits Syst. Signal Process. 35(12), 4413–4444 (2016)

Sharma, S.K., Lagunas, E., Chatzinotas, S., et al.: Application of compressive sensing in cognitive radio communications: a survey. IEEE Commun. Surv. Tutor. 18(3), 1838–1860 (2016)

Sharma, S.K., Bogale, T.E., Chatzinotas, S., et al.: Cognitive radio techniques under practical imperfections: a survey. IEEE Commun. Surv. Tutor. 17(4), 1858–1884 (2015)

Mishali, M., Eldar, Y.C.: Blind multiband signal reconstruction: compressed sensing for analog signals. IEEE Trans. Signal Process. 57(3), 993–1009 (2009)

Stojnic, M., Parvaresh, F., Hassibi, B.: On the reconstruction of block-sparse signals with an optimal number of measurements. IEEE Trans. Signal Process. 57(8), 3075–3085 (2009)

Eldar, Y.C., Mishali, M.: Robust recovery of signals from a structured union of subspaces. IEEE Trans. Inf. Theory 55(11), 5302–5316 (2009)

Eldar, Y.C., Kuppinger, P., Bolcskei, H.: Block-sparse signals: uncertainty relations and efficient recovery. IEEE Trans. Signal Process. 58(6), 3042–3054 (2010)

Eldar, Y.C., Rauhut, H.: Average case analysis of multichannel sparse recovery using convex relaxation. IEEE Trans. Inf. Theory 56(1), 505–519 (2010)

Tropp, J.A.: On the conditioning of random subdictionaries. Appl. Comput. Harmon. Anal. 25(1), 1–24 (2008)

Bajwa, W.U., Duarte, M.F., Calderbank, R.: Conditioning of random block subdictionaries with applications to block-sparse recovery and regression. IEEE Trans. Inf. Theory 61(7), 4060–4079 (2015)

Mehdi, K., Jingxin, Z., Cishen, Z., Hadi, Z.: Iterative Bayesian reconstruction of non-IID block-sparse signals. IEEE Trans. Signal Process. 64(13), 3297–3307 (2016)

Mehdi, K., Jingxin, Z., Cishen, Z., Hadi, Z.: Block-sparse impulsive noise reduction in OFDM systems—a novel iterative Bayesian approach. IEEE Trans. Commun. 64(1), 271–284 (2016)

Adcock, B., Hansen, A.C., Poon, C., et al.: Breaking the coherence barrier: a new theory for compressed sensing. Forum Math. Sigma (2017). https://doi.org/10.1017/fms.2016.32

Chauffert, N., Ciuciu, P., Kahn, J., et al.: Variable density sampling with continuous trajectories. SIAM J. Imaging Sci. 7(4), 1962–1992 (2014)

Bigot, J., Boyer, C., Weiss, P.: An analysis of block sampling strategies in compressed sensing. IEEE Trans. Inf. Theory 62(4), 2125–2139 (2016)

Boyer, C., Bigot, J., Weiss, P.: Compressed sensing with structured sparsity and structured acquisition. Appl. Comput. Harmon. Anal. (2017). https://doi.org/10.1016/j.acha.2017.05.005

Applebaum, L., Howard, S.D., Searle, S., et al.: Chirp sensing codes: deterministic compressed sensing measurements for fast recovery. Appl. Comput. Harmon. Anal. 26(2), 283–290 (2009)

Calderbank, R., Thompson, A., Xie, Y.: On block coherence of frames. Appl. Comput. Harmon. Anal. 38(1), 50–71 (2015)

Bajwa, W.U., Calderbank, R., Jafarpour, S.: Revisiting model selection and recovery of sparse signals using one-step thresholding. In: 48th Annual Allerton Conference on Communication, Control, and Computing (2010)

Bruckstein, A.M., Donoho, D.L., Elad, M.: From sparse solutions of systems of equations to sparse modeling of signals and images. SIAM Rev. 51(1), 34–81 (2009)

Candès, E.J.: The restricted isometry property and its implications for compressed sensing. C. R. Math. 346(9–10), 589–592 (2008)

Candès, E.J., Wakin, M.B.: An introduction to compressive sampling. IEEE Signal Process. Mag. 25(2), 21–30 (2008)

Laska, J., Kirolos, S., Massoud, Y., et al.: Random sampling for analog-to-information conversion of wideband signals. In: IEEE Dallas/CAS Workshop on Design, Applications, Integration and Software (2006)

Zhilin, Z., Bhaskar, R.: Extension of SBL algorithms for the recovery of block sparse signals with intra-block correlation. IEEE Trans. Signal Process. 61(8), 2009–2015 (2013)

Yuan, M., Lin, Y.: Model selection and estimation in regression with grouped variables. J. R. Stat. Soc. Ser. B-Stat. Methodol. 68(1), 49–67 (2006)

Candès, E.J., Tao, T.: Decoding by linear programming. IEEE Trans. Inf. Theory 51(12), 4203–4215 (2005)

Baron, D., Sarvotham, S., Baraniuk, R.G.: Bayesian compressive sensing via belief propagation. IEEE Trans. Signal Process. 58(1), 269–280 (2010)

Pati, Y.C., Rezaiifar, R., Krishnaprasad, P.S.: Orthogonal matching pursuit: Recursive function approximation with applications to wavelet decomposition. In: The Twenty-Seventh Asilomar Conference on Signals, Systems and Computers (1993)

Acknowledgements

The authors would like to thank Professor Yonina C. Eldar for her code, which is publicly available on her homepage.

Appendix

To prove Proposition 1, we leverage the following theorem.

Theorem 1

[22] If a sensing matrix \( {\varvec{\Phi}} \) satisfies the block RIP with \( \delta_{2k|b} \le \sqrt{2} - 1 \), then there is a unique block-k-sparse vector \( {\hat{\mathbf{x}}} \) equal to the solution of Eq. (2). Here, \( {\varvec{\Phi}} \) satisfies the block RIP with constant \( \delta_{k|b} \) if, for every block-k-sparse signal \( {\mathbf{x}} \), \( \left( 1 - \delta_{k|b} \right) \left\| {\mathbf{x}} \right\|_{2}^{2} \le \left\| {\varvec{\Phi}}{\mathbf{x}} \right\|_{2}^{2} \le \left( 1 + \delta_{k|b} \right) \left\| {\mathbf{x}} \right\|_{2}^{2} \) holds.

Since only the block-sparse case is considered here, we write \( \delta_{k} \) for \( \delta_{k|b} \). In view of Theorem 1, Proposition 1 gives a condition under which the block RIP holds with high probability.

Next, we provide a proof of Proposition 1. Our strategy is to first evaluate the expectation of the spectral norm of a submatrix drawn from \( {\varvec{\Phi}} \) and then derive the probability that the block RIP is satisfied. Before giving the substance of the proof, we introduce some notation.

Let \( \mathcal{K} = \{ {\varvec{\Theta}} \mid \left\| {\varvec{\Theta}} \right\|_{1} = k \} \) be the set of all block index vectors that extract k blocks from \( {\varvec{\Phi}} \), where \( {\varvec{\Theta}} = \left[ {\varvec{\uptheta}}_{1}, \ldots, {\varvec{\uptheta}}_{r} \right]^{\text{T}} = \left[ \theta_{(1 - 1)n + 1}, \ldots, \theta_{(1 - 1)n + n}, \ldots, \theta_{(r - 1)n + 1}, \ldots, \theta_{(r - 1)n + n} \right]^{\text{T}} \in {\mathbb{R}}^{L \times 1} \) is an arbitrary realization of V. For any matrix M with dL columns and a block index vector \( {\varvec{\Theta}} \in {\mathbb{R}}^{L \times 1} \), we define the extracting operation as \( {\mathbf{M}}|_{{\varvec{\Theta}}} = \mathcal{E}\left( {\mathbf{M}} \cdot \left( diag({\varvec{\Theta}}) \otimes {\mathbf{I}}_{d} \right) \right) \), where \( \mathcal{E}\left( \cdot \right) \) is a function that removes zero blocks. If a set \( \varOmega \) contains several block index vectors, \( {\mathbf{M}}|_{\varOmega} \) stands for the set of submatrices drawn from M according to the elements of \( \varOmega \). Furthermore, we define \( \mathcal{C}\left( {\varvec{\Theta}} \right) \) as the function that calculates the cardinality of the set \( \varGamma = \left\{ \left\| {\varvec{\uptheta}}_{i} \right\|_{1} \mid \left\| {\varvec{\uptheta}}_{i} \right\|_{1} > 0 \right\} \). According to the definition of a cell in Eq. (12), \( \mathcal{C}\left( {\varvec{\Theta}} \right) \) is the number of active cells.
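As an illustration of this notation, the following minimal NumPy sketch implements the extracting operation and the active-cell count. The function names `extract_blocks` and `active_cells` and the toy sizes are assumptions made for the example, not part of the original work. The zero blocks are dropped by index rather than by testing for zero entries, so that a selected block of M that happens to be zero is not removed by mistake.

```python
import numpy as np

def extract_blocks(M, theta, d):
    """M|_Theta: keep the width-d column blocks of M whose entry in theta is 1."""
    theta = np.asarray(theta, dtype=int)
    assert M.shape[1] == d * theta.size, "M must have d*L columns"
    # M (diag(Theta) kron I_d) zeroes every unselected block ...
    masked = M @ np.kron(np.diag(theta), np.eye(d))
    # ... and E(.) then removes those zeroed blocks (done here by index).
    keep = np.repeat(theta.astype(bool), d)
    return masked[:, keep]

def active_cells(theta, n):
    """C(Theta): number of cells (length-n segments of theta) with a nonzero entry."""
    cells = np.asarray(theta, dtype=int).reshape(-1, n)
    return int(np.count_nonzero(cells.sum(axis=1)))

# Toy sizes (hypothetical): r = 3 cells, n = 2 blocks per cell, block width d = 2.
r, n, d = 3, 2, 2
L = r * n
M = np.arange(4 * d * L, dtype=float).reshape(4, d * L)
theta = np.array([1, 0, 0, 0, 1, 1])  # k = 3 selected blocks
sub = extract_blocks(M, theta, d)
print(sub.shape, active_cells(theta, n))
```

In this toy case the first and third cells are active, and the extracted submatrix keeps the three selected blocks of width d.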

Based on Eq. (14), we have \( {\varvec{\Phi}}_{i}^{\text{T}} {\varvec{\Phi}}_{j} = \left( {{\mathbf{q}}_{i} \otimes {\mathbf{U}}} \right)^{\text{T}} \left( {{\mathbf{q}}_{j} \otimes {\mathbf{U}}} \right) = {\mathbf{q}}_{i}^{\text{T}} {\mathbf{q}}_{j} {\mathbf{I}} \) for any \( {\mathbf{q}}_{i} \) and \( {\mathbf{q}}_{j} \). Therefore, we study the properties of the submatrix extracted from Q instead of directly studying the submatrix drawn from \( {\varvec{\Phi}} \). We start by evaluating a surrogate expectation of a spectral norm of \( {\mathbf{Q}}_{\mathcal{K}} \) as follows:

where the last step uses the properties of the DCM that \( {\mathbf{Q}}_{i}^{\text{T}} {\mathbf{Q}}_{j} \) equals \( \sqrt{1/r} \, {\mathbf{1}} \) and 0 for \( i \ne j \) and \( i = j \), respectively.
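The reduction from \( {\varvec{\Phi}} \) to Q rests on the mixed-product property of the Kronecker product, \( \left( {\mathbf{A}} \otimes {\mathbf{B}} \right)^{\text{T}} \left( {\mathbf{C}} \otimes {\mathbf{D}} \right) = \left( {\mathbf{A}}^{\text{T}} {\mathbf{C}} \right) \otimes \left( {\mathbf{B}}^{\text{T}} {\mathbf{D}} \right) \). The sketch below checks the identity numerically under the assumption that U has orthonormal columns, \( {\mathbf{U}}^{\text{T}} {\mathbf{U}} = {\mathbf{I}} \). The sizes and the random real-valued vectors are hypothetical stand-ins, not the chirp columns of the paper.

```python
import numpy as np

rng = np.random.default_rng(0)
m, d = 5, 3  # hypothetical sizes

# Assumed: U is orthonormal, obtained here from a QR factorization.
U, _ = np.linalg.qr(rng.standard_normal((d, d)))
q_i = rng.standard_normal((m, 1))
q_j = rng.standard_normal((m, 1))

Phi_i = np.kron(q_i, U)  # shape (m*d, d)
Phi_j = np.kron(q_j, U)

gram = Phi_i.T @ Phi_j                       # (q_i kron U)^T (q_j kron U)
expected = (q_i.T @ q_j).item() * np.eye(d)  # q_i^T q_j * I
print(np.allclose(gram, expected))
```

Because the Gram block collapses to a scalar multiple of the identity, its spectral behavior is governed entirely by the inner products of the columns of Q.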

Let \( \rho = \left\| {{\mathbf{1}}_{k} - blkdiag\left( {{\mathbf{1}}_{{\left\| {{\varvec{\uptheta}}_{1} } \right\|_{1} }} ,{\mathbf{1}}_{{\left\| {{\varvec{\uptheta}}_{2} } \right\|_{1} }} , \ldots ,{\mathbf{1}}_{{\left\| {{\varvec{\uptheta}}_{r} } \right\|_{1} }} } \right)} \right\|_{2} \). For a fixed \( \mathcal{C}\left( {\varvec{\Theta}} \right) \), the maximum of \( \rho \) is achieved when the variance of \( \varGamma \) is at its minimum. Furthermore, this maximum can be bounded by \( k - \left\lfloor {k/\mathcal{C}\left( {\varvec{\Theta}} \right)} \right\rfloor \), as shown in Figs. 9 and 10. Let \( \mathcal{K} = \mathcal{H}_{1} \cup \mathcal{H}_{2} \cup \cdots \cup \mathcal{H}_{k} \), where \( \mathcal{H}_{i} = \left\{ {{\varvec{\Theta}}\left| {\mathcal{C}\left( {\varvec{\Theta}} \right) = i} \right.} \right\} \). Then, we have

Fig. 9 \( \rho = \left\| {{\mathbf{1}}_{k} - blkdiag\left( {{\mathbf{1}}_{{\left\| {{\varvec{\uptheta}}_{1} } \right\|_{1} }} ,{\mathbf{1}}_{{\left\| {{\varvec{\uptheta}}_{2} } \right\|_{1} }} , \ldots ,{\mathbf{1}}_{{\left\| {{\varvec{\uptheta}}_{r} } \right\|_{1} }} } \right)} \right\|_{2} \) versus the variance of \( \varGamma \). The maximum of \( \rho \) is achieved when the variance is at its minimum

Fig. 10 The maximum of \( \rho \) under different block sparsities (denoted by k). The dashed lines are \( k - \left\lfloor {k/\mathcal{C}\left( {\varvec{\Theta}} \right)} \right\rfloor \), which closely approximates and upper bounds the maximum of \( \rho \)
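The behavior of \( \rho \) described above can be reproduced on small cases. The following sketch is an illustration, not the authors' simulation code: it builds the matrix inside the norm for a given tuple of active-cell sizes, enumerates every way of splitting k selected blocks over c active cells, and compares the largest \( \rho \) with \( k - \left\lfloor k/c \right\rfloor \). The helper names `rho`, `partitions`, and `max_rho` are hypothetical.

```python
import numpy as np

def rho(sizes):
    """Spectral norm of 1_k - blkdiag(1_{n_1}, ..., 1_{n_c}) for cell sizes n_i."""
    sizes = [s for s in sizes if s > 0]
    k = sum(sizes)
    A = np.ones((k, k))
    start = 0
    for s in sizes:
        A[start:start + s, start:start + s] = 0.0  # subtract the all-ones diagonal block
        start += s
    return float(np.linalg.norm(A, 2))

def partitions(k, c, largest=None):
    """Yield all splits of k into exactly c positive parts, in non-increasing order."""
    largest = k if largest is None else largest
    if c == 1:
        if 1 <= k <= largest:
            yield (k,)
        return
    for first in range(min(k - (c - 1), largest), 0, -1):
        for rest in partitions(k - first, c - 1, first):
            yield (first,) + rest

def max_rho(k, c):
    """Return (sizes, rho) for the split of k over c active cells that maximizes rho."""
    best = max(partitions(k, c), key=rho)
    return best, rho(best)

for k, c in [(6, 3), (7, 3)]:
    sizes, value = max_rho(k, c)
    print(k, c, sizes, round(value, 3), k - k // c)
```

For k = 6 and c = 3, the maximizer is the balanced split (2, 2, 2), which has zero variance and attains the bound 6 − 2 = 4 exactly. For k = 7, the most balanced split (3, 2, 2) maximizes \( \rho \) and stays below the bound of 5.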

In line 3 of Eq. (16), we use the inequality \( k - \left\lfloor {k/i} \right\rfloor \le k\left( { \, 1 - 2^{1 - i} \, } \right) \); see Fig. 11. Since \( P\left( {\left\| {\mathbf{V}} \right\|_{1} = k} \right) \) can be well approximated by a Gaussian function, the maximum of \( k \cdot P\left( {\left\| {\mathbf{V}} \right\|_{1} = k} \right) \) is obtained approximately when k equals the mean value. Therefore,

Fig. 11 Comparison of \( k - \left\lfloor {k/i} \right\rfloor \) and \( k\left( { \, 1 - 2^{1 - i} \, } \right) \). Solid lines represent the values of \( k - \left\lfloor {k/i} \right\rfloor \), while dashed lines denote the values of \( k\left( { \, 1 - 2^{1 - i} \, } \right) \). The dashed lines upper bound the solid lines, that is, \( k - \left\lfloor {k/i} \right\rfloor \le k\left( { \, 1 - 2^{1 - i} \, } \right) \)
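The origin of the factor \( 1 - 2^{1 - i} \) can be seen from an elementary fact. The short derivation below is offered as a sketch that drops the floor; it is not taken from the original proof.

\[
2^{i - 1} \ge i \quad (i \ge 1)
\;\Longrightarrow\;
\frac{1}{i} \ge 2^{1 - i}
\;\Longrightarrow\;
k - \frac{k}{i} \le k\left( 1 - 2^{1 - i} \right).
\]

When i divides k, \( \left\lfloor k/i \right\rfloor = k/i \) and this is exactly the relation compared in Fig. 11. Otherwise, the floor raises the left-hand side by less than one, since \( k - \left\lfloor k/i \right\rfloor < k - k/i + 1 \).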

With the application of Markov’s inequality, the result is

By the assumption \( \mu \left( {1 - 2^{1 - \mu } } \right)/\sqrt {2\pi r\sigma^{2} } \le \varepsilon \left( {\sqrt 2 - 1} \right) \), it is obvious that

Finally, recalling Theorem 1 and substituting \( \alpha \) for \( \delta_{2k} \le \sqrt 2 - 1 \), we complete the proof. □
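For a given signal model, the assumption used in the proof, \( \mu \left( {1 - 2^{1 - \mu } } \right)/\sqrt {2\pi r\sigma^{2} } \le \varepsilon \left( {\sqrt 2 - 1} \right) \), can be evaluated directly. The following sketch does only that. The function name `nbs_condition` and the numerical values are hypothetical; `mu`, `sigma2`, `r`, and `eps` stand for \( \mu \), \( \sigma^{2} \), r, and \( \varepsilon \) as used in this appendix.

```python
import math

def nbs_condition(mu, sigma2, r, eps):
    """Evaluate mu*(1 - 2**(1 - mu)) / sqrt(2*pi*r*sigma2) <= eps*(sqrt(2) - 1).

    Returns (lhs, rhs, holds), where holds is True when the assumption is met.
    """
    lhs = mu * (1.0 - 2.0 ** (1.0 - mu)) / math.sqrt(2.0 * math.pi * r * sigma2)
    rhs = eps * (math.sqrt(2.0) - 1.0)
    return lhs, rhs, lhs <= rhs

# Hypothetical example: mean block sparsity 10, variance 4, r = 1024.
for eps in (0.1, 0.2):
    lhs, rhs, holds = nbs_condition(10, 4.0, 1024, eps)
    print(f"eps={eps}: lhs={lhs:.4f}, rhs={rhs:.4f}, holds={holds}")
```

With these illustrative numbers the left-hand side is about 0.062, so the assumption fails for \( \varepsilon = 0.1 \) and holds for \( \varepsilon = 0.2 \). The left-hand side shrinks as r or \( \sigma^{2} \) grows, which makes the condition easier to satisfy.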

Cite this article

Tian, Y., Wang, X. Compressively sensing nonadjacent block-sparse spectra via a block discrete chirp matrix. Photon Netw Commun 37, 164–178 (2019). https://doi.org/10.1007/s11107-018-0813-5

DOI: https://doi.org/10.1007/s11107-018-0813-5