Abstract

The assignment of reviewers to papers is one of the most important and challenging tasks in organizing scientific conferences, and in peer review in general. It is a typical optimization task in which limited resources (reviewers) must be assigned to a number of consumers (papers), so that every paper is evaluated by reviewers who are highly competent in its subject domain while the reviewers' workload stays balanced. This article proposes a heuristic algorithm for the automatic assignment of reviewers to papers. It achieves an accuracy of about 98–99% relative to maximum-weighted matching algorithms (the most accurate ones), while having a better time complexity of Θ(n²). The algorithm provides a uniform distribution of papers to reviewers (i.e. all reviewers evaluate roughly the same number of papers). It guarantees that if at least one reviewer is competent to evaluate a paper, the paper will have a reviewer assigned to it. It also allows iterative and interactive execution, which could further increase accuracy and enables subsequent reassignments. Both the accuracy and the time complexity are confirmed experimentally through a large number of experiments and appropriate statistical analyses. Although it was initially designed to assign reviewers to papers, the algorithm is universal and could be applied successfully in other domains where assignment or matching is necessary. Examples include assigning resources to consumers or tasks to persons, matching men and women on dating websites, and grouping documents in digital libraries.



Funding

There has been no financial support for this work that could have influenced its outcome.


Ethics declarations

Conflict of interest

The author declares that he has no conflict of interest.

Appendices

Appendix 1: An example

Consider a hypothetical conference with 5 submitted papers and 5 registered reviewers. Each paper should be evaluated by 2 reviewers, so every reviewer should evaluate exactly (5 × 2) / 5 = 2 papers.
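The per-reviewer quota is simple arithmetic. The sketch below is a minimal Python version. It assumes the quota is rounded up when the total number of reviews does not divide evenly among the reviewers; the example here divides evenly, and the helper name is hypothetical.

```python
import math


def max_papers_per_reviewer(num_papers: int, reviewers_per_paper: int, num_reviewers: int) -> int:
    """Hypothetical helper: per-reviewer quota, rounded up (an assumption)."""
    total_reviews = num_papers * reviewers_per_paper
    # Rounding up leaves room to fill every review slot when the division is not exact.
    return math.ceil(total_reviews / num_reviewers)


print(max_papers_per_reviewer(5, 2, 5))  # 2, as in the example above
```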

Let the similarity matrix be as follows, with rows representing papers and columns representing reviewers.

First, the algorithm sorts each row by similarity factor in descending order. As a result, the first column suggests the most competent reviewer for every paper.

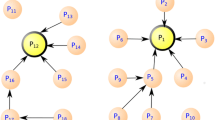
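The row-sorting step can be sketched in Python as follows. Each row is stored as a list of (revUser, weight) pairs, loosely mirroring SM[i,j] from Appendix 2. As an assumption of this sketch, zero factors are dropped so that a row holds only competent reviewers. The original matrix is shown only as an image. Only p4's two non-zero factors (0.53 for r1 and 0.50 for r5) come from the end of this example; the zeros are hypothetical.

```python
from typing import Dict, List, Tuple

Row = List[Tuple[str, float]]  # (revUser, weight) pairs


def sort_similarity_matrix(raw: Dict[str, Dict[str, float]]) -> Dict[str, Row]:
    """Sort every paper's row by similarity factor, descending, dropping zeros."""
    sm: Dict[str, Row] = {}
    for paper, factors in raw.items():
        row = [(reviewer, w) for reviewer, w in factors.items() if w > 0]
        row.sort(key=lambda entry: entry[1], reverse=True)
        sm[paper] = row
    return sm


# Only 0.53 (r1) and 0.50 (r5) for p4 come from the text; the zeros are hypothetical.
raw = {"p4": {"r1": 0.53, "r2": 0.0, "r3": 0.0, "r4": 0.0, "r5": 0.50}}
sm = sort_similarity_matrix(raw)
print(sm["p4"])       # [('r1', 0.53), ('r5', 0.5)]
print(len(sm["p4"]))  # 2 significant (non-zero) factors, cf. signifficantSF
```

After sorting, the first entry of each row is the suggested reviewer, and the row length equals the number of significant factors.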

The first column suggests that reviewer r1 should be assigned to 4 papers (p1, p2, p4 and p5). However, the maximum allowed number of papers per reviewer is 2, i.e. nobody should review more than two papers. The algorithm therefore has to decide which 2 of these 4 papers to assign to r1.

At first glance it seems logical to assign r1 to p1 and p2, as they have the highest similarity factors with him/her. On the other hand, fewer reviewers are competent to evaluate p4 and p5, so these papers should be processed with priority. If r1 is to be assigned to only one of p4 and p5, which one is more suitable? One may say p4, as it has the higher similarity factor. However, the second-suggested reviewer of p4 is almost as competent as r1, while the second-suggested reviewer of p5 is much less competent than r1. In this case it is better to assign r1 to p5 rather than to p4. If r1 were assigned to p4, p5 would be evaluated only by less competent reviewers, which is highly undesirable.

So when deciding which papers to assign to a specific reviewer, the algorithm should take two things into account: the number of competent reviewers for each paper, and the rate of decrease in competence of the next-suggested reviewers for those papers. To automate this, the algorithm modifies the similarity factors from the first column by adding two corrections, C1 and C2, calculated by formulas 3 and 4. C1 takes into account the number of non-zero similarity factors with pi (i.e. the number of reviewers competent to evaluate pi). C2 depends on the rate of decrease in competence of the next-suggested reviewers for pi.

The specific values of C1 and C2 are as follows:

| (pi, rj) | C1(pi, rj) | C2(pi, rj) |
|---|---|---|
| (p1, r1) | 0.0625 | 2 × 0.05 = 0.10 |
| (p2, r1) | 0.0625 | 2 × 0.09 = 0.18 |
| (p3, r5) | 0.0625 | 2 × 0.03 = 0.06 |
| (p4, r1) | 0.25 | 2 × 0.03 = 0.06 |
| (p5, r1) | 0.25 | 2 × 0.17 = 0.34 |
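In every row of this listing, C2 is written as 2 × d. For p4, the end of this example gives the first two similarity factors as 0.53 and 0.50, so d = 0.03 there. That matches the drop in competence from the first- to the second-suggested reviewer described above. The Python sketch below therefore assumes C2 = 2 × (SF1 − SF2) and takes C1 as an input, because formulas 3 and 4 are not reproduced in this section. The article's formula 4 may include further terms.

```python
def c2_correction(row_weights, factor=2.0):
    """Assumed form of C2: factor * (first - second similarity factor).

    row_weights must be sorted in descending order. If only one competent
    reviewer exists, the drop is measured against zero (an assumption).
    """
    first = row_weights[0]
    second = row_weights[1] if len(row_weights) > 1 else 0.0
    return factor * (first - second)


def modified_factor(row_weights, c1):
    """First-column similarity factor plus both corrections."""
    return row_weights[0] + c1 + c2_correction(row_weights)


p4 = [0.53, 0.50]  # first two factors of p4, taken from the example
print(round(c2_correction(p4), 2))          # 0.06, matching C2(p4, r1)
print(round(modified_factor(p4, 0.25), 2))  # 0.84 = 0.53 + 0.25 + 0.06
```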

To preserve the real weights of the matching, the similarity factors should be modified in an auxiliary data structure (an ordinary array) rather than in the matrix itself. Here is the first column stored in a one-dimensional array.

After C1 and C2 are added, the first column of the matrix looks as follows:

As the maximum number of papers per reviewer is 2, r1 is assigned to the 2 papers with the highest similarity factors after modification. These are p2 and p5.
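The selection for r1 can be sketched in Python as below. Modified factors are built in a separate list, so the original similarity factors are left untouched, as the text requires. C1 and C2 are the values listed above, and 0.53 for p4 comes from the example. The first-column similarity factors for p1, p2 and p5 are hypothetical. They were chosen only to agree with the narrative: r1 ranks p1 and p2 highest, and p4 above p5.

```python
def select_papers(candidates, quota):
    """Return (kept, to_shift) for one over-suggested reviewer.

    candidates: list of (paper, sf, c1, c2) tuples.
    Modified factors go into an auxiliary list; sf itself is never changed.
    """
    modified = [(paper, sf + c1 + c2) for paper, sf, c1, c2 in candidates]
    modified.sort(key=lambda item: item[1], reverse=True)
    kept = [paper for paper, _ in modified[:quota]]
    to_shift = [paper for paper, _ in modified[quota:]]
    return kept, to_shift


# (paper, first-column SF with r1, C1, C2); SFs of p1, p2 and p5 are hypothetical.
r1_candidates = [
    ("p1", 0.62, 0.0625, 0.10),
    ("p2", 0.65, 0.0625, 0.18),
    ("p4", 0.53, 0.25, 0.06),
    ("p5", 0.40, 0.25, 0.34),
]
kept, to_shift = select_papers(r1_candidates, quota=2)
print(sorted(kept), sorted(to_shift))  # ['p2', 'p5'] ['p1', 'p4']
```

With these inputs the modified factors are 0.99 (p5), 0.8925 (p2), 0.84 (p4) and 0.7825 (p1), so r1 keeps p2 and p5.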

The rows corresponding to p1 and p4 in the similarity matrix are shifted one position to the left, so that the next-competent reviewers are suggested for these papers. Reviewer r1 has already reached the maximum allowed number of papers to review, so he/she is considered busy and no more papers should be assigned to him/her. All similarity factors between r1 and the papers outside the first column are therefore deleted from the matrix. This deletion guarantees that he/she will not be assigned to any more papers.
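Shifting and deletion can be sketched in Python as below, with each row a list of (revUser, weight) pairs sorted in descending order. The matrix is hypothetical apart from p4's two entries. Its first two factors per row were chosen to reproduce the C2 differences listed above, and its rows are constructed so that the C1 values listed above equal 1/n² for a row with n entries (4 entries for p1 to p3, 2 for p4 and p5); this is not a claim about formula 3. The function names are illustrative.

```python
def shift_rows(sm, papers):
    """Drop the first entry of each listed row so the next-competent reviewer moves up."""
    for paper in papers:
        sm[paper] = sm[paper][1:]


def remove_busy_reviewer(sm, reviewer):
    """Delete a busy reviewer from every row, except where they sit in the first column."""
    for paper, row in sm.items():
        if row:
            sm[paper] = [row[0]] + [entry for entry in row[1:] if entry[0] != reviewer]


# Hypothetical sorted matrix; only p4's entries come from the text.
sm = {
    "p1": [("r1", 0.62), ("r5", 0.57), ("r3", 0.55), ("r2", 0.30)],
    "p2": [("r1", 0.65), ("r2", 0.56), ("r4", 0.40), ("r3", 0.20)],
    "p3": [("r5", 0.70), ("r1", 0.67), ("r2", 0.35), ("r4", 0.15)],
    "p4": [("r1", 0.53), ("r5", 0.50)],
    "p5": [("r1", 0.40), ("r4", 0.23)],
}
shift_rows(sm, ["p1", "p4"])
remove_busy_reviewer(sm, "r1")
print(sm["p3"])  # [('r5', 0.7), ('r2', 0.35), ('r4', 0.15)]
print(sm["p4"])  # [('r5', 0.5)]
```

r1 stays only in the first column of p2 and p5, the two papers it was assigned.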

After rows p1 and p4 are shifted one position to the left and all occurrences of r1 outside the first column are deleted, the matrix looks as follows:

Now r1 is suggested for just 2 papers instead of 4. However, after the operations above, r5 is now suggested for 3 papers. To decide which 2 of these 3 papers to assign to r5, the algorithm again modifies the similarity factors in the newly formed first column, using formulas 3 and 4 as in the previous step. After modification the first column looks as follows:

r5 is assigned to the two papers with the highest modified similarity factors, namely p3 and p4. The row corresponding to p1 is again shifted one position to the left, so that the next-competent reviewer is suggested for that paper. As r5 now has 2 papers to review, all similarity factors associated with him/her outside the first column are deleted from the matrix.

The matrix now looks as follows:

As the first column shows, no reviewer is suggested for more than 2 papers. All reviewers in the first column can therefore be assigned directly to the papers they are suggested for. Comparing the final matrix with the initial one shows that 3 of the 5 papers (p2, p3 and p5) are assigned to their most competent reviewers. p4 gets its second-most competent reviewer, and p1 its third-most competent reviewer. However, the competence levels of these reviewers with respect to p4 and p1 are very close to those of the most competent reviewers for these papers. For example, r5 is assigned to p4 with a similarity factor of 0.50, while the most competent reviewer for p4 has a similarity factor of 0.53.
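The comparison with the initial matrix can be made mechanical. The Python sketch below uses a hypothetical helper that returns the rank of the assigned reviewer in a paper's initially sorted row and the gap to the best similarity factor. The p4 row uses only the two values stated in the text (r1 at 0.53, r5 at 0.50).

```python
def assignment_quality(initial_row, assigned):
    """Return (rank, gap) for the assigned reviewer.

    rank is 1-based in the paper's initially sorted row; gap is how far the
    assigned reviewer's factor lies below the best one.
    """
    for rank, (reviewer, weight) in enumerate(initial_row, start=1):
        if reviewer == assigned:
            return rank, initial_row[0][1] - weight
    raise ValueError(f"{assigned} is not competent for this paper")


p4_initial = [("r1", 0.53), ("r5", 0.50)]
rank, gap = assignment_quality(p4_initial, "r5")
print(rank, round(gap, 2))  # 2 0.03
```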

Appendix 2: Detailed pseudocode

The detailed pseudocode can be translated directly into any high-level imperative programming language, as each operation in it corresponds to an operator or a built-in function of that language. The complex data structures (mostly arrays) used in the code are as follows:

- SM[i,j]: the similarity matrix, a two-dimensional array of arrays, where rows (i) represent papers and columns (j) represent reviewers. Each element contains an associative array of two elements: revUser (the user id of the reviewer suggested to evaluate paper i) and weight (the similarity factor between paper i and reviewer revUser).

- papersOfReviewer[]: an associative array whose keys correspond to the usernames (revUser) of the reviewers who appear in the first column of the similarity matrix. Its values are arrays of two-element entries, each holding the id of a paper suggested to this reviewer and the similarity factor between that paper and the reviewer.

For example:

- rowsToShift[]: an array containing the ids of the rows (which are actually paper ids) that should be shifted one position to the left, so that the next-competent reviewer is suggested for each such paper.

- signifficantSF[paperId]: an array holding the number of significant (non-zero) similarity factors for every paper, identified by its paperId.

- reviewersToRemove[]: an array holding the identifiers (revUser) of the reviewers who are already busy (i.e. have enough papers to review). They should be removed from the similarity matrix (except its first column) on the next pass through the outermost do-while loop, so that they are not assigned any more papers.

- busyReviewers[]: an array holding the identifiers of the reviewers who are already busy (i.e. have enough papers to review). It is similar to reviewersToRemove, with one major difference: busyReviewers keeps its identifiers throughout, while reviewersToRemove is cleared on each pass after the respective reviewers (similarity factors) are deleted from the similarity matrix.

- maxPapersToAssign[j]: an array holding the maximum number of papers that could be assigned to each reviewer j, identified by revUser.
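The data structures above can be tied together in a compact Python sketch of one assignment pass with one reviewer per paper, as in the walkthrough of Appendix 1. This is not the article's pseudocode, which is not reproduced in this section. Names follow the list above in snake_case, and the matrix is the hypothetical one from Appendix 1's sketches.

The sketch rests on several assumptions. C2 is taken as twice the drop from the first to the second similarity factor. C1 is a placeholder, 1/n² for n remaining significant factors; for this hypothetical matrix it reproduces the C1 values listed in Appendix 1, but it is not claimed to be formula 3. A paper whose row runs out of competent reviewers is simply left unassigned, which is weaker than the article's guarantee.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Row = List[Tuple[str, float]]  # (revUser, weight), sorted by weight, descending


def correction(row: Row) -> float:
    """C1 + C2 for the first entry of a row (both forms are assumptions)."""
    c1 = 1.0 / len(row) ** 2  # placeholder for formula 3
    second = row[1][1] if len(row) > 1 else 0.0
    c2 = 2.0 * (row[0][1] - second)  # assumed form of formula 4
    return c1 + c2


def assign_pass(sm: Dict[str, Row],
                max_papers_to_assign: Dict[str, int]) -> Tuple[Dict[str, str], Set[str]]:
    """Assign one reviewer per paper; mutates sm in place."""
    busy_reviewers: Set[str] = set()
    while True:
        # papersOfReviewer: papers grouped by the reviewer in their first column.
        papers_of_reviewer: Dict[str, List[str]] = defaultdict(list)
        for paper, row in sm.items():
            if row:
                papers_of_reviewer[row[0][0]].append(paper)

        rows_to_shift: List[str] = []
        reviewers_to_remove: List[str] = []
        for reviewer, papers in papers_of_reviewer.items():
            quota = max_papers_to_assign[reviewer]
            if len(papers) <= quota:
                continue
            # Modified factors are only sort keys; sm keeps the real weights.
            ranked = sorted(papers, reverse=True,
                            key=lambda p: sm[p][0][1] + correction(sm[p]))
            rows_to_shift.extend(ranked[quota:])
            reviewers_to_remove.append(reviewer)

        if not rows_to_shift:
            break  # nobody is over quota: the first column is the assignment

        # Suggest the next-competent reviewer for every displaced paper.
        for paper in rows_to_shift:
            sm[paper] = sm[paper][1:]
        # Busy reviewers are deleted everywhere except the first column.
        for reviewer in reviewers_to_remove:
            busy_reviewers.add(reviewer)
            for paper, row in sm.items():
                if row:
                    sm[paper] = [row[0]] + [e for e in row[1:] if e[0] != reviewer]

    assignment = {paper: row[0][0] for paper, row in sm.items() if row}
    return assignment, busy_reviewers


# Hypothetical matrix from the Appendix 1 sketches (only p4's entries are from the text).
sm = {
    "p1": [("r1", 0.62), ("r5", 0.57), ("r3", 0.55), ("r2", 0.30)],
    "p2": [("r1", 0.65), ("r2", 0.56), ("r4", 0.40), ("r3", 0.20)],
    "p3": [("r5", 0.70), ("r1", 0.67), ("r2", 0.35), ("r4", 0.15)],
    "p4": [("r1", 0.53), ("r5", 0.50)],
    "p5": [("r1", 0.40), ("r4", 0.23)],
}
assignment, busy = assign_pass(sm, {r: 2 for r in ("r1", "r2", "r3", "r4", "r5")})
print(assignment)  # {'p1': 'r3', 'p2': 'r1', 'p3': 'r5', 'p4': 'r5', 'p5': 'r1'}
```

On this input the sketch takes two corrective passes, first for r1 and then for r5, and ends with the same outcome as the walkthrough in Appendix 1. Each pass that shifts a row removes at least one matrix entry, so the loop always terminates.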


About this article

Cite this article

Kalmukov, Y. An algorithm for automatic assignment of reviewers to papers. Scientometrics 124, 1811–1850 (2020). https://doi.org/10.1007/s11192-020-03519-0


Keywords

- Heuristic assignment algorithm

- Bipartite graph matching

- Assignment of reviewers to papers

- Conference management

Mathematical Subject Classification

- 68R10 (Graph theory)

- 05C70 (Graph matching)

- 05C85 (Graph algorithms)

- 90B50 (Management decision making)

- 90B70 (Manpower planning)

- 68P20 (Information storage and retrieval)

- 68T20 (Heuristics, search strategies, etc.)

- 68U35 (Information systems)