Abstract

In recent years, evolutionary testing research has reported encouraging results for automated functional (i.e., black-box) testing. However, despite these promising results, such techniques have hardly been applied to complex, real-world systems, so little is known about their scalability, applicability, and acceptability in industry. In this paper, we describe the empirical setup used to study evolutionary functional testing in industry through two case studies, drawn from serial-production development environments at Daimler and at Berner & Mattner Systemtechnik, respectively. We present the results of the case studies and use them to assess the research questions. In summary, the results indicate that evolutionary functional testing is both scalable and applicable in an industrial setting. However, creating fitness functions is time-consuming. Although in some cases this effort is compensated for by the results, it remains a significant factor preventing evolutionary functional testing from more widespread use in industry.

Notes

If you are interested in trying out this one-click automated testing tool on your software, please go to: http://evotest.iti.upv.es.

Due to the small sample size of ETF_Manual (two optimization runs), the differences in final fitness values between ETF_Default and ETF_Manual, and between ETF_Manual and ETF_Automated, were not statistically significant according to Mann-Whitney-Wilcoxon (MWW) tests. However, the difference between ETF_Random and ETF_Default is highly statistically significant.
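To illustrate why two optimization runs are too few, the following Python sketch computes an exact two-sided MWW p-value by enumerating every way of assigning the pooled observations to the two groups. It assumes the exact permutation form of the test; statistics packages often use a normal approximation instead, and the note does not state which variant was used or how many runs the other configurations had. All function names and fitness values are hypothetical and are not data from the case studies.

```python
from itertools import combinations
from math import comb


def mann_whitney_u(a, b):
    """U statistic for sample a: counts pairs (x from a, y from b) with x > y;
    tied pairs count 0.5."""
    u = 0.0
    for x in a:
        for y in b:
            if x > y:
                u += 1.0
            elif x == y:
                u += 0.5
    return u


def exact_two_sided_p(a, b):
    """Exact permutation p-value of the MWW test: the share of all group
    assignments whose U is at least as far from its null mean as observed."""
    pooled = list(a) + list(b)
    n1, n2 = len(a), len(b)
    centre = n1 * n2 / 2.0  # expected U under the null hypothesis
    observed = abs(mann_whitney_u(a, b) - centre)
    extreme = 0
    total = 0
    for idx in combinations(range(len(pooled)), n1):
        chosen = set(idx)
        group1 = [pooled[i] for i in idx]
        group2 = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        if abs(mann_whitney_u(group1, group2) - centre) >= observed:
            extreme += 1
        total += 1
    return extreme / total


def smallest_attainable_p(n1, n2):
    """Without ties, the most extreme split occurs exactly once in each tail."""
    return 2 / comb(n1 + n2, n1)


if __name__ == "__main__":
    # Hypothetical final fitness values, fully separated between the groups.
    manual = [0.10, 0.12]
    default = [0.30, 0.35, 0.40, 0.45, 0.50]
    print(exact_two_sided_p(manual, default))  # 2/21, about 0.095
    for n2 in (5, 7, 8):
        print(n2, smallest_attainable_p(2, n2))
```

Even with complete separation, the two-run group above cannot reach p < 0.05. Under the same assumptions (exact test, no ties), a group of two needs at least eight observations on the other side before significance at the 5% level is attainable at all: 2/45 ≈ 0.044 with eight, versus 2/36 ≈ 0.056 with seven.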

References

Description of evolution engine parameters. http://guide.gforge.inria.fr/eeparams/EEngineParameters.pdf. Last accessed April 19, 2011.

ETF user manual and cookbook. http://evotest.iti.upv.es. Last accessed April 13, 2011.

GUIDE. http://gforge.inria.fr/projects/guide/. Last accessed April 13, 2011.

Evotest. http://evotest.iti.upv.es (2006). Last accessed April 13, 2011.

Arcuri, A., White, D. R., Clark, J., & Yao, X. (2008). Multi-objective improvement of software using co-evolution and smart seeding. In: X. Li, M. Kirley, M. Zhang, D. G. Green, V. Ciesielski, H. A. Abbass, Z. Michalewicz, T. Hendtlass, K. Deb, K. C. Tan, J. Branke, & Y. Shi (Eds.), Proceedings of the 7th international conference on simulated evolution and learning (SEAL ’08), LNCS (Vol. 5361, pp. 61–70). Melbourne, Australia: Springer.

Baresel, A., Pohlheim, H., & Sadeghipour, S. (2003). Structural and functional sequence test of dynamic and state-based software with evolutionary algorithms. In GECCO (pp. 2428–2441).

Beizer B. (1990). Software testing techniques. London: International Thomson Computer Press.

Briand L. C. (2007). A critical analysis of empirical research in software testing. In: Empirical software engineering and measurement, 2007. First International Symposium on ESEM 2007 (pp. 1–8).

Bühler, O., & Wegener, J. (2004). Automatic testing of an autonomous parking system using evolutionary computation. In Proceedings of SAE 2004 world congress (pp. 115–122).

Bühler, O., & Wegener, J. (2008). Evolutionary functional testing. Computers & Operations Research, 35(10), 3144–3160.

Chan, B., Denzinger, J., Gates, D., Loose, K., & Buchanan, J. (2004). Evolutionary behaviour testing of commercial computer games. In Proceedings of CEC 2004, Portland (pp. 125–132).

DaCosta, L., Fialho, A., Schoenauer, M., & Sebag, M. (2008). Adaptive operator selection with dynamic multi-armed bandits. In Proceedings of the 10th annual conference on genetic and evolutionary computation, GECCO ’08 (pp. 913–920). New York, NY: ACM. DOI http://doi.acm.org/10.1145/1389095.1389272. http://doi.acm.org/10.1145/1389095.1389272.

Fewster, M., & Graham, D. (1999). Software test automation: effective use of test execution tools. New York, NY: ACM Press/Addison-Wesley Publishing Co.

Goldberg, D.~E. (1989). Genetic algorithms in search, optimization and machine learning. Boston: Addison Wesley.

Grochtmann, M., & Wegener, J. (1998). Evolutionary testing of temporal correctness. In: Proceedings of the 2nd international software quality week Europe (QWE 1998). Brussels, Belgium.

Gros, H. G. (2003). Evaluation of dynamic, optimisation-based worst-case execution time analysis. In: Proceedings of the international conference on information technology: Prospects and challenges in the 21st century, (Vol. 1, pp. 8–14).

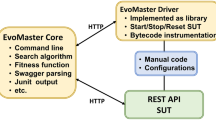

Gross, H., Kruse, P. M., Wegener, J., Vos, T. (2009). Evolutionary white-box software test with the evotest framework: A progress report. In ICSTW ’09: Proceedings of the IEEE international conference on software testing, verification, and validation workshops (pp. 111–120). IEEE Computer Society, Washington, DC, USA.

Harman, M., Hu, L., Hierons, R., Baresel, A., & Sthamer, H. (2002). Improving evolutionary testing by flag removal. In Proceedings of the genetic and evolutionary computation conference (GECCO 2002) (pp. 1233 – 1240). Morgan Kaufmann, New York, USA.

Holland, J.H. (1975). Adaptation in natural and artificial systems. Ann Arbor: University of Michigan Press.

Jones, B., Sthamer, H., & Eyres, D. (1996). Automatic structural testing using genetic algorithms. The Software Engineering Journal, 11(5), 299–306.

Juristo, N., Moreno, A., & Vegas, S. (2004). Reviewing 25 years of testing technique experiments. Journal of Empirical Software Engineering 9(1), 7–44.

Keijzer, M., Merelo, J. J., Romero, G., & Schoenauer, M. (2001). Evolving objects: A general purpose evolutionary computation library. In Artificial evolution (pp. 231–244). http://citeseer.ist.psu.edu/keijzer01evolving.html.

Kitchenham, B. A., Pfleeger, S. L., Pickard, L. M., Jones, P. W., Hoaglin, D. C., Emam, K. E., et al. (2002). Preliminary guidelines for empirical research in software engineering. IEEE Transactions on Software Engineering, 28(8), 721–734.

Klimke, A. (2003) How to access Matlab from Java, IANS report 2003/005. Tech. rep., University of Stuttgart. http://preprints.ians.uni-stuttgart.de.

Kruse, P. M., Wegener, J., & Wappler, S. (2009). A highly configurable test system for evolutionary black-box testing of embedded systems. In GECCO ’09: Proceedings of the 11th annual conference on genetic and evolutionary computation (pp. 1545–1552). New York, NY: ACM.http://doi.acm.org/10.1145/1569901.1570108.

Lethbridge, T. C., Sim, S. E., & Singer, J. (2005). Studying software engineers: Data collection techniques for software field studies. Empirical Software Engineering, 10(3), 311–341.

Lindlar, F., Windisch, A., & Wegener, J. (2010). Integrating model-based testing with evolutionary functional testing. In Proceedings of the 3rd international conference on software testing, verification, and validation workshops (ICSTW 2010) (pp. 163–172). Washington, DC: IEEE Computer Society.

McMinn, P. (2004). Search-based software test data generation: A survey. Software Testing, Verification and Reliability, 14(2), 105–156.

McMinn, P. (2011). Search-based software testing: Past, present and future. In Proceedings of the 4th international workshop on search-based software testing (SBST 2011).

Messina. http://www.berner-mattner.com/en/automotive-messina.php. Last accessed Feb 3, 2010.

Mueller, F., & Wegener, J. (1998). A comparison of static analysis and evolutionary testing for the verification of timing constraints. In RTAS ’98: Proceedings of the 4th IEEE real-time technology and applications symposium (p. 144). Washington, DC: IEEE Computer Society.

Pargas, R. P., Harrold, M. J., & Peck, R. R. (1999). Test-data generation using genetic algorithms. Journal of Software Testing, Verification and Reliability, 9(4), 263–282.

Perry, D. E., Porter, A. A., & Votta, L. G. (2000). Empirical studies of software engineering: A roadmap. In: ICSE ’00: Proceedings of the conference on the future of software engineering, (pp. 345–355). ACM.

Perry, D. E., Sim, S. E., & Easterbrook, S. (2005). Case studies for software engineers. In SEW ’05: Proceedings of the 29th annual IEEE/NASA software engineering workshop—Tutorial notes (pp. 96–159). Washington, DC: IEEE Computer Society.

Pohlheim, H. (2000). Evolutionäre algorithmen: Verfahren, operatoren und hinweise für die Praxis. Springer, Berlin: Heidelberg [u.a.].

Sthamer, H., & Wegener, J. (2002). Using evolutionary testing to improve efficiency and quality in software testing. In Proceedings of 2nd Asia-Pacific conference on software testing.

Tlili, M., Sthamer, H., Wappler, S., & Wegener, J. (2006). Improving evolutionary real-time testing by seeding structural test data. In Proceedings of the congress on evolutionary computation (CEC) (pp. 3227–3233). IEEE.

Tlili, M., Wappler, S., Sthamer, H., & Wegener, J. (2006). Improving evolutionary real-time testing. In Proceedings of the 8th annual conference on genetic and evolutionary computation (GECCO) (pp. 1917–1924). New York: ACM Press.

Tracey, N., Clark, J., Mander, K., & McDermid, J. (2000). Automated test-data generation for exception conditions. Software: Practice and Experience, 30(1), 61–79.

Vos, T., Baars, A., Lindlar, F., Kruse, P., Windisch, A., & Wegener, J. (2010). Industrial scaled automated structural testing with the evolutionary testing tool. In Proceedings of the 3rd international conference on software testing, verification and validation (ICST2010), Paris (France) (pp. 175–184). IEEE Computer Society.

Wegener, J., Buhr, K., & Pohlheim, H. (2002). Automatic test data generation for structural testing of embedded software systems by evolutionary testing. In GECCO ’02: Proceedings of the genetic and evolutionary computation conference (pp. 1233–1240). San Francisco, CA: Morgan Kaufmann Publishers Inc.

Wegener, J., Grimm, K., Grochtmann, M., Sthamer, H., & Jones, B. (1996). Systematic testing of real-time systems. In Proceedings of the 4th European international conference on software testing, analysis and review. Amsterdam, The Netherlands.

Windisch, A., & Al Moubayed, N. (2009). Signal generation for search-based testing of continuous systems. In Proceedings of the 2nd international conference on software testing, verification, and validation workshops (pp. 121–130). Washington, DC: IEEE Computer Society.

Windisch, A., Lindlar, F., Topuz, S., & Wappler, S. (2009). Evolutionary functional testing of continuous control systems. In GECCO ’09: Proceedings of the 11th annual conference on genetic and evolutionary computation (pp. 1943–1944). New York, NY: ACM.

Windisch, A., Lindlar, F., Topuz, S., & Wappler, S. (2009). Evolutionary functional testing of continuous control systems. In Proceedings of the 11th annual conference on genetic and evolutionary computation (GECCO) (pp. 1943–1944). New York, NY: ACM.

Acknowledgments

This work is supported by EU grant IST-33472 (EvoTest). For their support and help, we would like to thank Mark Harman, Kiran Lakhotia, and Youssef Hassoun from King's College London; Marc Schoenauer and Luis da Costa from INRIA; Jochen Hänsel from Fraunhofer FIRST; Dimitar Dimitrov and Ivaylo Spasov from RILA; and Dimitris Togias from European Dynamics.

Appendix A: Questions for informal interviews

Typical questions used in the informal interviews:

1. Was installing and setting up the ETF as easy and quick as you would have liked? What would you improve?

2. Would you recommend the ETF to other testers you know? How?

3. Do you think you could persuade your management to invest in a tool like the ETF?

4. Are there any additional functionalities you would need for the tool to be suitable in your industrial context?

5. Do you have any other suggestions on how the tools could be made more suitable for your industrial context?

6. Do you have any other comments, criticisms, or suggestions relating to the usability (ease of use) of the tools?

7. Do you have confidence in the results of the ETF (perhaps compared with the testing techniques you currently use)?

Cite this article

Vos, T.E.J., Lindlar, F.F., Wilmes, B. et al. Evolutionary functional black-box testing in an industrial setting. Software Qual J 21, 259–288 (2013). https://doi.org/10.1007/s11219-012-9174-y

DOI: https://doi.org/10.1007/s11219-012-9174-y