Abstract

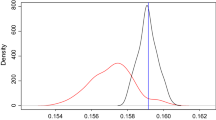

The Metropolis–Hastings algorithm is one of the most basic and well-studied Markov chain Monte Carlo methods. It generates a Markov chain which has as limit distribution the target distribution by simulating observations from a different proposal distribution. A proposed value is accepted with some particular probability otherwise the previous value is repeated. As a consequence, the accepted values are repeated a positive number of times and thus any resulting ergodic mean is, in fact, a weighted average. It turns out that this weighted average is an importance sampling-type estimator with random weights. By the standard theory of importance sampling, replacement of these random weights by their (conditional) expectations leads to more efficient estimators. In this paper we study the estimator arising by replacing the random weights with certain estimators of their conditional expectations. We illustrate by simulations that it is often more efficient than the original estimator while in the case of the independence Metropolis–Hastings and for distributions with finite support we formally prove that it is even better than the “optimal” importance sampling estimator.

Similar content being viewed by others

References

Atchadé, Y.F., Perron, F.: Improving on the independent Metropolis–Hastings algorithm. Stat. Sin. 15, 3–18 (2005)

Billingsley, P.: Statistical methods in Markov chains. Ann. Math. Stat. 32, 12–40 (1961)

Casella, G., Robert, C.: Rao–Blackwellisation of sampling schemes. Biometrika 83, 81–94 (1996)

Douc, R., Robert, C.P.: A vanilla Rao–Blackwellisation of Metropolis–Hastings algorithms. Ann. Stat. 39, 261–277 (2011)

Geyer, C.J.: Practical Markov chain Monte Carlo. Stat. Sci. 7, 473–483 (1992)

Hastings, W.K.: Monte Carlo sampling methods using Markov chains and their applications. Biometrika 57, 97–109 (1970)

Jacob, P., Robert, C.P., Smith, M.: Using parallel computation to improve independent Metropolis–Hastings based estimation. J. Comput. Graph. Stat. 20, 616–635 (2011)

Malefaki, S., Iliopoulos, G.: On convergence of properly weighted samples to the target distribution. J. Stat. Plan. Inference 138, 1210–1225 (2008)

Marin, J.M., Robert, C.P.: Importance sampling methods for Bayesian discrimination between embedded models. In: Chen, M.-H., Dey, D.K., Müller, P., Sun, D., Ye, K. (eds.) Frontiers of Statistical Decision Making and Bayesian Analysis (2010). Chapter 14, pp. 513–553

Metropolis, N., Rosenbluth, A.W., Rosenbluth, M.N., Teller, A.H., Teller, E.: Equation of state calculations by fast computing machines. J. Chem. Phys. 21, 1087–1091 (1953)

Acknowledgements

The authors wish to thank the two anonymous referees for their helpful comments and suggestions which considerably improved the paper.

Author information

Authors and Affiliations

Corresponding author

Appendix: Proof of Theorem 1

Appendix: Proof of Theorem 1

(a) Assume without loss of generality that the state space is \(\mathcal {X}=\{1, \ldots, m\}\) for some m≥2 and set for convenience h k =h(k), π k =π(k), g k =g(k), g kl =g(l|k) and w k =w(k). Then,

By the ergodic theorem it holds \(n^{-1}\sum_{i=1}^{n}I(X_{i}=k)\stackrel {\mathrm{a.s.}}{\longrightarrow}g_{k}\) and \(\sum_{j=1}^{n}\frac{\xi_{j}}{\sum_{l=1}^{n}\xi_{l}}I(X_{j}=\ell )\stackrel{\mathrm{a.s.}}{\longrightarrow}\pi _{\ell}\). Since (5) contains only finite sums we conclude that it converges almost surely to

because \(g_{k} = \kappa\sum_{i=1}^{m} \min\{\pi_{k} g_{k\ell}, \pi _{\ell }g_{\ell k}\}\). By taking h≡1 we get that \(n^{-1}\sum _{i=1}^{n}\hat{w}(X_{i})\stackrel{\mathrm{a.s.}}{\longrightarrow}\kappa \) and thus

(b) Let us define

and

Billingsley (1961) proved that

where \(\bar{\boldsymbol{U}}= (\bar{U}_{1}, \ldots, \bar{U}_{m})^{T}\), g=(g 1,…,g m )T and the ij entry of Σ 11 is

Here \(g_{ij}^{(n)}\) denotes the n-step transition probability from state i to state j and δ ij is Kronecker’s delta. Using similar arguments it can be proven that

where the ij entry of the submatrix Σ 12 is σ 12(ij)=κw j σ 11(ij) while the ij entry of the submatrix Σ 22 is σ 22(ij)=δ ij g i κw i (κw i −1)+κ 2 w i w j σ 11(ij).

It is clear that the asymptotic distribution of \(n^{1/2}\{\hat {h}_{\hat {w}}-E_{\pi}(h)\}\) can be found via the standard delta method. Observe that \(\hat{h}_{\hat{w}}\) can be expressed as

say. By differentiation we get

and

The variance of the asymptotic normal distribution of \(n^{1/2}\{\hat {h}_{\hat{w}}-E_{\pi}(h)\}\) is

where ∇ u f 1, ∇ v f 1 are the vectors containing the derivatives with respect to u, v, respectively, evaluated at (u,v)=(g,κ π). We will see below that the above expression is in fact as stated in the theorem. To this end, let us also consider the IS “estimator” \(\hat {h}_{IS}\) and evaluate its asymptotic variance too. After some algebra we get that

say. By (6) and (7) we see that the only difference between the two “estimators” is the replacement of π l by its unbiased estimate \(\kappa^{-1}\bar{V}_{l}\) in \(\hat {h}_{\hat{w}}\). By differentiation we get

Since the corresponding variance of the importance sampling estimator is

it is clear that

Set now \(A_{1} = - 2\nabla_{\boldsymbol{u}}f_{1}^{T} \boldsymbol{\varSigma } _{12} \nabla_{\boldsymbol{v}}f_{1}\), \(A_{2} = -\nabla_{\boldsymbol{u}}f_{1}^{T} \boldsymbol{\varSigma }_{22} \nabla_{\boldsymbol{u}}f_{1}\), B n =w(X n )h(X n ) for n=0,1,…, and b i =w i h i , μ i =E(B 1|X 0=i) for i=1,…,m. Consider further without loss of generality that E π (h)=0 and note that under stationarity, it holds that E π (h)=E g (B n ) for all n. Then,

But

and since X is reversible, i.e., it holds g i g ij =g j g ji and more generally \(g_{i} g_{ij}^{(n)} = g_{j} g_{ji}^{(n)}\) for all n, we conclude that

Moreover,

and for all n, we have that

Thus,

On the other hand,

But

and for all n,

Hence,

By the standard asymptotic theory for Markov chains \(\sigma ^{2}_{IS}(h)=E_{g}(B_{0}^{2})+2\sum_{n=1}^{\infty}E_{g}(B_{0}B_{n})\). Since \(E_{g}(B_{0}^{2})=\operatorname{Var}_{g}\{w(X_{0})h(X_{0})\}\) part (b) of the theorem follows.

(c) In order to compare the two variances note first that the first term of the sum in (10) is clearly positive. Moreover, we know that \(\sum_{n=2}^{\infty}E_{g}(B_{0}B_{n}) \equiv\sum _{n=2}^{\infty}\mathrm{Cov}_{g}(B_{0},B_{n}) \geq 0\) since each residual sum of autocovariances starting from an even integer is nonnegative (see for example Geyer 1992). Thus a sufficient condition for the variances difference to be positive is E g (B 0 B 1)≥0. This expectation can be expressed as a quadratic form, namely,

where \(\tilde{g}_{ij}=g_{i}g_{ij}\). We will show that in the case of independence MH, the matrix \(\tilde{\boldsymbol{G}}\) is nonnegative definite. Indeed, in this case,

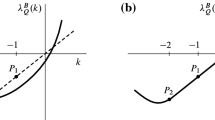

Consider without loss of generality that

and set γ ij =κ −1 q i π j . Then clearly \(\tilde {g}_{ij}=\gamma_{i\wedge j,i\vee j}\) where i∧j=min{i,j} and i∨j=max{i,j} so \(\tilde{\boldsymbol{G}}\) is nonnegative definite. To see that, proceed by induction to show that all its principal minors are nonnegative. Let \(\tilde{\boldsymbol{G}}_{kk}\) denote the matrix consisting of the first k rows and columns of \(\tilde{\boldsymbol{G}}\). Then, \(|\tilde{\boldsymbol{G}}_{11}|\equiv\gamma_{1\wedge1}=\kappa^{-1}q_{1}\pi_{1}>0\). Suppose now that \(|\tilde{\boldsymbol{G}}_{kk}|\geq 0\) for some k≥1. In order to prove that \(|\tilde{\boldsymbol{G}}_{k+1,k+1}|\geq 0\), multiply the k-th row of \(\tilde{\boldsymbol{G}}_{k+1,k+1}\) by π k+1/π k and subtract it from the (k+1)-th. Then the resulting matrix has all last row’s elements equal to zero except from the last one which is \(\kappa^{-1}q_{k+1}\pi_{k+1}-\kappa^{-1}q_{k}\pi _{k+1}^{2}/\pi _{k}=\kappa^{-1}\pi_{k+1}^{2}(q_{k+1}/\pi_{k+1}-q_{k}/\pi_{k})\). By (11) this quantity is nonnegative thus, \(|\tilde {\boldsymbol{G}}_{k+1,k+1}|= \kappa^{-1}\pi_{k+1}^{2}(q_{k+1}/\pi_{k+1}- q_{k}/\pi _{k})|\tilde{\boldsymbol{G}}_{kk}|\geq 0\).

Rights and permissions

About this article

Cite this article

Iliopoulos, G., Malefaki, S. Variance reduction of estimators arising from Metropolis–Hastings algorithms. Stat Comput 23, 577–587 (2013). https://doi.org/10.1007/s11222-012-9331-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11222-012-9331-y