Abstract

In virtualized environments, multiprocessor virtual machines suffer from synchronization problems such as lock holder preemption (LHP) and lock waiter preemption (LWP). When either occurs, a virtual CPU (VCPU) waiting for the affected lock spins for an extraordinarily long time and wastes a large number of CPU cycles, significantly degrading system performance. Recent research addressing this issue has several shortcomings. To overcome them, this paper proposes an efficient lock-aware virtual machine scheduling scheme that avoids LHP and LWP. Our approach detects lock holders and waiters from the virtual machine monitor (VMM) side and gives preempted lock holders and waiters multiple, continuous, extra scheduling chances to release their locks, ensuring with high probability that de-scheduled VCPUs are neither lock holders nor waiters. We implement a Xen-based prototype and evaluate it with lock-intensive workloads. The experimental results demonstrate that our scheduling scheme fundamentally eliminates lock holder preemptions and lock waiter preemptions.


1 Introduction

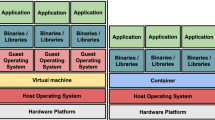

Virtualization technology has been widely used in modern data centers. It allows a large number of virtual machines (VMs) to be consolidated onto limited physical resources to improve resource utilization. Meanwhile, with advances in hardware technology, more and more physical resources, particularly CPU resources, are integrated into a single machine. With the increasing demand for VM computing power, multiprocessor (MP) VMs are becoming increasingly widely used. However, MP VMs introduce serious synchronization issues such as lock holder preemption (LHP) [1] and lock waiter preemption (LWP) [2], resulting in significant degradation of system performance.

Recent research has attempted to eliminate the impact of these issues from different angles, such as co-scheduling [3, 4], lock waiter yielding [5–8], and LHP and LWP avoidance [1, 9, 10]. However, these solutions have some shortcomings. Therefore, in this paper, we propose an efficient lock-aware virtual machine scheduling scheme to avoid LHP and LWP. Lock holders and waiters are detected in the virtual machine monitor (VMM), which does not require guest kernel modifications. When a virtual CPU (VCPU) about to be de-scheduled is detected as a lock holder or waiter, we give it an extra scheduling chance to release its locks. During this extra scheduling, the VCPU may acquire other locks after releasing its current ones, so it may again be de-scheduled while holding other locks. We therefore keep scheduling such a VCPU until it holds no lock at the moment it is de-scheduled. In this way, our scheduling scheme ensures with high probability that de-scheduled VCPUs are neither lock holders nor waiters.

The rest of the paper is organized as follows. We discuss background and related work in Sect. 2 followed by the design of our scheduling scheme in Sect. 3. We present our evaluation results in Sect. 4. Then we discuss some possible extensions in Sect. 5. We conclude the paper in Sect. 6.

2 Background and related work

In this section, we describe the lock holder preemption issue and lock waiter preemption issue. Then we discuss the inadequacy of existing solutions.

2.1 Lock holder preemption and lock waiter preemption

Lock holder preemption Spinlocks assume that the lock holder is never preempted. In virtualized environments this assumption no longer holds, because a VCPU can be preempted at any time. When a VCPU is preempted while holding locks, other VCPUs trying to acquire those locks spin and wait until the preempted VCPU is scheduled back and releases the locks. This results in extraordinarily long lock waiting times and wastes many CPU cycles. This issue is called lock holder preemption.

Lock waiter preemption The LWP issue, first identified in [2], occurs when ticket spinlocks [11] are used in virtualized environments. Ticket spinlocks grant the lock in FIFO order: for instance, if thread A holds the lock, and then thread B and thread C try to acquire it in that order, thread B is guaranteed to get the lock before thread C. In virtualized environments, if the VCPU running thread B is preempted, the VCPU running thread C has to spin until the VCPU running thread B is scheduled back, acquires the lock and releases it, which wastes CPU cycles. This issue is called lock waiter preemption.
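The FIFO hand-off that causes LWP can be seen in a minimal, single-threaded model of a ticket spinlock. This is an illustrative sketch, not the Linux implementation (which updates a shared lock word atomically); the class and method names are hypothetical.

```python
class TicketLock:
    """Minimal model of a ticket spinlock: FIFO hand-off via two counters."""

    def __init__(self):
        self.next_ticket = 0  # ticket handed to the next arriving thread
        self.now_serving = 0  # ticket that currently owns the lock

    def take_ticket(self):
        ticket = self.next_ticket
        self.next_ticket += 1
        return ticket

    def can_enter(self, ticket):
        """A waiter spins until this returns True."""
        return self.now_serving == ticket

    def unlock(self):
        self.now_serving += 1  # pass ownership to the next ticket in line


lock = TicketLock()
a, b, c = lock.take_ticket(), lock.take_ticket(), lock.take_ticket()  # A holds
lock.unlock()  # A releases; ownership passes to B's ticket, not to "whoever is running"
# If B's VCPU is preempted now, C still cannot enter: lock waiter preemption.
print(lock.can_enter(b), lock.can_enter(c))  # True False
```

Because ownership is tied to the ticket rather than to whichever waiter happens to be running, a preempted waiter blocks every waiter queued behind it.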

2.2 Problems with alternative solutions

Co-scheduling The co-scheduling approach, derived from gang scheduling [3], lets the VMM schedule all or some of the VCPUs of a VM simultaneously to eliminate LHP and LWP. Building on this idea, several studies propose improvements [12–14]. They classify VMs into two categories: high-throughput VMs and concurrent VMs. Concurrent VMs are scheduled with a co-scheduling strategy, and high-throughput VMs with an asynchronous scheduling strategy. However, co-scheduling can cause CPU fragmentation, priority inversion and execution delay.

Lock waiter yielding As mentioned above, when LHP or LWP occurs, other VCPUs trying to acquire the affected lock spin and wait for an extraordinarily long time. Lock waiter yielding uses this behavior to detect lock waiters: once the detector finds that a VCPU has been spinning for a certain amount of time, it considers the VCPU a lock waiter and makes it yield its physical CPU, avoiding wasted CPU cycles. Although yielding the CPU to another VCPU saves CPU time, it delays the execution of the yielded VCPU and degrades performance. Moreover, when a lock waiter yields, the scheduler must carefully choose which VCPU should run in its place; if it yields to another spinning VCPU, performance degrades severely.

LHP and LWP avoidance This approach ensures that no de-scheduled VCPU is holding or waiting for any lock, so total system performance is not diminished. This is the ideal approach. However, existing research along this line addresses only the LHP issue and cannot handle the LWP issue. Moreover, existing solutions either require guest kernel modifications [10] or need complicated configuration [1].

Preemptable ticket spinlocks This approach provides a new lock primitive [2] that alleviates the LWP issue by relaxing the ordering guarantees offered by ticket locks. However, it cannot handle the LHP issue.

3 Design of lock-aware scheduling scheme

Because existing solutions have these shortcomings, we propose an efficient lock-aware virtual machine scheduling scheme to avoid LHP and LWP. Our scheme is inspired by the scheduler in native OS environments, so we first describe how that scheduler avoids preempting lock holders and waiters.

3.1 LHP and LWP avoidance in native OS environments

In native OS environments, the kernel keeps a \(preempt\_count\) for each thread in its \(thread\_info\) structure. The scheduler uses \(preempt\_count\) to determine whether a thread is preemptible. If \(preempt\_count\) is 0, the thread can safely be preempted by other threads; otherwise, the thread holds some system resource, such as a spinlock, and it is not safe to preempt it. In a preemptive kernel (discussed further in Sect. 5), whenever an external event such as a clock interrupt triggers scheduling, the scheduler first checks \(preempt\_count\) of the current thread. If it is 0, the scheduler schedules out the current thread; otherwise, the current thread keeps running. For spinlock operations, a thread increments its \(preempt\_count\) by 1 before acquiring a lock and decrements it by 1 after releasing the lock. Therefore, \(preempt\_count\) of a thread holding one or more locks is always nonzero, and the scheduler cannot schedule out such a thread, which avoids LHP. Moreover, because \(preempt\_count\) is incremented before the lock is acquired, \(preempt\_count\) of a thread waiting for a lock is also nonzero, so lock waiters cannot be preempted either, which avoids LWP.
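The ordering described above can be illustrated with a small Python model. It is a simplified sketch, not kernel code: the names `Thread`, `SpinLock`, `spin_lock`, `try_acquire`, `spin_unlock` and `may_preempt` are hypothetical, and a real kernel packs several counters (preemption, softirq, hardirq) into `preempt_count`. What it shows is that raising the counter before the acquisition attempt and lowering it only after release makes both holders and waiters look non-preemptible.

```python
class Thread:
    """Hypothetical stand-in for a kernel thread and its preempt_count."""

    def __init__(self, name):
        self.name = name
        self.preempt_count = 0  # 0 means "safe to preempt"


class SpinLock:
    def __init__(self):
        self.owner = None


def try_acquire(thread, lock):
    """One acquisition attempt; a spinning waiter calls this repeatedly."""
    if lock.owner is None:
        lock.owner = thread
        return True
    return False


def spin_lock(thread, lock):
    """Enter the lock path. preempt_count is raised *before* the first
    attempt, so a thread that is still spinning (a lock waiter) is already
    non-preemptible. Returns True if the lock was taken immediately."""
    thread.preempt_count += 1
    return try_acquire(thread, lock)


def spin_unlock(thread, lock):
    """Release the lock first, then lower preempt_count."""
    lock.owner = None
    thread.preempt_count -= 1


def may_preempt(thread):
    """The check a preemptive scheduler makes on a clock interrupt."""
    return thread.preempt_count == 0
```

For example, after `spin_lock(a, lk)` succeeds for thread `a`, a second thread `b` calling `spin_lock(b, lk)` gets `False` and keeps spinning, yet `may_preempt(b)` is already `False`; only after `spin_unlock(a, lk)` does `may_preempt(a)` return `True` again.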

\(preempt\_count\) is a field of the \(thread\_info\) structure, which is allocated at the bottom of the kernel stack. Therefore, the address of \(preempt\_count\) can easily be calculated from the ESP register. We use \(preempt\_count\) to detect lock holders and waiters in the VMM.
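A minimal sketch of the address calculation, assuming a 32-bit x86 guest whose kernel stack is `THREAD_SIZE`-aligned. `THREAD_SIZE`, `PREEMPT_COUNT_OFFSET` and the dictionary used as guest memory are assumptions for illustration; a real VMM must take both constants from the guest kernel's build and read guest memory through its own address-translation routines. The calculation is only valid when the VCPU was interrupted in kernel mode, since only then does ESP point into the kernel stack.

```python
# Assumed values for a 32-bit x86 guest; both depend on the guest kernel build.
THREAD_SIZE = 8192           # assumed kernel stack size (8 KiB)
PREEMPT_COUNT_OFFSET = 0x14  # hypothetical byte offset of preempt_count in thread_info


def thread_info_base(esp, thread_size=THREAD_SIZE):
    """thread_info sits at the lowest address of the THREAD_SIZE-aligned
    kernel stack, so masking off the low bits of ESP yields its address.
    Only meaningful if the VCPU was interrupted in kernel mode."""
    return esp & ~(thread_size - 1)


def read_preempt_count(esp, guest_memory, offset=PREEMPT_COUNT_OFFSET):
    """guest_memory is a dict {guest virtual address: value}, standing in
    for whatever routine the VMM uses to read guest memory."""
    return guest_memory[thread_info_base(esp) + offset]
```

Any ESP value inside the same 8 KiB stack, for example `0xC1234F10` or `0xC1235FFC`, maps to the same `thread_info` base, `0xC1234000`.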

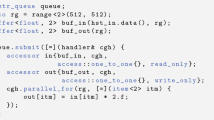

3.2 Lock awareness in the VMM

This section describes how to identify lock holders and waiters from the \(preempt\_count\) of a VCPU’s current thread. We first conduct an experiment to study the relationship between \(preempt\_count\) and a VCPU’s lock state (holding, waiting, or other). To monitor real spinlock usage, we instrumented the Linux spinlock implementation in the guest kernel with a counter that indicates the lock state of the current thread. In the VMM, we obtain \(preempt\_count\) of the current thread as described in Sect. 3.1. Whenever the VMM schedules out a VCPU, we record the lock state of the thread currently running on the VCPU together with its \(preempt\_count\). We find that most VCPUs with a nonzero \(preempt\_count\) are holding or waiting for locks, and that the value of \(preempt\_count\) roughly reflects the number of locks the VCPU is holding. Therefore, once we detect that \(preempt\_count\) of a VCPU being de-scheduled is nonzero, we consider the VCPU a potential lock holder or waiter.
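The measurement can be summarized as the precision of the rule "nonzero \(preempt\_count\) implies a potential lock holder or waiter". The sketch below is hypothetical: the function names and the `'holding'`/`'waiting'`/`'other'` labels are assumptions about how the instrumented guest reports lock state, and it does not reproduce the paper's measurements.

```python
from collections import Counter


def is_potential_lock_holder_or_waiter(preempt_count):
    """Detection rule used by the VMM: any nonzero preempt_count."""
    return preempt_count != 0


def rule_precision(samples):
    """samples: (lock_state, preempt_count) pairs recorded at de-scheduling
    time, where lock_state is 'holding', 'waiting' or 'other' as reported by
    the instrumented guest. Returns the fraction of flagged samples
    (nonzero preempt_count) that really were holding or waiting for a lock."""
    flagged = Counter(state for state, count in samples
                      if is_potential_lock_holder_or_waiter(count))
    total = sum(flagged.values())
    if total == 0:
        return 0.0
    return (flagged["holding"] + flagged["waiting"]) / total
```

Samples recorded with a zero `preempt_count` are ignored; they are never flagged, so they do not affect the rule's precision.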

However, there is an exception: some VCPUs have a nonzero \(preempt\_count\) even though they are neither holding nor waiting for any lock. This is because \(preempt\_count\) is not used only for spinlocks. In other non-preemptible contexts, such as interrupt processing, page fault handling and temporary kernel mappings, \(preempt\_count\) is also set to a nonzero value to prevent preemption. To distinguish these situations from LHP and LWP, we studied \(preempt\_count\) in depth and found that the \(preempt\_count\) of a thread outside spinlock-related code remains unchanged for a very long time and changes much less frequently than that of a thread holding or waiting for locks. Our scheduling scheme uses this property to detect such exceptions, as described in the next section.

3.3 Lock-aware virtual machine scheduling scheme

To avoid LHP and LWP, the VMM scheduler must be able to determine whether the thread currently running on a VCPU is holding or waiting for locks. VCPUs that are holding or waiting for locks should not be preempted; we refer to them as unpreemptible-VCPUs and to all other VCPUs as preemptible-VCPUs. Before a VCPU is scheduled out, the scheduler checks whether it is an unpreemptible-VCPU. If so, the VCPU is granted an extra time slice. To keep scheduling as fair as possible, the VCPU should be scheduled out as soon as it has released all its locks, at which point it becomes a preemptible-VCPU. However, because of the semantic gap between guest operating systems and the VMM, it is difficult to determine the exact extra time a VCPU needs to release its locks. If the extra time slice is too short for the VCPU to release its locks, scheduling efficiency suffers. To ensure that the VCPU releases all its locks during the extra slice, our scheduling scheme makes the extra time slice longer than the lock holding time. We denote the size of the extra scheduling time slice by \(T_\mathrm{extra}\), which is further discussed in Sect. 3.4.

Because \(T_\mathrm{extra}\) is longer than the lock holding time, an unpreemptible-VCPU usually has time left in its extra slice after releasing its locks. During that remaining time it may acquire or wait for other locks, so it may still be an unpreemptible-VCPU when its extra time runs out. It is therefore necessary to keep giving the VCPU extra scheduling chances until it turns into a preemptible-VCPU. Figure 1 illustrates the resulting scheduling sequence with multiple, continuous, extra scheduling. VCPU\(_1\) is scheduled first. At time \(t_1\), VCPU\(_1\) runs out of its normal time and is identified as an unpreemptible-VCPU by our scheduler, so it gets an extra scheduling chance with a time slice of \(T_\mathrm{extra}\). When VCPU\(_1\) runs out of its extra time at \(t_2\), it is still an unpreemptible-VCPU and gets another extra slice of \(T_\mathrm{extra}\). The same happens at \(t_3\) and \(t_4\). After four continuous extra slices, VCPU\(_1\) turns into a preemptible-VCPU at \(t_5\), at which point we schedule out VCPU\(_1\) and schedule in VCPU\(_2\). VCPU\(_2\) is handled in the same way: it runs out of its normal time at \(t_6\) and is identified as an unpreemptible-VCPU; after three continuous extra slices, it turns into a preemptible-VCPU at \(t_7\). We then schedule out VCPU\(_2\) and schedule in VCPU\(_3\).
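A sketch of the unbounded form of multiple, continuous, extra scheduling described above. The function name and the callback interface are hypothetical, and times are in microseconds; the 30 ms credit-scheduler slice and the 100 µs \(T_\mathrm{extra}\) chosen later in Sect. 4.1 serve only as example values. The last lines replay VCPU\(_1\) from Fig. 1.

```python
def run_with_extra_slices(unpreemptible_at_end, t_normal_us, t_extra_us):
    """Simulate one run of a VCPU under unbounded extra scheduling.

    unpreemptible_at_end(k) tells whether the VCPU is still an
    unpreemptible-VCPU at the end of slice k (k = 0 is the normal slice,
    k >= 1 are extra slices). Returns (extra_slices, total_run_time_us).
    Without an upper bound this loop may never terminate.
    """
    extra_slices = 0
    while unpreemptible_at_end(extra_slices):
        extra_slices += 1
    return extra_slices, t_normal_us + extra_slices * t_extra_us


# Replay VCPU_1 from Fig. 1: unpreemptible at t1..t4, preemptible at t5.
states = [True, True, True, True, False]
print(run_with_extra_slices(lambda k: states[k], 30_000, 100))  # (4, 30400)
```

The comment in the docstring is the point of the next paragraph: a VCPU that is unpreemptible at every slice end would loop forever, which is why an upper bound is needed.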

If a VCPU acquires and releases locks so frequently that it happens to hold or wait for a lock every time its slice expires, it would never be scheduled out, violating a basic principle of virtualization. To address this, we set an upper bound on the number of continuous extra scheduling rounds without hurting performance. First, let us analyze the probability that an unpreemptible-VCPU turns into a preemptible-VCPU within n continuous extra scheduling rounds. We assume that whether a VCPU is an unpreemptible-VCPU at the end of each extra time slice is independent of the other slices, so this probability is the same at the end of every extra slice. We denote it by \(p\ (0 \le p \le 1)\), and denote by \(P_n\) the probability that a VCPU remains an unpreemptible-VCPU at the end of each of n continuous extra slices. Then:

\[ P_n = p^n \tag{1} \]

Note that \(P_n\) is also the probability that an unpreemptible-VCPU has not turned into a preemptible-VCPU after n continuous extra scheduling rounds. In other words, the probability that it turns into a preemptible-VCPU within n continuous extra scheduling rounds is \((1-P_n)\). Therefore, if we set n as the upper bound on the number of continuous extra scheduling rounds, our scheduling scheme avoids LHP and LWP with probability \((1-P_n)\). Conversely, if we fix the required probability of avoiding LHP and LWP, we can calculate the minimum upper bound. Let UB\(_\mathrm{extra}\) denote the upper bound on the number of continuous extra scheduling rounds, and let P denote the required probability of avoiding LHP and LWP. Requiring \(1-p^{\mathrm{UB}_\mathrm{extra}} \ge P\) with \(0<p<1\) and \(0<P<1\), and taking the smallest such integer, we obtain from Eq. (1):

\[ \mathrm{UB}_\mathrm{extra} = \left\lceil \frac{\ln(1-P)}{\ln p} \right\rceil \tag{2} \]

For example, if \(p=0.5\) and we require a 99.8 % probability of avoiding LHP and LWP, the minimum upper bound given by Eq. (2) is 9; for 99.9 %, it is 10. Hence, with an appropriate UB\(_\mathrm{extra}\), multiple, continuous, extra scheduling ensures with high probability that de-scheduled VCPUs are neither lock holders nor waiters. Because p varies across workloads and configurations, choosing the minimum UB\(_\mathrm{extra}\) by Eq. (2) is somewhat inconvenient. For simplicity, we determine UB\(_\mathrm{extra}\) experimentally in Sect. 4.1.
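The worked example can be checked numerically. This sketch finds the smallest n satisfying \(1-p^n \ge P\) under the independence assumption stated above; the function names are illustrative, and the two correction loops only guard against floating-point rounding when the ratio of logarithms lands exactly on an integer.

```python
import math


def avoidance_probability(p, n):
    """1 - p**n: chance the VCPU becomes preemptible within n extra slices."""
    return 1 - p ** n


def min_upper_bound(p, target):
    """Smallest integer n with 1 - p**n >= target.
    Assumes 0 < p < 1 and 0 < target < 1."""
    n = max(1, math.ceil(math.log(1 - target) / math.log(p)))
    # Correct possible floating-point rounding around exact powers of p.
    while avoidance_probability(p, n) < target:
        n += 1
    while n > 1 and avoidance_probability(p, n - 1) >= target:
        n -= 1
    return n


print(min_upper_bound(0.5, 0.998))  # 9
print(min_upper_bound(0.5, 0.999))  # 10
```

With n = 9 the avoidance probability is 1 - 1/512 ≈ 99.80 %, and with n = 10 it is 1 - 1/1024 ≈ 99.90 %, matching the example.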

Another issue concerns our lock-aware approach. As mentioned in Sect. 3.2, a nonzero \(preempt\_count\) does not necessarily mean that the VCPU is holding or waiting for locks, so the two cases must be distinguished. For convenience, we refer to these “exception” VCPUs as exception-VCPUs. Since a single nonzero \(preempt\_count\) sample cannot reveal whether a VCPU is an exception-VCPU, we treat all VCPUs with nonzero \(preempt\_count\) as potential unpreemptible-VCPUs and give them multiple, continuous, extra scheduling chances. As also noted in Sect. 3.2, exception-VCPUs keep \(preempt\_count\) unchanged for a very long time, so their \(preempt\_count\) is found unchanged at the end of each extra time slice. We define the unchanged count as the number of consecutive extra time slices at whose end \(preempt\_count\) has remained unchanged. To identify exception-VCPUs, we set an upper bound on the unchanged count, denoted UB\(_\mathrm{unchanged}\). Once the unchanged count of a VCPU exceeds UB\(_\mathrm{unchanged}\), we consider the VCPU an exception-VCPU and schedule it out. To find such VCPUs as soon as possible, UB\(_\mathrm{unchanged}\) should be as small as possible. However, as discussed above, a VCPU may remain a lock holder or waiter across several extra slices, and its \(preempt\_count\) may stay unchanged for several consecutive slices; if UB\(_\mathrm{unchanged}\) is too small, such lock holders and waiters are mistaken for exception-VCPUs and performance degrades. Hence, UB\(_\mathrm{unchanged}\) must be chosen carefully; we determine it experimentally in Sect. 4.1.
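Putting the two upper bounds together, the per-slice decision can be sketched as follows. This is an illustrative model, not the prototype's Xen code: the state fields and function names are hypothetical, and treating a VCPU as an exception-VCPU once its unchanged count *reaches* UB\(_\mathrm{unchanged}\) (rather than strictly exceeds it) is an assumption, chosen so that a VCPU with a constant nonzero `preempt_count` receives exactly UB\(_\mathrm{unchanged}\) extra slices. The defaults 30 and 10 are the values chosen in Sect. 4.1.

```python
from dataclasses import dataclass


@dataclass
class ExtraSchedState:
    extra_count: int = 0      # extra slices granted in the current run
    unchanged_count: int = 0  # consecutive extra-slice ends with the same preempt_count
    last_count: int = 0       # preempt_count seen at the previous slice end


def grant_extra_slice(state, preempt_count, ub_extra, ub_unchanged):
    """Decide, at the end of a normal or extra slice, whether to grant one
    more extra slice (True) or to de-schedule the VCPU (False)."""
    if preempt_count == 0:
        grant = False  # preemptible-VCPU
    else:
        if state.extra_count > 0 and preempt_count == state.last_count:
            state.unchanged_count += 1
        else:
            state.unchanged_count = 0
        if state.unchanged_count >= ub_unchanged:
            grant = False  # treated as an exception-VCPU
        elif state.extra_count >= ub_extra:
            grant = False  # UB_extra reached
        else:
            grant = True
    state.last_count = preempt_count
    if grant:
        state.extra_count += 1
    else:
        state.extra_count = 0  # start fresh on the VCPU's next run
        state.unchanged_count = 0
    return grant


def extra_slices_granted(counts, ub_extra=30, ub_unchanged=10):
    """counts[k] is preempt_count at the end of slice k (k = 0: normal slice)."""
    state = ExtraSchedState()
    granted = 0
    for c in counts:
        if not grant_extra_slice(state, c, ub_extra, ub_unchanged):
            break
        granted += 1
    return granted
```

A lock holder whose `preempt_count` keeps changing is stopped only by UB\(_\mathrm{extra}\), while a VCPU stuck at a constant nonzero value is stopped by UB\(_\mathrm{unchanged}\) much earlier.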

3.4 Scheduling fairness

This section presents a technique for maintaining scheduling fairness. We first consider the scheduling parameter \(T_\mathrm{extra}\). As mentioned in Sect. 3.3, to ensure that unpreemptible-VCPUs release all their locks within one extra time slice, \(T_\mathrm{extra}\) should be longer than the lock holding time. However, an overly large value, such as 10 ms, makes it hard to balance scheduling fairness and performance, for the following reason. It is multiple, continuous, extra scheduling that ensures, with a given probability, that de-scheduled VCPUs are neither lock holders nor waiters. Good performance requires a high probability and therefore a large UB\(_\mathrm{extra}\). In the example in Sect. 3.3, guaranteeing a probability of 99.9 % requires allowing a potential unpreemptible-VCPU up to 10 continuous extra slices. With \(T_\mathrm{extra}\) = 10 ms, such a VCPU could receive an extra 100 ms, much longer than a normal time slice (30 ms in Xen’s credit scheduler). Reducing UB\(_\mathrm{extra}\) instead lowers the probability of avoiding LHP and LWP and thus degrades performance. Hence, \(T_\mathrm{extra}\) should be as small as possible while still exceeding the lock holding time. \(T_\mathrm{extra}\) is discussed further in Sect. 4.1.
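The trade-off is simple arithmetic: the worst-case extra time per run is \(T_\mathrm{extra}\) multiplied by UB\(_\mathrm{extra}\). The sketch compares the 10 ms example above with the 100 µs and 30 setting later chosen in Sect. 4.1; the function name is illustrative.

```python
def worst_case_extra_ms(t_extra_us, ub_extra):
    """Upper bound on extra CPU time one VCPU can receive in a single run."""
    return t_extra_us * ub_extra / 1000


CREDIT_SLICE_MS = 30  # default time slice of Xen's credit scheduler

for t_extra_us, ub_extra in [(10_000, 10), (100, 30)]:
    extra = worst_case_extra_ms(t_extra_us, ub_extra)
    print(f"T_extra={t_extra_us} us, UB_extra={ub_extra}: "
          f"{extra:.1f} ms extra vs {CREDIT_SLICE_MS} ms normal slice")
```

Shrinking \(T_\mathrm{extra}\) by a factor of 100 lets UB\(_\mathrm{extra}\) triple while the worst case drops from 100 ms to 3 ms, a tenth of a normal slice.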

However, even if \(T_\mathrm{extra}\) is very small, potential unpreemptible-VCPUs still gain more CPU time than the others because our scheme assigns them extra time slices. To eliminate this unfairness, we divide the scheduling time of a potential unpreemptible-VCPU into two components: the extra time component, which is the total extra scheduling time, and the normal time component, which is the normal scheduling time. When the VCPU is scheduled out, we record its extra time component; the next time it is scheduled in, we subtract that amount from its normal time slice. The scheduling sequence is illustrated in Fig. 2, where T is the default average scheduling time slice of each VCPU.
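A minimal sketch of this accounting, with times in microseconds. The class and method names are hypothetical, and clamping the next normal slice at zero is an assumption of the sketch (with the parameters of Sect. 4.1 the extra time component never exceeds a 30 ms slice).

```python
from dataclasses import dataclass


@dataclass
class SliceAccount:
    default_slice_us: int   # T in Fig. 2
    last_extra_us: int = 0  # extra time component of the previous run

    def record_schedule_out(self, extra_slices, t_extra_us):
        """Remember how much extra time this run consumed."""
        self.last_extra_us = extra_slices * t_extra_us

    def next_normal_slice(self):
        """Normal slice for the next run: T minus last run's extra time.
        Clamping at zero is an assumption of this sketch."""
        slice_us = max(0, self.default_slice_us - self.last_extra_us)
        self.last_extra_us = 0
        return slice_us


acct = SliceAccount(default_slice_us=30_000)
first = acct.next_normal_slice()   # 30000
acct.record_schedule_out(4, 100)   # run 1 used first + 400 us of extra time
second = acct.next_normal_slice()  # 29600
print(first + 4 * 100 + second)    # 60000, i.e. 2 * T over the two runs
```

The extra time is repaid on the very next run, so over the two runs the VCPU receives exactly 2T.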

4 Evaluation

In this section, we present detailed results evaluating our scheduling scheme. We compare our scheduling scheme, LAVMS (lock-aware virtual machine scheduling), with paravirtual spinlocks (PVLOCK), a lock waiter yielding approach, and with a standard system that has no optimization for LHP or LWP. We first determine the scheduling parameters of LAVMS and then compare the performance of the three schemes on different benchmarks.

Our test system has one 2.0 GHz quad-core Intel Xeon E5405 processor. It runs Xen 4.3.2, with Linux 3.12.10 in all VMs. Each VM is allocated 1 GB of RAM and 4 VCPUs. We use four benchmarks: webbench [15], ebizzy [16], hackbench [17] and kernbench [18].

4.1 Determining scheduling parameters

This section studies the impact of our three scheduling parameters on system performance using ebizzy and webbench. We run five VMs simultaneously, each running the same benchmark, and run each parameter configuration for 5 minutes. We record the number of preempted lock holders and measure the corresponding performance.

Determining \(T_\mathrm{extra}\) To eliminate the influence of UB\(_\mathrm{unchanged}\) and UB\(_\mathrm{extra}\) on the results, we set both parameters to large values: UB\(_\mathrm{unchanged}\) = 10 and UB\(_\mathrm{extra}\) = 30. We vary \(T_\mathrm{extra}\) from 10 to 100 \(\upmu \)s. The results are illustrated in Fig. 3. For both benchmarks, all values of \(T_\mathrm{extra}\) yield the same performance and the same number of preempted lock holders, because the extra time slice is long enough for an unpreemptible-VCPU to release all its locks. Even if the VCPU acquires other locks during the extra slice, the large UB\(_\mathrm{unchanged}\) and UB\(_\mathrm{extra}\) ensure with high probability that the VCPU is neither a lock holder nor a waiter when it is scheduled out. Since this process is independent of \(T_\mathrm{extra}\), \(T_\mathrm{extra}\) hardly affects performance, and any value between 10 and 100 \(\upmu \)s is acceptable. Because frequent traps into the VMM reduce system efficiency and incur high overhead, we set \(T_\mathrm{extra}\) to 100 \(\upmu \)s, the largest value tested, in the remaining experiments.

Determining UB\(_\mathrm{unchanged}\) In this test, we set UB\(_\mathrm{extra}\) to 30 and \(T_\mathrm{extra}\) to 100 \(\upmu \)s, and vary UB\(_\mathrm{unchanged}\) from 1 to 10. The results are illustrated in Fig. 4. When UB\(_\mathrm{unchanged}\) is 1, many lock holders and waiters are mistaken for exception-VCPUs and de-scheduled, causing many lock holder and lock waiter preemptions. As UB\(_\mathrm{unchanged}\) increases, such mistakes become rarer and performance improves. Performance first reaches its optimum at a workload-specific value, 6 for webbench and 9 for ebizzy in our test, and remains stable as UB\(_\mathrm{unchanged}\) increases further. Ideally, UB\(_\mathrm{unchanged}\) would be set to this workload-specific value; for convenience, however, we set it to 10 in all remaining experiments, regardless of benchmark or workload.

Determining UB\(_\mathrm{extra}\) In this test, we do not vary UB\(_\mathrm{extra}\); instead, we fix it at 30. Following the previous experiments, \(T_\mathrm{extra}\) is set to 100 \(\upmu \)s and UB\(_\mathrm{unchanged}\) to 10. We measure the distribution of the number of continuous extra slices a VCPU receives. The results are illustrated in Fig. 5. Most VCPUs receive fewer than 10 extra slices, which means that most unpreemptible-VCPUs turn into preemptible-VCPUs within 10 continuous extra slices. Note that many VCPUs receive exactly 10 extra slices. These are likely exception-VCPUs, i.e., preemptible-VCPUs whose \(preempt\_count\) is nonzero and unchanged; once their unchanged count reaches UB\(_\mathrm{unchanged}\), our scheduling scheme de-schedules them. Because \(T_\mathrm{extra}\) is small, a relatively large UB\(_\mathrm{extra}\) is affordable, so we set UB\(_\mathrm{extra}\) to 30 in the remaining experiments.

4.2 Performance

As the VMs share the underlying physical resources, per-VM performance inevitably decreases as the number of VMs increases. Ignoring the impact of LHP and LWP, the performance of each VM (in terms of throughput or the reciprocal of completion time) is inversely proportional to the number of VMs. Concretely, the performance of each VM multiplied by the number of VMs equals a constant, namely the maximum total system performance. For example, if a single VM achieves a throughput of 120 Mb/s, two VMs should each achieve 60 Mb/s, and three VMs 40 Mb/s each. However, if a scheduling scheme cannot effectively reduce the impact of LHP and LWP, the execution delay and wasted CPU cycles they cause break this inverse proportionality, and total system performance degrades as the number of competing VMs increases. Total system performance can therefore be used to measure how effectively an approach reduces the impact of LHP and LWP. This section compares the performance of the scheduling schemes introduced above.
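The metric can be made concrete with the 120 Mb/s example above. A small sketch; the function names are illustrative, and `scaling_efficiency` is simply total system performance normalized by the one-VM case.

```python
def ideal_per_vm(single_vm_perf, n_vms):
    """Per-VM performance if VMs share resources perfectly (no LHP/LWP)."""
    return single_vm_perf / n_vms


def scaling_efficiency(single_vm_perf, per_vm_perf, n_vms):
    """Total system performance relative to the one-VM case; 1.0 means
    undiminished. For completion-time benchmarks, pass reciprocals."""
    return per_vm_perf * n_vms / single_vm_perf


print([ideal_per_vm(120, n) for n in (1, 2, 3)])  # [120.0, 60.0, 40.0]
```

A scheme that lets LHP and LWP waste CPU cycles shows up as an efficiency below 1.0; for instance, three VMs at 30 Mb/s each give 0.75.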

To compare performance across scenarios, we vary the number of VMs sharing the underlying physical resources from 1 to 5, with each VM running the same benchmark simultaneously. For each of the four benchmarks, we run each configuration 10 times and calculate the average performance of each VM. The results are illustrated in Fig. 6.

Figure 6a shows the performance of each scheduling scheme when executing webbench. With one VM, all scheduling schemes have comparable performance. As the number of VMs increases, the performance of each VM degrades as expected. However, total system performance does not degrade under LAVMS, degrades slightly under PVLOCK, and degrades significantly with the standard system. The reasons are as follows. The standard system has no optimization for LHP or LWP, so lock holder and lock waiter preemptions are frequent, leading to poor performance. PVLOCK yields busy-spinning waiters to avoid wasting CPU cycles, which improves performance to some extent; however, because of the resulting execution delay, its performance is not the best. With LAVMS, lock holders and waiters receive multiple, continuous, extra scheduling chances to release their locks, so lock holder and lock waiter preemptions are rare, yielding the best performance among the three schemes. These differences become more pronounced as the number of VMs increases.

Figures 6b–d show the results of ebizzy, hackbench and kernbench, respectively, which exhibit trends similar to webbench. Note that for ebizzy and kernbench, LAVMS and PVLOCK have the same performance. A possible reason is that ebizzy and kernbench are less timing-sensitive than webbench, so the impact of LHP and LWP on their performance is small.

In summary, lacking any optimization for LHP and LWP, the standard system performs poorly when multiple VMs share the physical CPUs. PVLOCK saves CPU cycles by yielding busy-spinning waiters, which improves performance with multiple VMs; nevertheless, because it suffers from execution delay, it cannot achieve optimal performance. Our scheduling scheme, LAVMS, eliminates most lock holder and lock waiter preemptions by giving lock holders and waiters multiple, continuous, extra scheduling chances to release their locks, and thus keeps total system performance undiminished.

5 Discussion and future work

In this paper, we use \(preempt\_count\) to detect lock state. However, lock and unlock operations change \(preempt\_count\) only in a preemptive kernel, so our lock-aware method works only if the guest kernel is preemptive. Detecting lock state from the VMM side for non-preemptive guest kernels requires a different lock-aware method, which we leave as future work.

Despite this limitation of our lock-aware method, our scheduling scheme should work well with any other lock-aware method, because it is designed independently of the lock-aware method. When deciding whether a VCPU needs an extra scheduling chance, the scheduling scheme needs only the VCPU’s lock state. Since that lock state can be provided by our lock-aware method or by any other, such as a paravirtualized one, our scheduling scheme can cooperate with any lock-aware method and is expected to achieve the same performance.
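The separation can be expressed as a narrow interface between the scheduler and whatever supplies the lock state. In this sketch, `LockStateDetector` and both detector classes are hypothetical names; the paravirtualized variant merely assumes that a guest could expose a per-VCPU flag to the VMM, which this paper does not implement.

```python
from typing import Dict, Protocol


class LockStateDetector(Protocol):
    def may_hold_or_wait(self, vcpu_id: int) -> bool: ...


class PreemptCountDetector:
    """Hypothetical detector based on preempt_count snapshots (Sect. 3.2)."""

    def __init__(self, counts: Dict[int, int]):
        self.counts = counts

    def may_hold_or_wait(self, vcpu_id: int) -> bool:
        return self.counts[vcpu_id] != 0


class ParavirtHintDetector:
    """Hypothetical detector based on a per-VCPU flag the guest shares with the VMM."""

    def __init__(self, flags: Dict[int, bool]):
        self.flags = flags

    def may_hold_or_wait(self, vcpu_id: int) -> bool:
        return self.flags[vcpu_id]


def wants_extra_slice(detector: LockStateDetector, vcpu_id: int) -> bool:
    """The scheduling policy consumes only the lock state, not how it was obtained."""
    return detector.may_hold_or_wait(vcpu_id)
```

Swapping detectors changes nothing in `wants_extra_slice`, which is the property the paragraph above relies on.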

6 Conclusions

In virtualized environments, MP VMs suffer from LHP and LWP issues. Prior research has addressed these issues from different angles, such as co-scheduling, lock waiter yielding, and LHP and LWP avoidance, but these approaches have shortcomings. To address this problem, this paper introduces a novel scheduling scheme based on lock awareness in the VMM. Our scheduling scheme gives lock holders and waiters multiple, continuous, extra scheduling chances to release their locks, ensuring with high probability that a VCPU is scheduled out only when it is neither holding nor waiting for locks. Moreover, the scheme preserves scheduling fairness by subtracting the extra time component consumed by a potential unpreemptible-VCPU in its previous run from its next normal time slice. The evaluation demonstrates that our scheduling scheme fundamentally eliminates lock holder preemptions and lock waiter preemptions and keeps total system performance undiminished.

References

[1] Uhlig V, LeVasseur J, Skoglund E, Dannowski U (2004) Towards scalable multiprocessor virtual machines. In: Proceedings of the 3rd conference on virtual machine research and technology symposium (VM). USENIX, pp 43–56

[2] Ouyang J, Lange JR (2013) Preemptable ticket spinlocks: improving consolidated performance in the cloud. In: Proceedings of the 9th international conference on virtual execution environments (VEE). ACM, pp 191–200

[3] Lee W, Frank M, Lee V, Mackenzie K, Rudolph L (1997) Implications of I/O for gang scheduled workloads. In: Job scheduling strategies for parallel processing. Springer, New York, pp 215–237

[4] Ousterhout JK (1982) Scheduling techniques for concurrent systems. In: Proceedings of the 3rd international conference on distributed computing systems (ICDCS), pp 22–30

[5] Chakraborty K, Wells PM, Sohi GS (2012) Supporting overcommitted virtual machines through hardware spin detection. IEEE Trans Parallel Distrib Syst 23(2):353–366

[6] Wells PM, Chakraborty K, Sohi GS (2006) Hardware support for spin management in overcommitted virtual machines. In: 15th International conference on parallel architecture and compilation techniques (PACT). ACM, pp 124–133

[7] Zhang J, Dong Y, Duan J (2012a) ANOLE: A profiling-driven adaptive lock waiter detection scheme for efficient MP-guest scheduling. In: Proceedings of the 2012 IEEE international conference on cluster computing (CLUSTER). IEEE, pp 43–56

[8] Zhang L, Chen Y, Dong Y, Liu C (2012b) Lock-visor: an efficient transitory co-scheduling for MP guest. In: Proceedings of the 41st international conference on parallel processing (ICPP). IEEE, pp 88–97

[9] Kim H, Kim S, Jeong J, Lee J, Maeng S (2013) Demand-based coordinated scheduling for SMP VMs. In: Proceedings of the 18th international conference on architectural support for programming languages and operating systems (ASPLOS). ACM, pp 191–200

[10] Zhong A, Jin H, Wu S, Shi X, Gen W (2012) Optimizing Xen hypervisor by using lock-aware scheduling. In: 2012 Second international conference on cloud and green computing (CGC). IEEE, pp 31–38

[11] (2008) Ticket spinlocks. http://lwn.net/Articles/267968/

[12] Weng C, Wang Z, Li M, Lu X (2009) The hybrid scheduling framework for virtual machine systems. In: Proceedings of the 5th international conference on virtual execution environments (VEE). ACM, pp 111–120

[13] Weng C, Liu Q, Yu L, Li M (2011) Dynamic adaptive scheduling for virtual machines. In: Proceedings of the 20th ACM international symposium on high performance distributed computing (HPDC). ACM, pp 239–250

[14] Yu Y, Wang Y, Guo H, He X (2011) Hybrid co-scheduling optimizations for concurrent applications in virtualized environments. In: Proceedings of the 6th IEEE international conference on networking, architecture and storage (NAS)

[15] (2014) Webbench. http://home.tiscali.cz:8080/~cz210552/webbench.html

[16] (2014) Ebizzy. http://sourceforge.net/projects/ebizzy/

[17] (2014) Hackbench. http://people.redhat.com/mingo/cfs-scheduler/tools/hackbench.c

[18] (2014) Kernbench. http://freecode.com/projects/kernbench


Cite this article

Yu, C., Qin, L. & Zhou, J. A lock-aware virtual machine scheduling scheme for synchronization performance. J Supercomput 75, 20–32 (2019). https://doi.org/10.1007/s11227-015-1557-y
