Abstract

The checkpoint/restart mechanism is critical in a preemptive system because it improves the fault tolerance, load balance, and resource utilization of clusters. As graphics processing units (GPUs) have become commonplace with the advent of general-purpose computation, and open computing language (OpenCL) programs are portable across various CPUs and GPUs, it is increasingly important to provide a checkpoint/restart mechanism for OpenCL programs. However, due to the complexity of the internal computational state of the GPU, there is currently no effective checkpoint/restart scheme for OpenCL applications. This paper proposes a feasible system, checkpoint/restart state (CRState), to achieve checkpoint/restart inside GPU kernels. The computation states in the underlying hardware, including the heap, data segments, local memory, stack and code segments, are identified and concretized in order to establish an association between the hardware-level state and its application-level representation. A pre-compiler then inserts primitives into OpenCL programs at compile time so that the major components of the computation state can be extracted at runtime. Since the computation state is duplicated at the application level, such OpenCL programs can be preempted and ported across heterogeneous devices. A comprehensive example and ten authoritative benchmark programs are used to demonstrate the feasibility and effectiveness of the proposed system.


1 Introduction

As the name suggests, GPUs were originally designed as specialized graphics processors. Driven by market demand for general-purpose computing, the programmable GPU has evolved into a highly parallel, multi-threaded computation accelerator that is no longer limited to 3D graphics processing. To take advantage of the respective characteristics of CPUs and GPUs, current clusters are mostly composed of multiple nodes equipped with multiple GPUs [12]. Such a cluster structure greatly facilitates the consolidation of cluster computing resources, especially core architectural resources. When the cluster allocates resources, however, GPUs are scheduled quite differently from CPUs. GPU sharing mechanisms are not provided during application execution [34], and clusters nowadays generally use the pass-through method to allocate GPU resources. Pass-through is a technique that enables a node to directly access a Peripheral Component Interconnect (PCI) device [20]; the GPU is therefore assigned exclusively to one node, and only that node has the right to use it.

Preemptive scheduling of GPU tasks, free of restrictions on migrating between platforms or devices, is preferable to traditional batch-mode non-preemptive scheduling. First, preemption can provide fault tolerance by saving the computation state periodically; whenever an error occurs, the recorded state can be restored. Second, preemptive task scheduling facilitates load sharing and load balancing. When system resources are shared by multiple tasks, preemptive scheduling enables fair sharing among them, and a task can be dynamically rescheduled to another resource for better system utilization. Meanwhile, the preemptive scheduler can dynamically migrate a task's subtasks to underloaded resources for faster execution. In short, preemptive scheduling can adjust and balance tasks among resources promptly and flexibly.

Open Computing Language (OpenCL) is an open standard for general-purpose parallel programming of various computing units in personal computers, clusters, mobile devices and embedded platforms. As a standard for heterogeneous systems, OpenCL supports a variety of applications, ranging from embedded system software to high performance computing (HPC) solutions, through a low-level abstraction that exposes parallelism. By exploiting this parallelism, OpenCL applications can run substantially faster with shorter response times, and OpenCL is currently used in numerous markets including scientific and biomedical software, gaming products, and visual image processing.

This paper proposes CRState, a system that supports preemption inside kernels running on heterogeneous devices; OpenCL programs in this system reconstruct their computation states at the application level. All attempts by an application program to manipulate GPU devices must go through the CRState system. The implementation consists of a pre-compiler and a run-time support module in the OpenCL framework. The pre-compiler transforms the source code into an extended version from which the run-time support module can be called to construct, back up and restore computation states. This paper makes the following contributions:

- A pre-compiler is developed to annotate the original OpenCL programs so that checkpoint/restart can happen at predetermined places.

- The CRState system can extract the computation state out of the underlying hardware. The state is mapped to logical locations so that it can be handled directly.

- The computation state can be stored and easily ported to another heterogeneous device, where it is restored by overriding the current state, so that the program can be resumed from the last checkpoint.

- Experiments are conducted to demonstrate the feasibility and effectiveness of the proposed scheme. In addition, the performance is compared and analyzed in both homogeneous and heterogeneous environments.

Section 2 gives related work. Section 3 briefly introduces the architectures of GPUs, the OpenCL programming model and the checkpoint/restart mechanism. In Sect. 4, the proposed checkpoint/restart system, CRState, is described in detail. In Sect. 5, the experimental results are presented. Finally, the conclusion and future work are discussed in Sect. 6.

2 Related work

Owing to long-standing interest, much progress has been made on state migration. The mainstream technique for application reliability is checkpoint/restart (CR) [27]. Implementations that target only CPUs, not GPUs, have gradually become mature and reliable as operating systems and applications have evolved. As a software mechanism for computation mobility, State-Carrying Code (SCC) saves and retrieves computation states during normal program execution in heterogeneous multi-core clusters [17]. Condor [11], a heterogeneous distributed system, achieved high throughput in its work pool without modification to the underlying Linux operating system [6]. Libckpt [9], designed by Ferreira et al., achieved checkpointing at the user level by inserting the system as a middle layer that collects state information at runtime.

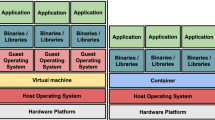

Currently, although the computing states of multiple tasks can be migrated between GPU devices during runtime, in most cases CR is still interposed on the host, and state is migrated only at the level of the GPU context. CheCUDA [38] was the first to implement a CR scheme for CUDA applications, in 2009. Built on Berkeley Lab Checkpoint/Restart (BLCR) [32], the add-on package CheCUDA backs up and releases the contexts on devices before checkpoints, and then recreates the CUDA resources during restoration. In 2011, Takizawa et al. designed CheCL [37], which follows CheCUDA, for heterogeneous devices programmed in OpenCL. The same year, Nukada et al. [28] described NVCR, a new CUDA CR library that requires no recompilation, in which the CUDA context must also be migrated. VOCL [42], proposed by Xiao et al., supports transparent utilization of local or remote GPUs by managing GPU memory handles. VOCL uses a command queuing strategy [41] to optimally balance the power of clusters [22]. Crane, by Gleeson et al. [14], and the framework of Tien et al. [39] also address migration outside kernels for OpenCL GPU workloads. For scheduling cluster computations [1], Wu et al. [40], Gottschlag et al. [15] and Suzuki et al. [36] applied migration to virtual machines, but they likewise did not perform reallocation on the basis of context.

Nevertheless, fine-grained migration inside GPU kernels has been proposed only a few times. Jiang et al. [18] designed a system in which the source code is partitioned at given checkpoints and the kernel is launched repeatedly until the task ends. Although they concentrated only on fault tolerance and did not support nested functions, their contribution opened a new chapter in in-kernel CUDA research. CudaCR [33] also focused on fault tolerance and presented an optimized scheduling strategy for memory corruption on the device. CudaCR provides stable performance but lacks universality, in that threads are not allowed to modify global memory that has been accessed by another thread.

Compared to CheCUDA [38] and CheCL [37], CRState implements checkpoint/restart at a fine-grained level and can perform checkpoint/restart operations without waiting for the kernel to finish. Compared to the studies of Jiang et al. [18] and CudaCR [33], CRState achieves checkpoint/restart across heterogeneous GPU devices. Support for heterogeneity increases the applicability of checkpoint/restart schemes in large-scale applications for resource management, fault tolerance and load balancing.

3 Background

3.1 GPU architecture

GPU architectures have varied greatly with different computational requirements since the first GPU was introduced in 1999 [24]. Since the NVIDIA G80 came out in 2006 [29], the basic architecture of GPUs has remained stable, although details vary from generation to generation. Current GPUs contain many processing elements, called stream processors, that execute single program, multiple data (SPMD) tasks in parallel using threads [30]. Taking the NVIDIA Volta architecture in Fig. 1 as an example, the compute work distributor delivers work from the host to every streaming multiprocessor (SM). Data are transferred between the CPU and GPU through the PCI-E bus. Cores, the basic execution units, process groups of threads called warps, which are issued each clock cycle by the multi-threaded instruction scheduler of the SM. Modern GPUs have sophisticated memory hierarchies with different types of memory space, such as global, shared, local, constant and texture memory and registers. In Fig. 1, the interconnection network provides an interface that communicates SM commands and exchanges data between global memory and the SMs through the L2 cache. Shared memory, which serves a group of computing units within an SM, has very limited space. Registers, which are embedded in every core, are the closest and fastest memory elements. Local memory holds variables that only its own thread can access. Although local memory resides in off-chip space, like global memory, reads and writes to it can still perform well because they are cached in the L1 cache.

3.2 The OpenCL programming model

OpenCL [16] is a framework for programming heterogeneous platforms such as CPUs, GPUs, FPGAs [31], the Cell Broadband Engine (Cell/BE) [7] and mobile devices [23]. It executes kernels on a device in three steps. First, a write command is fetched from the command queue and data are copied from host memory to GPU memory. Second, the GPU device fetches a kernel launch command from the queue and launches the kernel by invoking every work-item in parallel according to the SPMD model. A work-item is one of a collection of parallel executions of a kernel invoked on a device and executed by one or more processing elements of a compute unit [16]. Work-items that execute on a single compute unit are grouped into a work-group; the work-items of a work-group execute the same kernel instance in parallel and share local memory. Third, once all work-items have finished and a read command is issued, the result is copied back to host memory. In the OpenCL framework, the host can read and write global memory only through buffer objects. Global memory in OpenCL corresponds to GPU device memory. Local memory, which maps to shared memory in GPU terminology, can be allocated by the host but cannot be read or written by it. Private memory, which maps to local memory and registers in GPU terminology, is transparent to the CPU or host.
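The three steps can be mimicked on the host in plain C. The sketch below is a serial simulation, not OpenCL API code: the sizes LOCAL_SIZE and NUM_GROUPS and the functions scale_kernel and run_on_simulated_device are hypothetical. In a real host program the steps would correspond to clEnqueueWriteBuffer, clEnqueueNDRangeKernel and clEnqueueReadBuffer, and the index computation corresponds to get_global_id(0) for a one-dimensional NDRange without a global offset.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical NDRange: 2 work-groups of 4 work-items each. */
#define LOCAL_SIZE 4
#define NUM_GROUPS 2
#define GLOBAL_SIZE (LOCAL_SIZE * NUM_GROUPS)

/* SPMD kernel body: every work-item runs the same code and selects its
   element through its global id (group id * local size + local id). */
static void scale_kernel(float *global_mem, size_t group_id, size_t local_id) {
    size_t gid = group_id * LOCAL_SIZE + local_id;
    assert(gid < GLOBAL_SIZE);
    global_mem[gid] *= 2.0f;
}

/* Runs the three OpenCL execution steps serially on the host. */
static void run_on_simulated_device(const float *host_in, float *host_out) {
    float device_global[GLOBAL_SIZE];

    /* Step 1: write command, host memory -> device global memory. */
    memcpy(device_global, host_in, sizeof device_global);

    /* Step 2: launch; every work-item of every work-group runs the kernel. */
    for (size_t g = 0; g < NUM_GROUPS; g++)
        for (size_t l = 0; l < LOCAL_SIZE; l++)
            scale_kernel(device_global, g, l);

    /* Step 3: read command, device global memory -> host memory. */
    memcpy(host_out, device_global, sizeof device_global);
}
```

On a real device the work-items of step 2 run concurrently; the nested loops only enumerate the same (group, local id) pairs.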

3.3 Checkpoint/restart

Checkpoint/restart proceeds in two stages, computation state backup and restoration, and can be implemented at three levels: kernel, library and application [26]. During the checkpoint process, a snapshot of the computation state is taken and stored. In the restoration stage, the current state is replaced by the previously stored one. A kernel-level system is transparent [4] to programmers and is built from in-kernel functions of the operating system [21], as in the V-system [13]. A library-level system, such as BLCR, extracts computation states through library functions [32]. In an application-level system, the source code is transformed in a pre-compilation phase [2] so that the program itself knows how to resume from the last checkpoint position [5].
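A minimal application-level checkpoint/restart in plain C, assuming that the program state can be gathered into one explicit structure (the hypothetical app_state), which is what application-level source transformation makes possible. Backup takes a snapshot of the structure, and restoration overrides the live state with that snapshot; the function names are illustrative.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical application state made explicit by source transformation. */
typedef struct {
    long next_i; /* loop position: the next iteration to execute */
    long acc;    /* accumulated result */
} app_state;

/* Executes iterations [s->next_i, stop), accumulating the sum of squares. */
static void run(app_state *s, long stop) {
    assert(s->next_i >= 0);
    for (; s->next_i < stop; s->next_i++)
        s->acc += s->next_i * s->next_i;
}

/* Backup stage: take a snapshot of the whole computation state. */
static void checkpoint(const app_state *live, app_state *saved) {
    memcpy(saved, live, sizeof *saved);
}

/* Restoration stage: override the current state with the stored snapshot. */
static void restart(app_state *live, const app_state *saved) {
    memcpy(live, saved, sizeof *live);
}
```

Because the loop position is part of the saved state, calling run again after restart continues from the checkpoint rather than from the beginning.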

4 CRState design and implementation

The purpose of our system is to achieve checkpoint/restart between two heterogeneous GPU devices at the application level. GPU computation states are controlled by device drivers, not by the operating system. Unfortunately, most GPU vendors do not provide the source code of their drivers or permission to modify it. Without the ability to change the drivers, a kernel-level checkpoint/restart system cannot be built for heterogeneous GPU devices. Moreover, since OpenCL provides no API to extract computation states, CR cannot work at the library level either. Therefore, application-level checkpoint/restart is the only choice.

In our system, a checkpoint/restart operation likewise proceeds in two phases, backup and restore. When a backup is triggered on the GPU, the running threads (work-items in OpenCL) are suspended, and the current computation states are fetched from the memory units of the GPU device to the host. During restore, the states of the interrupted execution are migrated to another device, and the kernel is re-launched from the last checkpoint. Thus, the paused execution is resumed on a new device.

In a checkpoint/restart operation, the main concern is the computation state needed to continue execution on a new device. The executing program's state resides in the underlying hardware and is controlled by the GPU drivers. Since there is no access to the GPU drivers, when a checkpoint command is issued, the computation states are reconstructed in the application. In this way, the computation state is duplicated and copied back to the host. On restart, the computation state in the underlying hardware is overridden by the last stored one.

4.1 Pre-compilation

To duplicate the states at the application level, CRState relies on a pre-compiler, which modifies the source code on both the host and device sides at compilation time, as shown in Fig. 2. By defining data structures and inserting auxiliary functions that control access to the states, the pre-compiler augments the source code with checkpoint/restart functionality. Before runtime, the functions inserted during pre-compilation are linked in as supporting modules. In our system, programmers only need to specify the locations of the checkpoints and the identity of the new device on which the computing task will be restarted.

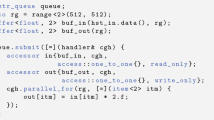

Figure 3 illustrates a simple example of source code that includes a nested function and pointers to the global, local and private address spaces. The OpenCL kernel function, kMain, is defined with five variables, and the subfunction, foo, is defined with two variables and two formal parameters. Checkpoints need to be inserted into both functions. Algorithms 1 and 2 show pseudo-code for inserting state-construction primitives into the source code executed on the CPU and GPU sides, respectively.
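One common way for an application-level pre-compiler to let a re-launched function continue from a checkpoint is to save a resume position together with the variables that are live at that point, and to dispatch on the position at function entry. The plain C sketch below illustrates this idea for a single function; whether Algorithm 2 uses exactly this dispatch is an assumption, and ctx_t and kmain_like are hypothetical names, not the kMain of Fig. 3.

```c
#include <assert.h>

/* Hypothetical saved context of one work-item: the resume position plus the
   variables that are live across the checkpoint. */
typedef struct {
    int resume_at; /* 0 = fresh start, 1 = resume at checkpoint CP1 */
    int i;         /* loop index live across CP1 */
    int sum;       /* partial result live across CP1 */
} ctx_t;

/* Sums data[0..n). If preempt is nonzero, stops at CP1 after the first half,
   records the position in *c and returns 0; returns 1 when finished. */
static int kmain_like(ctx_t *c, const int *data, int n, int preempt) {
    assert(n >= 0);
    if (c->resume_at == 1)
        goto cp1;                      /* restart: jump to the saved position */

    c->sum = 0;
    for (c->i = 0; c->i < n / 2; c->i++)
        c->sum += data[c->i];          /* first half */

    if (preempt) {                     /* checkpoint CP1 */
        c->resume_at = 1;
        return 0;                      /* *c now holds everything needed */
    }
cp1:
    for (; c->i < n; c->i++)
        c->sum += data[c->i];          /* second half, continues from c->i */
    c->resume_at = 0;
    return 1;
}
```

Copying the ctx_t to another device and calling the function again there resumes at CP1; the data array itself would be restored separately, as global memory.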

4.2 Computation states

In the OpenCL framework, the computation state consists of four parts: the heap and data segments (global memory), local memory, the stack (private memory) and the code segments.

A GPU device allocates a disjoint stack space to every work-item, while all work-items share resources such as executable code and global memory. Global memory can therefore be regarded as the heap and data segments. Every work-item can access and modify the contents of global memory through pointers. In the OpenCL framework, work-items are divided into work-groups that share local memory. A pointer to one address space cannot access memory belonging to a different address space. However, a CPU system provides no protection that prevents different work-items from accessing each other's stacks or heaps. In other words, a work-item can read and write any part of a stack once it obtains access through a global pointer variable. The contents of registers and caches are not considered, since the implementation is written in a high-level language rather than assembly; registers and caches are therefore transparent to applications.

4.3 Reconstruction of heap and data segments

The heap and data segments are two parts of the computation state. Since dynamic allocation is not allowed in OpenCL kernels, the heap and data segments can be treated as global memory. A global memory object is allocated on the host side, and its communication with the GPU is controlled through a handle on the host. Accordingly, a control block called the global memory control block (GMCB) is designed for each global memory object to manage transfer operations. GMCBs do not need to exchange information with the GPU device, so, to save memory space, they exist only on the host side, where they handle global memory objects. A GMCB mainly consists of a size variable, a pointer to the transfer buffer and a pointer to the global memory object. Since the functions that create global memory objects are invoked at runtime, the pre-compiler cannot determine the number of global memory objects. A linked list, GMCBLinkedList, therefore connects the dynamically generated GMCBs. Global memory transfer has three stages.

- In pre-compilation, registration functions for GMCBs are inserted after the functions that create global memory objects. A registration function creates a GMCB node, initializes its data and appends the new node to the tail of GMCBLinkedList. Initialization binds the node to the corresponding global memory object, saves the parameters of the global memory and allocates a chunk of transfer memory to store the content from GPU memory, as shown in Fig. 4.

Taking the simple example shown in Fig. 3, the transfer controller operates only on the host side (Algorithm 1). The registration functions are inserted at the beginning of the program, and read and write operations are inserted to support checkpoint and restart, respectively.

- At a checkpoint, the components in GMCBLinkedList are saved in response to a checkpoint command from the GPU (Fig. 4). The GMCBs in GMCBLinkedList direct their global memory objects to send read commands to the GPU so that the contents of global memory are copied into the corresponding GMCB transfer buffers.

- On restart, a restart command from the CPU activates the restoration process for GMCBLinkedList, as shown in Fig. 4. The GMCBs in GMCBLinkedList direct their global memory objects to send write commands to the GPU. The CPU then sends the contents of the transfer buffers to the corresponding global memory on the GPU.
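The three GMCB stages can be sketched in plain C. To keep the sketch runnable without an OpenCL device, a hypothetical mem_object wraps plain memory and memcpy stands in for the read and write commands; in a real host program these would be calls such as clEnqueueReadBuffer and clEnqueueWriteBuffer on a cl_mem. The names gmcb_register, gmcb_checkpoint, gmcb_restart and gmcb_free are illustrative, not CRState's actual interface.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical stand-in for a global memory object (a cl_mem in practice). */
typedef struct {
    void *device_ptr;
} mem_object;

/* Global memory control block: size, host transfer buffer, memory object. */
typedef struct gmcb {
    size_t size;
    void *transfer_buf;
    mem_object *obj;
    struct gmcb *next;
} gmcb;

typedef struct {
    gmcb *head, *tail;
} gmcb_list;

/* Registration: create a node, bind the object, allocate the transfer buffer
   and append the node to the tail of the list. Returns 0 on success. */
static int gmcb_register(gmcb_list *list, mem_object *obj, size_t size) {
    gmcb *n = malloc(sizeof *n);
    if (!n) return -1;
    n->transfer_buf = malloc(size);
    if (!n->transfer_buf) { free(n); return -1; }
    n->size = size;
    n->obj = obj;
    n->next = NULL;
    if (list->tail) list->tail->next = n; else list->head = n;
    list->tail = n;
    return 0;
}

/* Checkpoint: one "read command" per object, device -> transfer buffer. */
static void gmcb_checkpoint(const gmcb_list *list) {
    for (gmcb *n = list->head; n; n = n->next)
        memcpy(n->transfer_buf, n->obj->device_ptr, n->size);
}

/* Restart: one "write command" per object, transfer buffer -> device memory,
   possibly after obj->device_ptr has been rebound to another device. */
static void gmcb_restart(const gmcb_list *list) {
    for (gmcb *n = list->head; n; n = n->next)
        memcpy(n->obj->device_ptr, n->transfer_buf, n->size);
}

static void gmcb_free(gmcb_list *list) {
    gmcb *n = list->head;
    while (n) {
        gmcb *next = n->next;
        free(n->transfer_buf);
        free(n);
        n = next;
    }
    list->head = list->tail = NULL;
}
```

A linked list fits here because, as noted above, the number of global memory objects is only known at runtime.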

4.4 Reconstruction of local memory

Local memory is one of the computation states specific to GPUs in the OpenCL framework. It resembles a special cache in a CPU, whose resources are shared by concurrent work-items. However, whereas programmers have no direct control over CPU cache hardware, local memory in the OpenCL framework is a chunk of memory that a group of work-items shares under explicit program control.

Local memory is allocated on the GPU, and the host has no read or write access to it. For each local memory object, a global memory object is created to carry the contents of local memory between the host and GPU, acting as a transport medium (right side of Fig. 5). In this way, every local memory object has a corresponding handle on the host side. A control block called the local memory control block (LMCB), which exists only on the host side, manages the object that acts as the transport medium. An LMCB mainly consists of a size variable, a pointer to the transfer buffer and a pointer to the transport medium object. The parameters of local memory are obtained directly by scanning the source code during pre-compilation. An array of LMCBs, LMCBarray, stores the LMCBs. To communicate dynamically with GPU devices at runtime, a management block, the local memory blocks management (LMBM), is defined on both the GPU and CPU sides. An LMBM mainly consists of the length of the local memory, the transfer message and other information about local pointers. The LMBM manages the exchange between the GPU's local and global memory as well as the exchange with the host.

Since each work-group has its own local memory, there are as many copies of each local object on the GPU as there are work-groups. Accordingly, the transfer buffer for each local object is partitioned into several portions, each storing the contents of that local object for one work-group. Figure 5 shows the reconstruction of one local object, \(Lbuffer_0\), which is partitioned into n portions distributed to n work-groups. In Fig. 5, \(WG_k\) denotes the kth work-group and \(WI_k\) denotes the kth work-item. Local memory reconstruction has three stages.

- In the pre-compilation stage, information about local memory is extracted. The pre-compiler defines LMCBarray and inserts a registration function that registers the transport mediums as global memory objects and creates and initializes the LMCBs. The initialization function of an LMCB binds the corresponding transport medium, sets the basic information and allocates a chunk of transfer memory. Unlike GMCBLinkedList and its GMCBs, all of the LMCBs in LMCBarray are generated during pre-compilation. LMBM is defined in both the host and kernel functions.

Taking the simple example shown in Fig. 3, the controller processes both the host and device code. The insertion into the host program is similar to the global memory reconstruction shown in Algorithm 1. At the beginning of the kernel function, code that manages local memory restoration is inserted (Algorithm 2), following the strategy that every work-item handles a part of the memory. After duplication, a barrier for synchronization is inserted. At the end of the kernel function, the barrier and backup primitives are inserted.

- At a checkpoint, a backup signal is generated by the running work-groups on the GPU device. Every work-group directs its work-items to copy the contents of local memory to the transport mediums. Because work-items progress at different rates, a mechanism is provided to synchronize the work-items within a single work-group: faster work-items are suspended temporarily until the others catch up. To reduce overhead, the copy work is divided into several parts, and every work-item performs one part. To exploit locality in GPU memory accesses, the elements are assigned to work-items in an interleaved pattern. The kth work-item handles the elements whose indices x satisfy Equation 1:

$$\begin{aligned} \{x \mid x \bmod groupSize = k\} \end{aligned} \tag{1}$$

where x ranges over the element indices of the local object and groupSize is the number of work-items in a single work-group.

Memory coalescing achieves peak bandwidth when all work-items in a half-warp access contiguous memory locations. After this copying process, the LMBM notifies the host to fetch the contents from the GPU device; this signal is denoted by the checkpoint arrowhead in the top-left section of Fig. 5. The backup component of each LMCB in LMCBarray is activated, and the LMCBs send read commands to the corresponding transport mediums. Finally, the contents of local memory are fetched into the transfer memory on the CPU.

- During the restart process, a restore signal is sent to LMCBarray, and the retrieving component of each LMCB is activated. Each LMCB sends a write command to its transport medium, so the contents of the transfer memory are sent to the GPU. After the data transfer, the CPU notifies the LMBM, and the work-items copy the data back into local memory after a synchronization operation. Finally, the LMBM notifies the computing task to continue.
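Equation 1 assigns local-memory elements to work-items cyclically. The plain C sketch below shows, for one local object, how work-item k of work-group wg could copy its share into that work-group's portion of the transport medium and back. The function names and the float element type are assumptions; inside an OpenCL kernel each call would be one work-item's loop, bracketed by work-group barriers such as barrier(CLK_LOCAL_MEM_FENCE).

```c
#include <assert.h>
#include <stddef.h>

/* Backup: work-item k of work-group wg copies the elements x with
   x mod group_size == k into the work-group's portion of the transport
   medium, which holds num_groups * local_len elements in total. */
static void local_backup_item(const float *local_mem, float *transport,
                              size_t local_len, size_t wg, size_t k,
                              size_t group_size) {
    assert(k < group_size);
    float *portion = transport + wg * local_len; /* this work-group's portion */
    for (size_t x = k; x < local_len; x += group_size)
        portion[x] = local_mem[x];
}

/* Restore: the same partitioning with the copy direction reversed. */
static void local_restore_item(float *local_mem, const float *transport,
                               size_t local_len, size_t wg, size_t k,
                               size_t group_size) {
    assert(k < group_size);
    const float *portion = transport + wg * local_len;
    for (size_t x = k; x < local_len; x += group_size)
        local_mem[x] = portion[x];
}
```

In pass j, work-items 0 to groupSize - 1 touch elements j·groupSize to j·groupSize + groupSize - 1, which are contiguous, so the accesses of a warp can be coalesced; each element is copied by exactly one work-item.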

4.5 Reconstruction of stack

The stack is an important part of the computation state. In the OpenCL framework, the private memory of a single work-item is regarded as the stack space of an independent thread in a multi-threaded concurrent system. Work-items cannot access each other's private memory, which resides in disjoint spaces.

The private variables of a single work-item are separated into two categories: non-pointer variables and pointers. For convenience and efficiency, the non-pointer variables in the source code are gathered into a structure during pre-compilation, so that all of them can be transferred as one segment of contiguous memory. Pointers, however, must be updated when the virtual address space is reassigned, so they cannot simply be copied as contiguous memory blocks. Because they share a unified pointer data type, the pointers in private memory are extracted and gathered into an array. During backup, the pointers are transformed into offsets within their memory blocks; during restoration, they are rebuilt from those offsets. Furthermore, because private memory, local memory and global memory have independent virtual address spaces, the pointers are further divided into three groups according to the space they point into. Consequently, private memory is divided into four parts: non-pointer variables, pointers to global memory, pointers to local memory and pointers to private memory.
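A pointer saved as an absolute address would be meaningless after the kernel is re-launched on another device, so it is stored as an offset from the base of the block it points into, tagged with its address space. A minimal sketch, assuming each pointer's target block and base address are known at backup time; space_t, saved_ptr, ptr_to_offset and offset_to_ptr are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

/* Address spaces a saved pointer may refer to. */
typedef enum { SPACE_GLOBAL = 0, SPACE_LOCAL = 1, SPACE_PRIVATE = 2 } space_t;

/* A pointer in position-independent form. */
typedef struct {
    space_t space;
    size_t offset; /* bytes from the base of the pointed-to block */
} saved_ptr;

/* Backup: p must point into the block that starts at base (same object),
   otherwise the pointer subtraction is undefined. */
static saved_ptr ptr_to_offset(const void *p, space_t space, const void *base) {
    assert((const char *)p >= (const char *)base);
    saved_ptr s;
    s.space = space;
    s.offset = (size_t)((const char *)p - (const char *)base);
    return s;
}

/* Restore: rebuild the pointer against the bases valid after the restart,
   indexed by address space. */
static void *offset_to_ptr(saved_ptr s, void *const new_bases[3]) {
    return (char *)new_bases[s.space] + s.offset;
}
```

Keeping the three spaces apart matters because the same offset means different things relative to the global, local and private bases.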

Like local memory, private memory is allocated only on GPU devices and cannot be accessed from the host side. Therefore, a transport medium is set up in global memory to transfer the contents of private memory between the host and GPU. A private memory control block (PMCB) on the host controls the private memory through the transport mediums. Since a kernel function has only a limited amount of private memory, there is a single PMCB on the host, and the transport medium for private memory is allocated as one chunk of memory, shown as the black-bordered Private Transfer Buffer box in Figs. 6 and 7. A PMCB mainly consists of a pointer to the transfer buffer and a pointer to the transport medium objects. A private memory block management (PMBM) block is defined on both the host and GPU sides to exchange information. A PMBM mainly consists of information about the multiple functions in the kernel, information about the processed pointers, transfer errors, and alignment and endianness information.

Since private memory is allocated for each work-item, the number of copies varies with the number of work-items, and the memory in the transport medium is partitioned into portions, one for each work-item. Dynamic memory allocation is not supported in OpenCL kernels, but other events, such as child function invocations, still change the virtual address space of private memory dynamically. Thus, the size of private memory cannot be determined during pre-compilation, and a statically fixed size is pre-allocated instead. Considering the large number of work-items in execution, this pre-allocated size is tuned to reduce memory usage. The portion belonging to one work-item is divided into four parts: non-pointer variables and the three types of pointers described above. The chunk of non-pointer variables is further divided into several parts as functions are invoked at execution time. Private memory restoration proceeds in three stages, as shown in Fig. 7.
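One possible layout of the per-work-item portions, assuming the four parts are packed into a single fixed-size slot and that pointers are stored as size_t offsets. The capacities in slot_caps are hypothetical tuning parameters, not values from the paper, and slot_for is an illustrative name.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical fixed capacities of one work-item's portion, chosen before
   launch because the call depth at a checkpoint is unknown at pre-compile time. */
typedef struct {
    size_t nonptr_bytes; /* non-pointer variables of all active functions */
    size_t global_ptrs;  /* number of pointers into global memory */
    size_t local_ptrs;   /* number of pointers into local memory */
    size_t private_ptrs; /* number of pointers into private memory */
} slot_caps;

/* Byte offsets of the four parts of one work-item's portion. */
typedef struct {
    size_t base, nonptr, global_ptr, local_ptr, private_ptr, size;
} slot_layout;

/* Each saved pointer is a size_t offset, so it takes sizeof(size_t) bytes. */
static slot_layout slot_for(size_t gid, slot_caps c) {
    const size_t entry = sizeof(size_t);
    /* Keep the pointer parts (and the next slot) aligned for size_t entries. */
    assert(c.nonptr_bytes % entry == 0);
    slot_layout s;
    s.size = c.nonptr_bytes + entry * (c.global_ptrs + c.local_ptrs + c.private_ptrs);
    s.base = gid * s.size;                      /* portion of work-item gid */
    s.nonptr = s.base;                          /* part 1: non-pointer variables */
    s.global_ptr = s.nonptr + c.nonptr_bytes;   /* part 2: global pointers */
    s.local_ptr = s.global_ptr + entry * c.global_ptrs;  /* part 3 */
    s.private_ptr = s.local_ptr + entry * c.local_ptrs;  /* part 4 */
    return s;
}
```

With a fixed slot size, every work-item can find its portion from its global id alone, without any coordination at checkpoint time.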

- In the pre-compilation stage, the non-pointer variables are extracted and gathered into a structure in the source code. The pointers are collected into three arrays according to the spaces they point into. The pre-compiler replaces references to the variables with references to the corresponding fields of the non-pointer variable structure and elements of the pointer arrays. A PMCB is defined, and a registration function is inserted to register the four transport mediums as global memory objects and initialize the data in the PMCB. The initialization function of the PMCB sets pointers to the transport mediums, defines basic information such as the pre-allocated size and allocates four chunks of transfer memory. PMBM is defined on both the host and device sides.

Taking the simple example shown in Fig. 3, the source code is modified on both the host and device sides. The insertion into the host program is similar to the global memory reconstruction shown in Algorithm 1. Figure 6 shows how the different types of variables are extracted. During pre-compilation, the variables are processed first: non-pointer variables are collected in a structure, PDV, and the pointers are moved to arrays according to their types. At the beginning of each function, a structure variable such as \(pdv_0\) is defined, as in Fig. 6. Each variable name in the source code is replaced with a field of the structure or an element of an array. At the beginning of the kernel function, the previously saved computation state overwrites the current state, as shown in Algorithm 2. Restoration handles the non-pointer variables and the three types of pointers separately: the non-pointer variables and private pointers are handled in a restart function of every subfunction, while the global and local pointers are handled in the kernel function. At the end of the kernel function or subfunctions, checkpoint functions store the current states of the non-pointer variables and private pointers; the global and local pointers are stored in the kernel function.

- At a checkpoint, a backup signal is generated on the GPU. Every work-item copies the contents of its private memory to the designated transport mediums. The duplication between global memory and private memory is controlled by the function controller shown in Fig. 8. The function controller includes the address space of blocks (ASB) of a stack, cursors that record the duplication position, pointer information and some other tags. After copying, PMBM notifies the host to fetch the contents from the GPU device, and PMCB sends read commands to the four transport mediums. Finally, the contents of private memory are fetched into the corresponding transfer memory on the CPU.

- During the restart process, a restore signal is sent to the PMCB, and its retrieving component is activated. The PMCB sends write commands to the transport mediums, so the contents of the transfer memory are sent to the GPU. After the transfer, the CPU notifies PMBM, which organizes the work-items to copy the data back into private memory. PMBM then resumes the suspended task.
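The PDV transformation can be illustrated on a hypothetical subfunction (not the foo of Fig. 3, whose body is not reproduced here). In this sketch the non-pointer variables live in a PDV-style structure, and the private pointer q is kept as a byte offset into that structure, so the whole frame can be backed up and restored with a single contiguous copy even if it lands at a different address. All names are illustrative.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical original subfunction:
       int foo(int a, int b) { int t = a + b; int *q = &t; return *q * 2; }
   Pre-compiled form: non-pointer variables in a structure, q as an offset. */
typedef struct {
    int a, b; /* formal parameters */
    int t;    /* local non-pointer variable */
} pdv_foo;

typedef struct {
    pdv_foo pdv;        /* non-pointer part, copied as one block */
    size_t priv_ptr[1]; /* private pointers as byte offsets into pdv (q) */
} foo_frame;

/* Resolves q against the address the frame currently occupies. */
static int *foo_q(foo_frame *f) {
    return (int *)((char *)&f->pdv + f->priv_ptr[0]);
}

static int foo_transformed(foo_frame *f, int a, int b) {
    f->pdv.a = a;
    f->pdv.b = b;
    f->pdv.t = f->pdv.a + f->pdv.b;        /* t = a + b */
    f->priv_ptr[0] = offsetof(pdv_foo, t); /* q = &t, stored as an offset */
    return *foo_q(f) * 2;                  /* return *q * 2 */
}

/* Checkpoint and restore copy the frame as one contiguous block; this is safe
   only because the frame stores no absolute addresses. */
static void foo_backup(const foo_frame *f, unsigned char *medium) {
    memcpy(medium, f, sizeof *f);
}

static void foo_restore(foo_frame *f, const unsigned char *medium) {
    memcpy(f, medium, sizeof *f);
}
```

After foo_restore places the frame at a new address, foo_q resolves q to the t field of the new frame rather than the old one.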

Synchronization Optimization

Because the private memory spaces of different work-items are disjoint and inaccessible to one another, duplicating private memory requires no synchronization. Global memory, however, can be accessed by all work-items, and local memory by all members of the same work-group, so synchronization of global and local memory duplication would normally have to be considered. In CRState's checkpoint process, the duplication first takes place on the GPU side, between global memory and local or private memory. When the duplication ends, the kernel sends a signal to the CPU and the kernel function exits. Only after receiving the signal does the host organize GMCB, LMCB and PMCB to fetch the computation states from the GPU over the PCI-E bus, so the host does not start reading until the device-side duplication has finished. In this design, synchronization of global memory duplication is therefore not enforced, which reduces memory and time costs.

4.6 Contents extraction and restoration with nested functions

In GPU computation, a kernel may call a child function, which may in turn call further functions. A subfunction has its own variables, which are destroyed at the end of the function. Because function calls are dynamic, it is hard for the pre-compiler to determine the memory usage of variables. Thus, CRState pre-allocates a segment of global memory as a transport medium through which each work-item can exchange computation states with the host.

In OpenCL programs, the kernel is treated as the main function, and a kernel launch can be regarded as a function call. The variables of each subfunction or kernel function can be treated as an independent chunk of the function's stack memory, called a block, so the virtual address space may contain gaps between the blocks of different function calls, as shown in Fig. 8. This notion resembles the variable-size pages that operating systems use to exchange the contents of the virtual address space between memory and disk.

When a call instruction executes, the return address is pushed onto the system stack, a new frame is set up, and the stack pointer \(\%rsp\) changes accordingly. A ret instruction pops the return address from the top of the stack (pointed to by \(\%rsp\)) and jumps back to the caller. Because registers are not accessible at application level, CRState uses the address space of blocks (ASB) to emulate the stack pointer, recording the address range of each block on the stack.

As shown in Fig. 8, the states in the transport medium occupy a contiguous region of global memory, while in private memory they are fragmented like variable-size pages in an operating system. Writing and reading each child function's block can be simulated as push and pop operations following the last-in, first-out (LIFO) discipline. Transferring computation states across multiple functions involves four stages: pre-compilation, execution, checkpoint and restart.

During pre-compilation, all functions, including kernel functions, are recorded. After the pre-compiler scans a function definition, its non-pointer variables and pointers are gathered into a structure and an array, respectively. Formal parameters are also extracted as variables. The reconstruction inserts four auxiliary functions during pre-compilation.

In the example of Fig. 3, a new-call function and a restoring function are inserted at the beginning of each function, as shown in Algorithm 2. The new-call function handles the first entry into the function and defines the information of its block; the restoring function updates the computation state during restart. An end-call function and a checkpoint function are inserted at the end of the function: the end-call function finishes the function, and the checkpoint function records the computation state at a checkpoint.

In the execution stage, the computation task runs as scheduled according to the user's demand. Whenever a subfunction is invoked, its new-call function is called: a structure holding the non-pointer variables and an array holding the pointers are registered with the system, and the address scope of the memory block is pushed onto the ASB stack, as shown in Fig. 8. When the subfunction finishes, it calls its end-call function, which releases the memory storing the function's variables and pops the address scope from the ASB stack.
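
The execution-stage bookkeeping can be sketched as a small ASB stack in C. This is an illustration under assumptions: the asb_t and scope_t types, the fixed depth ASB_DEPTH, and the new_call/end_call signatures are hypothetical, and the block addresses are taken from ordinary host arrays.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define ASB_DEPTH 8  /* hypothetical maximum call depth */

typedef struct {
    uintptr_t begin, end;   /* address scope [begin, end) of one function block */
} scope_t;

typedef struct {
    scope_t s[ASB_DEPTH];
    int     top;            /* number of active blocks; emulates the stack pointer */
} asb_t;

/* new-call: register the block holding a function's PDV struct and pointer array. */
static void new_call(asb_t *asb, const void *block, size_t size) {
    assert(asb->top < ASB_DEPTH);
    uintptr_t b = (uintptr_t)block;
    asb->s[asb->top++] = (scope_t){b, b + size};
}

/* end-call: the function is finishing, so its scope is popped (LIFO). */
static void end_call(asb_t *asb) {
    assert(asb->top > 0);
    asb->top--;
}
```

The kernel registers its block first and each nested call pushes on top of it, so at any moment the ASB holds exactly the blocks of the functions on the current call chain.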

At a checkpoint, a duplicating command is issued first. The support module looks up the address scope and provides two positions: a destination address in the transport medium and a source address in private memory. Duplication starts when a ready signal is received from the system. The subfunction then exits and control returns to its parent function, which performs its duplication in the same manner. All functions on the call chain are handled this way, in LIFO order.

During the restart process, a retrieving command is issued first. The support module looks up the address scope and provides the source address in the transport medium, and retrieval starts when a ready signal is received from the system. A structure storing the pointer and non-pointer variables is created as a block in the private virtual address space and replaces the current one. The information in the transport medium is then copied back and the kernel function is invoked again. Based on the program counter, control goes to the proper position and calls the same subfunction as in the previous execution; that subfunction calls its own subfunctions in the same manner, and so on. In each function, local data are overwritten by the copy in the backup medium. The program counter thus determines which functions are called and in what order.
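
The LIFO checkpoint and the replayed restart can be sketched as follows, assuming each registered block is described by its address and size and that blocks are laid out in the contiguous transport medium in call order. The types block_t and call_stack_t and the functions dest_offset, checkpoint_all and restore_all are hypothetical. Checkpointing walks from the innermost function outward; restart walks from the kernel inward, matching the order in which the functions are re-invoked.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define MAX_DEPTH 8

typedef struct { void *addr; size_t size; } block_t;  /* one function block */

typedef struct {
    block_t b[MAX_DEPTH];
    int     depth;        /* number of functions on the call chain */
} call_stack_t;

/* Destination offset of block i in the contiguous medium: blocks are laid out
 * in call order, so it is the total size of the blocks beneath it. */
static size_t dest_offset(const call_stack_t *cs, int i) {
    size_t off = 0;
    for (int j = 0; j < i; j++) off += cs->b[j].size;
    return off;
}

/* Checkpoint: innermost function first (LIFO), each copying its own block. */
static void checkpoint_all(const call_stack_t *cs, unsigned char *medium) {
    assert(cs->depth <= MAX_DEPTH);
    for (int i = cs->depth - 1; i >= 0; i--)
        memcpy(medium + dest_offset(cs, i), cs->b[i].addr, cs->b[i].size);
}

/* Restart: `cs` describes the blocks registered by the re-invoked functions,
 * outermost first; each reloads its block from the medium. */
static void restore_all(const call_stack_t *cs, const unsigned char *medium) {
    assert(cs->depth <= MAX_DEPTH);
    for (int i = 0; i < cs->depth; i++)
        memcpy(cs->b[i].addr, medium + dest_offset(cs, i), cs->b[i].size);
}
```

Because each block's destination offset is derived from the sizes of the blocks beneath it, the checkpoint can proceed innermost-first while the restart still finds every block at the same place.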

4.7 Pointers handling

A pointer is a variable whose value is an address. Virtual address spaces differ between devices. Moreover, the memory blocks that hold subfunction variables are not contiguous in the virtual address space, and their order may differ on another device. CRState therefore represents pointers by a base address and an offset, which makes them portable across devices. Depending on the memory they point to, there are three types of pointers: global, local and private pointers. Pointer handling has three stages.

In the pre-compilation stage, a pointer update function and a pointer backup function are defined for global, local and private pointers, together with three pointer tables, one per pointer type. Each table records two items per pointer: the sequence number of the memory block the pointer refers to and the offset within that block.

At the checkpoint, the program invokes the pointer backup function. For each pointer, the block tables are searched with the pointer's current address to find the memory block it points into, yielding the block's sequence number and base address. The pointer is then represented by that block and the offset from the block's base address, and both are saved in the pointer table.

During the restart process, a resume command is executed. The restorer reads the block tables and pointer tables, looks up the new base address of the referenced block by its sequence number, and adds the saved offset to reconstruct the pointer.
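
A sketch of pointer backup and resume using block sequence numbers and offsets. The block_t and ptr_entry_t layouts and the functions ptr_backup and ptr_resume are hypothetical; a pointer that does not fall inside any registered block is marked with sequence -1.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct { uintptr_t begin; size_t size; } block_t; /* block table entry */

typedef struct { int seq; size_t offset; } ptr_entry_t;   /* pointer table entry */

/* Backup: find the block the pointer falls into; record its sequence and offset. */
static ptr_entry_t ptr_backup(const void *p, const block_t *tab, int n) {
    uintptr_t a = (uintptr_t)p;
    for (int i = 0; i < n; i++)
        if (a >= tab[i].begin && a < tab[i].begin + tab[i].size)
            return (ptr_entry_t){i, a - tab[i].begin};
    return (ptr_entry_t){-1, 0};  /* not inside any registered block */
}

/* Resume: new base address of the same block plus the saved offset. */
static void *ptr_resume(ptr_entry_t e, const block_t *tab) {
    if (e.seq < 0) return NULL;
    return (void *)(tab[e.seq].begin + e.offset);
}
```

For example, a pointer to element 2 of the second block is saved as sequence 1 with an offset of two elements, and is rebuilt against whatever address the second block receives after the restart.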

Figure 9 illustrates how private pointers are converted into offsets and sequence numbers of the blocks they point to at checkpoint, and then restored later.

4.8 Program counter

The program counter (PC), also called the instruction address register (IAR) [25], the instruction counter [3], or simply part of the instruction sequencer [10], is a processor register that indicates where the processor is in its program sequence, that is, the address of the next instruction to be executed.

In CRState, the PC is the sequence of code positions that records the execution progress of each function on the current call chain. Normally the code position is held in the \(\%rip\) register, which is not accessible at application level. CRState instead uses a positive integer to represent the value of \(\%rip\), so that the PC becomes concrete to users. During pre-compilation, the program is partitioned into sections at the breaking positions for checkpoint/restart, and a switch statement is inserted to dispatch control to each breaking point, as shown in Algorithm 2. In each function, a PC value corresponds to the label of a particular case statement in that function. During restore, the system fetches the last breaking position from the local PC, so that control can jump to the resume position.
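
The switch-based dispatch can be sketched in C as below. The integer *pc stands in for \(\%rip\); the three segments, the preempt_at parameter and the function name run are hypothetical. Each case label marks a breaking position, and the intentional fall-through lets an uninterrupted run execute all segments in order.

```c
#include <assert.h>

/* Returns 1 when the function completes, 0 when it stops at a checkpoint.
 * `*pc` holds the next breaking position; `*acc` is the saved state. */
static int run(int *pc, int *acc, int preempt_at) {
    switch (*pc) {
    case 0:
        *acc += 1;                     /* segment 0 */
        *pc = 1;
        if (preempt_at == 1) return 0; /* checkpoint: state saved, kernel exits */
        /* fall through */
    case 1:
        *acc *= 10;                    /* segment 1 */
        *pc = 2;
        if (preempt_at == 2) return 0;
        /* fall through */
    case 2:
        *acc += 5;                     /* segment 2 */
        *pc = 3;
    }
    return 1;                          /* finished */
}
```

Starting from pc = 0 and acc = 0, a run preempted at position 1 leaves acc == 1 and pc == 1; re-executing run jumps straight to case 1 and finishes with acc == 15, the same value as an uninterrupted run.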

In a kernel with multiple functions, the code of every subfunction is partitioned into segments with its own PC. A linear array, PCArray, therefore stores the PC values of all invoked subfunctions, and PCs are passed to subfunctions as formal parameters, as shown in Algorithm 2. PCArray saves the breakpoints of subfunctions at checkpoint and reproduces the same execution sequence at restore. Figure 8 illustrates the multistage nested model of calling subfunctions in LIFO order. When a new subfunction is called or the current subfunction finishes, the top of PCArray, used as a stack (PCStack), is adjusted to indicate the current function and its PC value. During the execution of a function, only the local PC value of the current function changes, while all calling functions and their PCs remain the same. As functions are activated and deactivated, PCStack expands and shrinks accordingly. To restore the computation, the OpenCL program is re-executed: all previously invoked functions are called again and control jumps to the same positions as before. In the function where the checkpoint was taken, control jumps to the checkpoint position to resume the computation.
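
For nested calls, a sketch with one PC per call depth might look like this. pc_array, MAX_DEPTH, the global preempt flag and the two toy functions kernel and sub are hypothetical; acc stands in for state that a real system would restore from the transport medium. Only the function where the checkpoint is taken advances its own PC, while the kernel's PC stays at the call site.

```c
#include <assert.h>

#define MAX_DEPTH 4
static int pc_array[MAX_DEPTH];   /* hypothetical PCArray: one PC per call depth */
static int preempt;               /* set to request a checkpoint */

/* Subfunction with two segments. Returns 0 if it stopped at a checkpoint. */
static int sub(int depth, int *acc) {
    switch (pc_array[depth]) {
    case 0:
        *acc += 2;
        pc_array[depth] = 1;      /* only this function's PC is updated */
        if (preempt) return 0;
        /* fall through */
    case 1:
        *acc *= 3;
        pc_array[depth] = 0;      /* finished: reset for the next call */
    }
    return 1;
}

/* Kernel at depth 0. On restart the same call sequence is replayed. */
static int kernel(int *acc) {
    switch (pc_array[0]) {
    case 0:
        *acc += 1;
        pc_array[0] = 1;          /* next position: the call to sub() */
        /* fall through */
    case 1:
        if (!sub(1, acc)) return 0;   /* sub stopped: kernel PC stays at 1 */
        pc_array[0] = 2;
        /* fall through */
    case 2:
        *acc += 4;
    }
    return 1;
}
```

A preempted first run leaves acc == 3 with both PCs at 1; re-executing kernel calls sub again, which resumes at its second segment, and the final value 13 matches an uninterrupted run.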

Figure 10 shows a simple example of this process as a tree map. The code of each function, including the main kernel function and its subfunctions, is divided into several segments. During restore, CRState fetches the program counter of the main kernel function and control jumps to the corresponding position. Control then calls \(subfunction_0\), as indicated by the PC, and this function's PC is fetched from PCArray; \(subfunction_0\) in turn calls \(subfunction_1\) in the same way. Finally, control starts at \(segment_1\) of \(subfunction_1\) to resume the computation. When \(segment_1\) finishes, the checkpoint program intervenes to back up the computation state, and the PC is updated to the new start, \(segment_2\). When the checkpoint of \(subfunction_1\) finishes, control returns to \(subfunction_0\). Since \(subfunction_0\) is not where the current checkpoint is taken, its PC is not updated, and neither is that of the main kernel function.

4.9 Alignment handling

The CRState system works at application level, where computation states are transformed into pure data and can be treated as a group of contiguous memory blocks. However, these states can be misinterpreted because different devices align data differently. Alignment refers to the way data are placed in memory for efficient access; it is determined by compilers and operating systems and can leave unused space, called padding. Padding is normally added between structure members (internal padding) or between the last member and the end of the structure (tail padding). Although programmers can specify an alignment policy in their programs, the default policy is normally used.

To handle different alignments, tags describing the alignment are attached to the tail of the memory sections, so all computation states carry tags. At restart, tags are first generated from the data types on the new device and then compared with the tags carried by the state. If they are identical, there is no alignment issue and the old computation state can overwrite the new one directly. Otherwise, only the data items of the old state, without its padding, are copied into the new state. Platforms 1 and 2 in Fig. 11 illustrate this process: tags such as \(Tag_k\) and \(Tag_{f0}\) are migrated to platform 2 and compared with the tags generated there. A simplified sequence flow diagram is shown at the bottom right of the figure.

As shown in Fig. 12, each tag occupies four bytes and holds three fields: data size (two bytes), data type, and padding size. Scalar types, and arrays of scalar types such as int and float, generate their tags directly, with one tag per type.
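
One possible packing of a four-byte tag is sketched below. The text specifies only that the data size takes two bytes; the one-byte widths for type and padding and the field order are assumptions, and tag_make and its accessors are hypothetical helpers. The tag is handled as a logical 32-bit value, so its in-memory byte order is itself subject to the endianness handling of Section 4.10.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed layout: bits 31..16 data size, bits 15..8 data type,
 * bits 7..0 padding size that follows the data. */
typedef uint32_t tag_t;

static tag_t tag_make(uint16_t size, uint8_t type, uint8_t pad) {
    return ((tag_t)size << 16) | ((tag_t)type << 8) | pad;
}

static uint16_t tag_size(tag_t t) { return (uint16_t)(t >> 16); }
static uint8_t  tag_type(tag_t t) { return (uint8_t)(t >> 8); }
static uint8_t  tag_pad(tag_t t)  { return (uint8_t)t; }
```

With this layout, a 4-byte field of type code 2 followed by 4 bytes of padding is encoded as 0x00040204.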

Aggregate types, however, are flattened into a list of tags covering every element inside, and their tags are built differently from scalar ones. First, a tag describing the aggregate itself is built; then the tags of all its elements follow. The example pdv_0.n0 in Fig. 12 illustrates this process: the tag of pdv_0.n0 is built first, followed by pdv_0.n0.a, pdv_0.n0.n1 and so on. A nested aggregate type, such as pdv_0.n0.n1 in this figure, is processed in the same way, as in a depth-first tree traversal, so pdv_0.n0.b is not handled until all elements of pdv_0.n0.n1 have finished. Another difference is that the type field of an aggregate tag encodes more than the data type. Since the number of scalar types is small, the type field of an aggregate tag carries additional information: a variable, ceiling, separates scalar types (ranging from 0 to ceiling) from aggregate types (greater than ceiling), and the number of elements of the aggregate type is added to ceiling so that the receiver can interpret the tags.
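
The depth-first flattening of aggregate tags can be sketched as a short recursive host-side function. node_t, flat_tag_t, flatten and the value of CEILING are hypothetical, and each tag is reduced to a name and a type code for brevity. An aggregate emits its own tag, with type CEILING plus its element count, before its elements, so n0.b is emitted only after every element of n0.n1.

```c
#include <assert.h>
#include <stddef.h>

#define CEILING 15  /* hypothetical bound: scalar codes are 0..CEILING */

typedef struct node {
    const char        *name;
    int                scalar_type;  /* used only when nchild == 0 */
    int                nchild;       /* element count of an aggregate */
    const struct node *child;        /* array of nchild elements */
} node_t;

typedef struct { const char *name; int type; } flat_tag_t;

/* Depth-first, pre-order flattening starting at out[pos]; returns the next
 * free position. Aggregates get type CEILING + element count. */
static int flatten(const node_t *n, flat_tag_t *out, int pos) {
    if (n->nchild == 0) {
        out[pos] = (flat_tag_t){n->name, n->scalar_type};
        return pos + 1;
    }
    out[pos++] = (flat_tag_t){n->name, CEILING + n->nchild};
    for (int i = 0; i < n->nchild; i++)
        pos = flatten(&n->child[i], out, pos);
    return pos;
}
```

On the receiver side, any type value above CEILING therefore signals an aggregate and tells the interpreter how many element entries belong to it.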

Tags are handled lazily: the unmarshalling interpreter decodes them only on the receiver side, so no restriction is placed on the sender's alignment. The data conversion scheme is a variant of “Receiver Makes Right” (RMR) [43], in which the sender transmits data in its native layout and the receiver converts them only when its own layout differs; this reduces the number of decoding operations in clusters and lightens the data-handling workload.

Because the stack of the computation state expands and shrinks dynamically at runtime as functions are called, the content of the tags can differ between checkpoints. Alignment handling is therefore divided into three phases separated by the checkpoint/restart boundaries: tag definition (pre-compilation), tag generation (checkpoint) and tag interpretation (restart), as shown in Fig. 11. During kernel execution, once a subfunction is called, the tags of this function are encoded, and they are cleaned up when the function exits.

For tag definition, the pre-compiler creates the tag container based on the number of extracted variables, shown as Type System in Fig. 11. In each function, a tag generation function and a tag interpretation function are inserted into the checkpoint function and the restart function of Algorithm 2, respectively. By parsing the source code, the pre-compiler extracts the types of all variables and recursively flattens aggregate types by analyzing the relations of nested aggregations. Let \(SA_k\) and \(S_k\) denote the start address and size of the \(k\)-th variable field, and \(Pad_k\) the padding that follows it. The padding is the end address of the field minus the sum of its start address and size. If the variable field is neither the last one nor the end of some aggregate type, its end address equals the start address of the next variable field, which leads to Equation 2:

\[ Pad_k = SA_{k+1} - (SA_k + S_k) \qquad (2) \]

Otherwise, the end address is the start address of the whole field, \(SA_F\), or of the enclosing aggregate type, \(SA_A\), plus the corresponding size, \(S_F\) or \(S_A\), as shown in Equations 3 and 4:

\[ Pad_k = (SA_F + S_F) - (SA_k + S_k) \qquad (3) \]

Or:

\[ Pad_k = (SA_A + S_A) - (SA_k + S_k) \qquad (4) \]

Figure 13 illustrates the calculation of padding in two situations. Subgraph (a) depicts the general case of Equation 2, where the variable index \(k\) satisfies Equation 5:

\[ 1 \le k \le n - 1 \qquad (5) \]

where \(n\) is the number of variables.

Subgraph (b) shows how the padding of the last variable of an aggregate type is calculated.
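
The padding rule of this section can be checked on the host with offsetof and sizeof. In this sketch (0-based indices; rec_t and pad_after are hypothetical), a field that is not the last uses the next field's offset as its end address, as in Equation 2, and the last field uses the aggregate's size, so the sizes plus paddings always add up to sizeof(rec_t).

```c
#include <assert.h>
#include <stddef.h>

typedef struct {   /* example record; its layout is whatever the compiler picks */
    char  c;
    int   i;
    short s;
} rec_t;

/* Padding after field k (0-based) of an aggregate that starts at offset 0:
 * end address minus (start + size). The end address is the next field's
 * offset, or the aggregate's size for the last field (tail padding). */
static size_t pad_after(const size_t *off, const size_t *size, size_t k,
                        size_t n, size_t aggregate_size) {
    size_t end = (k + 1 < n) ? off[k + 1] : aggregate_size;
    return end - (off[k] + size[k]);
}
```

On common x86-64 ABIs, rec_t is 12 bytes with padding of 3, 0 and 2 bytes after c, i and s; another compiler or device may produce different values, which is why the tags travel with the state.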

Since the padding and the variable tags of invoked subfunctions are not determined during pre-compilation, tag generation is executed at the checkpoint. When a checkpoint is requested, the variable tags are collected from all functions called so far, and the padding is then calculated by the functions inserted during pre-compilation. The Platform1 section of Fig. 11 illustrates this process: at the checkpoint, the called functions kernel, \(foo_0\) and \(foo_1\) have been registered in the CRState system, and the tag generator collects the variable information of these functions and creates the tags.

Tag interpretation is postponed until restart. As shown in Fig. 11, the tags are transferred to the new device with the state, and the tags generated there are compared with those from the previous device; the interpreter is activated only if they differ. For scalar types, alignment adjustment is a walk-through that relocates the start address of every variable field in memory. The interpreter defines two cursors pointing to the two memory blocks, as shown in Fig. 14, both starting at the first variable field. The contents of the field under the original cursor are copied to the current memory block, and both cursors advance through memory. Once the copy finishes, each cursor looks up the padding size in its respective tag and jumps to the next variable field, skipping the padding slots.
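
The two-cursor walk-through for scalar fields can be sketched as follows, assuming each field's tag supplies its data size and the padding that follows it. ftag_t and relocate are hypothetical names, and the sketch handles only fields that have already been flattened.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

typedef struct { size_t size, pad; } ftag_t;  /* data size, padding after it */

/* Walk both blocks field by field: copy the data bytes, then let each cursor
 * skip its own side's padding. `src_tags` describe the saved (old) layout and
 * `dst_tags` the layout generated on the new device. */
static void relocate(const unsigned char *src, const ftag_t *src_tags,
                     unsigned char *dst, const ftag_t *dst_tags, size_t n) {
    const unsigned char *s = src;   /* original cursor */
    unsigned char       *d = dst;   /* current cursor */
    for (size_t k = 0; k < n; k++) {
        assert(src_tags[k].size == dst_tags[k].size);  /* same data type */
        memcpy(d, s, src_tags[k].size);
        s += src_tags[k].size + src_tags[k].pad;
        d += dst_tags[k].size + dst_tags[k].pad;
    }
}
```

For a char followed by a short, a source layout with one padding byte after the char is relocated into a 4-byte-aligned layout by placing the short at offset 4 instead of 2, while the padding bytes themselves are never copied.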

For aggregate types, the walk-through is again the main process for relocating the start address of every variable field. With nested aggregate types, however, padding also occurs inside each nested layer, so the alignment information has to be parsed layer by layer. Two arrays, remaining element number (REM) and end aggregate address (EAA), therefore temporarily store the information of the upper layers while the interpreter handles variables in the lower layers. Both arrays are operated as stacks in LIFO order, with one entry per nested layer: REM stores the number of elements of that aggregate still to be handled, and EAA stores its end address. When the interpreter parses a nested aggregate type, the element count and the end address of the type are pushed onto the two arrays; the interpreter then expands the aggregate type and converts its elements. Figure 15 illustrates this expansion: a cursor identifies an aggregate type and jumps to the expanded layer. When another nested aggregate type appears, its information is pushed onto the arrays and its elements are converted next. Once a nested aggregate type finishes, REM notifies the interpreter to return to the upper layer and load that layer's information in order to resume, as shown in Fig. 16. Since the addresses of variables change, the interpreter also notifies the pointer restorer to update the contents of pointers.

Taking the example of Fig. 12, the second part of the tags, pdv_0.n0, is parsed during interpretation. The interpreter identifies it as an aggregate type and pushes its end address (\(EA_{n0}\)) and number of elements (\(EM_{n0}\)) onto the EAA and REM arrays. It then converts pdv_0.n0.a; when pdv_0.n0.a finishes, \(EM_{n0}\) is decremented by one. Next, pdv_0.n0.n1 is a nested aggregate type, so \(EA_{n0.n1}\) and \(EM_{n0.n1}\) are pushed onto EAA and REM, and \(EM_{n0}\) is decremented by one. Figure 17 shows the status of the two arrays at this moment. When pdv_0.n0.n1.a finishes, the interpreter reads REM and interprets pdv_0.n0.b. The pop operation in Fig. 17 illustrates this process.

4.10 Endianness handling

Endianness refers to the byte order in which multibyte data are stored. Depending on whether the most or the least significant byte is stored at the lowest address, there are two byte orders: big-endian and little-endian. Endianness makes no difference when a value is copied as a typed value, but it does matter when memory is copied as raw bytes, as happens when computation states are transferred between devices.

The CRState system saves the endianness of the current device at checkpoint, and this endianness tag is transferred to the new device with the computation states. In the restore process, the tag is compared with the endianness of the new device. If they do not match, CRState launches the endianness interpreter, which walks through the state to locate each variable and fix its byte order.
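
A sketch of the endianness check and the per-field walk-through, assuming the saved endianness tag is a single flag and that each field tag gives a scalar size and the padding that follows it. is_little_endian, swap_bytes, fix_endianness and ftag_t are hypothetical names. Single-byte fields, including the elements of char arrays, are left unchanged.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct { size_t size, pad; } ftag_t;  /* scalar size, padding after it */

/* Endianness of the running device: 1 = little-endian, 0 = big-endian. */
static int is_little_endian(void) {
    const uint16_t probe = 1;
    return *(const unsigned char *)&probe == 1;
}

/* Reverse the bytes of one scalar field in place (no-op for 1-byte fields). */
static void swap_bytes(unsigned char *p, size_t size) {
    for (size_t i = 0; i < size / 2; i++) {
        unsigned char t = p[i];
        p[i] = p[size - 1 - i];
        p[size - 1 - i] = t;
    }
}

/* Walk the saved block and swap every scalar field if the saved endianness
 * tag differs from the current device's. */
static void fix_endianness(unsigned char *block, const ftag_t *tags, size_t n,
                           int saved_little) {
    if (saved_little == is_little_endian()) return;  /* tags match: no work */
    for (size_t k = 0; k < n; k++) {
        swap_bytes(block, tags[k].size);
        block += tags[k].size + tags[k].pad;
    }
}
```

Matching the layout of Fig. 18, a short followed by two padding bytes and then an int is handled by swapping the two bytes of the short, skipping the padding, and reversing the four bytes of the int.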

Figure 18 shows a simple example of different endianness policies on two devices. The difference between little-endian and big-endian organization can be seen in the order of the bytes that compose a short and an int variable in memory. Each block represents one byte of memory with its address. The variables are assumed to be aligned on a 4-byte boundary, so the padding space is denoted as “NULL” in Fig. 18.

5 Experiment

To verify the feasibility and performance of the system, two groups of experiments on CRState were conducted. The first group was a homogeneous GPU test using two NVIDIA Tesla K80 GPUs with 24GB of graphics memory each; homogeneous means that the computation state was migrated from one K80 device to another identical one. The other group used different GPU devices: an AMD Radeon HD 7950 with 3GB of memory and an NVIDIA GeForce GTX 1070 with 8GB of graphics memory. The homogeneous setup uses an Intel Xeon E5-2603 v4 as the host CPU, and the heterogeneous setup uses an Intel Core i5-8500.

To demonstrate the stability and viability of CRState, a set of comprehensive test scenarios was run on the two platforms described above. These scenarios verify and validate all functions executed by CRState, covering five basic data types (int, float, double, char and bool) and aggregate types such as structures, arrays and unions. A variety of pointers to global, local and private memory were also built into the scenarios, and these pointers were set to point dynamically to different variables during program execution. The scenarios contained three nested subfunctions, each designed to exercise the checkpoint/restart mechanism.

To further evaluate the system, ten official benchmark programs were selected as test cases: Fast Fourier Transform (FFT), two-point angular correlation function (TPACF), K-means, LU Decomposition (LUD), Needleman-Wunsch (NW) for DNA sequence alignment, stencil 2D, the Lennard-Jones potential function from molecular dynamics (MD), MD5 hash, radix sort (RS) and primitive root (PR). These programs were selected from authoritative benchmark suites, including SPEC ACCEL [19], SHOC [8] and Rodinia [35], and were used to evaluate memory usage and execution time.

5.1 Performance of the homogeneous group

Before analyzing the performance of the system, we verified the consistency of the results by comparing the outputs of the original programs with those of the programs using the checkpoint/restart mechanism. The consistency check was divided into three parts: the first compares the computation state during migration, the second compares the relative offsets of the pointer variables in the computation state, and the third compares the program output. The original program was compared with versions performing one and two checkpoint/restart operations. In all tests, every scenario produced consistent results.

Each test case in the homogeneous setup was further divided into three categories: the native case, a one-checkpoint/restart case with CRState, and a two-checkpoint/restart case with CRState. These were compared to evaluate the performance of CRState.

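Next, we recorded the execution time of each test case as the performance indicator of the system. Every experiment was run ten times, and the average value was computed with Equation 6:

\[ \bar{T} = \frac{1}{10} \sum_{i=1}^{10} T_i \qquad (6) \]

where \(T_i\) is the execution time measured in the \(i\)-th run.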

As shown in Fig. 19, the growth in total execution time ranged from 0.5% to 5% for both the one- and two-checkpoint/restart cases. For a more precise comparison, we also measured only the execution time on the device side, excluding the startup time for creating the platform, searching for and launching devices, and preparing the input data on the host. Excluding this fixed startup cost amplifies the gap between the native and checkpoint/restart versions, which exposes the differences between benchmarks and the breakdown of the overhead. The results, in milliseconds, are listed in Tables 1 and 2. As shown in Fig. 20, device-side execution time generally increased by about 50%, with a few exceptions: MD, K-means and LUD. The overhead for MD was small, under \(0.5\%\), whereas the overheads of K-means and LUD grew by about \(150\%\). The differences between K-means, LUD and MD may depend on the amount of computation and on the computation states that must be stored. For example, if computation dominates the execution time, the time for storing computation states is negligible and the overhead grows little, and vice versa. Although there was a gap between the native and checkpoint/restart versions, the difference between one and two checkpoints was minimal. Overall, in terms of whole-program execution time, the growth of 0.5% to 5% shown in Fig. 19 was acceptable.

Figure 21 shows the key components of the overhead time in the ten benchmarks (bottom to top): checkpoint, restart, transfer to host, and write to GPU. Checkpoint is the backup time on the GPU and restart is the recovery time; transfer to host is the time for transferring the computation state from the GPU to the host, and write to GPU is the time for writing it back to the GPU. Although Fig. 21 shows no direct correlation among the individual test scenarios, two conclusions can be drawn. First, writing to the GPU from the host took far longer than transferring from the GPU to the host. Since the amount of data transferred in each direction was the same, the main reason for the time gap was that the memory management mechanism on the CPU was much more efficient than that on the GPU. Second, the blue and purple areas were balanced in general.

Memory usage is compared in Fig. 22 and listed in Tables 3 and 4. Memory consumption was about two to 2.5 times that of the native versions, because the data in private and local memory had to be duplicated into global memory before being transferred back to the host.

One GPU of the homogeneous setup was selected to monitor power consumption across the benchmarks, with and without checkpoint/restart. Figures 23, 24 and 25 show the GPU power consumption with checkpoint/restart using CRState. In general, device power consumption remained almost the same as without checkpoint/restart.

5.2 Performance of the heterogeneous group

Similar to the homogeneous setup, the consistency of the results for the heterogeneous system was verified in the first place. As expected, the data consistency was still guaranteed.

Next, we recorded and analyzed the time of the three test cases, comparing only the execution time on the device side. All data presented here are also averages over ten runs computed with Eq. 6, and the results are listed in Tables 5 and 6. Under heterogeneous conditions, the time grew by 30% to \(100\%\) in all benchmarks except MD, as shown in Figure 24. For MD, computation-state switches are cheap relative to its computation time, so they have little effect. On average, the time increased by about \(50\%\), similar to the homogeneous setup. However, for some benchmarks, such as TPACF and K-means, the programs with one or two checkpoints took less time than in the homogeneous group, while others showed the opposite. On the one hand, because the K80 performs far better than the two GPUs of the heterogeneous system, computation accounts for a relatively low proportion of its execution time, so the other overhead components weigh more than in the heterogeneous system. In other words, the difference in hardware between the systems may have led to this result. On the other hand, in the OpenCL framework, migrating the computation state requires not only switching to a different device but also changing the context associated with that device.

In Fig. 25, the write-to-GPU area is still considerably larger than the transfer-to-host area in almost all cases, as in the homogeneous setup, which can likewise be explained by the difference in data access time between the CPU and GPU. Figure 25 also reveals that the gap between backup time and recovery time varies significantly. This is expected, since the second group is a heterogeneous platform in which the source and destination devices differ; as a result, the checkpoint and restart regions vary considerably across test cases.

Taking the FFT benchmark as an example, Fig. 26 shows the four key components of the overhead relative to the whole execution. Each accounts for roughly \(20\%\), and other factors, such as in-kernel scheduling on the GPU and re-launch time, also take a certain share of the total cost.

Memory usage is the same as in the homogeneous system, as shown in Fig. 22: the computation states needed for migration did not change, so the memory allocated for migration also remained unchanged.

The system with the NVIDIA GeForce GTX 1070 was selected to compare power consumption with and without checkpoint/restart using CRState. As Fig. 27 shows, power consumption was roughly flat.

In short, CRState performed satisfactorily in terms of execution time and power consumption, although its memory usage was large. However, the increase in memory usage was generally fixed: the data in private and local memory had to be duplicated into global memory before being transferred back to the host, so these data occupied twice the space. Overall, CRState provides an effective mechanism for checkpoint/restart of GPU computations.

6 Conclusion

This paper proposes a feasible checkpoint/restart system, CRState, for GPU applications on homogeneous and heterogeneous devices. The system's pre-compiler transforms the source code into a checkpointable form by collecting variables, inserting auxiliary functions and partitioning the code. With the pre-compiler's assistance, the system identifies the computation states in the underlying hardware and reconstructs them on a heterogeneous device.

Although software checkpointing approaches incur heavier overheads than hardware ones, they are the only portable choice across heterogeneous devices. The comprehensive examples and the experimental results of ten benchmark programs demonstrate the feasibility and effectiveness of the proposed system. As the results show, the overhead is acceptable, and the device power consumption remains almost the same as without the checkpoint/restart technique. Sources of performance overhead have been analyzed by comparing the homogeneous and heterogeneous groups.

Future work includes optimizing the algorithm to reduce overheads and improving robustness across heterogeneous devices through larger-scale and more complex tests.

References

Ansel J, Arya K, Cooperman G (2009) DMTCP: Transparent checkpointing for cluster computations and the desktop. In: IEEE International Symposium on Parallel & Distributed Processing, pp 1–12

Arora R, Bangalore P, Mernik M (2011) A technique for non-invasive application-level checkpointing. J Supercomput 57(3):227–255

Bitsavers AK (2008) Principles of operation: type 701 and associated equipment (from IBM manual). Annals of the History of Computing 5(2):164–166

Bozyigit M, Al-Tawil K, Naseer S (2000) A kernel integrated task migration infrastructure for clusters of workstations. Comput Electr Eng 26(3):279–295

Bronevetsky G, Marques D, Pingali K, Stodghill P (2003) Automated application-level checkpointing of MPI programs. pp 84–94

Butt A, Zhang R, Hu Y (2003) A self-organizing flock of Condors. https://doi.org/10.1145/1048935.1050192

Chen T, Raghavan R, Dale JN, Iwata E (2007) Cell Broadband Engine architecture and its first implementation–a performance view. IBM J Res Dev 51(5):559–572

Danalis A, Marin G, Mccurdy C, Meredith JS, Roth PC, Spafford K, Tipparaju V, Vetter JS (2010) The scalable heterogeneous computing (SHOC) benchmark suite. In: Workshop on general-purpose computation on graphics processing units

Ferreira KB, Riesen R, Brightwell R, Bridges P, Arnold D (2011) libhashckpt: hash-based incremental checkpointing using GPU’s. Springer, Berlin Heidelberg

Flores I (1972) B72–26 computer organization and the system/370. IEEE Trans Comput C–21(12):1458–1459

Frey J, Tannenbaum T, Livny M, Foster I, Tuecke S (2002) Condor-G: a computation management agent for multi-institutional grids. Cluster Comput 5(3):237–246

Gavrilovska A, Kumar S, Raj H, Schwan K, Gupta V, Nathuji R, Niranjan R, Ranadive A, Saraiya P (2007) High-performance hypervisor architectures: virtualization in hpc systems. Workshop on system

Gioiosa R, Sancho JC, Jiang S, Petrini F, Davis K (2005) Transparent, incremental checkpointing at kernel level: a foundation for fault tolerance for parallel computers

Gleeson J, Kats D, Mei C, Lara ED (2017) Crane: fast and migratable GPU passthrough for OpenCL applications. In: ACM International Systems and Storage Conference, p 11

Gottschlag M, Hillenbrand M, Kehne J, Stoess J, Bellosa F (2013) LoGV: low-overhead GPGPU virtualization

Khronos OpenCL Working Group (2017) The OpenCL specification. Khronos Group

Jiang H, Ji Y (2010) State-carrying code for computation mobility. Handbook of Research on Scalable Computing Technologies

Jiang H, Zhang Y, Jennes J, Li KC (2013) A checkpoint/restart scheme for CUDA programs with complex computation states. IJNDC 1(4):196

Juckeland G, Brantley W, Chandrasekaran S, Chapman B, Shuai C, Colgrove M, Feng H, Grund A, Henschel R, Hwu WMW (2014) SPEC ACCEL: a standard application suite for measuring hardware accelerator performance. In: PMBS

Kang J, Yu H (2018) Mitigation technique for performance degradation of virtual machine owing to GPU pass-through in fog computing. J Commun Netw 20(3):257–265. https://doi.org/10.1109/JCN.2018.000038

Laadan O, Nieh J (2007) Transparent checkpoint-restart of multiple processes on commodity operating systems. In: USENIX Annual Technical Conference, June 17–22, 2007, Santa Clara, CA, USA, pp 323–336

Lama P, Li Y, Aji AM, Balaji P, Dinan J, Xiao S, Zhang Y, Feng W, Thakur R, Zhou X (2013) pVOCL: Power-aware dynamic placement and migration in virtualized GPU environments. In: 2013 IEEE 33rd International Conference on Distributed Computing Systems, pp 145–154. https://doi.org/10.1109/ICDCS.2013.51

Leskela J, Nikula J, Salmela M (2009) OpenCL embedded profile prototype in mobile device. In: SiPS 2009. IEEE Workshop on Signal Processing Systems, 2009, pp 279–284

Macedonia M (2003) The GPU enters computing’s mainstream. IEEE Computer Society Press, New York

Mead C, Conway L (1980) Introduction to VLSI systems. Addison-Wesley, Cambridge

Milojicic DS, Paindaveine Y (1996) Process vs. task migration 1:636

Monperrus M (2018) Automatic software repair: a bibliography. ACM Comput Surv 51(1):17:1–17:24. https://doi.org/10.1145/3105906

Nukada A, Takizawa H, Matsuoka S (2011) NVCR: A transparent checkpoint-restart library for NVIDIA CUDA. In: IEEE International Symposium on Parallel and Distributed Processing Workshops and PhD Forum, pp 104–113

Owens J, Luebke D, Govindaraju N, Harris M, Krüger J, Lefohn A, Purcell T (2007) A survey of general-purpose computation on graphics hardware. Comput Gr Forum 26(1):80–113. https://doi.org/10.1111/j.1467-8659.2007.01012.x

Owens JD, Houston M, Luebke D, Green S, Stone JE, Phillips JC (2008) GPU computing. Proc IEEE 96(5):879–899

Altera (2013) Implementing FPGA design with the OpenCL standard. White paper, Altera

Hargrove PH, Duell JC (2006) Berkeley Lab Checkpoint/Restart (BLCR) for Linux clusters. In: Journal of Physics: Conference Series, p 494

Pourghassemi B, Chandramowlishwaran A (2017) cudaCR: An in-kernel application-level checkpoint/restart scheme for CUDA-enabled GPUs. In: IEEE International Conference on CLUSTER Computing, pp 725–732

Sajjapongse K, Wang X, Becchi M (2013) A preemption-based runtime to efficiently schedule multi-process applications on heterogeneous clusters with GPUs. In: International Symposium on High-Performance Parallel and Distributed Computing, pp 179–190

Shuai C, Boyer M, Meng J, Tarjan D, Sheaffer JW, Lee SH, Skadron K (2009) Rodinia: a benchmark suite for heterogeneous computing. In: IEEE International Symposium on Workload Characterization

Suzuki T, Nukada A, Matsuoka S (2015) Efficient execution of multiple CUDA applications using transparent suspend, resume and migration 9233, 687–699

Takizawa H, Koyama K, Sato K, Komatsu K, Kobayashi H (2011) CheCL: Transparent checkpointing and process migration of OpenCL applications. In: Parallel & Distributed Processing Symposium, pp 864–876

Takizawa H, Sato K, Komatsu K, Kobayashi H (2010) CheCUDA: a checkpoint/restart tool for CUDA applications. In: International Conference on Parallel and Distributed Computing, Applications and Technologies, pp 408–413

Tien TR, You YP (2014) Enabling OpenCL support for GPGPU in kernel-based virtual machine. Softw Pract Exp 44(5):483–510

Wu TY, Lee WT, Duan CY, Suen TW (2013) Enhancing cloud-based servers by GPU/CPU virtualization management. Springer, Berlin

Xiao S, Balaji P, Dinan J, Zhu Q, Thakur R, Coghlan S, Lin H, Wen G, Hong J, Feng WC (2012) Transparent accelerator migration in a virtualized GPU environment. In: IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing, pp 124–131

Xiao S, Balaji P, Zhu Q, Thakur R, Coghlan S, Lin H, Wen G, Hong J, Feng WC (2012) VOCL: An optimized environment for transparent virtualization of graphics processing units. In: Innovative Parallel Computing, pp 1–12

Zhou H, Geist A (1995) “receiver makes right” data conversion in pvm. In: Proceedings International Phoenix Conference on Computers and Communications, pp 458–464. https://doi.org/10.1109/PCCC.1995.472453

This work was supported by the Natural Science Foundation of Zhejiang Province (Grant No. LY20F020001).

Cite this article

Chen, G., Zhang, J., Zhu, Z. et al. CRState: checkpoint/restart of OpenCL program for in-kernel applications. J Supercomput 77, 5426–5467 (2021). https://doi.org/10.1007/s11227-020-03460-2

DOI: https://doi.org/10.1007/s11227-020-03460-2