Abstract

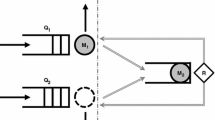

We consider a two-dimensional time-varying tandem queue with coupled processors. We assume that jobs arrive at the first station according to a non-homogeneous Poisson process. When both queues are non-empty, jobs are processed separately, as in an ordinary tandem queue. However, if one of the queues is empty, then the total service capacity is given to the other processor. This problem has been analyzed in the constant-rate case by leveraging Riemann-Hilbert theory and two-dimensional generating functions. Since we consider time-varying arrival rates, generating functions cannot be used as easily. Thus, we choose to exploit the functional Kolmogorov forward equations (FKFE) for the two-dimensional queueing process. To leverage the FKFE, it is necessary to approximate the queueing distribution in order to compute the relevant expectation and covariance terms. To this end, we expand our two-dimensional Markovian queueing process in a two-dimensional polynomial chaos expansion using the Hermite polynomials as basis elements. Truncating the polynomial chaos expansion at a finite order induces an approximate distribution that is close to that of the original stochastic process. Using this truncated expansion as a surrogate distribution, we can accurately estimate probabilistic quantities of the two-dimensional queueing process such as the mean, variance, and probability that each queue is empty.

References

Askey, R., & Wilson, J. (1985). Some basic hypergeometric orthogonal polynomials that generalize Jacobi polynomials. Memoirs of the American Mathematical Society, 54, 1–55.

Andradottir, S., Ayhan, H., & Down, D. (2001). Server assignment policies for maximizing the steady state throughput of finite state queueing systems. Management Science, 47, 1421–1439.

Blanc, J. P. C. (1988). A numerical study of a coupled processor model. Computer Performance and Reliability, 2, 289–303.

Blanc, J. P. C., Iasnogorodski, R., & Nain, Ph. (1988). Analysis of the \(M/G/1 \rightarrow ./M/1\) Model. Queueing Systems, 3, 129–156.

Boxma, O., & Ivanovs, J. (2013). Two coupled Levy queues with independent input. Stochastic Systems, 3(2), 574–590.

Cameron, R., & Martin, W. (1947). The orthogonal development of non-linear functionals in series of Fourier-Hermite functionals. Annals of Mathematics, 48, 385–392.

Cohen, J. W., & Boxma, O. (2000). Boundary Value Problems in Queueing System Analysis. Oxford: Elsevier.

Engblom, S., & Pender, J. (2014). Approximations for the Moments of Nonstationary and State Dependent Birth-Death Queues. Cornell University. Available at: http://www.columbia.edu/~jp3404

Fayolle, G., & Iasnogorodski, R. (1979). Two Coupled Processors: The reduction to a Riemann-Hilbert Problem. Zeitschrift für Wahrscheinlichkeitstheorie und verwandte Gebiete, 47, 325–351.

Knessl, C., & Morrison, J. A. (2003). Heavy traffic analysis of two coupled processors. Queueing Systems, 30, 173–220.

Knessl, C. (1991). On the diffusion approximation to two parallel queues with processor sharing. IEEE Transactions on Automatic Control, 30, 173–220.

Konheim, A. G., Meilijson, I., & Melkman, A. (1981). Processor sharing of two parallel lines. Journal of Applied Probability, 18, 952–956.

van Leeuwaarden, J., & Resing, J. A. C. (2005). Tandem queue with coupled processors: Computational issues. Queueing Systems, 50, 29–52.

Mandelbaum, A., Massey, W. A., & Reiman, M. (1998). Strong approximations for Markovian service networks. Queueing Systems, 30, 149–201.

Massey, W. A., & Pender, J. (2011). Skewness variance approximation for dynamic rate multi-server queues with abandonment. Performance Evaluation Review, 39, 74–74.

Massey, W. A., & Pender, J. (2013). Gaussian skewness approximation for dynamic rate multi-server queues with abandonment. Queueing Systems, 75, 243–277.

Massey, W. A., & Pender, J. (2014). Approximating and Stabilizing Dynamic Rate Jackson Networks with Abandonment. Cornell University. Available at: http://www.columbia.edu/~jp3404

Osogami, T., Harchol-Balter, M., & Scheller-Wolf, A. (2003). Analysis of cycle stealing with switching cost. ACM Sigmetrics, 31, 184–195.

Ogura, H. (1972). Orthogonal functionals of the Poisson process. IEEE Transactions on Information Theory, 18, 473–481.

Pender, J. (2014). Gram Charlier expansions for time varying multiserver queues with abandonment. SIAM Journal on Applied Mathematics, 74(4), 1238–1265.

Pender, J. (2013). Laguerre Polynomial Approximations for Nonstationary Queues, Cornell University. Available at: http://www.columbia.edu/~jp3404

Pender, J. (2015). Nonstationary loss queues via cumulant moment approximations, Cornell University. Probability in the Engineering and Informational Sciences, 29(1), 27–49.

Pender, J. (2014). Sampling the Functional Forward Equations: Applications to Nonstationary Queues. Cornell University. Technical Report. Available at: http://www.columbia.edu/~jp3404

Pender, J. (2014). Gaussian Approximations for Nonstationary Loss Networks. Cornell University. Technical Report. Available at: http://www.columbia.edu/~jp3404

Resing, J., & Ormeci, L. (2003). A tandem queueing model with coupled processors. Operations Research Letters, 31, 383–389.

Stein, C. M. (1986). Approximate Computation of Expectations (Vol. 7). Lecture Notes Monograph Series. Hayward: Institute of Mathematical Statistics.

Wright, P. E. (1992). Two parallel processors with coupled inputs. Advances in Applied Probability, 24, 986–1007.

Xiu, D., & Karniadakis, G. E. (2002). The Wiener-Askey polynomial chaos for stochastic differential equations. SIAM Journal on Scientific Computing, 24(2), 619–644.

Acknowledgments

This work is partially supported by a Ford Foundation Fellowship and Cornell University.

Appendix

1.1 Hermite polynomials

Lemma 5.1

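(Stein [26]). The random variable \(X\) is Gaussian\((0,1)\) if and only if

\[ \mathbb{E}\left[ X \cdot f(X) \right] = \mathbb{E}\left[ f'(X) \right] \]

for all generalized functions \(f\). Moreover, we also have that

\[ \mathbb{E}\left[ h_n(X) \cdot f(X) \right] = \mathbb{E}\left[ f^{(n)}(X) \right], \]

where \(h_n(X)\) is the \(n^{th}\) Hermite polynomial.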
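The Hermite form of Stein's lemma, \(\mathbb{E}[h_n(X) f(X)] = \mathbb{E}[f^{(n)}(X)]\), can be checked numerically. The sketch below is illustrative only: it assumes the probabilists' normalization of the Hermite polynomials (\(h_1(x) = x\), \(h_2(x) = x^2 - 1\)), uses Gauss-Hermite quadrature from NumPy, and takes \(f(x) = \sin x\) as an arbitrary smooth test function. The helper names are hypothetical.

```python
import numpy as np
from numpy.polynomial import hermite_e as He

# Gauss-Hermite nodes and weights for the weight function exp(-x^2 / 2).
# The raw weights sum to sqrt(2*pi), so we rescale them to integrate
# against the Gaussian(0,1) density.
nodes, weights = He.hermegauss(60)
weights = weights / np.sqrt(2.0 * np.pi)

def gaussian_expectation(g):
    """Approximate E[g(X)] for X ~ Gaussian(0,1) by quadrature."""
    return float(np.sum(weights * g(nodes)))

def hermite(n, x):
    """Evaluate the n-th probabilists' Hermite polynomial h_n at x."""
    coeffs = np.zeros(n + 1)
    coeffs[n] = 1.0
    return He.hermeval(x, coeffs)

# Assumed test function f(x) = sin(x); its derivatives cycle with period 4.
derivatives = [np.sin, np.cos, lambda x: -np.sin(x), lambda x: -np.cos(x)]

def stein_gap(n):
    """Return |E[h_n(X) f(X)] - E[f^(n)(X)]| for f = sin."""
    lhs = gaussian_expectation(lambda x: hermite(n, x) * np.sin(x))
    rhs = gaussian_expectation(derivatives[n % 4])
    return abs(lhs - rhs)
```

Calling `stein_gap(n)` for small `n` should return values near machine precision, since both sides are smooth integrals that the quadrature resolves well.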

Let \(X\) and \(Y\) be two i.i.d. Gaussian(0,1) random variables (Fig. 8).

Proposition 5.2

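Any \(L^2\) function can be written as an infinite sum of Hermite polynomials of \(X\), i.e.

\[ f(X) = \sum_{n=0}^{\infty} \frac{1}{n!} \, \mathbb{E}\left[ f^{(n)}(X) \right] h_n(X), \]

and

\[ f(X,Y) = \sum_{m=0}^{\infty} \sum_{n=0}^{\infty} \frac{1}{m! \, n!} \, \mathbb{E}\left[ \frac{\partial^{m+n} f}{\partial X^m \, \partial Y^n}(X,Y) \right] h_m(X) \, h_n(Y). \]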
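To make the expansion concrete, the following sketch computes truncated Hermite coefficients for a one-dimensional function. It assumes the standard coefficient formula \(c_n = \mathbb{E}[f(X) h_n(X)]/n!\) for probabilists' Hermite polynomials, which by the Hermite form of Stein's lemma equals \(\mathbb{E}[f^{(n)}(X)]/n!\). The function names and the test function \(f(x) = e^{x/2}\) are illustrative choices, not part of the original analysis.

```python
import math
import numpy as np
from numpy.polynomial import hermite_e as He

# Quadrature rule rescaled to integrate against the Gaussian(0,1) density.
nodes, weights = He.hermegauss(80)
weights = weights / np.sqrt(2.0 * np.pi)

def hermite_coefficients(f, order):
    """Return c_0, ..., c_order with c_n = E[f(X) h_n(X)] / n!."""
    coeffs = []
    for n in range(order + 1):
        basis = np.zeros(n + 1)
        basis[n] = 1.0
        h_n = He.hermeval(nodes, basis)
        coeffs.append(np.sum(weights * f(nodes) * h_n) / math.factorial(n))
    return np.array(coeffs)

def truncated_expansion(coeffs, x):
    """Evaluate sum_n c_n h_n(x) for the given coefficient vector."""
    return He.hermeval(x, coeffs)

# Assumed test function: f(x) = exp(t x) with t = 0.5, whose exact
# coefficients are exp(t^2 / 2) * t^n / n! by the Hermite generating function.
t = 0.5
coeffs = hermite_coefficients(lambda x: np.exp(t * x), order=8)
exact = np.array([math.exp(t * t / 2) * t**n / math.factorial(n) for n in range(9)])
```

Here `coeffs` and `exact` should agree closely, and `truncated_expansion(coeffs, 1.0)` should already be close to `math.exp(0.5)` at order 8, which illustrates why a low-order truncation can serve as a usable surrogate for smooth functions.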

1.2 Calculation of expectation and covariance terms

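We define \(\varphi \) and \(\Phi \) to be the density and cumulative distribution functions of \(X\), respectively, where

\[ \varphi(x) = \frac{1}{\sqrt{2\pi}} \, e^{-x^2/2}, \qquad \Phi(x) = \int_{-\infty}^{x} \varphi(y) \, dy. \]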

We begin with some of the simpler expectation terms that only involve the evaluation of the Gaussian tail cdf.
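As an illustration of the kind of term involved, the sketch below assumes a first-order (purely Gaussian) surrogate \(Q = q + \sigma X\) for a single queue length, where \(q\) and \(\sigma\) are hypothetical parameter names introduced here. Under that assumption, \(\mathbb{E}[\{Q > 0\}] = \Phi(q/\sigma)\) and \(\mathbb{E}[Q \cdot \{Q > 0\}] = q \, \Phi(q/\sigma) + \sigma \, \varphi(q/\sigma)\); higher-order truncations would add further terms that are not shown.

```python
import math

def phi(x):
    """Standard Gaussian density."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def Phi(x):
    """Standard Gaussian cumulative distribution function."""
    return 0.5 * math.erfc(-x / math.sqrt(2.0))

def prob_positive(q, sigma):
    """P(Q > 0) for the assumed surrogate Q = q + sigma * X.

    This is the Gaussian tail probability P(X > -q / sigma).
    """
    return Phi(q / sigma)

def mean_on_positive(q, sigma):
    """E[Q * 1{Q > 0}] for the assumed surrogate Q = q + sigma * X."""
    chi = q / sigma
    return q * Phi(chi) + sigma * phi(chi)
```

For example, `1.0 - prob_positive(q, sigma)` gives the surrogate's estimate of the probability that the queue is empty under this assumed form.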

Now we use the previous proposition to derive the following expectations. Using the \(L^2\) expansion of the function, we get an infinite series representation for the first line. To move from the second to the third line, we use the fact that the indicator function \(\{ Q_1 > 0 \}\) does not depend on \(Y\). Lastly, we use the Hermite polynomial generalization of Stein’s lemma (Fig. 9).
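The final step relies on applying the Hermite form of Stein's lemma to an indicator function. For a threshold \(\chi\) (a hypothetical symbol used here) and \(n \ge 1\), the standard identity is \(\mathbb{E}[h_n(X) \cdot \{X > \chi\}] = \varphi(\chi) \, h_{n-1}(\chi)\), which follows from \(\frac{d}{dx}\left[h_{n-1}(x)\varphi(x)\right] = -h_n(x)\varphi(x)\). The sketch below, which assumes probabilists' Hermite polynomials, compares this closed form with direct numerical integration.

```python
import math
import numpy as np
from numpy.polynomial import hermite_e as He

def hermite(n, x):
    """Evaluate the n-th probabilists' Hermite polynomial h_n at x."""
    coeffs = np.zeros(n + 1)
    coeffs[n] = 1.0
    return He.hermeval(x, coeffs)

def phi(x):
    """Standard Gaussian density."""
    return np.exp(-0.5 * x**2) / math.sqrt(2.0 * math.pi)

def tail_hermite_closed_form(n, chi):
    """phi(chi) * h_{n-1}(chi), the closed form for n >= 1."""
    return float(phi(chi) * hermite(n - 1, chi))

def tail_hermite_numeric(n, chi, upper=12.0, points=200001):
    """Trapezoidal estimate of the integral of h_n(x) phi(x) over [chi, upper].

    The upper limit stands in for infinity; the Gaussian tail beyond it
    is negligible for moderate n.
    """
    x = np.linspace(chi, upper, points)
    y = hermite(n, x) * phi(x)
    dx = x[1] - x[0]
    return float(dx * (y.sum() - 0.5 * (y[0] + y[-1])))
```

The two functions should agree to several digits for small `n` and moderate `chi`, which is the property that lets each term of the series collapse to a density evaluation.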

The following two expectations can be calculated easily using the previous calculations.

Now we begin the calculation of the covariance terms with respect to the first queue length. From the first line to the second we use the property that covariances are invariant to the addition of constants. Then, we use the Hermite polynomial expansion property and the Hermite polynomial generalization of Stein’s lemma once again (Fig. 10).
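A simplified instance shows how Stein's lemma turns such covariances into Gaussian density and cdf evaluations. The sketch assumes the first-order surrogate \(Q = q + \sigma X\) (with \(q\) and \(\sigma\) as hypothetical names), for which \(\mathrm{Cov}[Q, \{Q > 0\}] = \sigma \, \varphi(q/\sigma)\) and \(\mathrm{Cov}[Q, Q \cdot \{Q > 0\}] = \sigma^2 \, \Phi(q/\sigma)\). It checks both against a seeded Monte Carlo estimate; it is not the full two-dimensional calculation.

```python
import math
import numpy as np

def phi(x):
    """Standard Gaussian density."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def Phi(x):
    """Standard Gaussian cumulative distribution function."""
    return 0.5 * math.erfc(-x / math.sqrt(2.0))

def cov_queue_indicator(q, sigma):
    """Cov[Q, 1{Q > 0}] for the assumed surrogate Q = q + sigma * X."""
    return sigma * phi(q / sigma)

def cov_queue_positive_part(q, sigma):
    """Cov[Q, Q * 1{Q > 0}] for the assumed surrogate Q = q + sigma * X."""
    return sigma**2 * Phi(q / sigma)

def monte_carlo_covariances(q, sigma, samples=1_000_000, seed=0):
    """Estimate both covariances by simulation with a fixed seed."""
    rng = np.random.default_rng(seed)
    Q = q + sigma * rng.standard_normal(samples)
    indicator = (Q > 0).astype(float)
    centered = Q - Q.mean()  # centering Q uses invariance to added constants
    cov_ind = float(np.mean(centered * indicator))
    cov_pos = float(np.mean(centered * Q * indicator))
    return cov_ind, cov_pos
```

Comparing `monte_carlo_covariances(1.0, 2.0)` with the two closed forms gives agreement up to sampling error, which is a quick sanity check on the sign and scaling of each term.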

For the next two covariance terms, we use the previous covariance term in the calculation.

Now we begin the calculation of the covariance terms with respect to the second queue length. From the first line to the second we use the property that covariances are invariant to the addition of constants. Then, we use the Hermite polynomial expansion property and the Hermite polynomial generalization of Stein’s lemma once again.

Lastly, for the next two covariance terms, we use the previous covariance term in the calculation.

Cite this article

Pender, J. An analysis of nonstationary coupled queues. Telecommun Syst 61, 823–838 (2016). https://doi.org/10.1007/s11235-015-0039-0

DOI: https://doi.org/10.1007/s11235-015-0039-0