Abstract

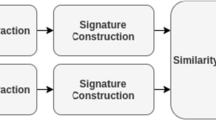

This paper considers the task of image search using the Bag-of-Words (BoW) model. In this model, the precision of visual matching plays a critical role. Conventionally, local cues of a keypoint, e.g., SIFT, are employed. However, such a strategy does not consider the contextual evidence of a keypoint, which leads to a prevalence of false matches. To address this problem and enable accurate visual matching, this paper proposes to integrate discriminative cues from multiple contextual levels, i.e., local, regional, and global, via probabilistic analysis. A “true match” is defined as a pair of keypoints corresponding to the same scene location on all three levels (Fig. 1). Specifically, a Convolutional Neural Network (CNN) is employed to extract features from regional and global patches. We show that the CNN feature is complementary to SIFT due to its semantic awareness and compares favorably to several other descriptors such as GIST and HSV. To reduce memory usage, we propose to index CNN features outside the inverted file and access them through memory-efficient pointers. Experiments on three benchmark datasets demonstrate that our method greatly improves search accuracy when the CNN feature is integrated. We show that our method is efficient in terms of time cost compared with the BoW baseline, and yields accuracy competitive with the state of the art.

Acknowledgments

This work was supported by the National High Technology Research and Development Program of China (863 program) under Grant No. 2012AA011004 and the National Science and Technology Support Program under Grant No. 2013BAK02B04. This work was supported in part by ARO grant W911NF-15-1-0290 to Dr. Qi Tian and by Faculty Research Gift Awards from NEC Laboratories of America and Blippar. This work was also supported in part by the National Natural Science Foundation of China (NSFC) under Grant No. 61429201.

Additional information

Communicated by Svetlana Lazebnik.

Cite this article

Zheng, L., Wang, S., Wang, J. et al. Accurate Image Search with Multi-Scale Contextual Evidences. Int J Comput Vis 120, 1–13 (2016). https://doi.org/10.1007/s11263-016-0889-2

DOI: https://doi.org/10.1007/s11263-016-0889-2