Abstract

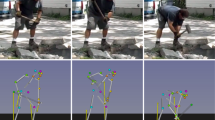

Recent advances in image-based human pose estimation make it possible to capture 3D human motion from a single RGB video. However, the inherent depth ambiguity and self-occlusion of a single view prevent the recovered motion from matching the quality of multi-view reconstruction. While multi-view videos are uncommon, videos of a person performing a specific action are usually abundant on the Internet. Even though these videos were recorded at different times, they encode the same motion characteristics of the person. We therefore propose to capture human motion by jointly analyzing these Internet videos rather than processing each video separately. This new task poses challenges that existing methods cannot address: the videos are unsynchronized, the camera viewpoints are unknown, the background scenes differ, and the human motions are not exactly the same across videos. To address these challenges, we propose a novel optimization-based framework and experimentally demonstrate that it recovers much more precise and detailed motion from multiple videos than monocular pose estimation methods.

Acknowledgements

The authors would like to acknowledge support from NSFC (No. 62172364).

Additional information

Communicated by Jun Sato.

Junting Dong and Qing Shuai have contributed equally to this work.

Supplementary Information

Below is the link to the electronic supplementary material.

Supplementary file 1 (mp4 49936 KB)

Cite this article

Dong, J., Shuai, Q., Sun, J. et al. iMoCap: Motion Capture from Internet Videos. Int J Comput Vis 130, 1165–1180 (2022). https://doi.org/10.1007/s11263-022-01596-7

DOI: https://doi.org/10.1007/s11263-022-01596-7