Abstract

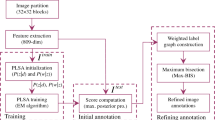

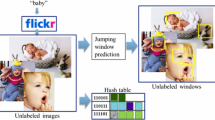

This paper presents a generalized relevance model for automatic image annotation that learns the correlations between images and annotation keywords. Unlike previous relevance models, which can only propagate keywords from the training images to the test images, the proposed model can also propagate keywords among the test images. We also give a convergence analysis of the iterative algorithm inspired by the proposed model. Moreover, to estimate the joint probability of observing an image with possible annotation keywords, we define the inter-image relations by proposing a new spatial Markov kernel based on 2D Markov models. The main advantage of this spatial Markov kernel is that it exploits the intra-image context for automatic image annotation, unlike traditional bag-of-words methods. Experiments on two standard image databases demonstrate that the proposed model outperforms state-of-the-art annotation models.


References

Li, J., & Wang, J. (2003). Automatic linguistic indexing of pictures by a statistical modeling approach. IEEE Transactions on Pattern Analysis and Machine Intelligence, 25(9), 1075–1088.

Gao, Y., Fan, J., Xue, X., & Jain, R. (2006). Automatic image annotation by incorporating feature hierarchy and boosting to scale up SVM classifiers. In Proc. ACM multimedia (pp. 901–910).

Chang, E., Goh, K., Sychay, G., & Wu, G. (2003). CBSA: Content-based soft annotation for multimodal image retrieval using Bayes point machines. IEEE Transactions on Circuits and Systems for Video Technology, 13(1), 26–38.

Jeon, J., Lavrenko, V., & Manmatha, R. (2003). Automatic image annotation and retrieval using cross-media relevance models. In Proc. SIGIR (pp. 119–126).

Lavrenko, V., Manmatha, R., & Jeon, J. (2004). A model for learning the semantics of pictures. In Advances in neural information processing systems (Vol. 16, pp. 553–560).

Feng, S., Manmatha, R., & Lavrenko, V. (2004). Multiple Bernoulli relevance models for image and video annotation. In Proc. CVPR (pp. 1002–1009).

Liu, J., Wang, B., Li, M., Li, Z., Ma, W., Lu, H., et al. (2007). Dual cross-media relevance model for image annotation. In Proc. ACM multimedia (pp. 605–614).

Lu, Z., & Ip, H. (2009). Image categorization by learning with context and consistency. In Proc. CVPR (pp. 2719–2726).

Lu, Z., & Ip, H. (2010). Combining context, consistency, and diversity cues for interactive image categorization. IEEE Transactions on Multimedia, 12(3), 194–203.

Li, J., Najmi, A., & Gray, R. (2000). Image classification by a two-dimensional hidden Markov model. IEEE Transactions on Signal Processing, 48(2), 517–533.

Rabiner, L. (1989). A tutorial on hidden Markov models and selected applications in speech recognition. Proceedings of the IEEE, 77(2), 257–286.

Salzenstein, F., & Collet, C. (2006). Fuzzy Markov random fields versus chains for multispectral image segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 28(11), 1753–1767.

Hofmann, T. (2001). Unsupervised learning by probabilistic latent semantic analysis. Machine Learning, 41, 177–196.

Lu, Z., Peng, Y., & Ip, H. (2010). Image categorization via robust pLSA. Pattern Recognition Letters, 31(1), 36–43.

Lazebnik, S., Schmid, C., & Ponce, J. (2006). Beyond bags of features: Spatial pyramid matching for recognizing natural scene categories. In Proc. CVPR (pp. 2169–2178).

Shotton, J., Winn, J., Rother, C., & Criminisi, A. (2009). TextonBoost for image understanding: Multi-class object recognition and segmentation by jointly modeling texture, layout, and context. International Journal of Computer Vision, 81(1), 2–23.

Duygulu, P., Barnard, K., de Freitas, N., & Forsyth, D. (2002). Object recognition as machine translation: Learning a lexicon for a fixed image vocabulary. In Proc. ECCV (pp. 97–112).

Liu, J., Li, M., Liu, Q., Lu, H., & Ma, S. (2009). Image annotation via graph learning. Pattern Recognition, 42(2), 218–228.

Liu, J., Wang, B., Lu, H., & Ma, S. (2008). A graph-based image annotation framework. Pattern Recognition Letters, 29(4), 407–415.

Yu, F., & Ip, H. (2006). Automatic semantic annotation of images using spatial hidden Markov model. In Proc. ICME (pp. 305–308).

Yu, F., & Ip, H. (2008). Semantic content analysis and annotation of histological images. Computers in Biology and Medicine, 38(6), 635–649.

Makadia, A., Pavlovic, V., & Kumar, S. (2008). A new baseline for image annotation. In Proc. ECCV (pp. 316–329).

Golub, G., & Van Loan, C. (1989). Matrix computations. Baltimore: The Johns Hopkins University Press.

Acknowledgements

The work described in this paper was supported by a grant from the Research Council of Hong Kong SAR, China (Project No. CityU 114007) and a grant from City University of Hong Kong (Project No. 7008040).


Appendix


This appendix presents the proof of Theorem 1. First, we can obtain from Eq. 10 that

Since the sum of each row of S is bounded by 1 (i.e. \(\sum_{J\in \mathcal{U}}P(J|I)\leq 1\)), the Perron–Frobenius theorem [23] implies that the spectral radius of S satisfies \(\rho(S) \leq 1\). Moreover, since \(0 < \alpha < 1\), it follows that \(\rho(\alpha S) = \alpha\rho(S) < 1\), so we have

\[
\lim_{t\to\infty}(\alpha S)^{t} = 0 \quad\text{and}\quad \sum_{i=0}^{\infty}(\alpha S)^{i} = (I - \alpha S)^{-1},
\]

where I is the identity matrix. Hence, it follows from Eq. 22 that the sequence \(\{F_t(W)\}\) will converge to

when t → ∞. That is, we have proven the theorem.
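
The convergence argument can also be checked numerically. The sketch below assumes that Eq. 10 is a linear update of the form \(F_{t+1}(W) = \alpha S F_t(W) + (1-\alpha) Y\). This form, the symbol Y for the initial keyword scores, the factor \((1-\alpha)\), and the toy matrices are assumptions made for illustration and are not taken from the paper. Only the two conditions used in the proof are kept: S is nonnegative with row sums at most 1, and \(0 < \alpha < 1\).

```python
def step(S, F, Y, alpha):
    """One update F <- alpha * S F + (1 - alpha) * Y on nested lists (assumed form)."""
    n, k = len(Y), len(Y[0])
    return [[alpha * sum(S[i][j] * F[j][c] for j in range(n)) + (1 - alpha) * Y[i][c]
             for c in range(k)]
            for i in range(n)]


def max_abs_diff(A, B):
    """Largest entrywise absolute difference between two matrices."""
    return max(abs(a - b) for row_a, row_b in zip(A, B) for a, b in zip(row_a, row_b))


# Hypothetical toy data: 3 test images, 2 keywords.
# S is nonnegative and every row sums to at most 1, as the proof requires.
S = [[0.0, 0.6, 0.3],
     [0.5, 0.0, 0.5],
     [0.2, 0.7, 0.0]]
Y = [[1.0, 0.0],
     [0.0, 1.0],
     [0.0, 0.0]]
alpha = 0.8

F = [row[:] for row in Y]   # arbitrary starting point F_0
gaps = []                   # gaps[t] = max |F_{t+1} - F_t|
for _ in range(200):
    F_next = step(S, F, Y, alpha)
    gaps.append(max_abs_diff(F_next, F))
    F = F_next

# At the limit, F should (numerically) satisfy F = alpha * S F + (1 - alpha) * Y.
residual = max_abs_diff(step(S, F, Y, alpha), F)
print(f"first gap {gaps[0]:.3e}, last gap {gaps[-1]:.3e}, residual {residual:.3e}")
```

Under these assumptions, consecutive iterates differ by \(F_{t+1} - F_t = \alpha S (F_t - F_{t-1})\), so the largest entrywise gap shrinks by at least a factor of \(\alpha\) per step whenever the row sums of S are at most 1. This is the same contraction that \(\rho(\alpha S) < 1\) expresses in the proof, and it holds regardless of the starting point.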


Cite this article

Lu, Z., Ip, H.H.S. Automatic Image Annotation Based on Generalized Relevance Models. J Sign Process Syst 65, 23–33 (2011). https://doi.org/10.1007/s11265-010-0544-z


DOI: https://doi.org/10.1007/s11265-010-0544-z