Abstract

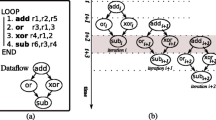

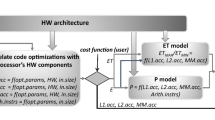

In this paper, we consider programmable tightly-coupled processor arrays consisting of interconnected small light-weight VLIW cores, which can exploit both loop-level parallelism and instruction-level parallelism. These arrays are well suited for compute-intensive nested loop applications often providing a higher power and area efficiency compared with commercial off-the-shelf processors. They are ideal candidates for accelerating the computation of nested loop programs in future heterogeneous systems, where energy efficiency is one of the most important design goals for overall system-on-chip design. In this context, we present a novel design methodology for the mapping of nested loop programs onto such processor arrays. Key features of our approach are: (1) Design entry in form of a functional programming language and loop parallelization in the polyhedron model, (2) support of zero-overhead looping not only for innermost loops but also for arbitrarily nested loops. Processors of such arrays are often limited in instruction memory size to reduce the area and power consumption. Hence, (3) we present methods for code compaction and code generation, and integrated these methods into a design tool. Finally, (4) we evaluated selected benchmarks by comparing our code generator with the Trimaran and VEX compiler frameworks. As the results show, our approach can reduce the size of the generated processor codes up to 64 % (Trimaran) and 55 % (VEX) while at the same time achieving a significant higher throughput.

Similar content being viewed by others

Notes

In practice, n is usually 2 or 3.

An iteration vector consists of the iteration variables of a loop, e. g., in case of a two-dimensional loop nest with iteration variables i and j, the iteration vector is I = (i j)T.

These are similar to rotating registers, used mainly to store the value of variables to deal with loop-carried data dependencies.

The iteration interval II denotes the number of clock cycles between the evaluation of two successive iterations.

If G CF contains a node with a self-edge, there exists the possibility to pack contiguously the instructions from different iterations for that node, since more than one iteration, given by the sum of the weights of all incoming edges divided by how many times the execution enters the node, are executed consecutively. If there is no self-edge, there is no possibility to pack contiguously the instructions from different iterations, since only one iteration is executed.

The average iteration interval is the average time between the start of two successive loop iterations. It is calculated by dividing the total execution time of a loop nest GL by the total number of iterations executed.

The overhead indicates the amount of time that is spent in executing other than the innermost loop compared to the total execution time.

References

Boppu, S., Hannig, F., Teich, J. (2013). Loop program mapping and compact code generation for programmable hardware accelerators. In:Proceedings of the 24th IEEE International Conference on Application-specific Systems, Architectures and Processors (ASAP), (pp. 10–17): IEEE.

Dutta, H., Hannig, F., Teich, J. (2006). Hierarchical partitioning for piecewise linear algorithms. In Proceedings of the 5th International Conference on Parallel Computing in Electrical Engineering (PARELEC), (pp. 153–160): IEEE Computer Society.

Feautrier, P., & Lengauer, C. (2011). Polyhedron model In Padua, D. (Ed.), Encyclopedia of Parallel Computing, (pp. 1581–1592): Springer.

Fisher, J. (1983). Very long instruction word architectures and the ELI-512. In Proceedings of the 10th Annual International Symposium on Computer Architecture (ISCA), (pp. 140–150): IEEE.

GCC. the GNU Compiler Collection. http://gcc.gnu.org.

Gupta, S., Gupta, R., Dutt, N., Nicolau, A. (2004). SPARK: A Parallelizing Approach to the High-Level Synthesis of Digital Circuits: Kluwer Academic Publishers.

Hannig, F. (2009). Scheduling Techniques for High-Throughput Loop Accelerators. Ph.D. thesis. Germany: University of Erlangen-Nuremberg. Verlag Dr. Hut, Munich,Germany,ISBN: 978-3-86853-220-3.

Hannig, F., Dutta, H., Teich, J. (2006). Mapping a Class of Dependence Algorithms to Coarse-Grained Reconfigurable Arrays: Architectural parameters and methodology. International Journal of Embedded Systems, 2(1/2), 114–127. doi:10.1504/IJES.2006.010170.

Hannig, F., Lari, V., Boppu, S., Tanase, A., Reiche, O. (2014). Invasive Tightly-Coupled Processor Arrays: A Domain-Specific Architecture/Compiler Co-Design Approach. ACM Transactions on Embedded Computing Systems (TECS), 13(4s), 133:1–133:29. doi:10.1145/2584660.

Hannig, F., Ruckdeschel, H., Dutta, H., Teich, J. (2008). PARO: synthesis of hardware accelerators for multi-dimensional dataflow-intensive applications. In Proceedings of the 4th International Workshop on Applied Reconfigurable Computing (ARC), Lecture Notes in Computer Science (LNCS) (Vol. 4943, pp. 287–293): Springer.

Hannig, F., Ruckdeschel, H., Teich, J. (2008). The PAULA language for designing multi-dimensional dataflow-intensive applications. In Proceedings of the GI/ITG/GMM-Workshop – Methoden und Beschreibungssprachen zur Modellierung und Verifikation von Schaltungen und Systemen, (pp. 129–138): Shaker.

Hannig, F., & Teich, J. (2004). Resource constrained and speculative scheduling of an algorithm class with run-time dependent conditionals. In Proceedings of the 15th IEEE International Conference on Application-specific Systems, Architectures, and Processors (ASAP), (pp. 17–27): IEEE Computer Society.

Hewlett-Packard Laboratories: Vex toolchain. http://www.hpl.hp.com/downloads/vex.

ILOG (2011). CPLEX Division:ILOG CPLEX 12.1,User’s Manual.

Irigoin, F., & Triolet, R. (1988). Supernode partitioning. In Proceedings of the 15th ACM SIGPLAN-SIGACT Symposium on Principles of Programming Languages (POPL), (pp. 319–329). USA. ACM, San Diego, CA.

Jainandunsing, K. (1986). Optimal partitioning scheme for wavefront/systolic array processors. In Proceedings of the IEEE International Symposium on Circuits and Systems (ISCAS), (pp. 940–943). USA: San Jose, CA.

Kissler, D., Hannig, F., Kupriyanov, A., Teich, J. (2006). A dynamically reconfigurable weakly programmable processor array architecture template. In Proceedings of the 2nd International Workshop on Reconfigurable Communication Centric System-on-Chips (ReCoSoC), (pp. 31–37). Montpellier.

Kissler, D., Hannig, F., Kupriyanov, A., Teich, J. (2006). A highly parameterizable parallel processor array architecture. In Proceedings of the International Conference on Field Programmable Technology (FPT), (pp. 105–112): IEEE.

Kroupis, N., Raghavan, P., Jayapala, M., Catthoor, F., Soudris, D. (2009). Compilation technique for loop overhead minimization. In Proceedings of 12th Euromicro Conference on Digital System Design, Architectures, Methods and Tools (DSD), (pp. 419–426).

Lattner, C., & Adve, V. (2004). LLVM: a compilation framework for lifelong program analysis & transformation. In Proceedings of the International Symposium on Code Generation and Optimization (CGO), (pp. 75–86).

Lee, J., Choi, K., Dutt, N. (2003). An algorithm for mapping loops onto coarse-grained reconfigurable architectures. In Proceedings of Conference on Languages, Compilers, and Tools for Embedded Systems (LCTES), (pp. 183–188): ACM.

Lengauer, C. (1993). Loop parallelization in the polytope model. In Best, E. (Ed.) Proceedings of the 4th International Conference on Concurrency Theory (CONCUR), Lecture Notes in Computer Science (LNCS) (Vol. 715, pp. 398–416). Hildesheim: Springer.

Lengauer, C., Barnett, M., III, D.G.H. (1991). Towards Systolizing Compilation. Distributed Computing, 5, 7–24. doi:10.1007/BF02311229.

Mei, B., Vernalde, S., Verkest, D., De Man, H., Lauwereins, R. (2002). DRESC: a retargetable compiler for coarse-grained reconfigurable architectures. In Proceedings of the IEEE International Conference on Field-Programmable Technology (FPT), (pp. 166–173).

Mei, B., Vernalde, S., Verkest, D., Lauwereins, R. (2004). Design methodology for a tightly coupled VLIW/reconfigurable matrix architecture: a case study. In Proceedings of Design, Automation and Test in Europe Conference and Exhibition (DATE) (Vol. 2, pp. 1224–1229).

Melpignano, D., Benini, L., Flamand, E., Jego, B., Lepley, T., Haugou, G., Clermidy, F., Dutoit, D. (2012). Platform 2012, a many-core computing accelerator for embedded SoCs: performance evaluation of visual analytics applications. In Proceedings of the 49th Annual Design Automation Conference (DAC), (pp. 1137–1142): ACM.

Moldovan, D. (1983). On the Design of Algorithms for Vlsi Systolic Arrays. In Proceedings of the IEEE, 71(1), 113–120.

Muddasani, S., Boppu, S., Hannig, F., Kuzmin, B., Lari, V., Teich, J. (2012). A prototype of an invasive tightly-coupled processor array. In Proceedings of the Conference on Design and Architectures for Signal and Image Processing (DASIP), (pp. 393–394): IEEE.

Munshi, A. (2012). The OpenCL specification version 1.2: Khronos OpenCL Working Group.

Rau, B.R. (1994). Iterative modulo scheduling: An algorithm for software pipelining loops. In Proceedings of the 27th Annual International Symposium on Microarchitecture, (pp. 63–74).

Rau, B.R., Schlansker, M.S., Tirumalai, P.P. (1992). CodeGeneration Schema for Modulo Scheduled Loops. SIGMICRO Newsletter, 23(1–2), 158–169.

Schmid, M., Hannig, F., Tanase, A., Teich, J. (2014). High-level synthesis revised – generation of FPGA accelerators from a domain-specific language using the polyhedron model. Parallel Computing: Accelerating Computational Science and Engineering (CSE), Advances in Parallel Computing (Vol. 25, pp. 497–506). Amsterdam: IOS Press.

Singh, H., Lee, M., Lu, G., Bagherzadeh, N., Kurdahi, F., Filho, E. (2000). MorphoSys: An integrated Reconfigurable System for Data-Parallel and Computation-Intensive Applications. IEEE Transactions on Computers, 49(5), 465–481. doi:10.1109/12.859540.

Sousa, É., Tanase, A., Hannig, F., Teich, J. (2013). Accuracy and performance analysis of harris corner computation on tightly-coupled processor arrays. In Proceedings of the Conference on Design and Architectures for Signal and Image Processing (DASIP), (pp. 88–95): IEEE.

Teich, J. (1993). A Compiler for Application-Specific Processor Arrays. Saarbrücken: Shaker Verlag. Ph.D. thesis,Institut für Mikroelektronik, Universität des Saarlandes,ISBN: 3-86111-701-0.

Teich, J., & Thiele, L. (1993). Partitioning of Processor Arrays: A Piecewise Regular Approach. Integration, the VLSI Journal, 14(3), 297–332. doi:10.1016/0167-9260(93)90013-3.

Teich, J., Thiele, L., Zhang, L. (1997). Partitioning Processor Arrays Under Resource Constraints. Journal of VLSI Signal Processing, 17(1), 5–20. doi:10.1023/A:1007935215591.

The Trimaran Consortium: An infrastructure for research in backend compilation and architecture exploration. http://www.trimaran.org.

Thiele, L. (1988). On the hierarchical design of VLSI processor arrays. In Proceedings of the IEEE International Symposium on Circuits and Systems (ISCAS) (Vol. 3, pp. 2517–2520).

Thiele, L. (1995). Resource Constrained Scheduling of Uniform Algorithms. Journal of VLSI Signal Processing, 10, 295–310.

Thiele, L., & Roychowdhury, V. (1991). Systematic design of local processor arrays for numerical algorithms. In Deprettere, E., & van der Veen, A. (Eds.) Proceedings of the International Workshop on Algorithms and Parallel VLSI Architectures 1990 (Vol. A:Tutorials, pp. 329–339). Amsterdam: Elsevier.

Uh, G.R., Wang, Y., Whalley, D., Jinturkar, S., Burns, C., Cao, V. (1999). Effective exploitation of a zero overhead loop buffer. In Proceedings of the Workshop on Languages, Compilers, and Tools for Embedded Systems (LCTES), (pp. 10–19).

Venkataramani, G., Najjar, W., Kurdahi, F., Bagherzadeh, N., Böhm, W., Hammes, J (2003). Automatic compilation to a coarse-grained reconfigurable system-on-chip. ACM Transactions on Embedded Computing Systems (TECS), 2(4), 560–589. doi:10.1145/950162.950167.

Wolfe, M. (1996). High Performance Compilers for Parallel Computing: Addison-Wesley.

Acknowledgements

This work was supported by the German Research Foundation (DFG) as part of the Transregional Collaborative Research Centre “Invasive Computing” (SFB/TR 89).

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Boppu, S., Hannig, F. & Teich, J. Compact Code Generation for Tightly-Coupled Processor Arrays. J Sign Process Syst 77, 5–29 (2014). https://doi.org/10.1007/s11265-014-0891-2

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11265-014-0891-2