Abstract

Compressive Sensing (CS) is an emerging technology which can encode a signal into a small number of incoherent linear measurements and reconstruct the entire signal from relatively few measurements. Different from former coding scheme which distortion mainly comes from quantizer, distortion and bit rate are related to quantization and compressive sampling in the compressive sensing based image coding schemes. Moreover, the total number of bits is often constrained in the practical application. Therefore under the given bit rate how to balance the number of measurements and quantization step size to minimization the distortion is a great challenge. In this paper, a fast Lagrange multiplier solving method is proposed for the compressive sensing based image coding scheme. Then using the solved Lagrange multiplier, the optimal number of measurements and quantization step size are determined based on the rate-distortion criteria. Experimental results show that the proposed algorithm improves objective and subjective performances substantially.

Similar content being viewed by others

1 Introduction

Compressive sensing is a sampling method that converts unknown input signals, embedded in a high dimensional space, into the signals in smaller dimension. If the input signal is sufficiently sparse, it can be reconstructed in high precision. It was first proposed by Donoho and Candes [1, 2]. By unifying sampling and data compression, the CS-based image coding system abandons the traditional sampling-then-processing cascade architecture such that it does not require too much time and hardware [3, 4]. For example, in [5, 6] the image was coded by partitioning the coefficients into the different sets to sample independently by compressive sensing. In [7, 8] robust image coding with Multiple Description Coding (MDC) was proposed based upon CS for high packet loss transmission. By reducing the number of samples, the decoder has to solve a large scale convex optimization problem. Since the encoding process is straightforward and the decoder is complex, the CS-based system is well-suited to be the preferred compression method where there are restrictions in power consumption, time and space on the encoder side.

In conventional image coding schemes, distortion is mainly caused by quantizer. The larger quantization step size (or quantization parameter), the larger the distortion is. The bit rate can be regulated by changing quantization step size. However, the characteristics of compressive sensing make it different. In CS based image coding schemes, bit-rate is controlled by quantization step size and the number of measurements. Then if the bit-budget of the encoder is constrained, the number measurement and quantization step size will compose a competing performance tradeoff. Therefore, the question is how to determine the proper quantization step size and how to allocate the number of measurements for each coding unit to satisfying the bit budget.

To the best of our knowledge, few researches discuss this issue in detail. In [9], sampling rate allocation method is presented according to the sparsity of each region in distributed compressive video sensing framework. However, it did not consider the effect of quantization to distortion and coding rate. Moreover, the side information is needed. In [10], rate-distortion (RD) optimized rate allocation is presented. However, its efficiency largely depends on the proposed distortion model.

In this paper, the uniform scalar quantization is introduced and a compressive sensing based image coding framework is developed, wherein quantization step size and the number of measurements are jointly optimized. The main contribution of this paper is two-fold.

-

In compressive sensing based image coding framework, the solving method of Lagrange multiplier is studied. With the solved Lagrange multiplier, a joint optimization algorithm is proposed to derive the optimal values of the measurement number and quantization step size in rate-distortion sense. Moreover, quantization is optimized to improve the performance of the dead zone.

-

An adaptive compressive sensing based image coding framework with RD optimization is presented. Firstly the image is sparse representation, then according to the solved Lagrange multiplier, the optimal measurement number and quantization step size can be solved and allocated to the each coding unit, finally, the image can be efficiently compressed with given bit budget.

The scheme performs image-level and block-level parameter allocation and can substantially improve the rate–distortion performance of the CS image coding system. Here, it is worth noting that in this paper our goal mainly focus on the joint optimization and try to develop an adaptive compressive sensing based image coding framework with optimal allocation of measurement number and quantization step size, instead of competing compression performance against the current image compression.

The remaining part of this paper is organized as follows. Section 2 provides introduction to the compressive sensing. Section 3 highlights the intuition and main idea of the algorithm. Section 4 studies the problem of finding Lagrange multiplier in detail. RD optimized compressive sensing coding algorithm is described in Section 5. The performance comparison is carried out in Section 6. Finally, this paper is concluded in Section 7.

2 The Compressive Sensing

The compressive sensing involves three major aspects: sparse representation, measurements and signal recovery. Sparsity means the number of degree of freedom of a discrete time signal. Considering the image as the vector u ∈ ℝ N and representation basis Ψ N × N , the K-sparse signal x can be represented as

In most of the cases K < <N. Commonly natural images are sparse or compressible under decompositions such as Discrete Cosine Transform (DCT) and wavelet transform [11, 12]. Instead of sampling x directly, a small number of CS measurements is taken. Denote a L × N random sensing matrix Φ with L ≪ N and then the linear samples y can be taken

CS theory states that it is possible to recover x from y with overwhelming probability if Θ satisfies a Restricted Isometry Property (RIP) [13, 14]. The number of samples it takes to successfully reconstruct the signal is determined by the correlation of Φ and Ψ [15]. The lower the correlation between Φ and Ψ is, the fewer the measurements are needed. It is proved that by using L ≥ αK log N random measurements, for some small α, x can be recovered with high probability.

Reconstruction algorithms exploit the measurement vector y, measurement matrix Φ and the sparsity of the image in Ψ to recover the value of each pixel in the image. Since L < N, the problem of recovering the image is ill-conditioned. The conventional method exploited for image reconstruction is the l 1-norm minimization algorithm. It searches for the vector which its transformation in representation basis Ψ has the smallest l 1-norm:

Since the measurement vector y is corrupted by quantization noise in image coding system, the problem can be reformulated as follows.

where parameter ε is the magnitude of quantization noise.

Recently the total variation (TV) norm which means the l 1-norm of the gradient is used in CS recovery. The image is reconstructed as follows:

TV minimization recovers the image with sharper and more accurate edges and boundaries compared to l 1-norm minimization. However, It is more computationally complex.

The other category of algorithms is based on iterative greedy pursuit [16]. Greedy algorithms solve a multistage optimization problem by finding a global optimum based on the local optimum solutions found in different stages. Greedy methods have lower complexity at the cost of performance.

3 Basic Idea of the Algorithm

The distortion rate function is briefly analyzed for CS-based image coding system and the basic idea of the optimization algorithm is discussed in this section.

3.1 Distortion Analysis

Considering a simple straightforward image coding architecture there are the following blueprint: measurement, quantization, entropy coding and decoding. Firstly the source image u is sampled by Eqn. (2). Then linear samples y is quantized as follows [17]

where Q SQ : ℝ → ℚ is the quantization function which is the mapping from measurements to the quantization codeword. Suppose that scalar quantization is followed by variable-length encoding. Then encoded signal is

where f enc : ℚ → ℂ is the encoding function mapping the quantization codeword to the binary codeword c enc = {c 1,c 2,…c M }. The lengths of different codewords are allowed to be different. For the quantizer, the distortion rate function is defined by

where \( \overline{R} \) is the corresponding encoding rate. It can be obtained as follows:

The encoding rate has the lower boundary H = ∑ M i = 1 (−p i log2 p i ), where p i is the probability that codeword c i is selected. In CS-based coding system, both measurement and quantization produce the distortion. Therefore the total reconstruction distortion is dependent on approximation error and quantization error. Then the distortion rate function of the coding system can be given by

where ŷ Q is the de-quantized signal and \( \widehat{x} \) is the recovered signal. Suppose that the measurements are quantized using a uniform scalar quantizer with step size Δ. That is, CS measurements are rounded to the closest value in {n ⋅ Δ, n ∈ ℤ}. Consequently quantization error can be bounded by

In case of small number L ≪ N of linear measurements, reconstruction error can be represented by:

where C and α is small constant [18]. The reconstruction error of the recovered signal approaches zero as the number of measurements increases. With above discussion, the difference between the original signal and the signal reconstructed at the decoder is at most

The term on the left is quantization error, while the term on the right is approximation error.

3.2 Basic Idea of the Algorithm

In practice, bit-budget is commonly constrained. Given the fixed bit-budget R c , the number of measurements and quantization step size will compose a competing performance tradeoff. As quantization step size increases, less bit are being used to encode each measurement, thereby decreasing the precision of each measurement and increasing distortion. In this way more measurements can be obtained under the fixed bit budget, thereby increasing the quality of the recovered image. On the other hand, as quantization step size decreases and the precision of each measurement increases, more bits are being used to represent each image measurement and less measurement can be obtained. Thus, as mentioned in the introduction, a main problem is how to allocate bit rate between quantization and sampling process to obtain the optimal reconstruction performance.

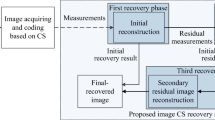

The presented framework is shown in Figure 1. It contains six modules: sparse representation, partition coding unit, RD optimized parameter allocation, CS sampling, measurement quantization and entropy coding.

-

1)

Sparse representation and partition coding unit: The input signal is changed to sparse signal by sparse representation module. The most common representation basis is DCT, curvelet transform and wavelet transform. Since different regions in image have different spatial correlation and complexity, different sampling rate is needed. More complex regions need to be allocated more measurements and vice versa. Therefore partition coding unit module divides the transformed image into several non-overlapping coding units. In this framework, Discrete Wavelet Transform (DWT) is employed for sparse representation and the coding units are defined according to subbands. Although DWT are used and coding units are defined in this paper, other sparse representation and coding unit definition is also adaptable to the framework and RDO theme presented is applicable as well.

-

2)

RD optimized parameter allocation: R-D optimization problem can be converted to unconstrained form by introducing Lagrange multiplier λ which is normally referred as the slope of the R-D curve. Thus this module has two processes. Firstly, the optimal λ is solved under the given bit budget. Consequently, the number of measurements and quantization step size (the number of bits per measurement) is optimized with λ. As a result the best reconstruction quality is obtained in RD sense. There are two kind of optimization: image-level and block-level optimization. When the whole wavelet coefficients are taken as a coding unit, the image-level optimization aims at allocating the optimal quantization step size and the number of measurements with the given bit budget. If the wavelet coefficients are partitioned into several coding blocks, the block-level optimization focuses on determining the optimal quantization step size and allocating the optimal number of measurements to each block.

-

3)

RD optimized quantization: In this framework uniform scalar quantizer is introduced. The quantization step size is determined by ‘RD optimized parameter allocation’ module. Moreover, as uniform quantizer performs well with large signals and behaves poor in dead-zone, quantization is optimized to improve the performance for small signals according to rate-distortion criteria.

4 Solving Lagrange Multiplier for CS Based Image Coding

Rate-Distortion Optimization (RDO) plays a critical role in the hybrid video coding. It aims at minimizing the distortion for a given rate R c by appropriate selections of coding options. Since it can greatly improve performance of coding system, it pervades all of source coding. Lagrangian optimization is a popular method to convert the constrained R-D optimization problem to the unconstrained form by introducing Lagrange multiplier λ (the slope of RD curve). The proper value of λ is important to achieve the optimal solution at the required rate. Many works have focused on this approach [19–23]. However, for CS based image coding framework most researches aim at analyzing the rate-distortion performance [24–27] and few researches focus on solving λ. In this paper, we try to find the optimal slope of RD curve under the given bit budget in CS based image coding framework.

Rate-Distortion Optimization (RDO) minimizes the distortion for a given rate R c by appropriate selections of coding parameters,

where S is the set of coding parameters, D(s) and R(s) are the distortion and the rate caused by the parameter configuration s respectively. This constrained problem can be converted to the unconstrained format

where λ is the Lagrangian multiplier. Since the solution of above equation is the point at which the line of absolute slope λ is tangent to the convex hull of the R-D characteristic, λ is normally referred as the slope of the R-D curve. Generally speaking, a large value of λ corresponds to the higher distortion and the lower bit-rate. On Contrary, a smaller value corresponds to the lower distortion and the higher bit-rate. The desired optimal slope value is corresponding to the optimal convex hull point for the given rate budget R c .

In this section, the biased Lagrange cost J B (λ) similar to the bisection search algorithm is introduced [28]. The biased Lagrange cost J B (λ) is defined as follows

It can be proved that J B (λ) is the convex function of λ and λ ∗ that minimize J B (λ) is the optimal slope for the given budget constraint. Moreover, since J B (λ) is convex, the optimal slope λ * is unique. The more detailed proof is shown in the appendix. Since λ ∗ is the optimal slope, the corresponding s ∗(λ ∗) is the optimal operating point. Therefore the problem of searching the optimal slope is converted to solving the minimum value of J B (λ). The optimal slope λ * can be found:

In this framework, coding options include quantization step size and the number of measurements. Then the optimal slope is the solution to

where Q step is quantization step size and N cs is the ratio of the number of retained measurements to the total samples. In addition, no matter how the operational point is distributed on R-D curve, the minimization can be searched definitely. Consequently the problem of searching the optimal slope is converted to solving the minimum value of J B (λ). Apparently it can be accomplished by the fast convex search algorithm like Bisection method [29] or the golden-ratio search [20].

Considering the complexity and the convergent rate, the ergodically fast Line-search algorithm which has the better performances than the well-known Golden Section algorithm is applied [30]. Suppose the minimization of a single extremal function f(⋅) on some interval [A, B]. Define [A 1, B 1] ⊃ [A, B] and an initial point E 1 ∈ [A 1, B 1]. The fast Line-search algorithm compares function values at two points: E n1 from the previous iteration and E n2 selected by the algorithm at the current iteration. Define U n = min{ E 1,n, E 2,n }, V n = max{ E 1,n, E 2,n }, then the search interval is updated. If f (U n ) < f (V n ), the updated search interval is [A n , V n ]; otherwise the search interval is [U n , B n ]. At the next iteration the point E 1,n in the updated search interval is evaluate. When the search interval is smaller than the predefined precision, convergence is achieved.

This fast Line-search algorithm is used to search the minimum of J B (λ). The choice of a good initial operating point is the key to a fast convergence. Assume we have chosen two values A, B with A < B, which satisfy the relation R(A) ≤ R c ≤ R(B). Note that failure to find both of A and B which satisfies the above inequalities means that the given problem is unsolvable; i.e., R c is inconsistent with the given sets of parameters. A conservative choice for a solvable problem would be A = 0 and B = ∞. The minimization of the function is f (C min) and C min = (A n + 1 + B n + 1)/2. For the typical iterate times N, 10 ≤ N ≤ 30, are the optimal choice.

Then the proposed algorithm with the improved convergence rule takes the following main steps:

-

1.

Initialize the initial range, threshold ε and search parameters: α = 0.3772 and β = 0.15.

-

2.

Set the search interval [λ a , λ b ], where λ a = A − α(B − A), λ b = B + α(B − A).

-

3.

Compute two points λ e1 and λ e2

$$ \begin{array}{cc}\hfill {\lambda}_{e1}=\left({\lambda}_a+{\lambda}_b\right)/2-\beta \left({\lambda}_b-{\lambda}_a\right)/2,\hfill & \hfill {\lambda}_{e2}=\left\{\begin{array}{c}\hfill {\lambda}_{e1}+\beta \left({\lambda}_b-{\lambda}_a\right)\hfill \\ {}\hfill {\lambda}_{e1}-\beta \left({\lambda}_b-{\lambda}_a\right)\hfill \end{array}\right.\begin{array}{cc}\hfill \hfill & \hfill \begin{array}{c}\hfill {\lambda}_{e1}<\left({\lambda}_a+{\lambda}_b\right)/2\hfill \\ {}\hfill otherwise\begin{array}{cc}\hfill \begin{array}{cc}\hfill \hfill & \hfill \hfill \end{array}\hfill & \hfill \hfill \end{array}\hfill \end{array}\hfill \end{array}\hfill \end{array} $$ -

4.

Compute the biased rate-distortion cost J B (λ e1), J B (λ e2).

-

5.

Set \( \begin{array}{cc}\hfill {\lambda}_U= \min \left({\lambda}_{e1},{\lambda}_{e2}\right),\hfill & \hfill {\lambda}_V= \max \left({\lambda}_{e1},{\lambda}_{e2}\right)\hfill \end{array} \) and then compute the corresponding cost J B (λ U ), J B (λ V ).

-

6.

Update the next search interval [λ a , λ b ]. If J B (λ U ) < J B (λ V ), set λ b = λ V , else set λ a = λ U .

-

7.

If |λ a − λ b | < ε, the optimal slope is searched; else go to step 2.

Thus starting from a known initial interval engulfing the desired operating slope, the search intervals are made successively smaller, exploiting the relationship of both global rate and global distortion with respect to the operating slope until convergence is achieved.

5 RD Optimized Compressive Sensing Coding

In this section, we use the solved Lagrange multiplier λ ∗ to develop a joint N cs -Q step optimization method that can be used in compressive sensing based image coding framework. Moreover, quantization is optimized in RD sense to improve the performance of dead-zone.

5.1 Quantization Optimization

According to rate-distortion theory, the optimal quantization performance can be acquired by minimizing D subject to a bit budget constraint R ≤ R c . Apparently, quantizing every value in such a way can produce high performance. However, it is simply impossible because of huge complexity. It is known that the statistical properties of coefficients of the transform, e.g. DCT and wavelet, are characterized by Laplacian distributions. That is to say, the distribution is sharp in small signal zone and flat in large signal zone. Meanwhile, the uniform scalar quantizer is intensively concerned for its excellent R-D performance in high bit rate and poor performance in low bit rate. Thus it is reasonable to focus on improving quantization performance for the coefficients in dead-zone.

The basic idea is quantizing the coefficients in dead-zone according to RD criteria. For the coefficients located in the dead-zone, the quantization level should be zero originally while it is not definitely in the optimized quantizer. These small coefficients will be quantized to values that produce the best RD performance. For convenience, we define the window W z containing the coefficients to be processed. Then the optimal quantization level of the coefficients falling into W z can be gotten:

where y is the coefficients to be quantized, z is the candidate quantization level and D Q is the corresponding distortion. Since W z is near the dead-zone, the coefficients in it is small and then the possible quantization level will be ranged in a limited set. In this work, the set of candidate quantization levels are defined as I = {0, 1}. Initially W z can be defined as the union of the first and second quantization cell. Then its range can be adaptively updated according to the coefficients actually changed. The typical distributions of these coefficients are depicted in Figure 2 for quantization level Q step = [18, 24, 26, 31]. It can be seen that the requantized coefficients are located in a limited zone. Moreover, the coefficients near zero are impossibly quantized to different values since the original quantization levels are reasonable. In this way, the optimal quantization level for the coefficients in W z is solved. The detailed information is included in our previous work [31].

5.2 Joint the Number of Measurements and Quantization Step Size

In compressive sensing based image coding scheme, both of quantization and compressive sampling are related to distortion and rate. For the fixed number of measurements, the distortion increases with the increase of quantization step size. In other words, if fewer bits are allocated to each measurement, a coarse quantization will cause poor reconstruction quality. It can be observed in Figure 3a. For the fixed quantization step size, the distortion decreases with the increase of the number of measurements at same time the bit rate is increased. Moreover, as shown in Figure 3b, when N cs is small, such as 0.1 or 0.2, the distortion is quite large and will rapidly decrease as the increase of N cs . When N cs is large, the decrease of the distortion is not so obvious. The reason may be that when N cs is large enough no more information could be provided as increasing N cs .

However, if the target bit rate is given, there is a balance between quantization step size and the number of measurements. In this case, the approximation error due to insufficient number of CS measurements and quantization error caused by insufficient quantization precision cannot be simultaneously minimized. Decreasing approximation error allows a larger number of measurements but reduces the quantization precision per measurement, and vice versa. The aim is to find the optimal value of Q step and N cs which can minimize the distortion with the bit budget R c . Since both of quantization and compressive sensing contribute to distortion and bit rate, we can define rate R(Q step , N cs ) and distortion D(Q step , N cs ). Then the optimal performance can be obtained by

where I is the set of candidate quantization step size. Since the different regions of the image have different temporal correlation and sparsity properties, the image commonly is compressed based on non-overlapping coding blocks. In addition, the number of required measurements for reconstructing a block highly depends on the sparsity of the block. Hence, sparser blocks need fewer measurements and vice versa. That is to say, the number of measurements should not be allocated to each coding block equally. Then the problem is converted to determine the optimal quantization step size and number of measurements of each coding block. It is can be expressed as follows

where the image is partitioned to B blocks and N cs (i) is the ratio of retained samples of block i.

In this framework, the coding unit is defined in wavelet domain. After DWT, there are four subbands for one-level decomposition, seven subbands for two-level decomposition and so on. Denoting each subband as a coding block, the over-lapped blocks are gotten. The properties of each subband are different. It can be explained by taking one-level decomposition as an example. In this case, LL, HL, LH and HH subbands reflecting low-frequency and high-frequency components respectively are obtained. Roughly speaking, LL subband represents a low-pass-filtered low-resolution version of the original image. Moreover, it captures most of the information. It can be seen from Figure 4 that most of significant coefficients are located in the LL subband and most of zero values are located in the HH subband. LL subband is more complex than the high-frequency subbands. It is known that the number of measurements needed to recover the signal is depends more on the inherent complexity of the image than the number of pixels we wish to reconstruct. Since the complexity and sparsity of an image are highly correlated, it can be deduced that the sparsity of each subband is not same. Furthermore, the LL subband has lower sparsity while the high-frequency subbands have higher sparsity.

At the same time, since LL subband contains most of information of the image, it is more proper to be allocated more measurements to acquire higher recovery quality. Experiments are executed to observe the improvement of recovered image quality with the increase of measurement number. Firstly, the total number of sampled coefficients is given and is equally divided into four subbands. Define the measurement numbers of LL, LH, HL and HH subbands as M 1, M 2 , M 3 and M 4. Then in the condition of M 2 , M 3 and M 4 unchanged, the improvement of performance can be observed with the increase of M 1. In the same way, the improvement of performance can be got with the increase of M 2 , M 3 and M 4 respectively as shown in Figure 5. It can be seen that with the more measurement number of LL subband the recovery quality can be greatly improved, while this improvement cannot be seen in the high-frequency subbsands. With the given total measurement number M t , the performance of different allocation scheme is shown in Table 1. The average 0.5 dB gain will be obtained with every 4 % measurement number increase in LL subband, while there is no obvious gain with measurement number increase in HH subband. It can be deduced that LL subband should be allocated more measurements compared to other subbands.

Some algorithms allocate the number of CS measurements according to the number of wavelet coefficients [32, 33]. However, it is not easy to use the number of nonzero coefficients of a block to estimate its real sparsity. In this section, we use Eqn. (20) to allocate the number of measurements of each subband. Since the subbands are sampled and recovered separately, suppose B subbands is obtained after DWT and the subband i is re-sampled using random matrices Φ i , i = 1,…B. The optimal allocation can be obtained by:

In fact, only when parameter N cs (i) is larger than a threshold, the signal can be recovered with high probability. This lower bound of the measurement number of each subband i is related to the sparsity. For simplicity, the sole lower bound N LB is defined which can be estimated by the sparsity of total wavelet coefficients. By introducing Lagrange multiplier λ, the optimal quantization step size and measurement number for each subband can be acquired as follows,

With discussion in Section 4, the joint optimization of allocation of quantization step size and measurement number can be acquired by solving

where the innermost minimization (a) involves the search for the best quantization level for signals in the processed window W z and the optimal measurement number allocation for fixed operating slope λ and the outermost optimization (b) is the convex search for λ * subject to R c . Accordingly, the final solution is obtained in two sequential optimization step.

6 Experimental Results

The proposed scheme is implemented and experimented on a set of diverse natural images. Suppose each test image was performed three-level DWT. Gaussian random matrix is selected as CS measurement matrix. The measurement matrix is generated pseudorandomly from a single seed at the encoder and decoder. Scalar uniform quantization was considered and arithmetic coding is applied in the experiment. Orthogonal Matching Pursuit (OMP) and TV minimization with equality constraints are considered to recovery sampled signals. The efficiency of each strategy is related to how sparse the images are in the wavelet domain. Tests were made for different quantization steps and the number of measurements in order to select the best for each compression rate.

Firstly to see the gains from quantizer optimization, the performance with the fix allocation of the measurement number is tested. As shown in Figure 6, the performance comparison is obtained for “Lena” with 40 % coefficients retained and 50 % coefficients retained in total for “bank” with OMP recovery. It can be seen that the rate-distortion optimized quantizer results in better performance. The average gain about 1 dB can be achieved with the same rate.

The proposed scheme are compared with the traditional CS coding system in which the image is transformed into wavelet space, resampled by CS, uniformly quantized and entropy coded. In case of image-level parameter allocation, the joint optimization problem is how to solve the optimal Lagrange multiplier λ and allocate the optimal quantization step size and the number of measurements with the given bit budget. Figure 7 shows the solved Lagrange multiplier λ under the different bit budget. Moreover, the optimization performance with the solved λ is shown in Figure 8. In the traditional coding scheme, the fixed measurement number and variable quantization step size are used to obtain different bit-rates, then different RD curves can be achieve by using N CS = 0.2,0.3,0.4,0.5 respectively. Compared to the optimization scheme, approximately 0.3 dB gains can be observed.

In case of block-level parameter allocation, the joint optimization problem is how to determine the optimal quantization step size and allocate the optimal number of measurements to each block. The Peak signal-to-noise ratio (PSNR) values versus the rate with OMP recovery methods are shown in Figure 9. The proposed algorithm substantially surpasses the traditional CS algorithm with maximum gain of 5 dB. It can be seen that the average gain about 3 dB can be achieved with the same rate. TV reconstruction method is also considered in Figure 6. Since the natural image has small discrete gradient in most locations, TV method performs better in PSNR. With 40 % coefficients retained, the proposed algorithm can further improve the coding performance about 0.7 dB. However, TV method is time consuming. It is obvious that allocation different measurement number to wavelet subbands brings more gains. Since more measurements are allocated to represent the more significant coefficients, the quality of the reconstructed image is improved.

We also include the results of Set Partitioning in Hierarchical Trees (SPIHT) image compression methodology. From Figure 10, it can be seen that the PSNR for SPIHT is better than the proposed algorithm, with the values becoming significant in the case of high bit rates. Since up to now almost existing CS methods are not competitive against traditional signal compression methods in rate–distortion performance, the performance of the proposed algorithm is acceptable. Figure 11 shows the subjective comparisons between the traditional wavelet algorithm and the proposed algorithm. Obviously the proposed algorithm has better subjective performance.

To see how the algorithm allocates measurements between the four subbands in relation to the bit budget, we tabulate in Table 2 the ratio of the optimized measurement number for each subband to the total number. It can be concluded that more measurements are allocated to LL subband. This is because LL subband has lower sparsity and needs to be sufficient to make the recovered image good enough.

7 Conclusions

Compressed Sensing is based on the concept that small number of linear projections of a compressible signal contains enough information to reconstruct or further process the signal. Since it can decrease the computation, it has been investigated extensively. Recently, CS has been applied for image compression. Therefore, both of quantization and compressive sampling cause distortion in CS based image coding scheme. In case of given total bit rate, it is important to determine how to allocate quantization step size and the number of measurements. In today’s hybrid video coding, rate-distortion optimization plays a critical role. In this paper, rate-distortion based method is proposed to jointly optimize the allocation. Firstly, the Lagrange multiplier solving method is proposed for CS based coding scheme. The proposed solving method also can be used in other CS-based image/video coding scheme. Then with the solved Lagrange multiplier, a joint optimization method is presented in rate-distortion sense. Simulation results show that the proposal offers comparable RD performance to the conventional method.

References

Donoho, D. (2006). Compressive sensing. IEEE Trans. on Inform Theory, 52(4), 1289–1306.

Cand’es, E., Romberg, J., & Tao, T. (2006). Robust uncertainty principles, exact signal reconstruction from highly incomplete frequency information. IEEE Trans. on Inform Theory, 52(2), 489–509.

Wu, X. L. & Zhang, X. J. (2009). Model-guided adaptive recovery of compressive sensing, in Proc. Data Compression Conf. (DCC), pp. 123–132.

Goyal, V. K., Fletcher, A. K., & Rangan, S. (2008). Compressive sensing and lossy compression. IEEE Signal Processing Magazine, 25(2), 48–52.

Wu, S-H, Peng, W-H, Chiang, T (2010). Bit-plane compressive sensing with bayesian decoding for lossy compression, 28th Picture Coding Symposium, pp. 606–609.

Wen, J., Chen, Z., Han, Y., Villasenor, J. D., & Yang, S. (2010). A compressive sensing image compression algorithm using quantized DCT and noiselet information, In Proc. Acoustics, Speech and Signal Process.Conf. (ICASSP), pp. 1294–1296.

Deng, C.W., Lin, W. S., Lee, B. S. & Lau, C. T. (2010). Robust image compression based upon compressive sensing, in Proc. IEEE Int. Conf. Multimed. and Expo. (ICME), pp. 462–467.

Deng, C., Lin, W., & Lee, B.-S. (2012). Robust image coding based upon compressive sensing. IEEE Trans. on Multimed., 14(2), 278–290.

Chen, H.-W., Kang, L.-W., & Lu, C.-S. (2010). Dynamic measurement rate allocation for distributed compressive video sensing. Proceedings of SPIE, 7744, 774401–774410.

Haixiao, L., Bin, S., Fang, T., Hao, Q., & Joint Sampling Rate and Bit-Depth Optimization in Compressive Video Sampling. (2014). IEEE Trans. on Multimed, 16(6), 1549–1562.

Lam, E., & Goodman, J. (2000). A mathematical analysis of the DCT coefficient distributions for images. IEEE Trans. on Image Process., 9(10), 1661–1666.

Huang, J. & Mumford, D. (1999). Statistics of natural images and models, in Comput. Vision and Pattern Recognition, IEEE Comput. Society Conf., pp. 1543–547.

Candes, E., & Wakin, M. (2008). An introduction to compressive sampling. IEEE signal process. magazine, 25(2), 21–30.

Baranuik, R. (2007). Compressive sensing. IEEE signal process. Magazine, 24(4), 118–124.

Cand’es, E. J., & Romberg, J. (2007). Sparsity and incoherence in compressive sampling. Inverse Problems, 23(3), 969–985.

Do, T. T., Gan, L., Nguyen, N. & Tran, T. D. (2008). Sparsity adaptive matching pursuit algorithm for practical compressed sensing, Asilomar Conf. on Signals, Syst., and Comput. pp. 581–587.

Laska, J. N., & Baraniuk, R. G. (2012). Regime change: Bit-depth versus measurement-rate in compressive sensing. IEEE Transactions on Signal Processing, 60(7), 3496–3505.

Candes, E. & Romberg, J. (2006). Encoding the lp ball from limited measurements, Proceedings of the Data Compression Conference (DCC), pp. 1–10.

Stutz, T., & Uhl, A. (2012). Efficient and rate-distortion optimal wavelet packet basis selection in JPEG2000. IEEE Trans. on Multimed, 14(2), 264–277.

Jiang, W. (2009). A novel algorithm of solving the optimal slope on rate-distortion curve for the given rate budget. Journal of Donghua University (English Edition), 26(3), 259–263.

Fan, Y., Wang, J., & Sun, J. (2012). Multiple description image coding based on delta-sigma quantization with rate-distortion optimization. IEEE Trans. on image process, 21(9), 4304–4309.

Chen, Z., & Dapeng, W. (2012). Rate-distortion optimized cross-layer rate control in wireless video communication. IEEE Trans. on CSVT, 22(3), 352–365.

Jiaying, L., Yongjin, C., Zongming, G., & Kuo, C.-C. J. (2010). Bit allocation for spatial scalability coding of H.264/SVC with dependent rate-distortion analysis. IEEE Trans. on CSVT, 22(7), 967–981.

Schulz, A., Velho, L., da Silva, E. A. B. (2009). On the empirical rate-distortion performance of compressive sensing, in Proc. Image Process. Conf., pp. 3049–3042.

Boufounos, P., & Baraniuk, R. (2008). Quantization of sparse representations. IEEE Trans. On Inform. Theory, 52(2), 489–509.

Jacques, L., Hammond, D. K. & Fadili, M. J. (2009). Dequantized compressive sensing with nongaussian constraints, IEEE Int.Conf. on Image Process. (ICIP), pp. 1465–1468.

Wang, L., Wu, X., & Shi, G. (2012). Binned progressive quantization for compressive sensing, IEEE Trans. Compression actions On Image Process., pp. 2980–2990.

Press, W. K., Flannery, B. P., Teukolsky, S. A., et al. (1988). Numerical recipes in C: The art of scientific computing. Cambridge: Cambridge Univ. Press.

Ramchandran, K., & Vetterli, M. (1993). Best wavelet packet bases in a rate-distortion sense. IEEE Trans. on Image Processing, 2(2), 160–175.

Pronzato, L., Wynn, H. P., & Zhigljavsky, A. A. (1999). Finite sample behaviour of an ergodically fast line-search algorithm. Comput. Optim. and Appl, 14(3), 1475–86.

Wei, J., Jia, W., & Jun, S. (2005). Rate-distortion based quantisation level adjustment for H.264. Electronics Letters, 41(16), 29–30.

Deng, C.W., Lin, W. S., Lee, B. S. & Lau, C. T. (2010). Robust image compression based upon compressive sensing, in Proc. IEEE Int. Conf. Multimed. and Expo. (ICME),, pp. 462–467.

Venkatraman D., & Makur, A. (2009). A compressive sensing approach to object-based surveillance video coding, in Proc. IEEE Int. Conf. Acoustics, Speech. Signal Process. (ICASSP), pp. 3513–3516.

Acknowledgments

This work is supported by National Natural Science Foundation of China (NSFC, 61401269, 61371125, 61202369), Natural Science Foundation of Shanghai (14ZR1417400), Shanghai Technology Innovation Project (10110502200, 11510500900), Innovation Program of Shanghai Municipal Education Commission (12ZZ176,13YZ105), Project of Science and Technology Commission of Shanghai Municipality (10PJ1404500), Leading Academic Discipline Project of Shanghai Municipal Education Commission (J51303).

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

The biased Lagrange cost J B (λ) is defined as follows

Then following results can be concluded:

-

1.

J B (λ) is the convex function of λ.

$$ \begin{array}{l}{J}_B\left({\lambda}_3\right)={\lambda}_3{R}_c-{J}^{*}\left(\lambda \right)\\ {}\begin{array}{cc}\hfill \hfill & \hfill \hfill \end{array}={\lambda}_3{R}_c-\underset{s\in S}{ \min}\left\{D(s)+\left[\theta {\lambda}_1+\left(1-\theta \right){\lambda}_2\right]R(s)\right\}\\ {}\begin{array}{cc}\hfill \begin{array}{cc}\hfill \hfill & \hfill \hfill \end{array}\hfill & \hfill \begin{array}{l}\le \theta \underset{s\in S}{ \min}\left\{\left[{\lambda}_1{R}_c-D(s)-{\lambda}_1R(s)\right]+\left(1-\theta \right)\underset{s\in S}{ \min}\left[{\lambda}_2{R}_c-D(s)-{\lambda}_2R(s)\right]\right\}\\ {}=\theta {J}_B{\left(\lambda \right)}_1+\left(1-\theta \right){J}_B\left({\lambda}_2\right)\end{array}\hfill \end{array}\end{array} $$(26)where λ 3 = θλ 1 + (1 − θ)λ 2, 0 ≤ θ ≤ 1.

-

2.

λ * and s*(λ *) that minimize J B (λ) are the optimal slope and optimal operating point for the given budget constraint.

Suppose λ ' is the slope of the convex hull face which “straddles” the budget constraint line on the R-D plane. For λ < λ ', invoking the lower rate operating point s '

$$ \begin{array}{l}\left.\left.{J}_B\left(\lambda \right)-{J}_B\left(\lambda \hbox{'}\right)=\underset{s\in S}{ \min}\left\{\left[\lambda {R}_c-\left(D(s)+\lambda R(s)\right)\right]-\left[\lambda \hbox{'}{R}_c-\left(D(s)+\lambda \hbox{'}R\left(s\hbox{'}\right)\right)\right]\right\}\right]\right\}\\ {}\begin{array}{cc}\hfill \begin{array}{cc}\hfill \begin{array}{ccc}\hfill \begin{array}{cc}\hfill \hfill & \hfill \hfill \end{array}\hfill & \hfill \hfill & \hfill \hfill \end{array}\hfill & \hfill \begin{array}{l}\ge \left[\lambda {R}_c-\left(D\left(s\hbox{'}\right)+\lambda R\left(s\hbox{'}\right)\right)\right]-\left[\lambda \hbox{'}{R}_c-\left(D\left(s\hbox{'}\right)+\lambda \hbox{'}R\left(s\hbox{'}\right)\right)\right]\\ {}\ge \left(\lambda -\lambda \hbox{'}\right)\left({R}_c-R\left(s\hbox{'}\right)\right)\ge 0\end{array}\hfill \end{array}\hfill & \hfill \hfill \end{array}\end{array} $$(27)Similarly, for λ > λ ', invoking the higher rate operating point s ' ', it can be got

$$ {J}_B\left(\lambda \right)-{J}_B\left(\lambda \hbox{'}\right)\ge \left(\lambda -\lambda \hbox{'}\right)\left({R}_c-R\left(s\hbox{'}\hbox{'}\right)\right)\ge 0 $$(28)Therefore, for all positive values of λ, J B (λ ') < J B (λ) is satisfied. It is proved that the optimal slope for the bit budget R c is the point minimizing the biased cost J B (λ).

Rights and permissions

About this article

Cite this article

Jiang, W., Yang, J. The Rate-Distortion Optimized Compressive Sensing for Image Coding. J Sign Process Syst 86, 85–97 (2017). https://doi.org/10.1007/s11265-015-1087-0

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11265-015-1087-0