Abstract

Action recognition with dynamic actors and scenes has been an active research topic. Recently, spatio-temporal features such as optical flow have been used to define motion representations over a sequence of time. However, to increase accuracy, a deep decomposition is necessary to enrich the information available for actions that vary in location or time due to spatio-temporal dynamics. To this end, we propose an algorithm whose feature vectors are obtained by applying multi-resolution analysis of motion over time using the Haar Wavelet Packet (HWP). Its computational efficiency and robustness have made HWP popular in texture analysis, but its applicability to motion analysis is yet to be explored. To extract the representation, the sequence of each bin of the Histogram of Flow (HOF) is treated as a signal channel. Deep decomposition is then applied over many levels using Wavelet Packet decomposition, which we call Packet Flow. This allows us to represent an action's motions at various speeds and ranges, focusing not only on the HOF within one frame or one cuboid but also on the temporal sequence. HWP, however, is translation covariant, which hurts performance because actions occur at arbitrary times and at various sampling locations. To gain translation invariance, we pool the respective coefficients of the decomposition at each level. We find that, with proper packet selection, the method gives comparable results on the KTH action and Hollywood datasets with a train-test division and without localization. Even though the spatio-temporal cuboids are not sampled as densely as in the baseline method, we achieve lower complexity and comparable performance on datasets burdened by camera motion, such as UCF Sports, on which motion features such as HOF do not perform well.
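The pipeline described above (one HOF bin over time treated as a signal, Haar wavelet packet decomposition over several levels, then pooling per packet) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the max pooling of absolute coefficients, the padding of odd-length signals, and the synthetic input signal are all assumptions made for illustration, and packet selection is omitted.

```python
import numpy as np

def haar_step(x):
    """One Haar analysis step: returns (approximation, detail) at half length."""
    x = np.asarray(x, dtype=float)
    if x.size % 2:  # assumption: pad odd-length signals by repeating the last sample
        x = np.append(x, x[-1])
    even, odd = x[0::2], x[1::2]
    return (even + odd) / np.sqrt(2), (even - odd) / np.sqrt(2)

def haar_wavelet_packet(signal, levels):
    """Full wavelet packet tree: tree[j] holds the 2**j nodes of level j."""
    tree = [[np.asarray(signal, dtype=float)]]
    for _ in range(levels):
        next_nodes = []
        for node in tree[-1]:
            # Unlike the plain wavelet transform, both outputs are split again.
            next_nodes.extend(haar_step(node))
        tree.append(next_nodes)
    return tree

def pooled_packet_features(signal, levels):
    """Max-pool the absolute coefficients of every node on levels 1..levels.

    Max pooling is an assumption; the abstract only states that coefficients
    are pooled per level to reduce sensitivity to temporal translation.
    """
    tree = haar_wavelet_packet(signal, levels)
    return np.array([np.abs(node).max() for level in tree[1:] for node in level])

# Hypothetical input: the value of one HOF bin over 16 consecutive frames.
hof_bin = np.sin(np.linspace(0.0, 4.0 * np.pi, 16))
features = pooled_packet_features(hof_bin, levels=3)
print(features.shape)  # (14,) = 2 + 4 + 8 packets
```

In this sketch the pooled features are unchanged under circular shifts that are multiples of 2**levels samples; for other shifts, pooling only reduces the sensitivity rather than removing it.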

References

Somasundaram, G. et al. (2014). Action recognition using global spatio-temporal features derived from sparse representations. Computer Vision and Image Understanding, 123, 1–13.

Klaser, A., Marszaek, M., Schmid, C. (2008). A spatio-temporal descriptor based on 3d-gradients. BMVC 2008-19th British machine vision conference british machine vision association.

Fathi, A., & Mori, G. (2008). Action recognition by learning mid-level motion features. In IEEE Conference on computer vision and pattern recognition, 2008. CVPR 2008. IEEE.

Byrne, J. (2015). Nested motion descriptors. In Proceedings of the IEEE conference on computer vision and pattern recognition.

Lan, Z. et al. (2015). Long-short Term Motion Feature for Action Classification and Retrieval. arXiv:1502.04132.

Chen, Q.-Q., & Zhang, Y.-J. (2016). Cluster trees of improved trajectories for action recognition. Neurocomputing, 173, 364–372.

Lin, Z., Jiang, Z., Davis, L. S. (2009). Recognizing actions by shape-motion prototype trees. In 2009 IEEE 12th International conference on computer vision. IEEE.

Sadanand, S., & Corso, J. J. (2012). Action bank: a high- level representation of activity in video. In 2012 IEEE Conference on IEEE computer vision and pattern recognition (CVPR).

Fleet, D. J., & Jepson, A. D. (1990). Computation of component image velocity from local phase information. International Journal of Computer Vision, 5.1, 77–104.

Jain, M., Jegou, H., Bouthemy, P. (2013). Better exploiting motion for better action recognition. In 2013 IEEE Conference on computer vision and pattern recognition (CVPR). IEEE.

Schuldt, C., Laptev, I., Caputo, B. (2004). Recognizing human actions: a local SVM approach. In Proceedings of the 17th International conference on pattern recognition, 2004. ICPR 2004. (Vol. 3). IEEE.

Matsukawa, T. (2010). TakioKurita action recognition usingthree-way cross-correlations feature of local motion attributes ICPR.

Liu, C., Yuen, J., Torralba, A. (2011). Sift flow: dense correspondence across scenes and its applications. IEEE Transactions on Pattern Analysis and Machine Intelligence, 33.5, 978–994.

Farneback, G. (2003). Two-frame motion estimation based on polynomial expansion. Image Analysis, (pp. 33–370). Berlin: Springer.

Lowe, D. G. (1999). Object recognition from local scale-invariant features. In The Proceedings of the seventh IEEE international conference on computer vision (Vol. 2). IEEE.

Shi, F., Petriu, E., Laganiere, R. (2013). Sampling strategies for real-time action recognition. In 2013 IEEE Conference on computer vision and pattern recognition (CVPR). IEEE.

Schindler, K., & Gool, L. V. (2008). Action snippets: how many frames does human action recognition require? In IEEE Conference on computer vision and pattern recognition, 2008. CVPR 2008. IEEE.

Kobayashi, T., & Otsu, N. (2008). Image feature extraction using gradient local auto-correlations. Computer Vision ECCV (Vol. 2008, pp. 346–358). Berlin: Springer.

Otsu, N., & Kurita, T. (1988). A new scheme for practical flexible and intelligent vision systems MVA.

Ke, Y., Sukthankar, R., Hebert, M. (2007). Spatio-temporal shape and flow correlation for action recognition. In 2007 IEEE Conference on computer vision and pattern recognition CVPR?07. IEEE.

Mikolajczyk, K., & Uemura, H. (2011). Action recognition with appearance & motion features and fast search trees. Computer Vision and Image Understanding, 115.3, 426–438.

Chakraborty, B. et al. (2012). Selective spatio-temporal interest points. Computer Vision and Image Understanding, 116.3, 396–410.

Wang, H. et al. (2009). Evaluation of local spatio-temporal features for action recognition. BMVC 2009-British machine vision conference. BMVA Press.

Uijlings, J. et al. (2014). Realtime video classification using dense HOF/HOG. In Proceedings of international conference on multimedia retrieval. ACM.

Theriault, C., Thome, N., Cord, M. (2013). Dynamic scene classification: learning motion descriptors with slow features analysis. In 2013 IEEE conference on computer vision and pattern recognition (CVPR). IEEE.

Legenstein, R., Wilbert, N., Wiskott, L. (2010). Reinforcement learning on slow features of high-dimensional input streams. PLoS Computational Biology, 6.8, e1000894.

Lan, T., Wang, Y., Mori, G. (2011). Discriminative figure-centric models for joint action localization and recognition. In 2011 IEEE International conference on computer vision (ICCV). IEEE.

Hadjidemetriou, E., Grossberg, M. D., Nayar, S. K. (2004). Multiresolution histograms and their use for recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 26.7, 831–847.

Lee, J., & Shin, S. Y. (2000). Multiresolution motion analysis with applications. In Proc. International workshop on human modeling and animation.

Yu, W., Sommer, G., Daniilidis, K. (2003). Multiple motion analysis: in spatial or in spectral domain? Computer Vision and Image Understanding, 90.2, 129–152.

Oshin, O., Gilbert, A., Bowden, R. (2014). Capturing relative motion and finding modes for action recognition in the wild. Computer Vision and Image Understanding, 125, 155–171.

Bhattacharya, S. et al. (2014). Recognition of complex events: exploiting temporal dynamics between underlying concepts. In Proceedings of the IEEE conference on computer vision and pattern recognition.

Wang, L., Qiao, Y., Xiaoou, T. (2015). MoFAP a multi-level representation for action recognition. International Journal of Computer Vision, 1–18.

Fernando, B. et al. (2015). Rank Pooling for Action Recognition. arXiv preprint arXiv:1512.01848.

Gokhale, M.Y., & Khanduja, D. K. (2010). Time domain signal analysis using wavelet packet decomposition approach. Int’l Journal of Communications Network and System Sciences, 3.03, 321.

Laine, A., & Fan, J. (1993). Texture classification by wavelet packet signatures. IEEE Transactions on Pattern Analysis and Machine Intelligence, 5.11, 1186–1191.

Le, Q. V. et al. (2011). Learning hierarchical invariant spatio-temporal features for action recognition with independent subspace analysis. In 2011 IEEE Conference on IEEE computer vision and pattern recognition (CVPR).

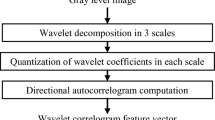

Yudistira, N., & Kurita, T. (2015). Multiresolution local autocorrelation of optical flows over time for action recognition. In 2015 IEEE International conference on systems, man, and cybernetics (SMC). IEEE.

Rodriguez, M. D., Ahmed, J., Shah, M. (2008). Action MACH: a spatio-temporal maximum average correlation height filter for action recognition. Computer Vision and Pattern Recognition.

Soomro, K., & Zamir, A.R. (2014). Action recognition in realistic sports videos, computer vision in sports. Springer International Publishing.

Boughorbel, S., Tarel, J.-P., Boujemaa, N. (2005). Generalized histogram intersection kernel for image recognition. In 2005 ICIP IEEE International conference on image processing, 2005 (Vol. 3). IEEE.

Wang, H. et al. (2009). Evaluation of local spatio-temporal features for action recognition. BMVC 2009-British machine vision conference. BMVA Press.

Wang, H. et al. (2013). Dense trajectories and motion boundary descriptors for action recognition. International Journal of Computer Vision, 103.1, 60–79.

Laptev, I. et al. (2008). Learning realistic human actions from movies. In IEEE Conference on computer vision and pattern recognition, 2008. CVPR 2008. IEEE.

Sun, L. et al. (2014). DL-SFA: deeply-learned slow feature analysis for action recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition.

Wong, S.-F., & Cipolla, R. (2007). Extracting spatiotemporal interest points using global information. In IEEE 11th International conference on computer vision, 2007. ICCV 2007. IEEE.

Taylor, G. W., Fergus, R., LeCun, Y., Bregler, C. (2010). Convolutional learning of spatio-temporal features. In Proceedings of the 11th European conference on computer vision Part VI, ECCV?10 (pp. 140–153). Berlin: Springer-Verlag.

Ji, S., Xu, W., Yang, M., Yu, K. (2013). 3d convolutional neural networks for human action recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 35(1), 221–231.

Bruna, J., & Mallat, S. (2013). Invariant scattering convolution networks. IEEE Transactions on Pattern Analysis and Machine Intelligence, 35.8, 1872–1886.

Acknowledgments

This work was partially supported by KAKENHI (23500211).

Cite this article

Yudistira, N., Kurita, T. Deep Packet Flow: Action Recognition via Multiresolution Deep Wavelet Packet of Local Dense Optical Flows. J Sign Process Syst 91, 609–625 (2019). https://doi.org/10.1007/s11265-018-1363-x

DOI: https://doi.org/10.1007/s11265-018-1363-x