Abstract

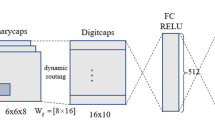

A Capsule Network (CapsNet) is a relatively new classifier and one of the possible successors of Convolutional Neural Networks (CNNs). CapsNet preserves the spatial hierarchies between features and outperforms CNNs at classifying images that contain overlapping categories. Although CapsNet works well on small-scale datasets such as MNIST, it fails to achieve a similar level of performance on more complicated datasets and in real applications. In addition, CapsNet is slower than CNNs on the same task and relies on a larger number of parameters. In this work, we introduce the Convolutional Fully-Connected Capsule Network (CFC-CapsNet), which addresses these shortcomings by creating capsules with a different method: a new layer (the CFC layer) that replaces the conventional capsule-creation step. CFC-CapsNet produces fewer yet more powerful capsules, resulting in higher network accuracy. Our experiments on the CIFAR-10, SVHN and Fashion-MNIST datasets show that, compared to conventional CapsNet, CFC-CapsNet achieves competitive accuracy, trains and infers faster, and uses fewer parameters.

Notes

In this implementation, the output of the second convolutional layer (256 feature maps) is divided into 32 “capsule groups”, each producing 8D capsules.
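
The grouping described in this note can be illustrated with a minimal NumPy sketch. It is not the authors' code: the 6×6 spatial grid, the batch size, the assignment of contiguous channels to each group, and the helper name `to_capsules` are all assumptions made for illustration. Only the 256 feature maps, the 32 capsule groups and the 8D capsules come from the note.

```python
import numpy as np

# Assumed shapes for illustration: batch of 2, 6x6 spatial grid.
# From the note: 256 feature maps = 32 capsule groups x 8 dimensions.
batch, channels, height, width = 2, 256, 6, 6
num_groups, caps_dim = 32, 8

rng = np.random.default_rng(0)
feature_maps = rng.standard_normal((batch, channels, height, width))


def to_capsules(x, num_groups, caps_dim):
    """Hypothetical helper: regroup conv feature maps into capsule vectors.

    Assumes each group owns `caps_dim` contiguous channels, so every
    spatial position in a group yields one `caps_dim`-dimensional capsule.
    """
    b, c, h, w = x.shape
    assert c == num_groups * caps_dim, "channels must equal groups * caps_dim"
    x = x.reshape(b, num_groups, caps_dim, h, w)
    x = x.transpose(0, 1, 3, 4, 2)  # (b, groups, h, w, caps_dim)
    return x.reshape(b, num_groups * h * w, caps_dim)


capsules = to_capsules(feature_maps, num_groups, caps_dim)
print(capsules.shape)  # (2, 1152, 8): 32 groups * 6 * 6 positions, 8D each
```

Under these assumed shapes, each image yields 32 × 6 × 6 = 1152 capsules of dimension 8. The sketch covers only the regrouping step and omits any non-linearity or routing applied to the capsules afterwards.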

Funding

This research has been funded in part or completely by the Computing Hardware for Emerging Intelligent Sensory Applications (COHESA) project. COHESA is financed under the Natural Sciences and Engineering Research Council of Canada (NSERC) Strategic Networks grant number NETGP485577-15.

About this article

Cite this article

Shiri, P., Baniasadi, A. Convolutional Fully-Connected Capsule Network (CFC-CapsNet): A Novel and Fast Capsule Network. J Sign Process Syst 94, 645–658 (2022). https://doi.org/10.1007/s11265-021-01731-6

DOI: https://doi.org/10.1007/s11265-021-01731-6