Abstract

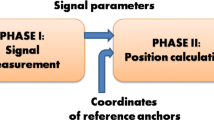

Device-free Localization (DfL) systems offer real-time indoor localization of people without any electronic devices attached to their bodies. The human body influences radio wave propagation across wireless links and changes the Received Signal Strength (RSS). Wireless Sensor Network (WSN) nodes can easily measure these RSS changes, and appropriate Radio Tomographic Imaging (RTI) algorithms can then process the RSS data to localize people. This paper investigates how to choose a near-optimal regularization parameter during the regularization process for indoor DfL and describes an experimental indoor DfL setup realized with a Sun SPOT based WSN. The work elaborates on the numerical calculation of the near-optimal regularization parameter using the trade-off curve criterion. The calculated parameter yields a conclusive RTI image with sufficient localization precision for eHealth or other ambient-assisted-living applications, where the error tolerance is on the scale of several tens of centimeters. The value of the regularization parameter matches the empirically derived value obtained in the authors' previous work.

Acknowledgements

This work is supported by the EC FP7 eWall project (http://ewallproject.eu/) No. 610658. The authors would like to thank everyone involved.

Appendix: Singular Value Decomposition, SVD and Trade-off Curve, Curvature of the Logarithmic Trade-off Curve

1.1 Singular Value Decomposition

This appendix gives the analytical equations obtained from the trade-off curve analysis when Tikhonov regularization is employed. For common engineering problems, it is reasonable to assume that the solution is stable (it does not experience severe and sudden deviations), so the assumption that \({{\varvec{P}}}\) equals the identity matrix is acceptable. Since no a priori estimate of the true solution is available, the vector \({{\varvec{x}}}_{0}\) is set to zero.

The singular value decomposition (SVD) breaks down the \(L{\times }P\) weighting matrix \({{\varvec{A}}}\) (L is the number of links; P is the number of pixels) into singular vectors and singular values. When \(L < P\), the SVD results in

$$\begin{aligned} {{\varvec{A}}}=\sum _{i=1}^L{{\varvec{u}}}_{i}\sigma _{i}{{\varvec{v}}}_{i}^T \qquad (9) \end{aligned}$$

where \({{\varvec{u}}}_{i}\) and \({{\varvec{v}}}_{i}\) are the singular vectors and \(\sigma _{i}\) are the singular values, with the property

$$\begin{aligned} \sigma _{1}\ge \sigma _{2}\ge \cdots \ge \sigma _{L}\ge 0. \qquad (10) \end{aligned}$$

The singular vectors \({{\varvec{u}}}_{i}\) and \({{\varvec{v}}}_{i}\) are orthonormal (mutually orthogonal and of unit length), i.e., \({{\varvec{u}}}_{i}^T{{\varvec{u}}}_{j} = {{\varvec{v}}}_{i}^T{{\varvec{v}}}_{j} = \delta _{ij}\).

The entries of the singular vectors \({{\varvec{u}}}_{i}\) and \({{\varvec{v}}}_{i}\) tend to alternate between positive and negative values, whereas the singular values \(\sigma _{i}\) decrease monotonically towards 0. The singular vectors that belong to larger singular values exhibit fewer oscillations, and vice versa.
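As a minimal numerical sketch of the properties above, the following Python snippet applies NumPy's `numpy.linalg.svd` to a random stand-in for the weighting matrix. The sizes (6 links, 15 pixels), the random matrix and the helper name `thin_svd` are illustrative assumptions, not values from the experimental setup.

```python
import numpy as np


def thin_svd(A):
    """Return U (L x L), s (L,), Vt (L x P) with A = U @ diag(s) @ Vt for L <= P."""
    return np.linalg.svd(A, full_matrices=False)


rng = np.random.default_rng(0)
L, P = 6, 15                      # hypothetical: 6 links, 15 pixels (L < P)
A = rng.random((L, P))            # random stand-in for the weighting matrix
U, s, Vt = thin_svd(A)

# Rebuild A as a sum of rank-one terms sigma_i * u_i * v_i^T.
A_rebuilt = sum(s[i] * np.outer(U[:, i], Vt[i]) for i in range(L))

# Orthonormality: U^T U and V^T V should both equal the L x L identity.
gram_u = U.T @ U
gram_v = Vt @ Vt.T
```

NumPy returns the singular values already sorted in non-increasing order, so `s` can be used directly wherever the ordering of \(\sigma _{i}\) matters.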

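The least squares method is the most convenient way to solve the minimization problem (7), which yields

$$\begin{aligned} {{\varvec{x}}}_{\lambda }=\left( {{\varvec{A}}}^{T}{{\varvec{A}}}+\lambda ^2{{\varvec{I}}}\right) ^{-1}{{\varvec{A}}}^{T}{{\varvec{y}}}. \qquad (11) \end{aligned}$$

Applying the SVD to (11) gives

$$\begin{aligned} {{\varvec{x}}}_{\lambda }=\sum _{i=1}^L f_{i}\frac{{{\varvec{u}}}_{i}^T{{\varvec{y}}}}{\sigma _{i}}{{\varvec{v}}}_{i} \qquad (12) \end{aligned}$$

where \(f_{1}, f_{2},\ldots\, ,f_{L}\) are the Tikhonov filter coefficients, defined as

$$\begin{aligned} f_{i}=\frac{\sigma _{i}^2}{\sigma _{i}^2+\lambda ^2},\quad i=1,\ldots ,L. \qquad (13) \end{aligned}$$

For the unregularized solution (\(\lambda = 0\)), all coefficients \(f_{i}\) become 1. When regularization is employed (\(\lambda > 0\)), the filter coefficients suppress the influence of the small singular values on the regularized solution \({{\varvec{x}}}_{\lambda }\).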
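The effect of the filter coefficients can be checked numerically. The sketch below assumes the standard Tikhonov form \(f_{i}=\sigma _{i}^2/(\sigma _{i}^2+\lambda ^2)\) with \({{\varvec{P}}}={{\varvec{I}}}\) and \({{\varvec{x}}}_{0}={{\varvec{0}}}\), and compares the SVD-based solution with a direct solve of the regularized normal equations. The matrix, the data vector, the value \(\lambda = 0.5\) and the function names are hypothetical.

```python
import numpy as np


def filter_factors(s, lam):
    """Tikhonov filter coefficients f_i = s_i^2 / (s_i^2 + lam^2)."""
    return s**2 / (s**2 + lam**2)


def tikhonov_svd(A, y, lam):
    """Regularized solution assembled from the SVD and the filter coefficients."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    beta = U.T @ y                       # coefficients u_i^T y
    return Vt.T @ (filter_factors(s, lam) * beta / s)


rng = np.random.default_rng(1)
A = rng.random((6, 15))                  # hypothetical 6 links x 15 pixels
y = rng.random(6)                        # hypothetical RSS-change vector
lam = 0.5

x_svd = tikhonov_svd(A, y, lam)
# Direct regularized least-squares solution (P = I, x_0 = 0), for comparison.
x_direct = np.linalg.solve(A.T @ A + lam**2 * np.eye(15), A.T @ y)
s = np.linalg.svd(A, compute_uv=False)
f = filter_factors(s, lam)
```

Here `f` decreases together with the singular values, so the terms that belong to small \(\sigma _{i}\) contribute least to `x_svd`.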

The residual is given by

$$\begin{aligned} {{\varvec{Ax}}}_{\lambda }-{{\varvec{y}}}=-\sum _{i=1}^L\left( 1-f_{i}\right) \left( {{\varvec{u}}}_{i}^T{{\varvec{y}}}\right) {{\varvec{u}}}_{i}-{{\varvec{y}}}_{0} \qquad (14) \end{aligned}$$

where the vector \({{\varvec{y}}}_{0}={{\varvec{y}}}-\sum _{i=1}^L{{\varvec{u}}}_{i}{{\varvec{u}}}_{i}^T{{\varvec{y}}}\) is the part of \({{\varvec{y}}}\) that is not affected by the filter coefficients. In contrast to the regularized solution, the filter coefficients here suppress the influence of the larger singular values on the residual.

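From (12) and (14), the squared norms of the solution and of the residual are:

$$\begin{aligned} \left\| {{\varvec{x}}}_{\lambda }\right\| _{2}^2=\sum _{i=1}^L\left( f_{i}\frac{{{\varvec{u}}}_{i}^T{{\varvec{y}}}}{\sigma _{i}}\right) ^2 \qquad (15) \end{aligned}$$

$$\begin{aligned} \left\| {{\varvec{Ax}}}_{\lambda }-{{\varvec{y}}}\right\| _{2}^2=\sum _{i=1}^L\left( \left( 1-f_{i}\right) {{\varvec{u}}}_{i}^T{{\varvec{y}}}\right) ^2+\left\| {{\varvec{y}}}_{0}\right\| _{2}^2 \qquad (16) \end{aligned}$$

Equations (15) and (16), together with (13), reveal the influence of \(\lambda\) on the quality of the final regularized solution and help to explain the rationale of the trade-off curve criterion.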
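The two squared norms can be evaluated either from the SVD coefficients or from an explicit regularized solve. The sketch below assumes the forms \(\sum _{i}(f_{i}\,{{\varvec{u}}}_{i}^T{{\varvec{y}}}/\sigma _{i})^2\) for the solution norm and \(\sum _{i}((1-f_{i})\,{{\varvec{u}}}_{i}^T{{\varvec{y}}})^2+\Vert {{\varvec{y}}}_{0}\Vert _{2}^2\) for the residual norm; the test matrix, the data and the function names are hypothetical.

```python
import numpy as np


def squared_norms_svd(A, y, lam):
    """Return (||x_lam||^2, ||A x_lam - y||^2) computed from SVD coefficients."""
    U, s, _ = np.linalg.svd(A, full_matrices=False)
    beta = U.T @ y
    f = s**2 / (s**2 + lam**2)
    y0 = y - U @ beta                    # part of y outside span{u_i}
    eta = np.sum((f * beta / s) ** 2)
    rho = np.sum(((1.0 - f) * beta) ** 2) + y0 @ y0
    return eta, rho


def squared_norms_direct(A, y, lam):
    """Same two quantities from an explicit regularized solve."""
    x = np.linalg.solve(A.T @ A + lam**2 * np.eye(A.shape[1]), A.T @ y)
    r = A @ x - y
    return x @ x, r @ r


rng = np.random.default_rng(2)
A = rng.random((6, 15))                  # hypothetical weighting matrix
y = rng.random(6)                        # hypothetical measurement vector
```

Calling both functions with the same \(\lambda\), for example `squared_norms_svd(A, y, 0.5)` and `squared_norms_direct(A, y, 0.5)`, should give matching pairs up to rounding.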

1.2 SVD and Trade-off Curve

In reality, errors (denoted by the vector \({{\varvec{e}}}\)) inevitably influence any measurement process, so the gathered data \({{\varvec{y}}}\) are

$$\begin{aligned} {{\varvec{y}}}=\bar{{{\varvec{y}}}}+{{\varvec{e}}} \qquad (17) \end{aligned}$$

where \(\bar{{{\varvec{y}}}}\) are the exact undisturbed measurements and \(\bar{{{\varvec{x}}}}={{\varvec{A}}}^{\dagger }\bar{{{\varvec{y}}}}\) is the exact solution (\({{\varvec{A}}}^{\dagger }\) denotes the pseudo-inverse of \({{\varvec{A}}}\)).

Then, the regularized solution is

$$\begin{aligned} {{\varvec{x}}}_{\lambda }=\bar{{{\varvec{x}}}}_{\lambda }+{{\varvec{x}}}_{\lambda }^e \qquad (18) \end{aligned}$$

where \(\bar{{{\varvec{x}}}}_{\lambda }=\left( {{\varvec{A}}}^{T}{{\varvec{A}}}+\lambda ^2{{\varvec{I}}}\right) ^{-1}{{\varvec{A}}}^{T}\bar{{{\varvec{y}}}}\) is the part that corresponds to the exact undisturbed measurements, and \({{\varvec{x}}}_{\lambda }^e=\left( {{\varvec{A}}}^{T}{{\varvec{A}}}+\lambda ^2{{\varvec{I}}}\right) ^{-1}{{\varvec{A}}}^{T}{{\varvec{e}}}\) is the part that corresponds to the pure errors.
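Because the regularized solution is linear in the data, the split into an exact-data part and a pure-error part can be confirmed directly. The following is a small sketch under assumed values: the matrix, the image used to generate data, the noise level 0.01 and \(\lambda = 0.3\) are all hypothetical.

```python
import numpy as np


def tikhonov(A, y, lam):
    """Tikhonov solution (A^T A + lam^2 I)^{-1} A^T y."""
    return np.linalg.solve(A.T @ A + lam**2 * np.eye(A.shape[1]), A.T @ y)


rng = np.random.default_rng(3)
A = rng.random((6, 15))                      # hypothetical weighting matrix
x_gen = rng.random(15)                       # hypothetical image used to generate data
y_exact = A @ x_gen                          # undisturbed measurements
e = 0.01 * rng.standard_normal(6)            # hypothetical measurement errors
lam = 0.3

x_lam = tikhonov(A, y_exact + e, lam)        # solution from disturbed data
x_bar_lam = tikhonov(A, y_exact, lam)        # part due to the exact data
x_e_lam = tikhonov(A, e, lam)                # part due to the pure errors
```

The sum `x_bar_lam + x_e_lam` reproduces `x_lam`, which is what allows the two trade-off curves to be analysed separately.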

It is assumed that \(\bar{{{\varvec{y}}}}\) satisfies the discrete Picard condition, which states that the coefficients \(\left| {{\varvec{u}}}_{i}^T\bar{{{\varvec{y}}}}\right|\) decline faster than the singular values \(\sigma _{i}\). A consequence of this assumption is that the exact solution coefficients \(\left| {{\varvec{v}}}_{i}^T\bar{{{\varvec{x}}}}\right| =\left| {{\varvec{u}}}_{i}^T\bar{{{\varvec{y}}}}/\sigma _{i}\right|\) also decline, which ensures that the exact solution has a moderate, bounded norm. This is an essential characteristic of realistic inverse problems: it guarantees that the regularized solution is a good approximation, so it makes sense to search for a near-optimal \(\lambda\).

Let k be the number of filter coefficients close to 1 (e.g. greater than 0.5). From (13) it follows that the k-th singular value satisfies \(\sigma _{k}\cong \lambda\). The boundary index k divides the singular values into “large” and “small” ones, so it is very important for specifying \(\lambda\). By the nature of k, and knowing that the coefficients \(\left| {{\varvec{v}}}_{i}^T\bar{{{\varvec{x}}}}\right|\) decline, it follows that, for not too large \(\lambda\), the sum in (15) consists mainly of its first k terms. Thus, the squared solution norm for the exact undisturbed measurements \(\bar{{{\varvec{y}}}}\) is

as long as \(\lambda\) is small enough. For (19), as \(\lambda\) tends to infinity, k tends to zero, and thus \(\left\| \bar{{{\varvec{x}}}}_{\lambda }\right\| _{2}\) also tends to zero. Conversely, as \(\lambda\) tends to zero, \(\left\| \bar{{{\varvec{x}}}}_{\lambda }\right\| _{2}\) tends to \(\left\| \bar{{{\varvec{x}}}}\right\| _{2}\).

The squared residual norm for the exact undisturbed measurements \(\bar{{{\varvec{y}}}}\) is

For (20), as \(\lambda\) tends to infinity, the residual norm tends to \(\left\| \bar{{{\varvec{y}}}}\right\| _{2}\). Conversely, for small \(\lambda\), the residual norm vanishes.

The conclusion about the trade-off curve for the exact undisturbed measurements \(\bar{{{\varvec{y}}}}\) is that it is mainly a horizontal line at \(\left\| \bar{{{\varvec{x}}}}_{\lambda }\right\| _{2}\,\cong\, \left\| \bar{{{\varvec{x}}}}\right\| _{2}\), and that for large values of \(\lambda\), \(\left\| \bar{{{\varvec{x}}}}_{\lambda }\right\| _{2}\) drops towards zero.

An analogous analysis follows for the trade-off curve of the pure errors \({{\varvec{e}}}\). The errors that disturb the measurements are random variables that are uncorrelated with each other. This means that the coefficients \({{\varvec{u}}}_{i}^T{{\varvec{e}}}\) do not depend on i,

i.e. the errors do not satisfy the discrete Picard condition. Thus, the squared solution norm for the errors is

Here, \(\sum _{i=1}^k\sigma _{i}^{-2}\) is roughly equal to \(\sigma _{k}^{-2}\,\cong\, \lambda ^{-2}\), whereas \(\sum _{i=k+1}^L\sigma _{i}^2\) is roughly equal to \(\sigma _{k+1}^2\,\cong\, \lambda ^2\), which gives

where \(c_{\lambda }\) is a quantity that declines with \(\lambda\). For (23), as \(\lambda\) tends to infinity, the solution norm tends to zero. Conversely, as \(\lambda\) tends to zero, the solution norm tends to \(\left\| {{\varvec{A}}}^{\dagger }{{\varvec{e}}}\right\| _{2}\,\cong\, \texttt {E}\left\| {{\varvec{A}}}^{\dagger }\right\| _{\texttt {F}}\). (\({{\varvec{A}}}^{\dagger }\) is the pseudo-inverse of \({{\varvec{A}}}\); F stands for the Frobenius norm, also known as the Hilbert–Schmidt norm.)

Further, the squared residual norm for the errors is

For (24), as \(\lambda\) tends to infinity, k tends to zero, and thus the residual norm tends to \(\texttt {E}\sqrt{P}\,\cong\, \left\| {{\varvec{e}}}\right\| _{2}\). Conversely, for small \(\lambda\), the residual norm vanishes.

The conclusion about the trade-off curve for the pure errors \({{\varvec{e}}}\) is that it is mainly a vertical line at \(\left\| {{\varvec{Ax}}}_{\lambda }^e-{{\varvec{e}}}\right\| _{2}\,\cong\, \left\| {{\varvec{e}}}\right\| _{2}\), and that for small values of \(\lambda\), \(\left\| {{\varvec{Ax}}}_{\lambda }^e-{{\varvec{e}}}\right\| _{2}\) drops towards zero.

Finally, the overall trade-off curve, which combines the exact undisturbed measurements \(\bar{{{\varvec{y}}}}\) and the pure errors \({{\varvec{e}}}\), formally denoted as \(\bar{{{\varvec{y}}}}+{{\varvec{e}}}\), can be considered. In the region where \(\lambda\) is small, the \({{\varvec{u}}}_{i}^T{{\varvec{e}}}\) terms prevail, so the overall trade-off curve matches the trade-off curve of the pure errors. Here, \({{\varvec{x}}}_{\lambda }\) matches \({{\varvec{x}}}_{\lambda }^e\). In the region where \(\lambda\) is large, the \({{\varvec{u}}}_{i}^T\bar{{{\varvec{y}}}}\) terms prevail, so the overall trade-off curve matches the trade-off curve of the exact undisturbed measurements. Here, \({{\varvec{x}}}_{\lambda }\) matches \(\bar{{{\varvec{x}}}}_{\lambda }\). The overall trade-off curve is in fact a transition from one line to the other. The point of greatest curvature is a turning point beyond which no additional error suppression can be achieved, and the only remaining effect would be a meaningless suppression of the solution. This turning point is therefore a good indicator of a near-optimal \(\lambda\). To make the curvature more distinctive, the curve is plotted on a logarithmic scale.
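The shape of the overall trade-off curve can be explored on a synthetic problem. The sketch below samples \(\log \left\| {{\varvec{Ax}}}_{\lambda }-{{\varvec{y}}}\right\| _{2}\) and \(\log \left\| {{\varvec{x}}}_{\lambda }\right\| _{2}\) over a logarithmic grid of \(\lambda\). Everything about the test problem is an assumption made for illustration: the sizes, the geometrically decaying singular values, the solution coefficients chosen so that the Picard condition holds, and the noise level \(10^{-3}\).

```python
import numpy as np


def tradeoff_curve(A, y, lams):
    """Return log residual norms and log solution norms for each lambda."""
    U, s, _ = np.linalg.svd(A, full_matrices=False)
    beta = U.T @ y
    y0 = y - U @ beta
    log_res, log_sol = [], []
    for lam in lams:
        f = s**2 / (s**2 + lam**2)
        g = lam**2 / (s**2 + lam**2)         # equals 1 - f, without cancellation
        log_sol.append(0.5 * np.log(np.sum((f * beta / s) ** 2)))
        log_res.append(0.5 * np.log(np.sum((g * beta) ** 2) + y0 @ y0))
    return np.array(log_res), np.array(log_sol)


# Synthetic ill-posed test problem (all values are illustrative assumptions).
rng = np.random.default_rng(4)
L, P = 20, 40
U0, _ = np.linalg.qr(rng.standard_normal((L, L)))
V0, _ = np.linalg.qr(rng.standard_normal((P, L)))
sigma = 10.0 ** (-np.arange(L) / 3.0)        # decaying singular values
A = U0 @ np.diag(sigma) @ V0.T
x_exact = V0 @ (1.0 / (1.0 + np.arange(L)))  # decaying solution coefficients
y = A @ x_exact + 1e-3 * rng.standard_normal(L)

lams = np.logspace(-7, 1, 200)
log_res, log_sol = tradeoff_curve(A, y, lams)
```

Plotting `log_sol` against `log_res` (for instance with a plotting library of choice) should show the steep error-dominated branch for small \(\lambda\) and the flat branch for larger \(\lambda\); as \(\lambda\) grows, the solution norm only decreases and the residual norm only increases.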

1.3 Curvature of the Logarithmic Trade-off Curve

Let the quantities \(\eta\) and \(\rho\) be defined as

$$\begin{aligned} \eta =\left\| {{\varvec{x}}}_{\lambda }\right\| _{2}^2,\quad \rho =\left\| {{\varvec{Ax}}}_{\lambda }-{{\varvec{y}}}\right\| _{2}^2 \qquad (25) \end{aligned}$$

and, in turn,

$$\begin{aligned} {\hat{\eta }}=\log \eta ,\quad {\hat{\rho }}=\log \rho . \qquad (26) \end{aligned}$$

The trade-off curve is a plot of \({\hat{\eta }}/2=\log \left\| {{\varvec{x}}}_{\lambda }\right\| _{2}\) against \({\hat{\rho }}/2=\log \left\| {{\varvec{Ax}}}_{\lambda }-{{\varvec{y}}}\right\| _{2}\), where \({\hat{\eta }}\) and \({\hat{\rho }}\) depend on \(\lambda\). Let \({\hat{\eta }}'\), \({\hat{\rho }}'\), \({\hat{\eta }}''\), and \({\hat{\rho }}''\) denote the first- and second-order derivatives of \({\hat{\eta }}\) and \({\hat{\rho }}\) with respect to \(\lambda\). With this notation, the curvature \(\kappa\) of the parametrically given plane curve, as a function of \(\lambda\), is

and the objective is then to find a more explicit expression for it, suitable for numerical calculation.

The first-order derivatives of \({\hat{\eta }}\) and \({\hat{\rho }}\) are

Equation (13) gives the dependence of \(f_{i}\) on \(\sigma _{i}\) and \(\lambda\), and allows the following two equations to be derived:

Considering (15), (16), (25), and using (29), it follows that the first-order derivatives of \(\eta\) and \(\rho\) are

where \(\beta _{i}={{\varvec{u}}}_{i}^T{{\varvec{y}}}\).

Relation (13) can be reformulated as

which in turn leads to an important conclusion

The second-order derivatives of \({\hat{\eta }}\) and \({\hat{\rho }}\) are

Using (32), the second-order derivative of \(\rho\) is

Substituting (28) and (33) into (27), and then exploiting (32) and (34), transforms the expression for \(\kappa\) into

where \(\eta '\) is given by (30).
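The substitution chain described above can be sketched numerically. The code below assumes \(\eta =\Vert {{\varvec{x}}}_{\lambda }\Vert _{2}^2\), \(\rho =\Vert {{\varvec{Ax}}}_{\lambda }-{{\varvec{y}}}\Vert _{2}^2\) with \({{\varvec{y}}}_{0}={{\varvec{0}}}\), the derivative \(\eta '=-\frac{4}{\lambda }\sum _{i}(1-f_{i})f_{i}^2\beta _{i}^2/\sigma _{i}^2\), the relation \(\rho '=-\lambda ^2\eta '\), and the parametric curvature \(\kappa =2({\hat{\rho }}'{\hat{\eta }}''-{\hat{\rho }}''{\hat{\eta }}')/(({\hat{\rho }}')^2+({\hat{\eta }}')^2)^{3/2}\). The closed form used in `curvature` was worked out for this sketch from those assumptions, with the sign chosen so that the corner of the curve has positive curvature; it is not claimed to be typeset identically to the expression in the text. The synthetic singular values, coefficients and noise level are hypothetical.

```python
import numpy as np


def eta_rho(s, beta, lam):
    """Squared solution norm eta and squared residual norm rho (y_0 = 0 assumed)."""
    f = s**2 / (s**2 + lam**2)
    g = lam**2 / (s**2 + lam**2)             # 1 - f, without cancellation
    return np.sum((f * beta / s) ** 2), np.sum((g * beta) ** 2), f, g


def curvature(s, beta, lam):
    """Closed-form curvature of the log-log trade-off curve at lambda."""
    eta, rho, f, g = eta_rho(s, beta, lam)
    d_eta = -(4.0 / lam) * np.sum(g * f**2 * beta**2 / s**2)   # d(eta)/d(lambda) < 0
    m = lam**2 * d_eta * rho + 2.0 * lam * eta * rho + lam**4 * eta * d_eta
    return -2.0 * eta * rho / d_eta * m / (lam**4 * eta**2 + rho**2) ** 1.5


def curvature_fd(s, beta, lam, rel_step=1e-4):
    """Finite-difference curvature, used only as an independent check."""
    h = rel_step * lam
    pts = [eta_rho(s, beta, t)[:2] for t in (lam - h, lam, lam + h)]
    le = np.log([p[0] for p in pts])         # log eta at the three points
    lr = np.log([p[1] for p in pts])         # log rho at the three points
    e1, r1 = (le[2] - le[0]) / (2 * h), (lr[2] - lr[0]) / (2 * h)
    e2 = (le[2] - 2 * le[1] + le[0]) / h**2
    r2 = (lr[2] - 2 * lr[1] + lr[0]) / h**2
    return 2.0 * (r1 * e2 - r2 * e1) / (r1**2 + e1**2) ** 1.5


# Synthetic ill-posed problem given directly by its SVD coefficients.
rng = np.random.default_rng(5)
n = 20
s = 10.0 ** (-np.arange(n) / 3.0)            # hypothetical singular values
beta = s / (1.0 + np.arange(n)) + 1e-3 * rng.standard_normal(n)

lams = np.logspace(-6, 0, 400)
kappa = np.array([curvature(s, beta, lam) for lam in lams])
lam_corner = lams[np.argmax(kappa)]          # candidate near-optimal lambda
```

A grid search is used here only for simplicity; once the region of the maximum is located, a one-dimensional optimizer could refine `lam_corner`.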

Cite this article

Chomu, K., Atanasovski, V. & Gavrilovska, L. Finding Near-Optimal Regularization Parameter for Indoor Device-free Localization. Wireless Pers Commun 92, 197–220 (2017). https://doi.org/10.1007/s11277-016-3846-z

DOI: https://doi.org/10.1007/s11277-016-3846-z