Abstract

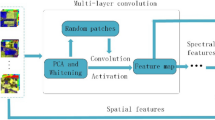

Raw hyperspectral data, when used directly as the initial features, are high-dimensional and redundant, which makes them unsuitable for subsequent analysis, so feature extraction is needed. Deep learning models are strong feature learners, but a model with too many layers loses original information during layer-by-layer feature learning, which reduces subsequent classification accuracy. To solve this problem, this paper proposes a hybrid deep learning model that combines a contractive autoencoder with a restricted Boltzmann machine to extract feature information from hyperspectral data. First, the spectral data are pre-processed and the 2D spectral data are converted into one-dimensional vectors. Then, the hybrid model is constructed and applied to the hyperspectral data with unsupervised training and supervised learning, and features are extracted gradually from the bottom layer to the top. Finally, an SVM classifier is adopted to enhance the classification of the spectral data. The proposed hybrid model is tested for feature extraction on two sets of AVIRIS data and compared with the PCA and GCA methods. The experimental results show that the feature extraction algorithm based on the hybrid deep model obtains better features with strong discriminative power, and achieves better classification accuracy with the SVM algorithm.
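The abstract does not give the model's equations, so the following is only a minimal sketch of two of the ingredients it names, under stated assumptions. It assumes a row-major layout for the 2D-to-1D conversion and a sigmoid encoder, for which the contractive autoencoder penalty (the squared Frobenius norm of the encoder Jacobian) has a simple closed form. The names `flatten`, `encode`, and `contractive_penalty` are hypothetical helpers, not taken from the paper.

```python
import math


def flatten(block):
    """Row-major flattening of a 2D block into a 1D vector (assumed layout)."""
    return [value for row in block for value in row]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def encode(x, W, b):
    """Sigmoid encoder h = s(W x + b); W holds one row per hidden unit."""
    return [
        sigmoid(sum(w * xi for w, xi in zip(row, x)) + bj)
        for row, bj in zip(W, b)
    ]


def contractive_penalty(x, W, b):
    """Squared Frobenius norm of the encoder Jacobian dh/dx at x.

    For a sigmoid encoder, dh_j/dx_i = h_j * (1 - h_j) * W[j][i], so the
    norm is a per-unit factor times the squared norm of each row of W.
    """
    h = encode(x, W, b)
    return sum(
        (hj * (1.0 - hj)) ** 2 * sum(w * w for w in row)
        for hj, row in zip(h, W)
    )


# Illustrative values only: one hidden unit, two inputs.
x = flatten([[0.0], [0.0]])
print(contractive_penalty(x, [[1.0, 0.0]], [0.0]))  # 0.0625
```

In a contractive autoencoder this penalty is added, with a weighting coefficient, to the reconstruction error, which discourages the learned features from changing sharply under small perturbations of the input spectrum.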

Acknowledgements

This work was supported by the National and International Scientific and Technological Cooperation Special Projects (No. 2015DFA00530) and by the National Natural Science Foundation of China (Nos. 61461042, 61461041).

About this article

Cite this article

Jiang, X., Xue, H., Zhang, L. et al. Hyperspectral Data Feature Extraction Using Deep Learning Hybrid Model. Wireless Pers Commun 102, 3529–3543 (2018). https://doi.org/10.1007/s11277-018-5389-y

DOI: https://doi.org/10.1007/s11277-018-5389-y