Abstract

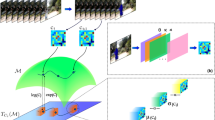

Understanding human activity is a key research area in human-centered robotic applications. In this paper, we propose complexity-based motion features for recognizing human actions. Using a time-series complexity measure, the proposed method evaluates the amount of useful information in each subsequence and selects the meaningful temporal parts of a human motion trajectory. Motion codewords are learned from these meaningful subsequences with a clustering algorithm, and motion features are then represented as a histogram of the motion codewords. Because recognition performance is sensitive to the length of a fixed sliding window, we further propose a multiscale sliding window for generating motion codewords. A random forest classifier is used for classification. To validate the proposed method, we present experimental results on two open data sets: MSR Action 3D and UTKinect.
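The pipeline described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation. It assumes a one-dimensional trajectory instead of 3D joint data, uses a simple roughness score (root of summed squared successive differences) as a stand-in for the paper's time-series complexity measure, and uses a plain k-means as the clustering algorithm. All function names, the threshold, the window lengths, and the resampling step are hypothetical choices made for illustration.

```python
import math
import random

def sliding_windows(series, lengths, step=1):
    """Yield every subsequence for each window length (multiscale sliding window)."""
    for w in lengths:
        for start in range(0, len(series) - w + 1, step):
            yield series[start:start + w]

def complexity(sub):
    """Stand-in score (an assumption, not the paper's measure)."""
    return math.sqrt(sum((b - a) ** 2 for a, b in zip(sub, sub[1:])))

def resample(sub, n):
    """Linearly resample to n points so windows of different scales are comparable."""
    out = []
    for i in range(n):
        pos = i * (len(sub) - 1) / (n - 1)
        lo = int(pos)
        hi = min(lo + 1, len(sub) - 1)
        frac = pos - lo
        out.append(sub[lo] * (1 - frac) + sub[hi] * frac)
    return out

def select_subsequences(series, lengths, threshold, n_points=8):
    """Keep only subsequences whose complexity score reaches the threshold."""
    return [resample(s, n_points)
            for s in sliding_windows(series, lengths)
            if complexity(s) >= threshold]

def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def nearest(p, centers):
    """Index of the closest codeword."""
    return min(range(len(centers)), key=lambda i: sq_dist(p, centers[i]))

def kmeans(points, k, iters=20, seed=0):
    """Plain k-means; the returned centers play the role of motion codewords."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for p in points:
            groups[nearest(p, centers)].append(p)
        # Keep the old center when a cluster ends up empty.
        centers = [[sum(col) / len(g) for col in zip(*g)] if g else c
                   for g, c in zip(groups, centers)]
    return centers

def codeword_histogram(series, centers, lengths, threshold, n_points=8):
    """Normalized histogram of codeword assignments for one trajectory."""
    hist = [0.0] * len(centers)
    for s in select_subsequences(series, lengths, threshold, n_points):
        hist[nearest(s, centers)] += 1
    total = sum(hist)
    return [h / total for h in hist] if total else hist

# Toy trajectory: flat, then oscillating, then flat again.
train = [0.0] * 10 + [math.sin(0.5 * t) for t in range(30)] + [0.0] * 10
lengths = (5, 10)
codewords = kmeans(select_subsequences(train, lengths, threshold=0.3), k=3)
feature = codeword_histogram(train, codewords, lengths, threshold=0.3)
```

In this sketch the flat segments score zero and are discarded, so only the oscillating part contributes codewords. The resulting histogram is the feature vector that would be passed to a classifier; the random forest step is omitted here.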

Acknowledgments

This work was supported by the Global Frontier R&D Program on “Human-centered Interaction for Coexistence”, funded by a National Research Foundation of Korea grant from the Korean Government (MEST) (NRFMIAXA003-2010-0029744). This study was also conducted with the support of the Korea Institute of Industrial Technology under the project “Development of automatic programming framework for manufacturing tasks by analyzing human activity” (KITECH E0-16-0056).

About this article

Cite this article

Kwon, W.Y., Lee, S.H. & Suh, I.H. Motion codeword generation using selective subsequence clustering for human action recognition. Intel Serv Robotics 10, 41–54 (2017). https://doi.org/10.1007/s11370-016-0208-3

DOI: https://doi.org/10.1007/s11370-016-0208-3