Abstract

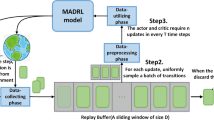

Reinforcement learning, as a form of autonomous learning, is driving artificial intelligence (AI) toward practical applications. Having demonstrated the potential to significantly improve on synchronous parallel learning, the parallel-computing-based asynchronous advantage actor-critic (A3C) opens a new door for reinforcement learning. Unfortunately, the influence of this acceleration on the robustness of A3C has been largely overlooked. In this paper, we perform the first robustness assessment of A3C based on parallel computing. By perceiving the policy’s actions, we construct a global matrix of action probability deviation and define two novel measures, skewness and sparseness, which together form an integral robustness measure. Building on this static assessment, we then develop a dynamic robustness assessment algorithm through situational whole-space state sampling over changing episodes. Extensive experiments with different combinations of agent number and learning rate are conducted on an A3C-based pathfinding application, demonstrating that the proposed assessment can effectively measure the robustness of A3C, achieving an accuracy of 83.3%.
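The abstract names a matrix of action probability deviation and two statistics computed from it, but it does not give their definitions. As a rough illustration only, the sketch below assumes the matrix is the element-wise difference between two state-by-action probability tables, uses the textbook population skewness, and treats sparseness as the fraction of near-zero entries. The function names, the reference policy, and the `tol` threshold are all hypothetical and are not taken from the paper.

```python
from statistics import fmean, pstdev


def deviation_matrix(policy_probs, reference_probs):
    """Element-wise difference between two state-by-action probability tables.

    Assumption: rows are sampled states, columns are actions.
    """
    return [
        [p - q for p, q in zip(p_row, q_row)]
        for p_row, q_row in zip(policy_probs, reference_probs)
    ]


def skewness(matrix):
    """Population skewness of all matrix entries (0.0 if they are constant)."""
    values = [v for row in matrix for v in row]
    mean = fmean(values)
    std = pstdev(values)
    if std == 0.0:
        return 0.0
    return fmean([((v - mean) / std) ** 3 for v in values])


def sparseness(matrix, tol=1e-3):
    """Fraction of entries with magnitude at most tol (hypothetical threshold)."""
    values = [v for row in matrix for v in row]
    return sum(abs(v) <= tol for v in values) / len(values)


# Toy example: three sampled states, two actions each.
policy = [[0.9, 0.1], [0.5, 0.5], [0.2, 0.8]]
reference = [[0.9, 0.1], [0.5, 0.5], [0.6, 0.4]]
deviation = deviation_matrix(policy, reference)
print(skewness(deviation), sparseness(deviation))
```

In this toy case the two policies disagree in only one state, so most deviations are zero and the sparseness is high, while the symmetric deviations leave the skewness near zero. How the paper combines the two statistics into its integral robustness measure is not stated in the abstract and is not attempted here.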

Cite this article

Chen, T., Liu, JQ., Li, H. et al. Robustness Assessment of Asynchronous Advantage Actor-Critic Based on Dynamic Skewness and Sparseness Computation: A Parallel Computing View. J. Comput. Sci. Technol. 36, 1002–1021 (2021). https://doi.org/10.1007/s11390-021-1217-z

DOI: https://doi.org/10.1007/s11390-021-1217-z