Abstract

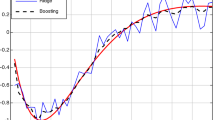

Understanding the empirical success of boosting algorithms is an important theoretical problem in machine learning. One of the most influential explanations is the margin theory, which provides a series of upper bounds on the generalization error of any voting classifier in terms of the margins of the training data. Recently, an equilibrium margin (Emargin) bound, which is sharper than the previously well-known margin bounds, was proposed. In this paper, we conduct extensive experiments to test the Emargin theory. Specifically, we develop an efficient algorithm that, given a boosting classifier (or, more generally, any voting classifier), learns a new voting classifier that usually has a smaller Emargin bound. We then compare the performance of the two classifiers and find that the new classifier often has a smaller test error, which agrees with what the Emargin theory predicts.
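Margin bounds of this kind are stated in terms of the normalized margin of each training example under a voting classifier, y_i · Σ_t α_t h_t(x_i) / Σ_t α_t. This is the standard definition from margin theory for nonnegative voting weights α_t and base classifiers h_t with outputs in {-1, +1}. The sketch below shows how these margins and their empirical distribution can be computed. It assumes the base-classifier predictions are stored as an m × T matrix. The function names `voting_margins` and `margin_cdf` are hypothetical. The sketch does not reproduce the Emargin bound itself or the authors' new algorithm.

```python
import numpy as np


def voting_margins(H, y, alpha):
    """Normalized margins y_i * sum_t alpha_t h_t(x_i) / sum_t alpha_t.

    H:     (m, T) array of base-classifier outputs in {-1, +1} (assumed layout)
    y:     (m,) array of labels in {-1, +1}
    alpha: (T,) nonnegative voting weights with a positive sum
    """
    H = np.asarray(H, dtype=float)
    y = np.asarray(y, dtype=float)
    alpha = np.asarray(alpha, dtype=float)
    if np.any(alpha < 0) or alpha.sum() <= 0:
        raise ValueError("alpha must be nonnegative with a positive sum")
    # Convex combination of the base classifiers: values lie in [-1, 1].
    f = H @ (alpha / alpha.sum())
    # A positive margin means the example is classified correctly.
    return y * f


def margin_cdf(margins, theta):
    """Empirical fraction of training examples whose margin is <= theta."""
    return float(np.mean(np.asarray(margins) <= theta))


# Toy example: 3 training points, 3 base classifiers, unnormalized weights.
H = [[1, 1, -1],
     [1, -1, -1],
     [1, 1, 1]]
y = [1, -1, 1]
m = voting_margins(H, y, [2, 1, 1])
print(m, margin_cdf(m, 0.0))
```

The weights are normalized inside the function, so rescaling α does not change the margins. Margin-based bounds depend only on this convex combination and not on the raw weights. The empirical margin distribution `margin_cdf(m, theta)` is the training-data quantity that margin bounds trade off against the complexity of the base-classifier class.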




Cite this article

Wang, L., Deng, X., Jing, Z. et al. Further results on the margin explanation of boosting: new algorithm and experiments. Sci. China Inf. Sci. 55, 1551–1562 (2012). https://doi.org/10.1007/s11432-012-4602-y
