Abstract

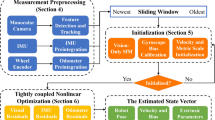

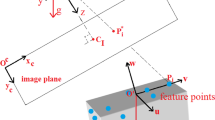

Visual tracking is a widely used computer vision technique with applications such as camera pose estimation. Conventional methods rely mostly on vision alone and, because they use only one sensor, require complex image processing. This paper proposes a novel sensor fusion algorithm that fuses data from a camera and a fiber-optic gyroscope. In this system, the camera acquires images and detects the object directly at the beginning of each tracking stage, while the relative motion between the camera and the object, measured by the fiber-optic gyroscope, is used to track the object coordinates, which improves the effectiveness of visual tracking. The sensor fusion algorithm can therefore overcome the drawbacks of each individual sensor and exploit their combination to track the object accurately. In addition, the computational complexity of the proposed algorithm is substantially lower than that of existing approaches (an 86% reduction for 0.5 min of visual tracking). Experimental results show that the visual tracking system reduces the tracking error by 6.15% compared with a conventional vision-only tracking scheme (edge detection), and that the proposed sensor fusion algorithm can achieve long-term tracking with the help of bias drift suppression calibration.
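The division of labor described above (one camera detection at the start of a tracking stage, then gyroscope-driven coordinate updates) can be illustrated with a minimal sketch. It is not the authors' algorithm: it assumes a pinhole camera, pure rotation about a single vertical axis, and an object on the horizontal image centerline, and every name in it (`integrate_gyro`, `propagate_pixel`, `track`, `focal_px`, `cx`) is hypothetical.

```python
import math


def integrate_gyro(rates_rad_s, dt):
    """Integrate angular-rate samples (rad/s) into a rotation angle (rad).

    Assumption: simple rectangular integration with a fixed sample period dt.
    """
    return sum(rate * dt for rate in rates_rad_s)


def propagate_pixel(u0, focal_px, cx, delta_rad):
    """Predict the horizontal pixel coordinate after the camera yaws by delta_rad.

    Assumption: pinhole model, rotation about the vertical axis only.
    """
    # Bearing of the object relative to the optical axis before the rotation.
    bearing = math.atan2(u0 - cx, focal_px)
    # Rotating the camera toward the object reduces its bearing by delta_rad.
    return cx + focal_px * math.tan(bearing - delta_rad)


def track(u_detected, rate_batches, dt, focal_px, cx):
    """Start from one camera detection, then update it from gyro data only."""
    u = u_detected
    trajectory = [u]
    for batch in rate_batches:
        u = propagate_pixel(u, focal_px, cx, integrate_gyro(batch, dt))
        trajectory.append(u)
    return trajectory
```

In this sketch the image is processed only once per tracking stage, to obtain `u_detected`; each later update costs a handful of arithmetic operations, which is the intuition behind the lower computational load reported above. A real system would also need the bias drift calibration mentioned in the abstract, which is not modeled here.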

Cite this article

Tan, Z., Yang, C., Li, Y. et al. A low-complexity sensor fusion algorithm based on a fiber-optic gyroscope aided camera pose estimation system. Sci. China Inf. Sci. 59, 042412 (2016). https://doi.org/10.1007/s11432-015-5516-2

DOI: https://doi.org/10.1007/s11432-015-5516-2