Abstract

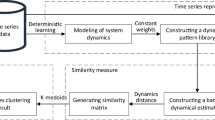

In this paper, we propose a method based on deterministic learning for the rapid recognition of dynamical patterns represented by sampling sequences. First, for sequences obtained by sampling a periodic or recurrent trajectory (a dynamical pattern) generated by a nonlinear dynamical system, a sampled-data deterministic learning algorithm is employed to model and identify the inherent system dynamics. Second, a definition is formulated that characterizes the similarity between sampling sequences (dynamical patterns) in terms of the differences in their system dynamics. Third, a set of discrete-time dynamical estimators is constructed from the learned knowledge, the similarity between the test pattern and each training pattern is measured by the average L1 norm of the synchronization errors, and general conditions for accurate and rapid recognition of dynamical patterns are given in a sampled-data framework. Finally, numerical examples illustrate the effectiveness of the proposed method. We demonstrate not only that a test pattern can be rapidly recognized as similar to a training pattern, but also that the proposed recognition conditions can be verified step by step from historical sampling data. This distinguishes the method from previous work on rapid dynamical pattern recognition for continuous-time nonlinear systems, in which the recognition conditions are difficult to verify using continuous-time signals.
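The recognition step described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation. It assumes an estimator of the form x̄_k(t+1) = x(t) + a(x̄_k(t) − x(t)) + T·f_k(x(t)), where `f_k` stands in for the dynamics learned from training pattern k, `a` is an assumed design gain with |a| < 1, and `T` is the sampling period. The function names, the gain value, and the toy oscillator models are all hypothetical and chosen only for illustration.

```python
def simulate(f, x0, T, n):
    """Euler-sample n points of x(t+1) = x(t) + T*f(x(t)).

    Stand-in for a measured sampling sequence (assumption for this sketch).
    """
    xs = [list(x0)]
    for _ in range(n - 1):
        x = xs[-1]
        dx = f(x)
        xs.append([xi + T * di for xi, di in zip(x, dx)])
    return xs


def avg_l1_sync_error(f_k, xs, T, a=0.5, window=200):
    """Drive one discrete-time estimator with the test sequence xs and return
    the average L1 norm of its synchronization error over the last `window` steps.

    f_k: assumed stand-in for the dynamics learned from training pattern k.
    a:   assumed estimator gain, |a| < 1, so the error does not accumulate.
    """
    xbar = list(xs[0])  # start the estimator on the first sample
    errs = []
    for t in range(len(xs) - 1):
        x = xs[t]
        fx = f_k(x)
        # Assumed estimator update: xbar(t+1) = x(t) + a*(xbar(t) - x(t)) + T*f_k(x(t))
        xbar = [xi + a * (xb - xi) + T * fi for xi, xb, fi in zip(x, xbar, fx)]
        # L1 norm of the synchronization error at step t+1
        errs.append(sum(abs(xb - xn) for xb, xn in zip(xbar, xs[t + 1])))
    tail = errs[-window:]
    return sum(tail) / len(tail)


def recognize(models, xs, T):
    """Return the training pattern with the smallest average L1 error, plus all errors."""
    errs = {name: avg_l1_sync_error(f_k, xs, T) for name, f_k in models.items()}
    return min(errs, key=errs.get), errs


if __name__ == "__main__":
    T = 0.01
    # Hypothetical "learned" dynamics of two training patterns (toy oscillators).
    models = {
        "pattern_A": lambda x: [x[1], -1.0 * x[0]],
        "pattern_B": lambda x: [x[1], -4.0 * x[0]],
    }
    # Test pattern whose dynamics are close to pattern_A.
    xs = simulate(lambda x: [x[1], -1.05 * x[0]], [1.0, 0.0], T, 1000)
    best, errs = recognize(models, xs, T)
    print(best, errs)
```

Under this assumed estimator the error obeys e(t+1) = a·e(t) + T·(f_k(x(t)) − f(x(t))), so the averaged error directly reflects the difference in dynamics along the test trajectory. Because each step uses only stored samples, the error can be evaluated step by step from historical sampling data.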

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China (Grant No. 61890922) and in part by the National Major Scientific Instruments Development Project (Grant No. 61527811).

Cite this article

Wu, W., Wang, Q., Yuan, C. et al. Rapid dynamical pattern recognition for sampling sequences. Sci. China Inf. Sci. 64, 132201 (2021). https://doi.org/10.1007/s11432-019-2878-y

DOI: https://doi.org/10.1007/s11432-019-2878-y