Abstract

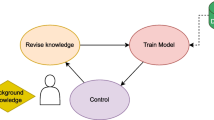

Bridging neural network learning and symbolic reasoning is crucial for strong AI. A few pioneering studies have made progress on logical reasoning tasks that require partitioned inputs of instances (e.g., sequential data), from which a final concept is formed based on the complex (possibly logical) relationships among the instances. However, these methods cannot be applied to low-level cognitive tasks that take unpartitioned inputs (e.g., raw images), such as object recognition and text classification. In this paper, we propose abductive subconcept learning (ASL) to bridge neural network learning and symbolic reasoning on unsegmented image classification tasks. ASL uses deep learning and abductive logical reasoning to jointly learn subconcept perception and secondary reasoning. Specifically, it first employs meta-interpretive learning (MIL) to induce first-order logical hypotheses that capture the relationships between the high-level subconcepts accounting for the target concept. Then, it uses the groundings of the logical hypotheses as labels to train a deep learning model that identifies the subconcepts from unpartitioned data. ASL jointly trains the deep learning model and learns the MIL theory by minimizing the inconsistency between their grounded outputs. Experimental results on several object recognition tasks show that ASL successfully integrates machine learning and logical reasoning, producing accurate and interpretable results.
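The abductive step described above, in which the perception model's subconcept predictions are revised to agree with a logical hypothesis and the image-level label, can be illustrated with a minimal sketch. It rests on several simplifying assumptions that do not come from the paper. The subconcept names (`wheel`, `window`, `door`), the conjunctive rule `target(X) :- has(X, wheel), has(X, window)`, and the helper names `rule_holds`, `log_likelihood`, and `abduce` are all hypothetical. The rule is hard-coded here rather than induced by MIL, and the perception model is stood in for by fixed per-subconcept probabilities rather than a trained network. The sketch picks, by brute-force enumeration, the subconcept assignment that satisfies the rule and is most probable under the model. That assignment is the least inconsistent one, and it would serve as pseudo-labels for retraining.

```python
import itertools
import math
from typing import Dict, Optional, Sequence

# Hypothetical subconcept vocabulary (illustration only; in ASL the
# relevant subconcepts are tied to the MIL-induced theory).
SUBCONCEPTS = ("wheel", "window", "door")


def rule_holds(assignment: Dict[str, bool], required: Sequence[str]) -> bool:
    """Ground a toy conjunctive hypothesis on one image:
    target(X) :- has(X, s1), has(X, s2), ...
    It holds iff every required subconcept is present."""
    return all(assignment[s] for s in required)


def log_likelihood(assignment: Dict[str, bool], probs: Dict[str, float]) -> float:
    """Log-probability of a 0/1 subconcept assignment, treating the
    model's per-subconcept probabilities as independent."""
    eps = 1e-12
    total = 0.0
    for s, present in assignment.items():
        p = min(max(probs[s], eps), 1.0 - eps)  # clamp to avoid log(0)
        total += math.log(p if present else 1.0 - p)
    return total


def abduce(probs: Dict[str, float], target_label: bool,
           required: Sequence[str]) -> Optional[Dict[str, bool]]:
    """Return the assignment that (a) makes the rule's output equal the
    image-level label and (b) is most probable under the model, i.e.
    the revision least inconsistent with the model's predictions.
    Brute force over 2^k assignments; only viable for small k."""
    best: Optional[Dict[str, bool]] = None
    best_score = -math.inf
    for bits in itertools.product([False, True], repeat=len(SUBCONCEPTS)):
        assignment = dict(zip(SUBCONCEPTS, bits))
        if rule_holds(assignment, required) != target_label:
            continue  # inconsistent with the logical hypothesis and label
        score = log_likelihood(assignment, probs)
        if score > best_score:
            best, best_score = assignment, score
    return best


if __name__ == "__main__":
    # The model doubts "window" (0.3), but the image is a positive example,
    # so abduction flips "window" to present and keeps the likelier "door".
    print(abduce({"wheel": 0.9, "window": 0.3, "door": 0.6}, True,
                 ("wheel", "window")))
```

Running the example prints `{'wheel': True, 'window': True, 'door': True}`. Here abduction overrides the model's low confidence in `window` because the rule requires it for a positive image. In a full loop of this kind, the abduced assignments would replace the model's outputs as training labels, and the enumeration would typically give way to a more scalable search once the number of subconcepts grows.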

Acknowledgements

This work was supported in part by National Natural Science Foundation of China (Grant Nos. 62176139, 61872225, 61876098) and Major Basic Research Project of Natural Science Foundation of Shandong Province (Grant No. ZR2021ZD15).

About this article

Cite this article

Han, Z., Cai, LW., Dai, WZ. et al. Abductive subconcept learning. Sci. China Inf. Sci. 66, 122103 (2023). https://doi.org/10.1007/s11432-020-3569-0

DOI: https://doi.org/10.1007/s11432-020-3569-0