Abstract

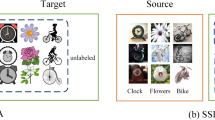

Unsupervised domain adaptation (UDA) aims to transfer knowledge from a label-rich source domain to an unlabeled target domain. Current approaches mainly focus on aligning the target domain’s data distribution with that of the source domain, but pay little attention to letting the target data guide which features should be captured. Because source data dominates feature extraction, the target embedding may lose vital discriminative features, which limits UDA in complex tasks. In this paper, we argue that auxiliary tasks that capture target-intrinsic patterns, which do not depend on source supervision, can further enhance UDA. Multiple auxiliary tasks understand the instance concept from different perspectives. Our findings show that exploiting the conceptual consistency of multiple auxiliary tasks, that is, characterizing the common part of these different understandings, helps reveal the target’s hidden ground truth. Building on this idea, we propose a novel method named multiple self-supervision conceptual consistency domain adaptation (MSCC). Experiments and analysis on benchmark datasets show the effectiveness of our idea and method.
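As an illustration only, the idea of a "common part" shared by several auxiliary tasks can be sketched as a consensus over per-task class predictions plus a penalty for disagreement. The sketch below is an assumption made for exposition, not the MSCC objective: the function name `consistency_loss`, the use of a simple mean as the consensus, and the KL-divergence penalty are all hypothetical choices.

```python
import numpy as np

def consistency_loss(view_probs, eps=1e-12):
    """Consensus prediction and mean KL divergence of each view from it.

    view_probs: array of shape (n_views, n_samples, n_classes); each row
    along the last axis is a class-probability vector produced under one
    auxiliary task (hypothetical setup, not taken from the paper).
    """
    view_probs = np.asarray(view_probs, dtype=float)
    # Consensus: the average prediction over all views for each sample.
    consensus = view_probs.mean(axis=0)  # (n_samples, n_classes)
    # KL(view || consensus), computed per view and per sample.
    kl = np.sum(
        view_probs * (np.log(view_probs + eps) - np.log(consensus + eps)),
        axis=-1,
    )
    return consensus, float(kl.mean())

# Two views that agree on one target sample, and two that disagree.
agree = np.array([[[0.9, 0.1]], [[0.9, 0.1]]])
disagree = np.array([[[0.9, 0.1]], [[0.1, 0.9]]])
_, loss_agree = consistency_loss(agree)
_, loss_disagree = consistency_loss(disagree)
```

Under these assumptions, views that agree incur essentially zero penalty, while views that disagree incur a positive one, so minimizing the penalty pushes the auxiliary tasks toward a shared reading of each target instance.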

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant Nos. 62076121, 61921006).

Cite this article

Sun, H., Li, M. Enhancing unsupervised domain adaptation by exploiting the conceptual consistency of multiple self-supervised tasks. Sci. China Inf. Sci. 66, 142101 (2023). https://doi.org/10.1007/s11432-021-3535-2

DOI: https://doi.org/10.1007/s11432-021-3535-2