Abstract

Purpose

The manual generation of training data for the semantic segmentation of medical images using deep neural networks is a time-consuming and error-prone task. In this paper, we investigate the effect of different levels of realism on the training of deep neural networks for semantic segmentation of robotic instruments. An interactive virtual-reality environment was developed to generate synthetic images for robot-aided endoscopic surgery. In contrast with earlier works, we use physically based rendering for increased realism.

Methods

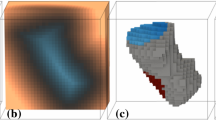

Using a virtual-reality simulator that replicates our robotic setup, we generated three synthetic image databases with increasing levels of realism: flat, basic, and realistic (the last using physically based rendering). Each database was used to train 20 instances of a U-Net-based deep-learning model for semantic segmentation. The networks, trained with only synthetic images, were evaluated on the segmentation of 160 endoscopic images of a phantom and compared using the Dwass–Steel–Critchlow–Fligner nonparametric test.
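To make the comparison step concrete, the following is a minimal sketch of the pairwise statistic that underlies the Dwass–Steel–Critchlow–Fligner procedure: a standardized Wilcoxon rank-sum statistic, scaled by the square root of two, computed for every pair of groups. It is not the authors' code. The function names (`midranks`, `dscf_statistic`, `pairwise_dscf`) and the toy scores are hypothetical, and the final step of the test, converting each statistic to a p-value through the studentized range distribution, is deliberately left out.

```python
import math
from collections import Counter
from itertools import combinations
from typing import Dict, List, Sequence, Tuple


def midranks(values: Sequence[float]) -> List[float]:
    """Return 1-based ranks; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value equals the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        average_rank = (start + end) / 2 + 1  # positions are 0-based
        for k in range(start, end + 1):
            ranks[order[k]] = average_rank
        start = end + 1
    return ranks


def dscf_statistic(x: Sequence[float], y: Sequence[float]) -> float:
    """Pairwise statistic: sqrt(2) * (W - E[W]) / sqrt(Var[W]).

    W is the rank sum of x in the pooled sample of x and y. The variance
    includes the usual tie correction. Undefined (division by zero) if
    every pooled value is identical.
    """
    n, m = len(x), len(y)
    total = n + m
    pooled = list(x) + list(y)
    ranks = midranks(pooled)
    rank_sum_x = sum(ranks[:n])
    expected = n * (total + 1) / 2
    tie_term = sum(t**3 - t for t in Counter(pooled).values()) / (total * (total - 1))
    variance = n * m / 12 * ((total + 1) - tie_term)
    return math.sqrt(2) * (rank_sum_x - expected) / math.sqrt(variance)


def pairwise_dscf(groups: Dict[str, Sequence[float]]) -> Dict[Tuple[str, str], float]:
    """Compute the statistic for every unordered pair of groups."""
    return {
        (a, b): dscf_statistic(groups[a], groups[b])
        for a, b in combinations(groups, 2)
    }


# Toy per-network scores, invented for illustration only (not the paper's data).
toy_scores = {
    "flat": [0.1, 0.2, 0.3],
    "basic": [0.4, 0.5, 0.6],
    "realistic": [0.7, 0.8, 0.9],
}
toy_stats = pairwise_dscf(toy_scores)
```

A negative value for a pair `(a, b)` means that group `a` tends to rank below group `b`. In a full analysis, each group would hold one score per trained network, and the absolute value of each statistic would be compared against the studentized range distribution for the number of groups.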

Results

Our results show that increasing the level of realism increased the mean intersection-over-union (mIoU) of the networks on endoscopic images of a phantom (\({p}<0.01\)). The median mIoU values were 0.235 for the flat dataset, 0.458 for the basic dataset, and 0.729 for the realistic dataset. All the networks trained with synthetic images outperformed naive classifiers. Moreover, an ablation study shows that physically based rendering yields a higher mIoU (\({p}<0.01\)) than texture mapping of the instrument (0.606), of the background (0.685), and of the background and instruments combined (0.672).
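As an illustration of the metric, the sketch below computes mIoU for a flattened label mask and compares a prediction against one possible naive classifier. It rests on several assumptions that this abstract does not specify: a two-class labeling (0 for background, 1 for instrument), a naive classifier that assigns every pixel to the background, and averaging only over classes that appear in the prediction or the ground truth. The function name `mean_iou` and the eight-pixel masks are hypothetical.

```python
from typing import List, Sequence


def mean_iou(pred: Sequence[int], truth: Sequence[int], num_classes: int) -> float:
    """Mean intersection-over-union across classes.

    Classes absent from both pred and truth are skipped so that they do
    not contribute an undefined 0/0 term (an assumption of this sketch).
    """
    if len(pred) != len(truth):
        raise ValueError("pred and truth must have the same number of pixels")
    ious: List[float] = []
    for c in range(num_classes):
        intersection = sum(1 for p, t in zip(pred, truth) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, truth) if p == c or t == c)
        if union > 0:
            ious.append(intersection / union)
    if not ious:
        raise ValueError("no class is present in pred or truth")
    return sum(ious) / len(ious)


# Hypothetical eight-pixel mask: 0 = background, 1 = instrument.
truth = [0, 0, 0, 0, 0, 0, 1, 1]
prediction = [0, 0, 0, 0, 0, 1, 1, 1]  # one background pixel mislabeled
naive = [0] * len(truth)               # assumed naive classifier: all background

prediction_miou = mean_iou(prediction, truth, num_classes=2)
naive_miou = mean_iou(naive, truth, num_classes=2)
```

In this toy case the all-background baseline scores an IoU of zero on the instrument class, so its mIoU is capped by the background term alone, which is why such baselines are a useful lower bound for a trained network.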

Conclusions

Using physically based rendering to generate synthetic images is an effective approach to improve the training of neural networks for the semantic segmentation of surgical instruments in endoscopic images. Our results show that this strategy can be an essential step toward the broad applicability of deep neural networks in semantic segmentation tasks and can help bridge the domain gap in machine learning.

Notes

Refer to https://github.com/mmmarinho/levels-of-realism-ijcars2020 for more information.

The initial version of the code was submitted along with the manuscript. For a more up-to-date version, refer to https://github.com/mmmarinho/levels-of-realism-ijcars2020.

Ethics declarations

Funding

This work was supported by JSPS KAKENHI Grant No. JP19K14935.

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Heredia Perez, S.A., Marques Marinho, M., Harada, K. et al. The effects of different levels of realism on the training of CNNs with only synthetic images for the semantic segmentation of robotic instruments in a head phantom. Int J CARS 15, 1257–1265 (2020). https://doi.org/10.1007/s11548-020-02185-0

DOI: https://doi.org/10.1007/s11548-020-02185-0