Abstract

Purpose

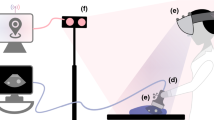

Microsoft HoloLens is a pair of augmented reality (AR) smart glasses that could improve the intraprocedural visualization of ultrasound-guided procedures. With the wearable HoloLens headset, an ultrasound image can be virtually rendered and registered with the ultrasound transducer and placed directly in the practitioner’s field of view.

Methods

A custom application, called HoloUS, was developed using the HoloLens and a portable ultrasound machine connected through a wireless network. A custom 3D-printed case with an AR pattern for the ultrasound transducer permitted ultrasound image tracking and registration. Voice controls on the HoloLens supported the scaling and movement of the ultrasound image as desired. The ultrasound images were streamed and displayed in real time. A user study was performed to assess the effectiveness of the HoloLens as an alternative display platform. Novices and experts were timed on tasks involving targeting simulated vessels using a needle in a custom phantom.
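To illustrate the registration idea in general terms, the sketch below shows one common way a tracked marker pose can be combined with a fixed marker-to-image calibration to place an image plane in world coordinates. It is not taken from the HoloUS implementation: the function names, the transform names (`world_T_marker`, `marker_T_image`), and the image dimensions are hypothetical and chosen only for illustration.

```python
import numpy as np


def make_transform(rotation, translation):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector."""
    T = np.eye(4)
    T[:3, :3] = np.asarray(rotation, dtype=float)
    T[:3, 3] = np.asarray(translation, dtype=float)
    return T


def image_corners_in_world(world_T_marker, marker_T_image, width_m, height_m):
    """Map the four corners of the image plane into world coordinates.

    world_T_marker: tracked pose of the AR pattern (assumed to come from a tracker).
    marker_T_image: fixed calibration from the pattern to the image plane
                    (assumed to be known from the geometry of the case).
    width_m, height_m: physical size of the image plane in metres (hypothetical).
    """
    corners = np.array([
        [0.0, 0.0, 0.0, 1.0],
        [width_m, 0.0, 0.0, 1.0],
        [width_m, height_m, 0.0, 1.0],
        [0.0, height_m, 0.0, 1.0],
    ])
    # Chain the transforms: image -> marker -> world.
    world_T_image = world_T_marker @ marker_T_image
    return (world_T_image @ corners.T).T[:, :3]


if __name__ == "__main__":
    # Hypothetical pose: marker 1 m along x; image plane offset 5 cm along y.
    pose = make_transform(np.eye(3), [1.0, 0.0, 0.0])
    calib = make_transform(np.eye(3), [0.0, 0.05, 0.0])
    print(image_corners_in_world(pose, calib, 0.04, 0.06))
```

Because the calibration transform is fixed once the case is attached, only the marker pose would need to be updated per frame in a scheme like this one.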

Results

Technical characterization of the HoloUS app was conducted using frame rate, tracking accuracy, and latency as performance metrics. The app ran at 25 frames/s, had an 80-ms latency, and could track the transducer with an average reprojection error of 0.0435 pixels. With AR visualization, the novices’ task times improved by 17%, whereas the experts’ performance decreased slightly, by 5%, which may reflect the experts’ training and experience bias.
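For readers unfamiliar with the tracking metric, an average reprojection error is commonly computed as the mean pixel distance between detected 2D feature points and the corresponding 3D points projected through the estimated camera pose. The abstract does not state how the value was obtained, so the following is only a minimal sketch under an ideal pinhole model without lens distortion; the intrinsics, pose, and point values are hypothetical.

```python
import numpy as np


def project_points(points_3d, K, R, t):
    """Project Nx3 points into pixel coordinates with a pinhole camera model."""
    cam = points_3d @ R.T + t          # transform points into the camera frame
    uv = cam @ K.T                     # apply the intrinsic matrix
    return uv[:, :2] / uv[:, 2:3]      # perspective divide


def mean_reprojection_error(points_3d, detected_2d, K, R, t):
    """Mean Euclidean distance (pixels) between projected and detected points."""
    projected = project_points(points_3d, K, R, t)
    return float(np.mean(np.linalg.norm(projected - detected_2d, axis=1)))


if __name__ == "__main__":
    # Hypothetical intrinsics and pose: camera 1 m in front of a 4 cm square marker.
    K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
    R = np.eye(3)
    t = np.array([0.0, 0.0, 1.0])
    marker = np.array([
        [-0.02, -0.02, 0.0],
        [0.02, -0.02, 0.0],
        [0.02, 0.02, 0.0],
        [-0.02, 0.02, 0.0],
    ])
    # Simulated detections: ideal projections shifted by a small pixel offset.
    detected = project_points(marker, K, R, t) + np.array([0.03, 0.04])
    print(mean_reprojection_error(marker, detected, K, R, t))
```

A sub-pixel value such as the one reported indicates that the estimated pose reproduces the detected pattern locations very closely in image space; it does not by itself quantify error in millimetres.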

Conclusion

The HoloUS application was found to enhance user experience and simplify hand–eye coordination. By eliminating the need to alternately observe the patient and the ultrasound images presented on a separate monitor, the proposed AR application has the potential to improve efficiency and effectiveness of ultrasound-guided procedures.

Acknowledgements

We wish to acknowledge the equipment loan and technical support from Terason that facilitated the described research and development. We also acknowledge the software enhancements made by Imran Hossain, a summer intern in our laboratory, and thank Xinyang Liu for volunteering to be pictured using the system. Finally, we acknowledge the assistance of Tyler Salvador in helping the team design the 3D-printed holder.

Funding

This project received no external funding.

Ethics declarations

Conflict of interest

Raj Shekhar is the founder of IGI Technologies, Inc. William Plishker is the co-founder and an employee of IGI Technologies. All other authors have no conflicts of interest or financial ties to disclose.

Ethics approval

No ethical approval was needed for this phantom study.

Informed consent

For this type of study, formal consent is not required.

About this article

Cite this article

Nguyen, T., Plishker, W., Matisoff, A. et al. HoloUS: Augmented reality visualization of live ultrasound images using HoloLens for ultrasound-guided procedures. Int J CARS 17, 385–391 (2022). https://doi.org/10.1007/s11548-021-02526-7

DOI: https://doi.org/10.1007/s11548-021-02526-7