Abstract

Purpose:

Mixed reality (MR) for image-guided surgery may enable unobtrusive solutions for precision surgery. To display preoperative treatment plans at the correct physical position, it is essential to spatially align them with the patient intra-operatively. Accurate alignment is safety-critical because it guides treatment, but it cannot always be achieved, for a variety of reasons. Effective visualization mechanisms that reveal misalignment are therefore crucial to prevent adverse surgical outcomes and ensure safe execution.

Methods:

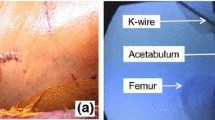

We test the effectiveness of three MR visualization paradigms in revealing spatial misalignment: wireframe, silhouette, and heatmap, the last of which encodes residual registration error. We conduct a user study with 12 participants using an anthropomorphic phantom that mimics total shoulder arthroplasty. Participants wearing a Microsoft HoloLens 2 are presented with 36 randomly ordered spatial (mis)alignments of a virtual glenoid model overlaid on the phantom, each rendered using one of the three methods. In every trial, users choose whether to accept or reject the spatial alignment. Upon completion, participants report the perceived difficulty of using each visualization paradigm.
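As a rough illustration of the trial protocol, the following sketch builds a randomly ordered schedule of 36 trials for one participant. The abstract does not state how the trials are split across paradigms or how the order was randomized; the even split of 12 trials per paradigm, the per-participant seed, and the names `PARADIGMS` and `build_schedule` are assumptions made only for this example.

```python
import random

# The three paradigms named in the abstract.
PARADIGMS = ("wireframe", "silhouette", "heatmap")
TRIALS_PER_PARTICIPANT = 36


def build_schedule(seed: int) -> list[tuple[str, int]]:
    """Return a shuffled list of (paradigm, alignment_index) trials.

    Assumption: trials are split evenly, i.e. 12 alignments per paradigm.
    The alignment index is a hypothetical placeholder for one
    (mis)alignment condition; the actual conditions are not given here.
    """
    per_paradigm = TRIALS_PER_PARTICIPANT // len(PARADIGMS)
    trials = [(p, i) for p in PARADIGMS for i in range(per_paradigm)]
    rng = random.Random(seed)  # seeded so a schedule can be reproduced
    rng.shuffle(trials)
    return trials


schedule = build_schedule(seed=0)
```

Seeding per participant is one way to keep each order reproducible for later analysis; it is a design choice of this sketch, not something reported in the study.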

Results:

Across all visualization paradigms, the ability of participants to reliably judge the accuracy of spatial alignment was moderate (58.33%). The three visualization paradigms showed comparable performance. However, the heatmap-based visualization resulted in significantly better detectability than random chance (\(p=0.007\)). Although heatmap enabled the most accurate decisions according to our measurements, wireframe was the most liked paradigm (50%), followed by silhouette (41.7%) and heatmap (8.3%).
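To make the comparison against random chance concrete, here is a minimal sketch of a one-sided exact binomial test. It is not necessarily the test the authors used, which the abstract does not name. It assumes that 58.33% is the proportion of correct accept/reject decisions over all 12 × 36 = 432 trials, and it treats trials as independent, which repeated measures within a participant are not. The per-paradigm counts behind \(p=0.007\) are not given in the abstract, so that value is not reproduced here.

```python
from math import comb


def binomial_p_greater(successes: int, trials: int, chance: float = 0.5) -> float:
    """One-sided exact binomial p-value: P(X >= successes) when p = chance."""
    return sum(
        comb(trials, k) * chance**k * (1 - chance) ** (trials - k)
        for k in range(successes, trials + 1)
    )


participants = 12
trials_per_participant = 36
total_trials = participants * trials_per_participant  # 432 decisions overall

# Assumption: 58.33% is the pooled share of correct decisions.
correct = round(0.5833 * total_trials)

p_value = binomial_p_greater(correct, total_trials)
print(f"{correct}/{total_trials} correct, one-sided p = {p_value:.4g}")
```

A mixed-effects model or a per-participant analysis would respect the repeated-measures structure better than this pooled test.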

Conclusion:

Our findings suggest that conventional mixed reality visualization paradigms are not sufficiently effective in enabling users to differentiate between accurate and inaccurate spatial alignment of virtual content to the environment.



Acknowledgements

This work was funded in part by a sponsored research agreement between Arthrex Inc. and the Johns Hopkins University.


Ethics declarations

Conflict of interest

The authors have no conflict of interest to declare.

Ethical approval

Studies were approved and performed in accordance with ethical standards.

Informed consent

Informed consent was obtained from all participants in the study.


About this article

Cite this article

Gu, W., Martin-Gomez, A., Cho, S.M. et al. The impact of visualization paradigms on the detectability of spatial misalignment in mixed reality surgical guidance. Int J CARS 17, 921–927 (2022). https://doi.org/10.1007/s11548-022-02602-6


DOI: https://doi.org/10.1007/s11548-022-02602-6