Abstract

As camera quality improves and deployments move to areas with limited bandwidth, communication bottlenecks can prevent intelligent transportation systems applications, such as video-based real-time pedestrian detection, from meeting their real-time constraints. Video compression reduces the bandwidth required to transmit video, but it also degrades video quality. As video quality decreases, the accuracy of the vision-based pedestrian detection model decreases correspondingly. Furthermore, adverse environmental conditions, such as rain and night-time darkness, limit how much compression can be applied, because they make it more difficult to maintain high pedestrian detection accuracy. The objective of this study is to develop a real-time error-bounded lossy compression (EBLC) strategy that dynamically changes the video compression level according to the environmental conditions in order to maintain high pedestrian detection accuracy. We conduct a case study to show the efficacy of our dynamic EBLC strategy for real-time vision-based pedestrian detection under adverse environmental conditions. Our strategy dynamically selects the lossy compression error tolerances that maintain high detection accuracy across a representative set of environmental conditions. Analyses reveal that, for adverse environmental conditions, our dynamic EBLC strategy increases pedestrian detection accuracy by up to 14% and reduces the communication bandwidth by up to 14× compared to the state of the practice. Moreover, we show that our dynamic EBLC strategy is independent of the pedestrian detection model and the environmental conditions, allowing other detection models and environmental conditions to be easily incorporated.
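The abstract does not spell out how the error tolerance is chosen, so the following is only a minimal sketch of one possible reading: for each environmental condition, pick the largest error tolerance whose previously measured detection accuracy still meets a target. The table `CALIBRATION`, the function `select_tolerance`, the condition names, and every number in it are hypothetical placeholders for illustration, not values or interfaces from the study.

```python
from typing import Dict, List, Tuple

# Hypothetical offline calibration table: for each environmental condition,
# a list of (error_tolerance, detection_accuracy) pairs.
# All values are made-up placeholders, NOT results from the study.
CALIBRATION: Dict[str, List[Tuple[int, float]]] = {
    "clear_day": [(10, 0.95), (20, 0.94), (30, 0.91), (40, 0.80)],
    "rain":      [(10, 0.93), (20, 0.90), (30, 0.82), (40, 0.65)],
    "night":     [(10, 0.91), (20, 0.85), (30, 0.70), (40, 0.50)],
}


def select_tolerance(condition: str, min_accuracy: float) -> int:
    """Return the largest tolerance whose calibrated accuracy meets the target.

    A larger tolerance is assumed to mean stronger compression and therefore
    lower bandwidth. If no tolerance meets the target, fall back to the
    smallest (least lossy) tolerance available for that condition.
    """
    levels = CALIBRATION[condition]
    acceptable = [tol for tol, acc in levels if acc >= min_accuracy]
    if acceptable:
        return max(acceptable)
    return min(tol for tol, _ in levels)


if __name__ == "__main__":
    for cond in CALIBRATION:
        print(cond, select_tolerance(cond, min_accuracy=0.90))
```

In this sketch the tolerance tightens as conditions worsen, which mirrors the trade-off described above: adverse conditions leave less room for compression before detection accuracy drops below the target.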

Availability of data and materials

Not applicable.

Code availability

Not applicable.

References

Pedestrian Safety. https://www.nhtsa.gov/road-safety/pedestrian-safety. Accessed 9 Sep 2020.

Sewalkar, P., Seitz, J.: Vehicle-to-pedestrian communication for vulnerable road users: survey, design considerations, and challenges. Sensors. 19(2), 358 (2019)

Gerónimo, D., López, A.M.: Vision-based pedestrian protection systems for intelligent vehicles. Springer, New York (2014)

Rosenbaum, D., Gurman, A., and Stein, G.: Forward collision warning trap and pedestrian advanced warning system. US Patent 9,251,708. Mobileye Vision Technologies Ltd (2016)

Rahman, M., Islam, M., Calhoun, J., Chowdhury, M.: Real-time pedestrian detection approach with an efficient data communication bandwidth strategy. Transp. Res. Rec. (2019). https://doi.org/10.1177/0361198119843255

Islam, M., Rahman, M., Chowdhury, M., Comert, G., Sood, E.D., Apon, A.: Vision-based personal safety messages (PSMs) generation for connected vehicles. IEEE Trans. Veh. Technol. (2020). https://doi.org/10.1109/TVT.2020.2982189,2020

Ohm, J.R., Sullivan, G.J., Schwarz, H., Tan, T.K., Wiegand, T.: Comparison of the Coding Efficiency of Video Coding Standards—Including High Efficiency Video Coding (HEVC). IEEE Trans. Circuits Syst. Video Technol. 22, 1669–1684 (2012)

Shizhong, L., Bovik, A.C.: Efficient DCT-domain blind measurement and reduction of blocking artifacts. IEEE Trans. Circuits Syst. Video Technol. (2002). https://doi.org/10.1109/TCSVT.2002.806819

Wang, Z., Bovik, A.C.: A universal image quality index. IEEE Signal Process. Lett. (2002). https://doi.org/10.1109/97.995823

De Cock, J., Li, Z., Manohara, M., Aaron, A.: Complexity-based consistent-quality encoding in the cloud. 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, (2016). https://doi.org/10.1109/ICIP.2016.7532605

Ziv, J., Lempel, A.: A universal algorithm for sequential data compression. IEEE Trans. Inf. Theory 23(3), 337–343 (1977). https://doi.org/10.1109/TIT.1977.1055714

Zemliachenko, A., Lukin, V., Ponomarenko, N., Egiazarian, K., Astola, J.: Still image/video frame lossy compression providing a desired visual quality. Multidimens. Syst. Signal Process. (2016). https://doi.org/10.1007/s11045-015-0333-8

Sayood, K.: Introduction to data compression. Morgan Kaufmann (2017). ISBN 978-0128094747.

Di, S., Cappello, F.: Fast error-bounded lossy HPC data compression with SZ. 2016 IEEE International Parallel and Distributed Processing Symposium (IPDPS), Chicago, IL, (2016). https://doi.org/10.1109/IPDPS.2016

ITU-T and ISO/IEC JTC 1. Advanced video coding for generic audiovisual services. ITU-T Rec. H.264 and ISO/IEC 14496-10 (MPEG-4), (2017)

ITU-T and ISO/IEC JTC 1. High efficiency video coding. ITU-T Rec. H.265 and ISO/IEC 23008–2, (2018)

Ahmed, N., Natarajan, T., Rao, K.R.: Discrete cosine transform. IEEE Trans. Comput. (1974). https://doi.org/10.1109/T-C.1974.223784

Galteri, L., Bertini, M., Seidenari, L., Del Bimbo, A.: Video Compression for Object Detection Algorithms. 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, (2018). https://doi.org/10.1109/ICPR.2018.8546064.

Kong, L., Dai, R.: Object-detection-based video compression for wireless surveillance systems. IEEE Multimed. (2017). https://doi.org/10.1109/MMUL.2017.29

Girshick, R., Donahue, J., Darrell, T., Malik, J.: Rich feature hierarchies for accurate object detection and semantic segmentation. In: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, (2014). https://doi.org/10.1109/CVPR.2014.81

Girshick, R.: Fast R-CNN. In: Proceedings of the IEEE international conference on computer vision, (2015). https://doi.org/10.1109/ICCV.2015.169

Hanna, E., Cardillo, M.: Faster R-CNN: towards real-time object detection with region proposal networks. Biol. Cons. (2013). https://doi.org/10.1016/j.biocon.2012.08.014

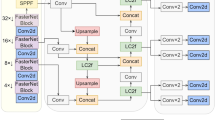

Liu, W., Anguelov, D., Erhan, D., Szegedy, C., Reed, S., Fu, C. Y., Berg, A. C.: SSD: single shot multibox detector. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), (2016). https://doi.org/10.1007/978-3-319-46448-0_2

Redmon, J., Farhadi, A., Ap, C.: YOLOv3: An Incremental Improvement. arXiv preprint arXiv:1804.02767, (2018).

Rawat, W., Wang, Z.: Deep convolutional neural networks for image classification: a comprehensive review. Neural Comput. 29(9), 2352–2449 (2017)

Bengio, Y.: Learning deep architectures for AI. Found Trends Mach Learn. 2(1), 1–127 (2009)

Husemann, R., Susin, A.A., Roesler, V.: Optimized solution to accelerate in hardware an intra H. 264/SVC video encoder. IEEE Micro 38(6), 8–17 (2018)

FFmepg Developers. ffmpeg tool (Version N-82324-g872b358) (2018).http://ffmpeg.org

Automold--Road-Augmentation-Library. (2019) https://mail.google.com/mail/u/0/#inbox/FMfcgxwDqThrFzXlSbjbfZjckWqNjLbZ

ARC-IT. Service Packages (2019). https://local.iteris.com/arc-it/html/servicepackages/servicepackages-areaspsort.html

Rothe, R., Guillaumin, M., Van Gool, L.: Non-maximum suppression for object detection by passing messages between windows. In: Asian Conference on Computer Vision. Springer, Cham, pp. 290–306 (2014)

Acknowledgements

This material is based on a study partially supported by the Center for Connected Multimodal Mobility (C2M2) (USDOT Tier 1 University Transportation Center) Grant headquartered at Clemson University, Clemson, South Carolina, USA. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the Center for Connected Multimodal Mobility (C2M2), and the U.S. Government assumes no liability for the contents or use thereof. This material is also based upon work supported by the National Science Foundation under Grant No. SHF-1910197.

Funding

This material is based on a study partially supported by the Center for Connected Multimodal Mobility (C2M2) (USDOT Tier 1 University Transportation Center) Grant headquartered at Clemson University, Clemson, South Carolina, USA. This material is also based upon work supported by the National Science Foundation under Grant No. SHF-1910197.

Author information

Contributions

The authors confirm their contributions to the paper as follows. MR: conceptualization, methodology, data curation, formal analysis, and writing (original draft). MI: data curation, formal analysis, and writing (original draft). CH: formal analysis and writing (original draft). JC: conceptualization, funding acquisition, and writing (review and editing). MC: conceptualization, methodology, funding acquisition, and writing (review and editing).

Ethics declarations

Conflicts of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Rahman, M., Islam, M., Holt, C. et al. Dynamic error-bounded lossy compression to reduce the bandwidth requirement for real-time vision-based pedestrian safety applications. J Real-Time Image Proc 19, 117–131 (2022). https://doi.org/10.1007/s11554-021-01165-0

DOI: https://doi.org/10.1007/s11554-021-01165-0