Abstract

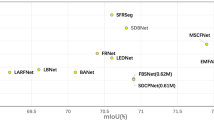

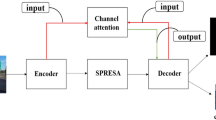

The task of traffic line detection is a fundamental yet challenging problem in computer vision. Previous traffic line segmentation models tend either to increase network depth to strengthen their representation ability and achieve high accuracy, or to reduce the number of layers or parameters to achieve real-time efficiency; how to balance high accuracy against low inference time remains challenging. In this paper, we propose a reinforced attention method (RAM) that increases the saliency of traffic lines during feature abstraction. Optimizing a model with RAM improves traffic line detection accuracy without increasing inference time. In RAM, we define the line-to-context contrast weight (LCCW) to represent the saliency of traffic lines in a feature map; it is calculated as the ratio of the traffic line energy to the total feature energy. After computing the LCCW, we add a RAM loss term to the total loss used in the backward pass and then retrain the model to obtain new parameter weights. To validate RAM on real-time traffic line detection models, we applied it to seven popular real-time models and evaluated them on two popular traffic line detection benchmarks, CULane and TuSimple. Experimental results show that RAM increases line detection accuracy by 1–2% on both benchmarks, and that ERFNet and CGNet nearly reach state-of-the-art performance after RAM optimization. The results also show that RAM can be applied to almost any encoder–decoder-based model, and that the optimized models are more robust to occlusion and extreme lighting conditions.
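The LCCW and the extra loss term described above can be illustrated with a small sketch. This is not the authors' implementation. It assumes that "energy" means the sum of squared activations over all channels, that the traffic-line region is given by a binary ground-truth mask already resized to the feature map's spatial size, and that the RAM loss takes the hypothetical form `1 - LCCW` weighted by a hypothetical coefficient `ram_weight`. The function names `lccw`, `ram_loss` and `total_loss` are illustrative only.

```python
from typing import List

# A feature map is represented as channels x rows x columns (nested lists).
FeatureMap = List[List[List[float]]]
Mask = List[List[int]]


def lccw(feature_map: FeatureMap, line_mask: Mask) -> float:
    """Line-to-context contrast weight: share of feature energy on line pixels.

    Assumption: energy = sum of squared activations over all channels.
    """
    line_energy = 0.0
    total_energy = 0.0
    for channel in feature_map:
        for row, mask_row in zip(channel, line_mask):
            for value, is_line in zip(row, mask_row):
                energy = value * value
                total_energy += energy
                if is_line:
                    line_energy += energy
    if total_energy == 0.0:
        return 0.0  # avoid division by zero on an all-zero feature map
    return line_energy / total_energy


def ram_loss(feature_map: FeatureMap, line_mask: Mask) -> float:
    # Hypothetical form: a low LCCW (little energy on lines) gives a high loss.
    return 1.0 - lccw(feature_map, line_mask)


def total_loss(seg_loss: float, feature_map: FeatureMap, line_mask: Mask,
               ram_weight: float = 0.1) -> float:
    # ram_weight is a hypothetical balancing coefficient, not a value from the paper.
    return seg_loss + ram_weight * ram_loss(feature_map, line_mask)


if __name__ == "__main__":
    fm = [[[1.0, 0.0], [0.0, 1.0]]]  # one 2x2 channel
    mask = [[1, 0], [0, 0]]           # only the top-left pixel is a line
    print(lccw(fm, mask))             # 0.5: half of the energy lies on the line pixel
```

In actual training, the same arithmetic would be written with tensors in a framework that supports automatic differentiation, so that the extra term can be back-propagated into the encoder weights. The pure-Python version above only shows how the ratio and the combined loss are computed.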

Acknowledgements

This research was supported by Zhejiang Provincial Natural Science Foundation of China under Grant no. LGG21F030008.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Liu, Y., Xu, P., Zhu, L. et al. Reinforced attention method for real-time traffic line detection. J Real-Time Image Proc 19, 957–968 (2022). https://doi.org/10.1007/s11554-022-01236-w
